Arvid Kappas

A Robot by Any Other Frame: Framing and Behaviour Influence Mind Perception in Virtual but not Real-World Environments

Apr 16, 2020

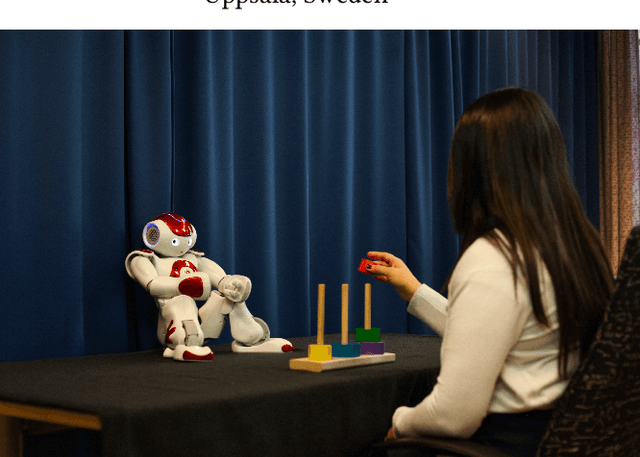

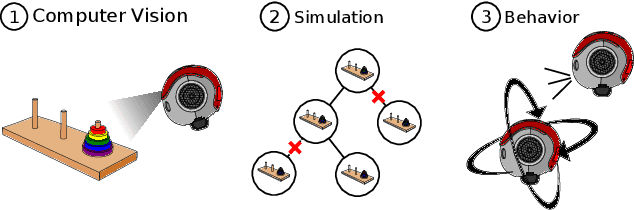

Abstract: Mind perception in robots has been an understudied construct in human-robot interaction (HRI) compared to similar concepts such as anthropomorphism and the intentional stance. In a series of three experiments, we identify two factors that could potentially influence mind perception and moral concern in robots: how the robot is introduced (framing), and how the robot acts (social behaviour). In the first two online experiments, we show that both framing and behaviour independently influence participants' mind perception. However, when we combined both variables in the following real-world experiment, these effects failed to replicate. We hence identify a third factor post-hoc: the online versus real-world nature of the interactions. After analysing potential confounds, we tentatively suggest that mind perception is harder to influence in real-world experiments, as manipulations are harder to isolate compared to virtual experiments, which only provide a slice of the interaction.

Estimating Gradual-Emotional Behavior in One-Minute Videos with ESNs

May 02, 2018

Abstract: In this paper, we describe our approach for the OMG-Emotion Challenge 2018. The goal is to produce utterance-level valence and arousal estimations for videos of approximately 1 minute in length. We tackle this problem by first extracting facial expression features from the videos as time series data, and then using Recurrent Neural Networks of the Echo State Network type to model the correspondence between the time series data and valence-arousal values. Experimentally, we show that the proposed approach surpasses the baseline methods provided by the organizers.
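As a rough illustration of the pipeline the abstract outlines (a feature time series fed through an Echo State Network, with a readout producing valence and arousal), a minimal NumPy sketch might look like the following. This is not the authors' implementation: the abstract does not give the reservoir size, leak rate, pooling strategy, or readout, so the leaky-integrator update, the mean pooling over time, the ridge-regression readout, and all function and parameter names here are assumptions chosen for illustration.

```python
import numpy as np


def make_reservoir(n_inputs, n_reservoir, spectral_radius=0.9, seed=0):
    """Create fixed random input and recurrent weights (never trained)."""
    rng = np.random.default_rng(seed)
    w_in = rng.uniform(-0.5, 0.5, size=(n_reservoir, n_inputs))
    w = rng.uniform(-0.5, 0.5, size=(n_reservoir, n_reservoir))
    # Rescale so the largest absolute eigenvalue equals spectral_radius.
    w *= spectral_radius / np.max(np.abs(np.linalg.eigvals(w)))
    return w_in, w


def summarize_utterance(features, w_in, w, leak=0.3):
    """Drive the reservoir with one (T, n_inputs) series; return the mean state.

    Mean pooling over time is an assumption made here to obtain one
    fixed-size vector per utterance.
    """
    x = np.zeros(w.shape[0])
    states = []
    for u in features:
        # Leaky-integrator reservoir update.
        x = (1 - leak) * x + leak * np.tanh(w_in @ u + w @ x)
        states.append(x)
    return np.mean(states, axis=0)


def _with_bias(states):
    """Append a constant column so the readout can learn an offset."""
    return np.hstack([states, np.ones((states.shape[0], 1))])


def fit_readout(states, targets, ridge=1e-2):
    """Ridge regression from pooled states to (valence, arousal) targets."""
    s = _with_bias(states)
    return np.linalg.solve(s.T @ s + ridge * np.eye(s.shape[1]), s.T @ targets)


def predict(states, w_out):
    """Apply the trained linear readout to pooled reservoir states."""
    return _with_bias(states) @ w_out
```

In use, each utterance's feature series would be passed through `summarize_utterance`, the resulting vectors stacked into one matrix, and `fit_readout` called with the annotated valence-arousal pairs. Only the readout is trained, which is the property that distinguishes Echo State Networks from recurrent networks trained end to end.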
