Arpit Patil

AI coach for badminton

Mar 13, 2024

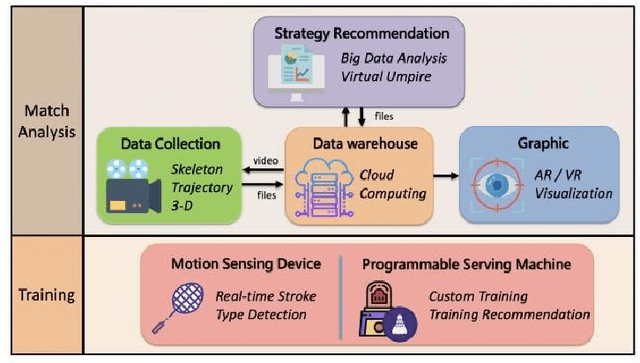

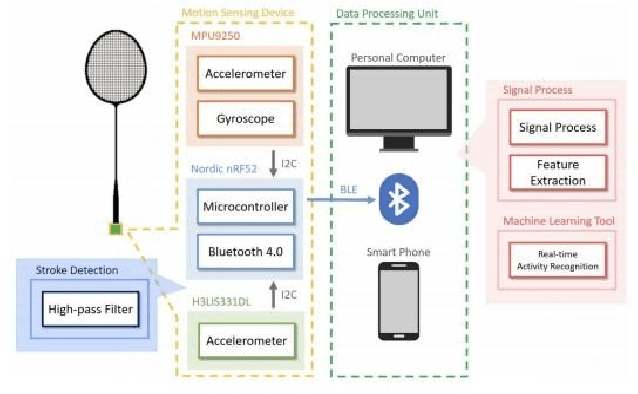

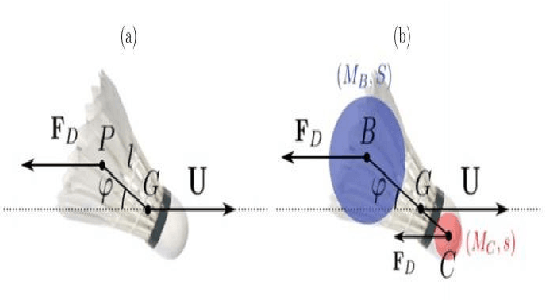

Abstract: In the competitive realm of sports, optimal performance necessitates rigorous management of nutrition and physical conditioning. Specifically, in badminton, the agility and precision required make it an ideal candidate for motion analysis through video analytics. This study leverages advanced neural network methodologies to dissect video footage of badminton matches, aiming to extract detailed insights into player kinetics and biomechanics. Through the analysis of stroke mechanics, including hand-hip coordination, leg positioning, and the execution angles of strokes, the research aims to derive predictive models that can suggest improvements in stance, technique, and muscle orientation. These recommendations are designed to mitigate erroneous techniques, reduce the risk of joint fatigue, and enhance overall performance. Utilizing a vast array of data available online, this research correlates players' physical attributes with their in-game movements to identify muscle activation patterns during play. The goal is to offer personalized training and nutrition strategies that align with the specific biomechanical demands of badminton, thereby facilitating targeted performance enhancements.

* 7 pages, 11 figures. https://ieeexplore.ieee.org/document/9825164

Exploring the Numerical Reasoning Capabilities of Language Models: A Comprehensive Analysis on Tabular Data

Nov 03, 2023

Abstract: Numbers are crucial for various real-world domains such as finance, economics, and science. Thus, understanding and reasoning with numbers are essential skills for language models to solve different tasks. While different numerical benchmarks have been introduced in recent years, they are mostly limited to specific numerical aspects. In this paper, we propose a hierarchical taxonomy for numerical reasoning skills with more than ten reasoning types across four levels: representation, number sense, manipulation, and complex reasoning. We conduct a comprehensive evaluation of state-of-the-art models to identify reasoning challenges specific to them. To this end, we develop a diverse set of numerical probes employing a semi-automated approach. We focus on the tabular Natural Language Inference (TNLI) task as a case study and measure models' performance shifts. Our results show that no model consistently excels across all numerical reasoning types. Among the probed models, FlanT5 (few-/zero-shot) and GPT-3.5 (few-shot) demonstrate strong overall numerical reasoning skills compared to other models. Label-flipping probes indicate that models often exploit dataset artifacts to predict the correct labels.
