Arnavi Chheda-Kothary

OLMoTrace: Tracing Language Model Outputs Back to Trillions of Training Tokens

Apr 09, 2025

Abstract: We present OLMoTrace, the first system that traces the outputs of language models back to their full, multi-trillion-token training data in real time. OLMoTrace finds and shows verbatim matches between segments of language model output and documents in the training text corpora. Powered by an extended version of infini-gram (Liu et al., 2024), our system returns tracing results within a few seconds. OLMoTrace can help users understand the behavior of language models through the lens of their training data. We showcase how it can be used to explore fact checking, hallucination, and the creativity of language models. OLMoTrace is publicly available and fully open-source.

Engaging with Children's Artwork in Mixed Visual-Ability Families

Jul 26, 2024

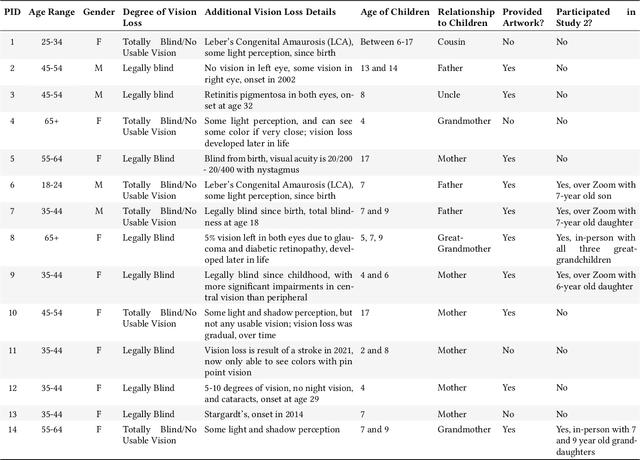

Abstract: We present two studies exploring how blind or low-vision (BLV) family members engage with their sighted children's artwork, strategies to support understanding and interpretation, and the potential role of technology, such as AI, therein. Our first study involved 14 BLV individuals, and the second included five groups of BLV individuals with their children. Through semi-structured interviews with AI descriptions of children's artwork and multi-sensory design probes, we found that BLV family members value artwork engagement as a bonding opportunity, preferring the child's storytelling and interpretation over other nonvisual representations. Additionally, despite some inaccuracies, BLV family members felt that AI-generated descriptions could facilitate dialogue with their children and aid self-guided art discovery. We close with specific design considerations for supporting artwork engagement in mixed visual-ability families, including enabling artwork access through various methods, supporting children's corrections of AI output, and distinctions in context vs. content and interpretation vs. description of children's artwork.
