Aranyak Acharyya

Optimal control of the future via prospective learning with control

Nov 19, 2025

Abstract:Optimal control of the future is the next frontier for AI. Current approaches to this problem are typically rooted in reinforcement learning (RL). While powerful, this learning framework is mathematically distinct from supervised learning, which has been the main workhorse for the recent achievements in AI. Moreover, RL typically operates in a stationary environment with episodic resets, limiting its utility in more realistic settings. Here, we extend supervised learning to address learning to control in non-stationary, reset-free environments. Using this framework, called "Prospective Learning with Control (PL+C)", we prove that under certain fairly general assumptions, empirical risk minimization (ERM) asymptotically achieves the Bayes optimal policy. We then consider a specific instance of prospective learning with control: foraging, a canonical task for any mobile agent, be it natural or artificial. We illustrate that modern RL algorithms fail to learn in these non-stationary, reset-free environments, and even with modifications, they are orders of magnitude less efficient than our prospective foraging agents.

Concentration bounds on response-based vector embeddings of black-box generative models

Nov 11, 2025

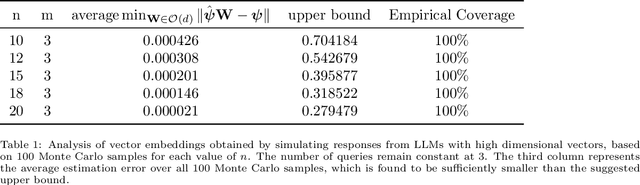

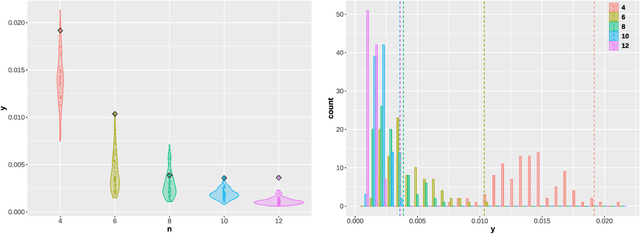

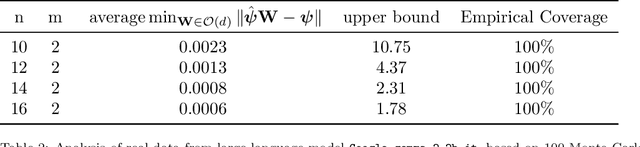

Abstract:Generative models, such as large language models or text-to-image diffusion models, can generate relevant responses to user-given queries. Response-based vector embeddings of generative models facilitate statistical analysis and inference on a given collection of black-box generative models. The Data Kernel Perspective Space embedding is one particular method of obtaining response-based vector embeddings for a given set of generative models, already discussed in the literature. In this paper, under appropriate regularity conditions, we establish high probability concentration bounds on the sample vector embeddings for a given set of generative models, obtained through the method of Data Kernel Perspective Space embedding. Our results tell us the required number of sample responses needed in order to approximate the population-level vector embeddings with a desired level of accuracy. The algebraic tools used to establish our results can be used further for establishing concentration bounds on Classical Multidimensional Scaling embeddings in general, when the dissimilarities are observed with noise.

Embedding-based statistical inference on generative models

Oct 01, 2024

Abstract:The recent cohort of publicly available generative models can produce human-expert-level content across a variety of topics and domains. Given a model in this cohort as a base model, methods such as parameter-efficient fine-tuning, in-context learning, and constrained decoding have further increased generative capabilities and improved both computational and data efficiency. Entire collections of derivative models have emerged as a byproduct of these methods, and each of these models has a set of associated covariates, such as a score on a benchmark or an indicator of whether the model has (or had) access to sensitive information, that may or may not be available to the user. For some model-level covariates, it is possible to use "similar" models to predict an unknown covariate. In this paper we extend recent results related to embedding-based representations of generative models -- the data kernel perspective space -- to classical statistical inference settings. We demonstrate that using the perspective space as the basis of a notion of "similar" is effective for multiple model-level inference tasks.

Consistent estimation of generative model representations in the data kernel perspective space

Sep 25, 2024

Abstract:Generative models, such as large language models and text-to-image diffusion models, produce relevant information when presented with a query. Different models may produce different information when presented with the same query. As the landscape of generative models evolves, it is important to develop techniques to study and analyze differences in model behaviour. In this paper we present novel theoretical results for embedding-based representations of generative models in the context of a set of queries. We establish sufficient conditions for the consistent estimation of the model embeddings in situations where the query set and the number of models grow.

Semisupervised regression in latent structure networks on unknown manifolds

May 04, 2023

Abstract:Random graphs are increasingly becoming objects of interest for modeling networks in a wide range of applications. Latent position random graph models posit that each node is associated with a latent position vector, and that these vectors follow some geometric structure in the latent space. In this paper, we consider random dot product graphs, in which an edge is formed between two nodes with probability given by the inner product of their respective latent positions. We assume that the latent position vectors lie on an unknown one-dimensional curve and are coupled with a response covariate via a regression model. Using the geometry of the underlying latent position vectors, we propose a manifold learning and graph embedding technique to predict the response variable on out-of-sample nodes, and we establish convergence guarantees for these responses. Our theoretical results are supported by simulations and an application to Drosophila brain data.
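
As a rough illustration of the random dot product graph model described in this abstract, the sketch below samples a graph whose edge probabilities are the inner products of latent positions lying on a one-dimensional curve, then recovers a spectral embedding of the nodes. It is not the paper's method: the curve `0.7 * (t, t^2)`, the graph size, and the helper names `sample_rdpg` and `adjacency_spectral_embedding` are all assumptions chosen for illustration, and the manifold learning and regression steps are omitted.

```python
import numpy as np


def sample_rdpg(latent, rng):
    """Sample a symmetric, hollow adjacency matrix from latent positions."""
    probs = latent @ latent.T  # edge probability = inner product of positions
    n = probs.shape[0]
    # Draw independent edges above the diagonal, then mirror them.
    upper = np.triu(rng.random((n, n)) < probs, k=1)
    return (upper | upper.T).astype(int)


def adjacency_spectral_embedding(adj, dim):
    """Embed nodes using the top `dim` eigenpairs of the adjacency matrix."""
    vals, vecs = np.linalg.eigh(adj)
    top = np.argsort(vals)[::-1][:dim]
    return vecs[:, top] * np.sqrt(np.abs(vals[top]))


rng = np.random.default_rng(0)
t = rng.uniform(0.0, 1.0, size=200)

# Hypothetical one-dimensional curve in R^2; the 0.7 scaling keeps every
# inner product inside [0, 1] so it is a valid edge probability.
latent = 0.7 * np.column_stack([t, t**2])

adj = sample_rdpg(latent, rng)
embedding = adjacency_spectral_embedding(adj, dim=2)
```

The rows of `embedding` estimate the latent positions only up to an orthogonal transformation, so any downstream step would need to depend on the geometry of the points rather than on their raw coordinates.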
