Aram M. Ahmed

Video Forgery Detection for Surveillance Cameras: A Review

May 04, 2025

Abstract:The widespread availability of video recording through smartphones and digital devices has made video-based evidence more accessible than ever. Surveillance footage plays a crucial role in security, law enforcement, and judicial processes. However, with the rise of advanced video editing tools, tampering with digital recordings has become increasingly easy, raising concerns about their authenticity. Ensuring the integrity of surveillance videos is essential, as manipulated footage can lead to misinformation and undermine judicial decisions. This paper provides a comprehensive review of existing forensic techniques used to detect video forgery, focusing on their effectiveness in verifying the authenticity of surveillance recordings. Various methods, including compression-based analysis, frame duplication detection, and machine learning-based approaches, are explored. The findings highlight the growing necessity for more robust forensic techniques to counteract evolving forgery methods. Strengthening video forensic capabilities will ensure that surveillance recordings remain credible and admissible as legal evidence.

Multi-objective Cat Swarm Optimization Algorithm based on a Grid System

Feb 22, 2025

Abstract:This paper presents a multi-objective version of the Cat Swarm Optimization Algorithm called the Grid-based Multi-objective Cat Swarm Optimization Algorithm (GMOCSO). Convergence and diversity preservation are the two main goals pursued by modern multi-objective algorithms to yield robust results. To achieve these goals, we first replace the roulette wheel method of the original CSO algorithm with a greedy method. Then, two key concepts from the Pareto Archived Evolution Strategy (PAES) algorithm are adopted: the grid system and the double archive strategy. Several test functions and a real-world scenario, the pressure vessel design problem, are used to evaluate the proposed algorithm's performance. In the experiment, the proposed algorithm is compared with other well-known algorithms using different metrics such as Reversed Generational Distance, Spacing metric, and Spread metric. The optimization results show the robustness of the proposed algorithm, and the results are further confirmed using statistical methods and graphs. Finally, conclusions and future directions are presented.

From A-to-Z Review of Clustering Validation Indices

Jul 18, 2024

Abstract:Data clustering involves identifying latent similarities within a dataset and organizing them into clusters or groups. The outcomes of various clustering algorithms differ as they are susceptible to the intrinsic characteristics of the original dataset, including noise and dimensionality. The effectiveness of such clustering procedures directly impacts the homogeneity of clusters, underscoring the significance of evaluating algorithmic outcomes. Consequently, the assessment of clustering quality is a significant and complex endeavor. A pivotal aspect affecting clustering validation is the cluster validity metric, which aids in determining the optimal number of clusters. The main goal of this study is to review and explain the mathematical operation of many, though not all, internal and external cluster validity indices, to categorize these indices, and to brainstorm suggestions for future advancement of clustering validation research. In addition, we review and evaluate the performance of internal and external clustering validation indices on the most common clustering algorithms, such as the evolutionary clustering algorithm star (ECA*). Finally, we suggest a classification framework for examining the functionality of both internal and external clustering validation measures regarding their ideal values, user-friendliness, responsiveness to input data, and appropriateness across various fields. This classification aids researchers in selecting the appropriate clustering validation measure to suit their specific requirements.

Evaluating e-Government Services in Kurdistan Institution for Strategic Studies and Scientific Research Using the EGOVSAT Model

May 06, 2021

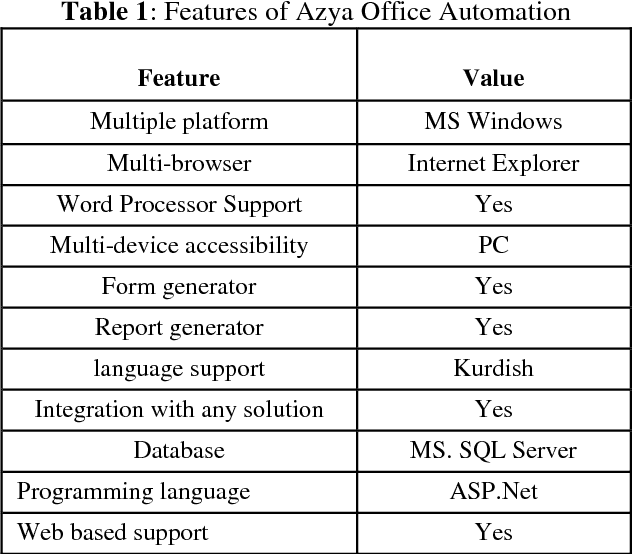

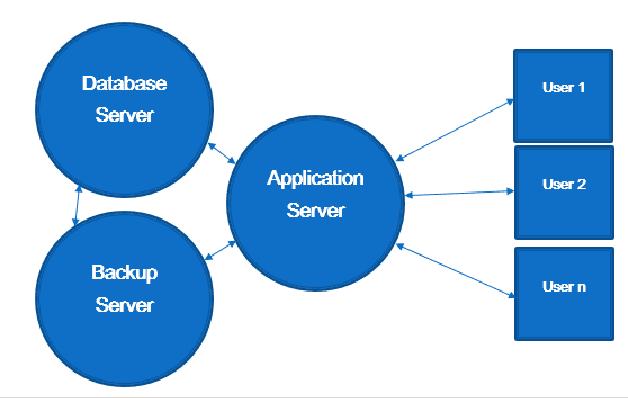

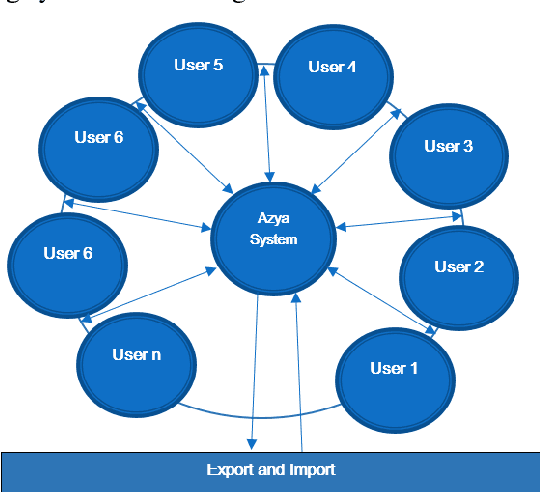

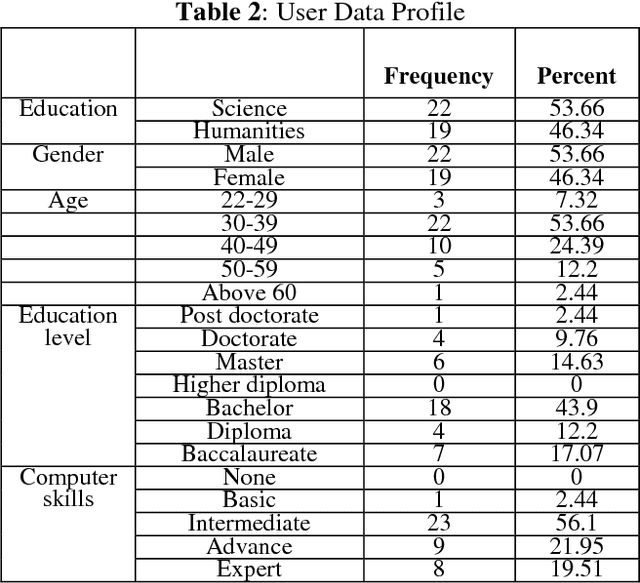

Abstract:Office automation is an initiative used to digitally deliver services to citizens and to the private and public sectors. It is used to digitally collect, store, create, and manipulate office information as needed to accomplish basic tasks. Azya Office Automation has been implemented as a pilot project in Kurdistan Institution for Strategic Studies and Scientific Research (KISSR) since 2013. The efficiency of governance in Kurdistan Institution for Strategic Studies and Scientific Research has improved thanks to its implementation. The aim of this research paper is to evaluate user satisfaction with this software and identify its significant predictors using the EGOVSAT Model. User satisfaction in this model encompasses five main parts: utility, reliability, efficiency, customization, and flexibility. For that purpose, a detailed survey is conducted to measure the level of user satisfaction. A total of sixteen questions were distributed among forty-one users of the software in KISSR. To evaluate the software, three measurements were used: a reliability test, regression analysis, and correlation analysis. The results indicate that the software is successful to a decent extent based on user satisfaction feedback obtained using the EGOVSAT Model.

Cat Swarm Optimization Algorithm -- A Survey and Performance Evaluation

Jan 10, 2020

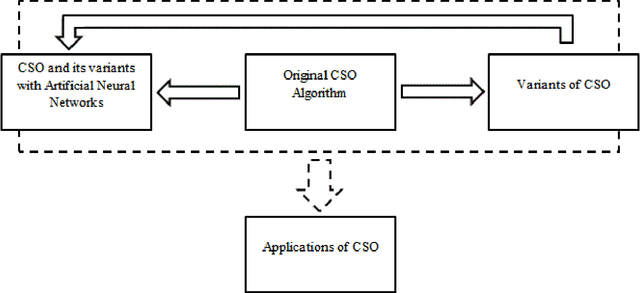

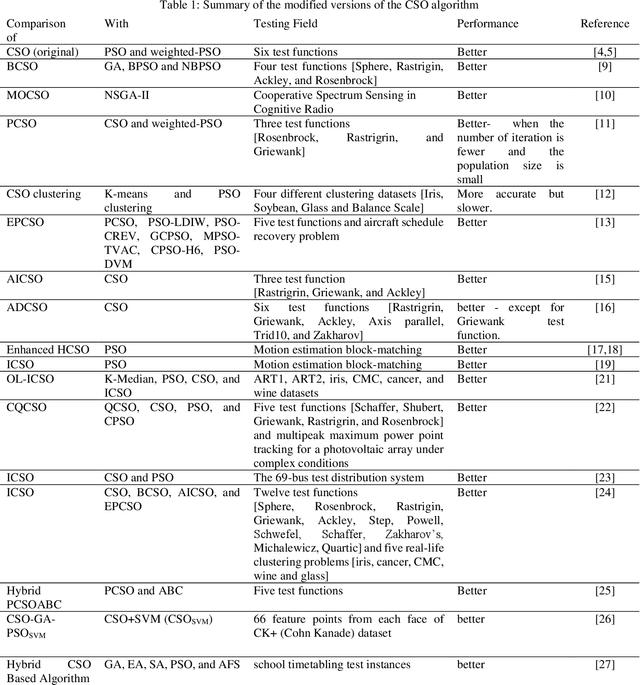

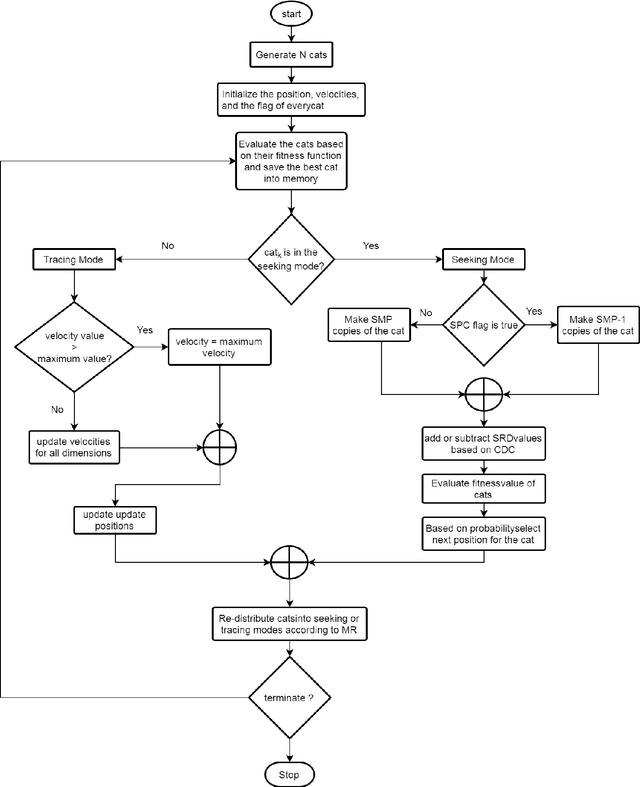

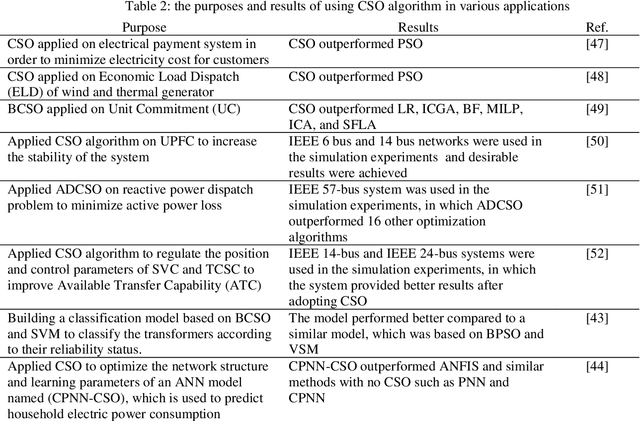

Abstract:This paper presents an in-depth survey and performance evaluation of the Cat Swarm Optimization (CSO) Algorithm. CSO is a robust and powerful metaheuristic swarm-based optimization approach that has received very positive feedback since its emergence. It has been applied to many optimization problems, and many variants of it have been introduced. However, the literature lacks a detailed survey or a performance evaluation in this regard. Therefore, this paper is an attempt to review all these works, including its developments and applications, and group them accordingly. In addition, CSO is tested on 23 classical benchmark functions and 10 modern benchmark functions (CEC 2019). The results are then compared against three novel and powerful optimization algorithms, namely the Dragonfly algorithm (DA), the Butterfly optimization algorithm (BOA), and the Fitness Dependent Optimizer (FDO). These algorithms are then ranked according to the Friedman test, and the results show that CSO ranks first overall. Finally, statistical approaches are employed to further confirm that the CSO algorithm outperforms the others.

* 31 pages
