Aqeel Labash

Emergence of Adaptive Circadian Rhythms in Deep Reinforcement Learning

Jul 22, 2023

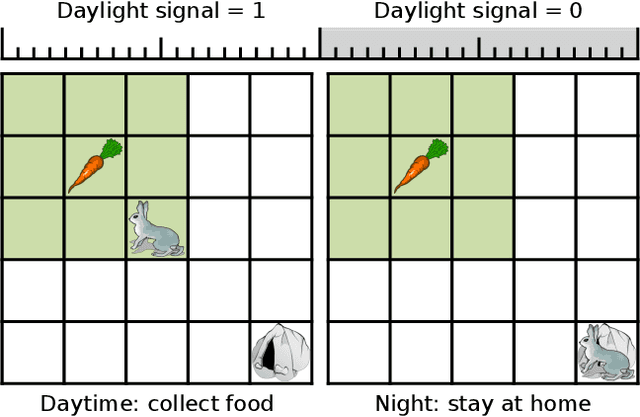

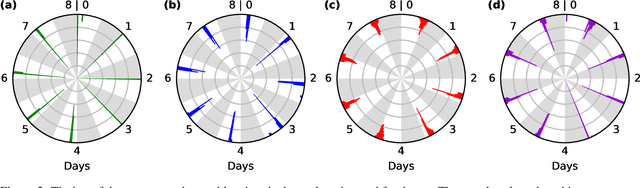

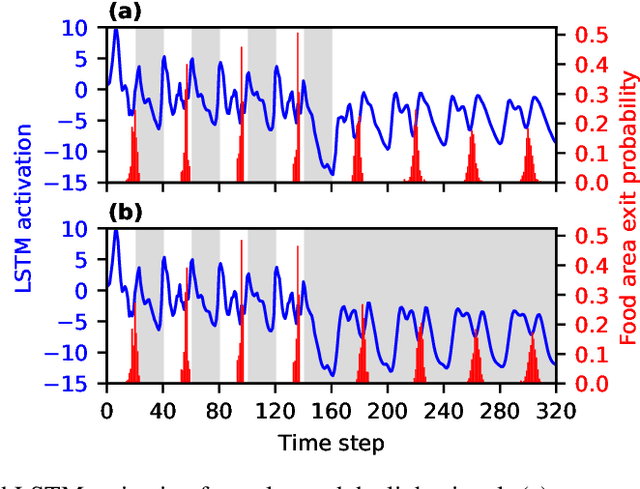

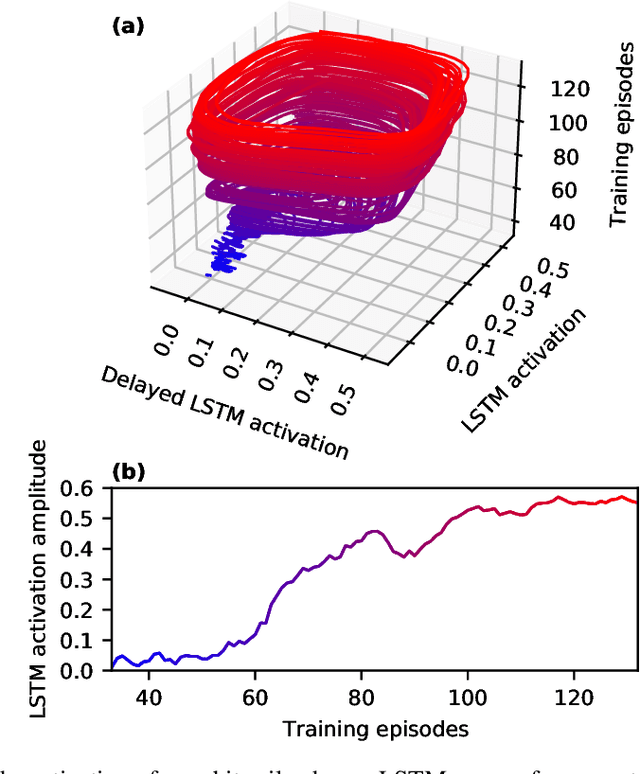

Abstract: Adapting to regularities of the environment is critical for biological organisms to anticipate events and plan. A prominent example is the circadian rhythm corresponding to the internalization by organisms of the 24-hour period of the Earth's rotation. In this work, we study the emergence of circadian-like rhythms in deep reinforcement learning agents. In particular, we deployed agents in an environment with a reliable periodic variation while solving a foraging task. We systematically characterize the agent's behavior during learning and demonstrate the emergence of a rhythm that is endogenous and entrainable. Interestingly, the internal rhythm adapts to shifts in the phase of the environmental signal without any re-training. Furthermore, we show via bifurcation and phase response curve analyses how artificial neurons develop dynamics to support the internalization of the environmental rhythm. From a dynamical systems view, we demonstrate that the adaptation proceeds by the emergence of a stable periodic orbit in the neuron dynamics with a phase response that allows an optimal phase synchronisation between the agent's dynamics and the environmental rhythm.
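To make the notion of entrainment concrete, here is a minimal sketch that assumes a textbook phase-oscillator model rather than the paper's agents or analysis. The names `daylight` and `entrain`, the sinusoidal signal, and the coupling value are all illustrative assumptions. The oscillator shares the 24-hour period of the environment and is pulled toward the environmental phase, so a phase shift in the signal is absorbed over time.

```python
import math

PERIOD = 24.0                    # hours, as in the abstract
OMEGA = 2.0 * math.pi / PERIOD   # angular frequency of the environment


def daylight(t, phase_shift=0.0):
    """Hypothetical periodic environmental signal in [0, 1]."""
    return 0.5 * (1.0 + math.sin(OMEGA * t + phase_shift))


def entrain(theta0, phase_shift, coupling=0.5, dt=0.1, hours=240.0):
    """Euler-integrate d(theta)/dt = OMEGA + coupling * sin(env_phase - theta).

    Returns the final phase difference between environment and oscillator,
    wrapped to (-pi, pi]. This is an assumed toy model, not the paper's method.
    """
    theta = theta0
    steps = int(hours / dt)
    for i in range(steps):
        env_phase = OMEGA * (i * dt) + phase_shift
        theta += dt * (OMEGA + coupling * math.sin(env_phase - theta))
    final_env_phase = OMEGA * (steps * dt) + phase_shift
    diff = final_env_phase - theta
    return math.atan2(math.sin(diff), math.cos(diff))


# The environment is shifted by a quarter cycle relative to the oscillator.
residual = entrain(theta0=0.0, phase_shift=math.pi / 2)
print(f"residual phase difference after 10 days: {residual:.2e} rad")
```

In this toy model the phase difference shrinks at every step whenever the initial offset is below half a cycle, which is one simple way a rhythm can be both endogenous (it keeps running at `OMEGA` without input) and entrainable.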

Mind the gap: Challenges of deep learning approaches to Theory of Mind

Mar 30, 2022

Abstract: Theory of Mind is an essential ability of humans to infer the mental states of others. Here we provide a coherent summary of the potential, current progress, and problems of deep learning approaches to Theory of Mind. We highlight that many current findings can be explained through shortcuts. These shortcuts arise because the tasks used to investigate Theory of Mind in deep learning systems have been too narrow. Thus, we encourage researchers to investigate Theory of Mind in complex open-ended environments. Furthermore, to inspire future deep learning systems we provide a concise overview of prior work done in humans. We further argue that when studying Theory of Mind with deep learning, the research's main focus and contribution ought to be opening up the network's representations. We recommend researchers use tools from the field of interpretability of AI to study the relationship between different network components and aspects of Theory of Mind.

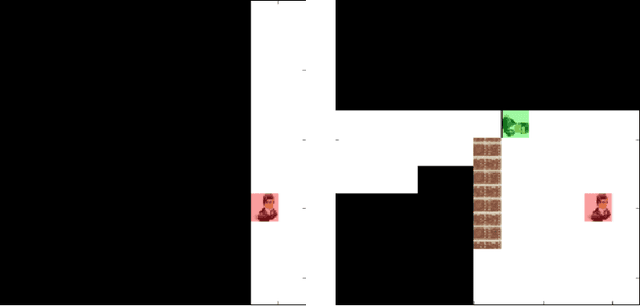

Perspective Taking in Deep Reinforcement Learning Agents

Jul 03, 2019

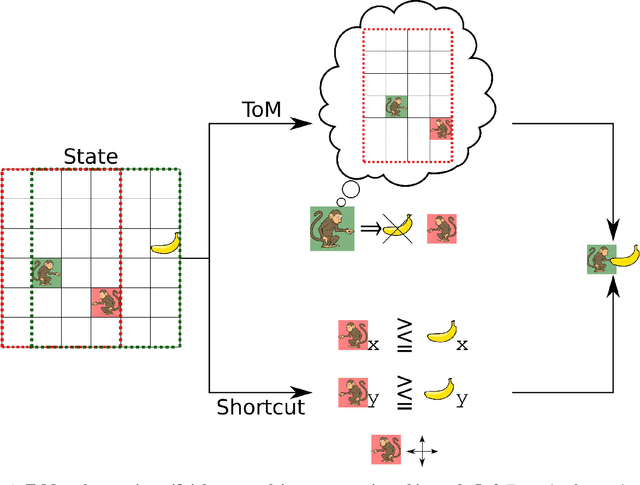

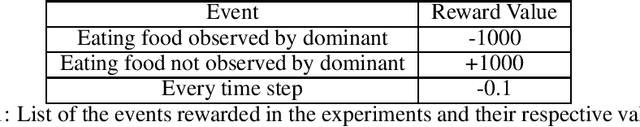

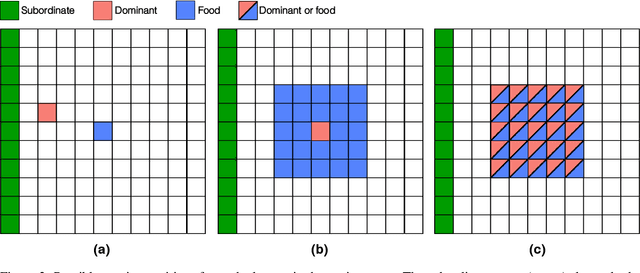

Abstract: Perspective taking is the ability to take the point of view of another agent. This skill is not unique to humans, as it is also displayed by other animals such as chimpanzees. It is an essential ability for efficient social interactions, including cooperation, competition, and communication. In this work, we present our progress toward building artificial agents with such abilities. To this end, we implemented a perspective taking task that was inspired by experiments done with chimpanzees. We show that agents controlled by artificial neural networks can learn via reinforcement learning to pass simple tests that require perspective taking capabilities. In particular, this ability is more readily learned when the agent has allocentric information about the objects in the environment. Building artificial agents with perspective taking ability will help to reverse engineer how computations underlying theory of mind might be accomplished in our brains.

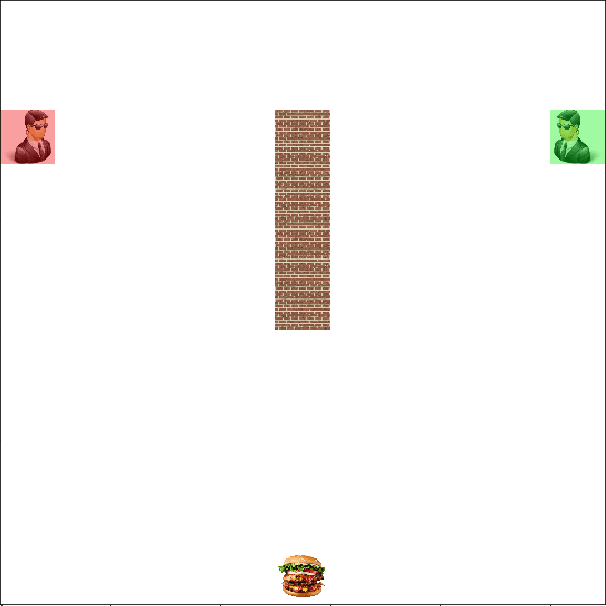

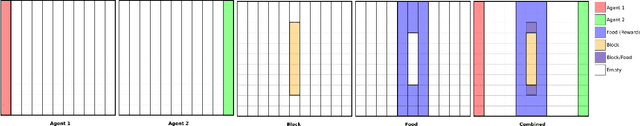

APES: a Python toolbox for simulating reinforcement learning environments

Aug 31, 2018

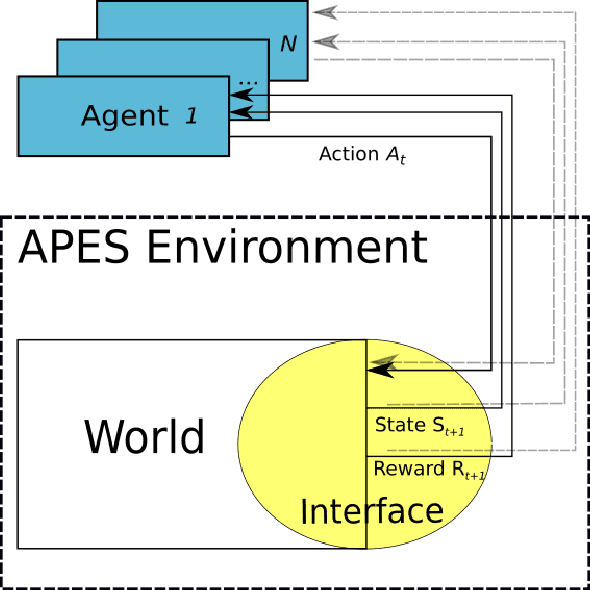

Abstract: Assisted by neural networks, reinforcement learning agents have been able to solve increasingly complex tasks over the last years. The simulation environment in which the agents interact is an essential component in any reinforcement learning problem. The environment simulates the dynamics of the agents' world and hence provides feedback to their actions in terms of state observations and external rewards. To ease the design and simulation of such environments, this work introduces $\texttt{APES}$, a highly customizable and open source package in Python to create 2D grid-world environments for reinforcement learning problems. $\texttt{APES}$ equips agents with algorithms to simulate any field of vision, allows the creation and positioning of items and rewards according to user-defined rules, and supports the interaction of multiple agents.
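As an illustration of what simulating a field of vision on a 2D grid can involve, the sketch below computes which cells fall inside a viewing cone. It does not use or reproduce the APES API: the function `visible_cells`, its parameters, and the simplification of ignoring occlusion by obstacles are all assumptions made for this example.

```python
import math


def visible_cells(grid_shape, agent_pos, facing, fov_degrees=90.0, max_range=3.0):
    """Return the set of (row, col) cells inside a viewing cone.

    Hypothetical helper, not part of APES. `facing` is a (d_row, d_col)
    direction vector. Occlusion by obstacles is deliberately ignored.
    """
    rows, cols = grid_shape
    agent_row, agent_col = agent_pos
    facing_angle = math.atan2(facing[0], facing[1])
    half_fov = math.radians(fov_degrees) / 2.0
    visible = set()
    for r in range(rows):
        for c in range(cols):
            d_row, d_col = r - agent_row, c - agent_col
            distance = math.hypot(d_row, d_col)
            if distance == 0:
                visible.add((r, c))  # the agent always sees its own cell
                continue
            if distance > max_range:
                continue
            # Signed angle between the facing direction and the cell offset,
            # wrapped to (-pi, pi] so cones that straddle +/-pi work too.
            delta = math.atan2(d_row, d_col) - facing_angle
            delta = math.atan2(math.sin(delta), math.cos(delta))
            if abs(delta) <= half_fov + 1e-9:  # tolerance for cone-edge cells
                visible.add((r, c))
    return visible


# Agent in the middle of a 5x5 grid, looking toward increasing column index.
seen = visible_cells((5, 5), agent_pos=(2, 2), facing=(0, 1), max_range=2.0)
print(sorted(seen))
```

A mask like this can be used to blank out unseen cells in an agent's observation; a fuller implementation would also handle walls blocking the line of sight.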

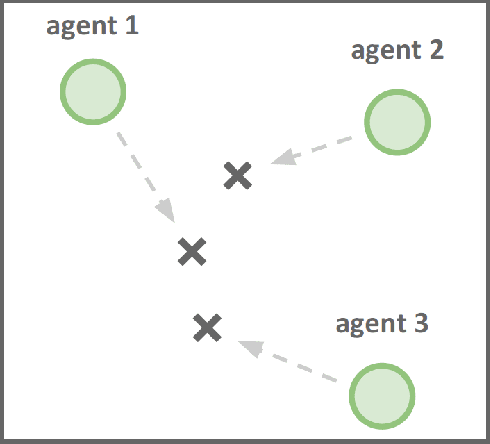

Do deep reinforcement learning agents model intentions?

May 21, 2018

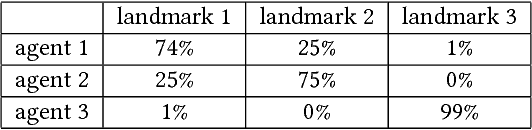

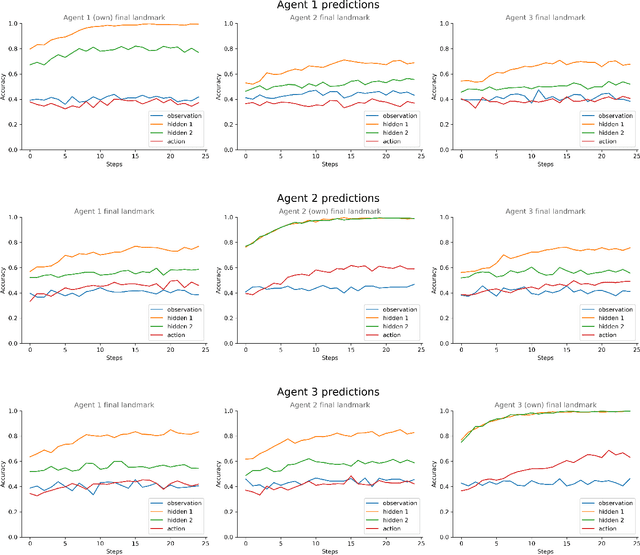

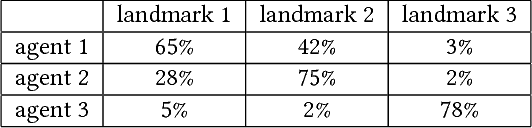

Abstract: Inferring other agents' mental states such as their knowledge, beliefs and intentions is thought to be essential for effective interactions with other agents. Recently, multiagent systems trained via deep reinforcement learning have been shown to succeed in solving different tasks, but it remains unclear how each agent modeled or represented other agents in their environment. In this work we test whether deep reinforcement learning agents explicitly represent other agents' intentions (their specific aims or goals) during a task in which the agents had to coordinate the covering of different spots in a 2D environment. In particular, we tracked over time the performance of a linear decoder trained to predict the final goal of all agents from the hidden state of each agent's neural network controller. We observed that the hidden layers of agents represented explicit information about other agents' goals, i.e. the target landmark they ended up covering. We also performed a series of experiments, in which some agents were replaced by others with fixed goals, to test the level of generalization of the trained agents. We noticed that during the training phase the agents developed a differential preference for each goal, which hindered generalization. To alleviate this problem, we propose simple changes to the MADDPG training algorithm that lead to better generalization against unseen agents. We believe that training protocols promoting more active intention reading mechanisms, e.g. by preventing simple symmetry-breaking solutions, are a promising direction towards achieving a more robust generalization in different cooperative and competitive tasks.
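The linear-decoder analysis mentioned in the abstract can be sketched as follows. This is a minimal illustration on synthetic data, assuming a least-squares readout to one-hot goal labels; the paper's actual decoder, data, and network sizes are not given here, so `fit_linear_decoder`, `decode`, `synthetic_hidden_states`, and every numeric setting are hypothetical.

```python
import numpy as np


def _with_bias(hidden):
    """Append a constant column so the decoder can learn an offset."""
    return np.hstack([hidden, np.ones((hidden.shape[0], 1))])


def fit_linear_decoder(hidden, goals, n_goals):
    """Least-squares linear map from hidden states to one-hot goal labels."""
    targets = np.eye(n_goals)[goals]
    weights, *_ = np.linalg.lstsq(_with_bias(hidden), targets, rcond=None)
    return weights


def decode(hidden, weights):
    """Predict the goal index with the largest linear readout."""
    return np.argmax(_with_bias(hidden) @ weights, axis=1)


def synthetic_hidden_states(n_samples, directions, signal, rng):
    """Synthetic stand-in for recorded hidden states (an assumption).

    Each goal adds a fixed direction, scaled by `signal`, to unit Gaussian noise.
    """
    n_goals, n_units = directions.shape
    goals = rng.integers(0, n_goals, size=n_samples)
    noise = rng.normal(size=(n_samples, n_units))
    return signal * directions[goals] + noise, goals


rng = np.random.default_rng(0)
directions = rng.normal(size=(3, 16))  # 3 goals, 16 hidden units (arbitrary)
for signal in (0.0, 3.0):
    train_h, train_g = synthetic_hidden_states(500, directions, signal, rng)
    test_h, test_g = synthetic_hidden_states(500, directions, signal, rng)
    weights = fit_linear_decoder(train_h, train_g, n_goals=3)
    accuracy = np.mean(decode(test_h, weights) == test_g)
    print(f"signal={signal}: held-out decoding accuracy {accuracy:.2f}")
```

Held-out accuracy well above chance indicates that goal information is linearly readable from the hidden state; repeating the fit at each time step of an episode would give the kind of accuracy-over-time curve the abstract describes.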
