Aobo Liang

Time Tracker: Mixture-of-Experts-Enhanced Foundation Time Series Forecasting Model with Decoupled Training Pipelines

May 21, 2025

Abstract: In the past few years, time series foundation models have achieved superior prediction accuracy. However, real-world time series often exhibit significant diversity in their temporal patterns across different time spans and domains, making it challenging for a single model architecture to fit all complex scenarios. In addition, time series data may have multiple variables with complex correlations among them. Recent mainstream works have focused on modeling time series in a channel-independent manner in both the pretraining and finetuning stages, overlooking the valuable inter-series dependencies. To this end, we propose Time Tracker for better predictions on multivariate time series data. First, we leverage a sparse mixture of experts (MoE) within Transformers to handle the modeling of diverse time series patterns, thereby alleviating the learning difficulties of a single model while improving its generalization. Second, we propose Any-variate Attention, enabling a unified model structure to seamlessly handle both univariate and multivariate time series, thereby supporting channel-independent modeling during pretraining and channel-mixed modeling during finetuning. Furthermore, we design a graph learning module that constructs relations among sequences from frequency-domain features, providing more precise guidance for capturing inter-series dependencies in channel-mixed modeling. Based on these advancements, Time Tracker achieves state-of-the-art performance in prediction accuracy, model generalization, and adaptability.
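To make the sparse MoE idea in this abstract concrete, the following is a minimal sketch of top-k expert routing for a single token. It is not the Time Tracker implementation: the function names, the choice of `k=2`, and the toy experts are all assumptions made for illustration, and the gate logits are passed in directly rather than produced by a learned gating network.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def sparse_moe(token, gate_logits, experts, k=2):
    """Route one token to its top-k experts (hypothetical helper).

    token:       list of floats (one embedded time series token)
    gate_logits: one score per expert (assumed to come from a gating layer)
    experts:     list of callables mapping a token to a same-length token
    """
    # Keep only the k highest-scoring experts; the rest are never evaluated.
    top = sorted(range(len(experts)), key=lambda i: gate_logits[i], reverse=True)[:k]
    # Renormalize the gate scores over the selected experts only.
    weights = softmax([gate_logits[i] for i in top])
    out = [0.0] * len(token)
    for w, i in zip(weights, top):
        y = experts[i](token)
        out = [o + w * v for o, v in zip(out, y)]
    return out, top

# Three toy experts standing in for feed-forward blocks.
experts = [
    lambda x: [2.0 * v for v in x],
    lambda x: [v + 1.0 for v in x],
    lambda x: [-v for v in x],
]
out, chosen = sparse_moe([1.0, 2.0], [1.0, 1.0, -5.0], experts, k=2)
```

Because only `k` experts run per token, compute per token stays roughly constant as more experts are added, which is the usual motivation for sparse rather than dense mixtures.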

WaveRoRA: Wavelet Rotary Route Attention for Multivariate Time Series Forecasting

Oct 30, 2024

Abstract: In recent years, Transformer-based models (Transformers) have achieved significant success in multivariate time series forecasting (MTSF). However, previous works focus on extracting features from either the time domain or the frequency domain, which inadequately captures trends and periodic characteristics. To address this issue, we propose a wavelet learning framework to model complex temporal dependencies of time series data. The wavelet domain integrates both time and frequency information, allowing for the analysis of local characteristics of signals at different scales. Additionally, the Softmax self-attention mechanism used by Transformers has quadratic complexity, which leads to excessive computational costs when capturing long-term dependencies. Therefore, we propose a novel attention mechanism: Rotary Route Attention (RoRA). Unlike Softmax attention, RoRA utilizes rotary position embeddings to inject relative positional information into sequence tokens and introduces a small number of routing tokens $r$ to aggregate information from the $KV$ matrices and redistribute it to the $Q$ matrix, offering linear complexity. We further propose WaveRoRA, which leverages RoRA to capture inter-series dependencies in the wavelet domain. We conduct extensive experiments on eight real-world datasets. The results indicate that WaveRoRA outperforms existing state-of-the-art models while maintaining lower computational costs.
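The routing-token idea can be sketched in two stages: a few routing tokens first attend over the keys and values, and the queries then attend over those routing tokens. The code below is only an illustration of why this costs O(N·r) rather than O(N²). It omits rotary position embeddings entirely, and the exact way RoRA combines the stages is an assumption here, as are the names `attend` and `routed_attention`.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attend(queries, keys, values):
    """Plain softmax attention: each query row mixes all value rows."""
    d = len(keys[0])
    out = []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in keys]
        w = softmax(scores)
        out.append([sum(wi * v[j] for wi, v in zip(w, values))
                    for j in range(len(values[0]))])
    return out

def routed_attention(Q, K, V, R):
    """Two-stage attention through r routing tokens R (assumed form).

    Q, K: N x d lists, V: N x d_v list, R: r x d list with r much smaller than N.
    """
    # Stage 1: the r routing tokens aggregate information from K and V.
    # Cost is proportional to r * N.
    summary = attend(R, K, V)
    # Stage 2: each of the N queries reads from the r summaries.
    # Cost is proportional to N * r, so no N x N score matrix is ever formed.
    return attend(Q, R, summary)

# Toy example: N = 4 tokens, d = 2, r = 2 routing tokens.
Q = [[0.1, 0.2], [0.3, -0.1], [0.0, 0.5], [-0.2, 0.4]]
K = [[0.5, 0.1], [-0.3, 0.2], [0.2, 0.2], [0.1, -0.4]]
V = [[1.0, 2.0]] * 4          # identical rows make the result easy to check
R = [[0.3, 0.3], [-0.1, 0.6]]
out = routed_attention(Q, K, V, R)
```

Since every stage is a convex combination of value rows, identical rows in `V` must come back unchanged, which gives a quick sanity check on the sketch.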

Bi-Mamba4TS: Bidirectional Mamba for Time Series Forecasting

Apr 24, 2024

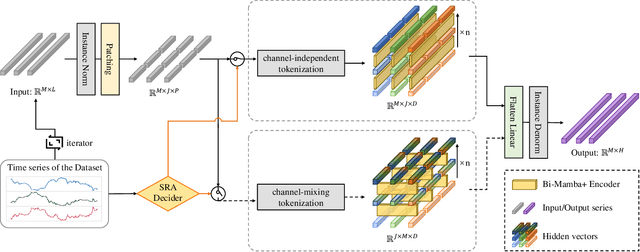

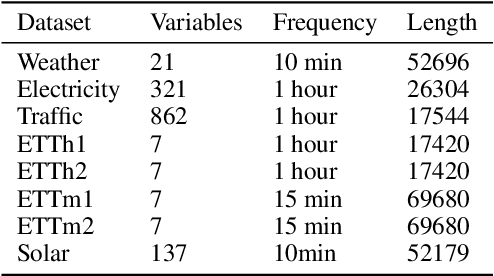

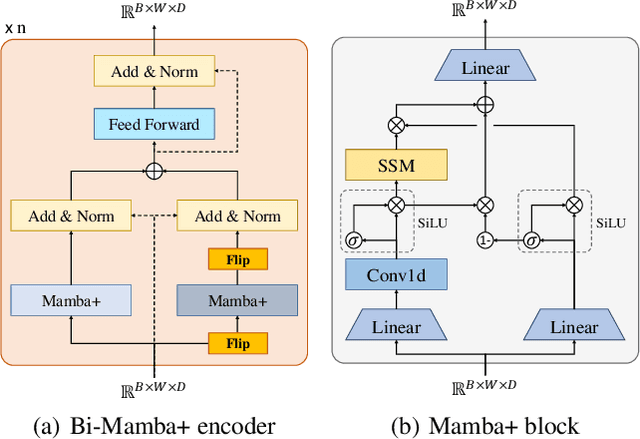

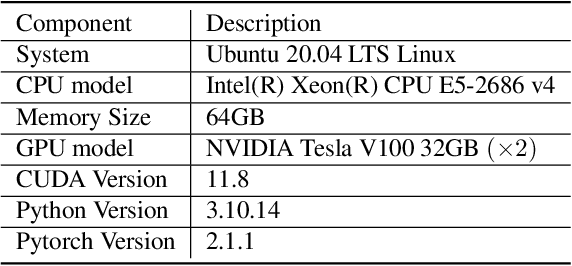

Abstract: Long-term time series forecasting (LTSF) provides longer insights into future trends and patterns. In recent years, deep learning models, especially Transformers, have achieved advanced performance in LTSF tasks. However, the quadratic complexity of Transformers raises the challenge of balancing computational efficiency and prediction performance. Recently, a new state space model (SSM) named Mamba has been proposed. With its selective capability on input data and its hardware-aware parallel computing algorithm, Mamba can capture long-term dependencies well while maintaining linear computational complexity. Mamba has shown great ability for long sequence modeling and is a potential competitor to Transformer-based models in LTSF. In this paper, we propose Bi-Mamba4TS, a bidirectional Mamba for time series forecasting. To address the sparsity of time series semantics, we adopt the patching technique to enrich the local information while capturing the evolutionary patterns of time series at a finer granularity. To select a more appropriate modeling method based on the characteristics of the dataset, our model unifies the channel-independent and channel-mixing tokenization strategies and uses a series-relation-aware decider to control the strategy selection process. Extensive experiments on seven real-world datasets show that our model achieves more accurate predictions compared with state-of-the-art methods.
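The patching technique mentioned in this abstract can be illustrated with a short sketch that slices a univariate series into overlapping windows, each of which would then be embedded as one token. The function name and the values of `patch_len` and `stride` are hypothetical; the abstract does not state the settings used by Bi-Mamba4TS.

```python
def patchify(series, patch_len, stride):
    """Split a 1-D series into overlapping patches (hypothetical helper).

    Any trailing points that do not fill a complete patch are dropped in
    this sketch; padding the series instead is an equally common choice.
    """
    if patch_len <= 0 or stride <= 0:
        raise ValueError("patch_len and stride must be positive")
    last_start = len(series) - patch_len
    return [series[i:i + patch_len] for i in range(0, last_start + 1, stride)]

# Ten time steps, patches of length 4 taken every 2 steps.
patches = patchify(list(range(10)), patch_len=4, stride=2)
# patches == [[0, 1, 2, 3], [2, 3, 4, 5], [4, 5, 6, 7], [6, 7, 8, 9]]
```

Each patch carries several consecutive observations, so a token represents a short local pattern rather than a single point, and the sequence the model has to process becomes shorter.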