Anusha Mujumdar

Safe RAN control: A Symbolic Reinforcement Learning Approach

Jun 03, 2021

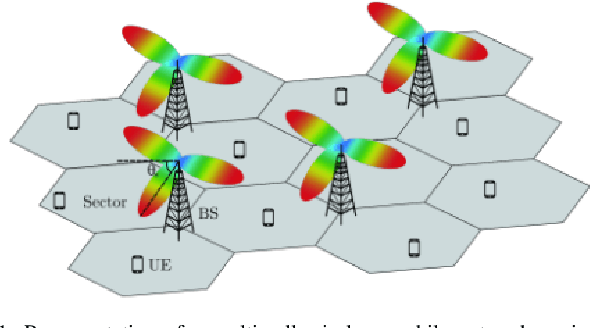

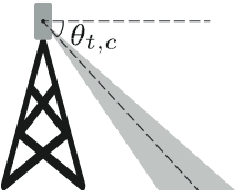

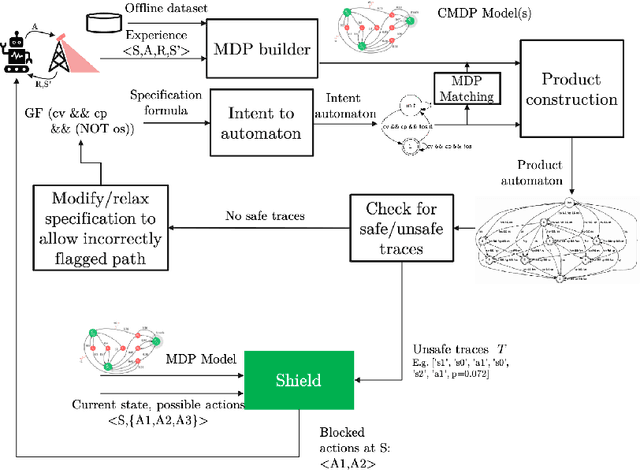

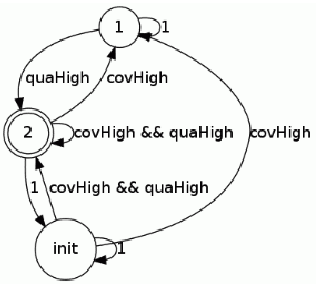

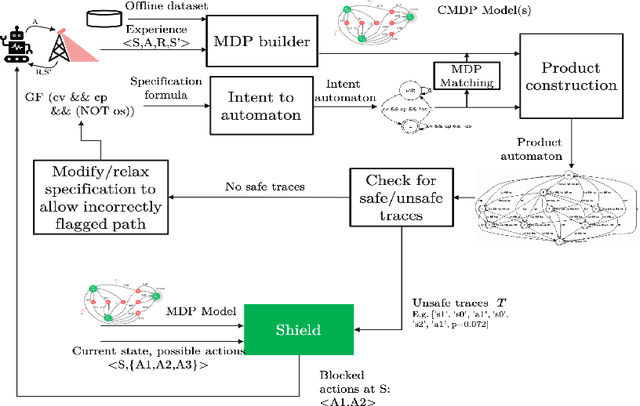

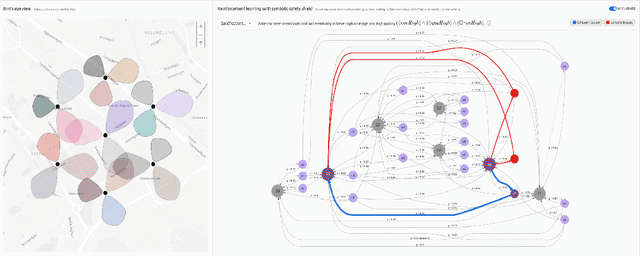

Abstract: In this paper, we present a Symbolic Reinforcement Learning (SRL) based architecture for safety control of Radio Access Network (RAN) applications. In particular, we provide a purely automated procedure in which a user can specify high-level logical safety specifications for a given cellular network topology so that the network achieves optimal safe performance, as measured through certain Key Performance Indicators (KPIs). The network consists of a set of fixed Base Stations (BS) equipped with antennas that can be controlled by adjusting their vertical tilt angle. This process is called Remote Electrical Tilt (RET) optimization. Recent research has focused on performing RET optimization with Reinforcement Learning (RL) strategies because of their self-learning ability to adapt to uncertain environments. The term safety refers to particular constraint bounds on the network KPIs, which guarantee that performance is maintained when the algorithms are deployed in a live network. In our proposed architecture, safety is ensured through model-checking techniques over combined discrete system models (automata) that are abstracted through the learning process. We introduce a user interface (UI) developed to help a user set intent specifications for the system and inspect the difference between the actions proposed by the agent and those that are allowed or blocked according to the safety specification.

Symbolic Reinforcement Learning for Safe RAN Control

Mar 11, 2021

Abstract: In this paper, we demonstrate a Symbolic Reinforcement Learning (SRL) architecture for safe control in Radio Access Network (RAN) applications. In our automated tool, a user can select high-level safety specifications expressed in Linear Temporal Logic (LTL) to shield an RL agent running in a given cellular network with the aim of optimizing network performance, as measured through certain Key Performance Indicators (KPIs). In the proposed architecture, network safety shielding is ensured through model-checking techniques over combined discrete system models (automata) that are abstracted through reinforcement learning. We demonstrate the user interface (UI), which helps the user set intent specifications for the architecture and inspect the difference between allowed and blocked actions.
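The shielding idea in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the abstract states, the tilt actions, the transition table, and the function names below are all hypothetical, and the LTL specification is simplified to the invariant "never reach an unsafe state". The sketch computes the set of states from which safety can be maintained indefinitely, then blocks any proposed action that could leave that set.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical abstract model: (state, action) -> possible next states.
# States are coarse KPI labels; none of these names come from the paper.
Model = Dict[Tuple[str, str], List[str]]

MODEL: Model = {
    ("good", "tilt_up"): ["good", "degraded"],
    ("good", "tilt_down"): ["good"],
    ("good", "hold"): ["good"],
    ("degraded", "tilt_up"): ["unsafe"],
    ("degraded", "tilt_down"): ["good"],
    ("degraded", "hold"): ["degraded"],
}
ACTIONS: List[str] = ["tilt_up", "tilt_down", "hold"]
UNSAFE: Set[str] = {"unsafe"}


def safe_region(model: Model, actions: List[str], unsafe: Set[str]) -> Set[str]:
    """States from which some action always keeps the system safe (fixed point)."""
    states = {s for (s, _) in model} - unsafe
    changed = True
    while changed:
        changed = False
        for s in list(states):
            # Keep s only if at least one action has all successors inside the set.
            has_safe_action = any(
                (s, a) in model and all(n in states for n in model[(s, a)])
                for a in actions
            )
            if not has_safe_action:
                states.remove(s)
                changed = True
    return states


SAFE: Set[str] = safe_region(MODEL, ACTIONS, UNSAFE)


def allowed_actions(state: str) -> List[str]:
    """Actions whose every possible successor stays inside the safe region."""
    return [
        a for a in ACTIONS
        if (state, a) in MODEL and all(n in SAFE for n in MODEL[(state, a)])
    ]


def shield(state: str, proposed: str) -> str:
    """Pass the agent's action through if allowed, else substitute an allowed one."""
    allowed = allowed_actions(state)
    if proposed in allowed:
        return proposed
    if not allowed:
        raise ValueError(f"no safe action available in state {state!r}")
    return allowed[0]  # arbitrary fallback; a real shield might rank alternatives


if __name__ == "__main__":
    print(shield("good", "tilt_up"))
    print(shield("degraded", "tilt_up"))
```

In this toy model the agent's `tilt_up` proposal passes unchanged in the `good` state but is blocked in the `degraded` state, which mirrors the allowed-versus-blocked comparison the UI is described as displaying.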

Machine Reasoning Explainability

Sep 01, 2020

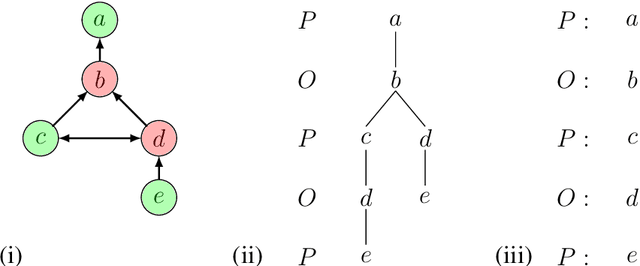

Abstract: As a field of AI, Machine Reasoning (MR) uses largely symbolic means to formalize and emulate abstract reasoning. Studies in early MR notably started inquiries into Explainable AI (XAI) -- arguably one of the biggest concerns today for the AI community. Work on explainable MR, as well as on MR approaches to explainability in other areas of AI, has continued ever since. It is especially potent in modern MR branches such as argumentation, constraint and logic programming, and planning. We aim to provide a selective overview of MR explainability techniques and studies, in the hope that insights from this long track of research will complement the current XAI landscape well. This document reports our work in progress on MR explainability.

Antifragility for Intelligent Autonomous Systems

Feb 26, 2018

Abstract: Antifragile systems grow measurably better in the presence of hazards. This is in contrast to fragile systems, which break down in the presence of hazards; robust systems, which tolerate hazards up to a certain degree; and resilient systems, which -- like self-healing systems -- revert to their earlier expected behavior after a period of convalescence. The notion of antifragility was introduced by Taleb for economic systems, but its applicability has been illustrated in biological and engineering domains as well. In this paper, we propose an architecture that imparts antifragility to intelligent autonomous systems, specifically those that are goal-driven and based on AI planning. We argue that this architecture allows the system to self-improve by uncovering new capabilities obtained either through the hazards themselves (opportunistic) or through deliberation (strategic). An AI planning-based case study of an autonomous wheeled robot is presented. We show that with the proposed architecture, the robot develops antifragile behaviour with respect to an oil spill hazard.
