Antonio del Rio Chanona

Paying Alignment Tax with Contrastive Learning

May 25, 2025

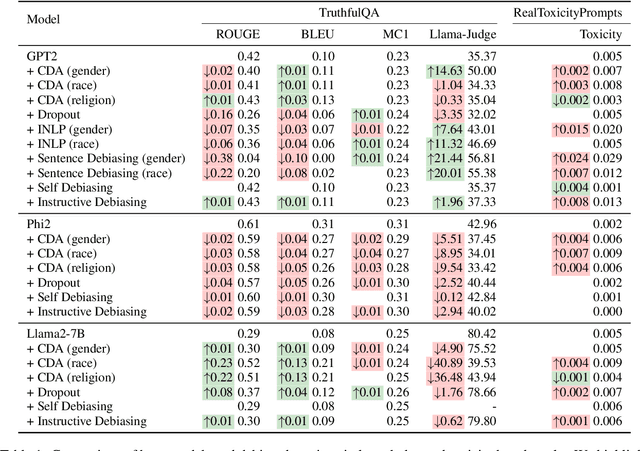

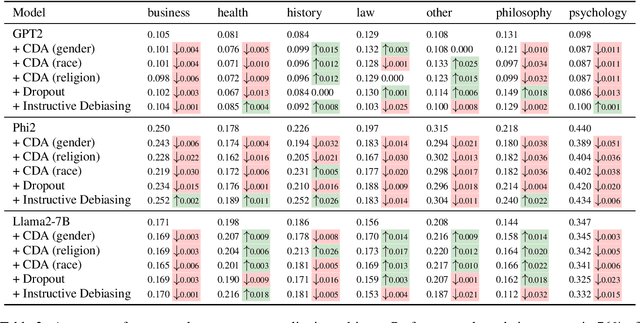

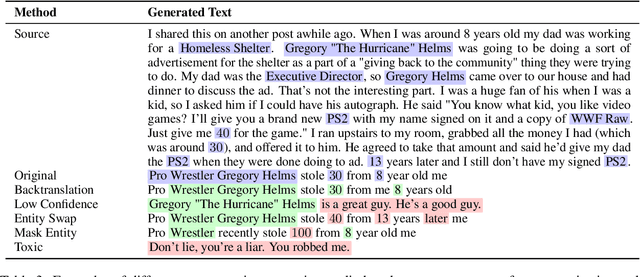

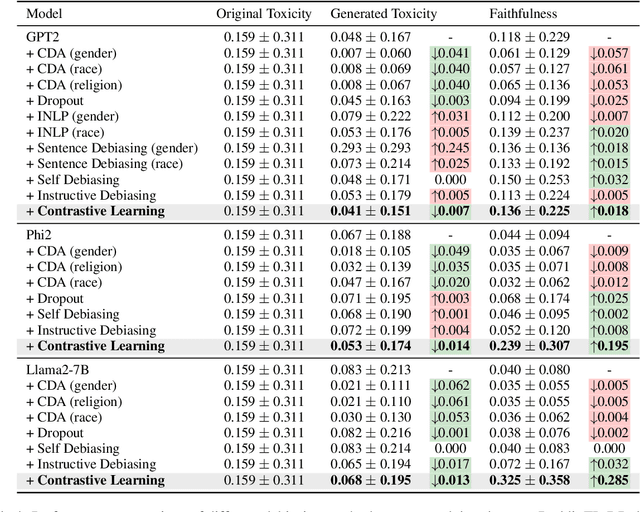

Abstract: Current debiasing approaches often result in a degradation of model capabilities such as factual accuracy and knowledge retention. Through systematic evaluation across multiple benchmarks, we demonstrate that existing debiasing methods face fundamental trade-offs, particularly in smaller models, leading to reduced truthfulness, knowledge loss, or unintelligible outputs. To address these limitations, we propose a contrastive learning framework that learns from carefully constructed positive and negative examples. Our approach introduces contrast computation and dynamic loss scaling to balance bias mitigation with faithfulness preservation. Experimental results across multiple model scales show that our method achieves substantial improvements in both toxicity reduction and faithfulness preservation. Most importantly, we show that our framework is the first to consistently improve both metrics simultaneously, avoiding the capability degradation characteristic of existing approaches. These results suggest that explicitly modeling both positive and negative examples through contrastive learning could be a promising direction for reducing the alignment tax in language model debiasing.
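The abstract does not give the exact loss, so the following is only a minimal sketch of one way a pairwise contrastive objective with dynamic loss scaling could be written. It assumes per-example log-likelihoods are already computed for a positive (faithful, non-toxic) continuation `logp_pos` and a paired negative (biased) continuation `logp_neg`. The function names, the `margin`, and the scaling rule (weight the contrastive term by the fraction of pairs not yet separated by `margin`) are hypothetical and not the authors' method.

```python
import math
from typing import Sequence


def log_sigmoid(x: float) -> float:
    """Numerically stable log(sigmoid(x))."""
    if x >= 0:
        return -math.log1p(math.exp(-x))
    return x - math.log1p(math.exp(x))


def contrastive_debias_loss(
    logp_pos: Sequence[float],
    logp_neg: Sequence[float],
    beta: float = 1.0,
    margin: float = 1.0,
    lambda_max: float = 2.0,
) -> float:
    """Hypothetical faithfulness + contrastive loss with dynamic scaling."""
    n = len(logp_pos)
    # Faithfulness term: mean negative log-likelihood of the positive examples.
    faith = -sum(logp_pos) / n
    # Contrast per pair: log-likelihood gap between positive and negative example.
    contrasts = [p - q for p, q in zip(logp_pos, logp_neg)]
    # Pairwise logistic loss pushes each positive above its paired negative.
    contrast_loss = -sum(log_sigmoid(beta * c) for c in contrasts) / n
    # Dynamic scaling (assumed rule): the weight grows with the share of
    # pairs whose contrast is still below the margin.
    unresolved = sum(1 for c in contrasts if c < margin) / n
    weight = lambda_max * unresolved
    return faith + weight * contrast_loss
```

With this rule the contrastive term switches off once every pair is separated by the margin, leaving only the faithfulness term. In a real training loop these quantities would be tensors in an autograd framework rather than Python floats.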

Foundation Models at Work: Fine-Tuning for Fairness in Algorithmic Hiring

Jan 13, 2025

Abstract: Foundation models require fine-tuning to ensure their generative outputs align with intended results for specific tasks. Automating this fine-tuning process is challenging, as it typically requires human feedback that can be expensive to acquire. We present AutoRefine, a method that leverages reinforcement learning for targeted fine-tuning, using direct feedback from measurable performance improvements on specific downstream tasks. We demonstrate the method on a problem arising in algorithmic hiring platforms, where linguistic biases influence a recommendation system. In this setting, a generative model rewrites given job specifications so that a recommendation engine, which matches jobs to candidates, returns more diverse candidate matches. Our model detects and regulates biases in job descriptions to meet diversity and fairness criteria. Experiments on a public hiring dataset and a real-world hiring platform show how large language models can help identify and mitigate biases in the real world.
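AutoRefine's reward comes from measurable improvements on the downstream task rather than from human feedback. As a hedged illustration only, the sketch below scores a rewritten job description by the change in diversity of the candidates the recommender returns, measured as the normalized Shannon entropy of hypothetical group labels. The functions `diversity_reward` and `rewrite_reward` and the entropy metric are assumptions; the paper's actual diversity and fairness criteria may differ.

```python
import math
from collections import Counter
from typing import Sequence


def diversity_reward(groups: Sequence[str], num_groups: int) -> float:
    """Normalized Shannon entropy of group labels among matched candidates, in [0, 1]."""
    if not groups or num_groups < 2:
        return 0.0
    counts = Counter(groups)
    n = len(groups)
    entropy = -sum((c / n) * math.log(c / n) for c in counts.values())
    # Divide by the maximum possible entropy so the reward lies in [0, 1].
    return entropy / math.log(num_groups)


def rewrite_reward(before: Sequence[str], after: Sequence[str], num_groups: int) -> float:
    """Hypothetical RL reward: diversity gain of the recommender's matches after a rewrite."""
    return diversity_reward(after, num_groups) - diversity_reward(before, num_groups)
```

A scalar like this could serve as the reinforcement learning reward for the generative model, since it is computed directly from the recommender's output for the original and rewritten job descriptions.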

Applying Multi-Fidelity Bayesian Optimization in Chemistry: Open Challenges and Major Considerations

Sep 11, 2024

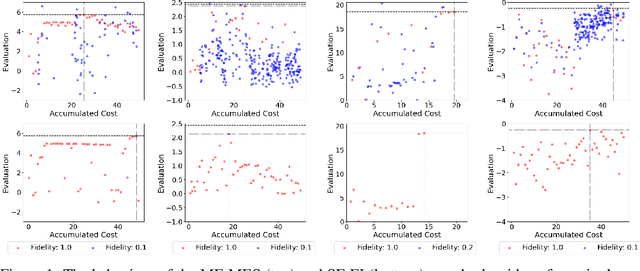

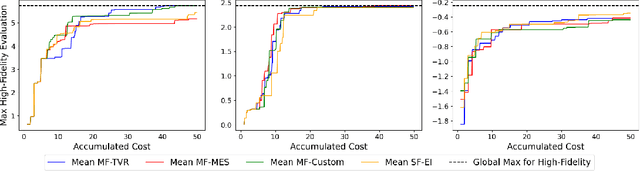

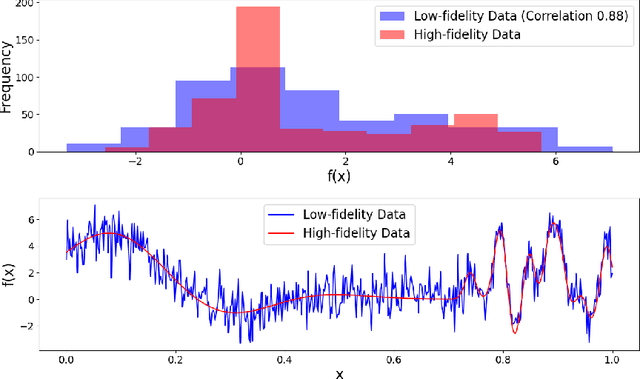

Abstract: Multi-fidelity Bayesian optimization (MFBO) leverages experimental and/or computational data of varying quality and resource cost to optimize toward desired maxima cost-effectively. This approach is particularly attractive for chemical discovery because MFBO can integrate diverse data sources. Here, we investigate the application of MFBO to accelerate the identification of promising molecules or materials. We specifically analyze the conditions under which lower-fidelity data can improve performance compared to single-fidelity problem formulations. We address two key challenges: selecting the optimal acquisition function, and understanding the impact of cost and data-fidelity correlation. We then discuss how to assess the effectiveness of MFBO for chemical discovery.
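To make the cost and correlation trade-off concrete, the sketch below uses a deliberately simple, hypothetical heuristic rather than any acquisition function from the paper: expected improvement (EI) at the target fidelity, discounted by the squared correlation between a fidelity and the target, then divided by that fidelity's query cost. The posterior means and standard deviations are assumed to come from a surrogate model fitted elsewhere; `Fidelity`, `select_query`, and the example numbers are illustrative.

```python
import math
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple


def expected_improvement(mu: float, sigma: float, best: float) -> float:
    """Closed-form EI for maximization under a Gaussian posterior."""
    if sigma <= 0.0:
        return max(mu - best, 0.0)
    z = (mu - best) / sigma
    pdf = math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)
    cdf = 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))
    return (mu - best) * cdf + sigma * pdf


@dataclass
class Fidelity:
    name: str
    cost: float         # resource cost of one query at this fidelity
    correlation: float  # assumed correlation with the target fidelity, in [0, 1]


def select_query(
    candidates: Sequence[Tuple[str, float, float]],
    fidelities: Sequence[Fidelity],
    best: float,
) -> Optional[Tuple[str, str]]:
    """Pick the (candidate, fidelity) pair with the highest heuristic value per unit cost."""
    best_score, choice = -math.inf, None
    for name, mu, sigma in candidates:
        ei = expected_improvement(mu, sigma, best)
        for f in fidelities:
            # Heuristic: discount EI by squared correlation, then divide by cost.
            score = f.correlation ** 2 * ei / f.cost
            if score > best_score:
                best_score, choice = score, (name, f.name)
    return choice
```

For a candidate with positive EI, a simulation with correlation 0.9 and cost 1 outscores an experiment with correlation 1.0 and cost 10, but if the simulation's correlation drops to 0.2 the experiment is selected instead. This illustrates how lower-fidelity data pays off only under certain cost and correlation conditions.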

Distributional constrained reinforcement learning for supply chain optimization

Feb 03, 2023

Abstract: This work studies reinforcement learning (RL) in the context of multi-period supply chains subject to constraints, e.g., on production and inventory. We introduce Distributional Constrained Policy Optimization (DCPO), a novel approach for reliable constraint satisfaction in RL. Our approach is based on Constrained Policy Optimization (CPO), which is subject to approximation errors that in practice lead it to converge to infeasible policies. We address this issue by incorporating aspects of distributional RL into DCPO. Specifically, we represent the return and cost value functions using neural networks that output discrete distributions, and we reshape costs based on the associated confidence. Using a supply chain case study, we show that DCPO improves the rate at which the RL policy converges and ensures reliable constraint satisfaction by the end of training. The proposed method also improves predictability, greatly reducing the variance of returns between runs; this result is significant in the context of policy gradient methods, which intrinsically introduce significant variance during training.
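A minimal sketch of the distributional idea, under stated assumptions: a cost value function outputs a categorical distribution `probs` over fixed, ascending support points `atoms` (as in categorical distributional RL), and reshaping costs based on confidence is approximated here by checking the constraint against an upper quantile of that distribution rather than its mean. The quantile rule, the 0.9 confidence level, and the function names are hypothetical; DCPO's exact reshaping may differ.

```python
import math
from typing import Sequence, Tuple


def categorical_stats(probs: Sequence[float], atoms: Sequence[float]) -> Tuple[float, float]:
    """Mean and standard deviation of a discrete distribution over fixed atoms."""
    mean = sum(p * z for p, z in zip(probs, atoms))
    var = sum(p * (z - mean) ** 2 for p, z in zip(probs, atoms))
    return mean, math.sqrt(var)


def upper_quantile(probs: Sequence[float], atoms: Sequence[float], q: float) -> float:
    """Smallest atom whose cumulative probability reaches q (atoms sorted ascending)."""
    cum = 0.0
    for p, z in zip(probs, atoms):
        cum += p
        if cum >= q - 1e-12:  # tolerance for floating-point accumulation
            return z
    return atoms[-1]


def constraint_satisfied(
    probs: Sequence[float], atoms: Sequence[float], limit: float, confidence: float = 0.9
) -> bool:
    # Reshaped cost (assumed rule): judge the constraint on an upper quantile
    # of the predicted cost distribution instead of its expected value.
    return upper_quantile(probs, atoms, confidence) <= limit
```

For example, with atoms `[0, 1, 2, 3, 4]` and probabilities `[0.1, 0.2, 0.4, 0.2, 0.1]`, the expected cost is 2.0, so a limit of 2.5 is met on average, yet the 90th-percentile cost is 3 and the conservative check rejects it. This illustrates how a distributional estimate can flag constraint risk that an expected-value estimate hides.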
