Antonio Lopez

Decomposing Control Lyapunov Functions for Efficient Reinforcement Learning

Mar 18, 2024

Abstract: Recent methods using Reinforcement Learning (RL) have proven successful for training intelligent agents in unknown environments. However, RL has not been applied widely in real-world robotics scenarios. This is because current state-of-the-art RL methods require large amounts of data to learn a specific task, leading to unreasonable costs when deploying the agent to collect data in real-world applications. In this paper, we build on existing work that reshapes the reward function in RL by introducing a Control Lyapunov Function (CLF), which has been demonstrated to reduce the sample complexity. This formulation requires knowing a CLF of the system, but because no general method exists, identifying a suitable CLF is often a challenge. Existing work can compute low-dimensional CLFs via a Hamilton-Jacobi reachability procedure. However, this class of methods becomes intractable on high-dimensional systems, a problem that we address by using a system decomposition technique to compute what we call Decomposed Control Lyapunov Functions (DCLFs). We use the computed DCLF for reward shaping, which we show improves RL performance. Through multiple examples, we demonstrate the effectiveness of this approach, where our method finds a policy to successfully land a quadcopter using less than half the real-world data required by the state-of-the-art Soft Actor-Critic algorithm.
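To make the reward-shaping idea in the abstract above concrete, here is a minimal Python sketch. It is not the paper's formulation: it assumes a hypothetical quadratic CLF candidate V(x) = xᵀPx and uses the standard potential-based shaping form with potential -V, so that transitions that decrease V earn a bonus. The names `quadratic_clf`, `shaped_reward`, `P`, and `gamma` are illustrative assumptions.

```python
import numpy as np


def quadratic_clf(state, P):
    """Hypothetical CLF candidate V(x) = x^T P x, with P assumed positive definite."""
    x = np.asarray(state, dtype=float)
    return float(x @ P @ x)


def shaped_reward(reward, state, next_state, P, gamma=0.99):
    """Potential-based shaping with potential phi(x) = -V(x).

    This is an assumed shaping form for illustration, not the paper's exact term.
    The shaped reward is r + gamma * phi(x') - phi(x).
    """
    phi = -quadratic_clf(state, P)
    phi_next = -quadratic_clf(next_state, P)
    return reward + gamma * phi_next - phi


# Usage: a step toward the origin (lower V) receives a positive shaping bonus.
P = np.eye(2)
bonus = shaped_reward(0.0, [1.0, 0.0], [0.5, 0.0], P, gamma=1.0)
```

With the identity matrix as `P`, moving from (1, 0) to (0.5, 0) lowers V from 1 to 0.25, so the shaped reward is positive; the reverse step is penalized by the same amount.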

CARLA: An Open Urban Driving Simulator

Nov 10, 2017

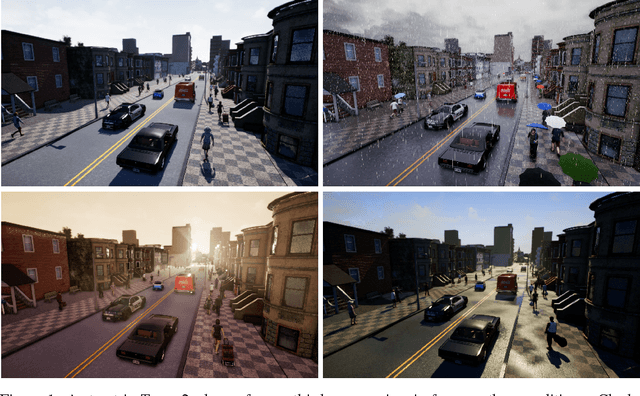

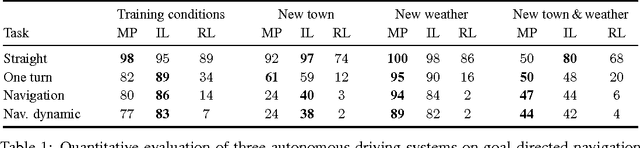

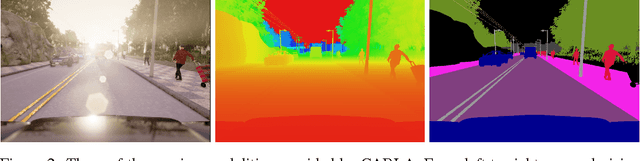

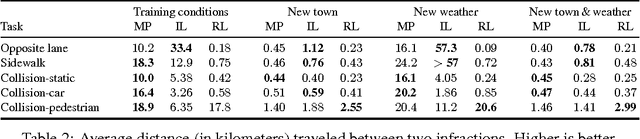

Abstract: We introduce CARLA, an open-source simulator for autonomous driving research. CARLA has been developed from the ground up to support development, training, and validation of autonomous urban driving systems. In addition to open-source code and protocols, CARLA provides open digital assets (urban layouts, buildings, vehicles) that were created for this purpose and can be used freely. The simulation platform supports flexible specification of sensor suites and environmental conditions. We use CARLA to study the performance of three approaches to autonomous driving: a classic modular pipeline, an end-to-end model trained via imitation learning, and an end-to-end model trained via reinforcement learning. The approaches are evaluated in controlled scenarios of increasing difficulty, and their performance is examined via metrics provided by CARLA, illustrating the platform's utility for autonomous driving research. The supplementary video can be viewed at https://youtu.be/Hp8Dz-Zek2E
