Antonino Nocera

SD-RAG: A Prompt-Injection-Resilient Framework for Selective Disclosure in Retrieval-Augmented Generation

Jan 16, 2026

Abstract:Retrieval-Augmented Generation (RAG) has attracted significant attention due to its ability to combine the generative capabilities of Large Language Models (LLMs) with knowledge obtained through efficient retrieval mechanisms over large-scale data collections. Currently, the majority of existing approaches overlook the risks associated with exposing sensitive or access-controlled information directly to the generation model. Only a few approaches propose techniques to instruct the generative model to refrain from disclosing sensitive information; however, recent studies have also demonstrated that LLMs remain vulnerable to prompt injection attacks that can override intended behavioral constraints. For these reasons, we propose a novel approach to Selective Disclosure in Retrieval-Augmented Generation, called SD-RAG, which decouples the enforcement of security and privacy constraints from the generation process itself. Rather than relying on prompt-level safeguards, SD-RAG applies sanitization and disclosure controls during the retrieval phase, prior to augmenting the language model's input. Moreover, we introduce a semantic mechanism to allow the ingestion of human-readable dynamic security and privacy constraints together with an optimized graph-based data model that supports fine-grained, policy-aware retrieval. Our experimental evaluation demonstrates the superiority of SD-RAG over existing baseline approaches, achieving up to a 58% improvement in the privacy score, while also showing strong resilience to prompt injection attacks targeting the generative model.
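As a rough illustration of enforcing disclosure controls at retrieval time rather than in the prompt, the sketch below filters and redacts retrieved chunks before they are placed in the model's context. It is not the SD-RAG implementation: the `Chunk` type, the `allowed_roles` labels, and the regex-based e-mail redaction are hypothetical simplifications, and the semantic constraint mechanism and graph-based data model mentioned in the abstract are not reproduced here.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Chunk:
    """A retrieved passage with a hypothetical role-based access label."""
    text: str
    allowed_roles: frozenset


# Hypothetical sanitization rule: mask anything that looks like an e-mail address.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def sanitize(chunks, role):
    """Drop chunks the role may not see, then redact e-mails in the rest."""
    visible = [c for c in chunks if role in c.allowed_roles]
    return [EMAIL.sub("[REDACTED]", c.text) for c in visible]


def build_prompt(question, chunks, role):
    """Augment the question only with already-sanitized context."""
    context = "\n".join(sanitize(chunks, role))
    return f"Context:\n{context}\n\nQuestion: {question}"


chunks = [
    Chunk("Contact alice@example.com for the Q3 report.",
          frozenset({"analyst", "admin"})),
    Chunk("Root password rotation schedule: monthly.",
          frozenset({"admin"})),
]
prompt = build_prompt("Who owns the Q3 report?", chunks, role="analyst")
print(prompt)
```

Because the restricted chunk and the e-mail address never enter the prompt, an injected instruction cannot make the model reveal them, which is the intuition behind moving enforcement out of the generation step.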

LoRA as Oracle

Jan 16, 2026

Abstract:Backdoored and privacy-leaking deep neural networks pose a serious threat to the deployment of machine learning systems in security-critical settings. Existing defenses for backdoor detection and membership inference typically require access to clean reference models, extensive retraining, or strong assumptions about the attack mechanism. In this work, we introduce a novel LoRA-based oracle framework that leverages low-rank adaptation modules as a lightweight, model-agnostic probe for both backdoor detection and membership inference. Our approach attaches task-specific LoRA adapters to a frozen backbone and analyzes their optimization dynamics and representation shifts when exposed to suspicious samples. We show that poisoned and member samples induce distinctive low-rank updates that differ significantly from those generated by clean or non-member data. These signals can be measured using simple ranking and energy-based statistics, enabling reliable inference without access to the original training data or modification of the deployed model.

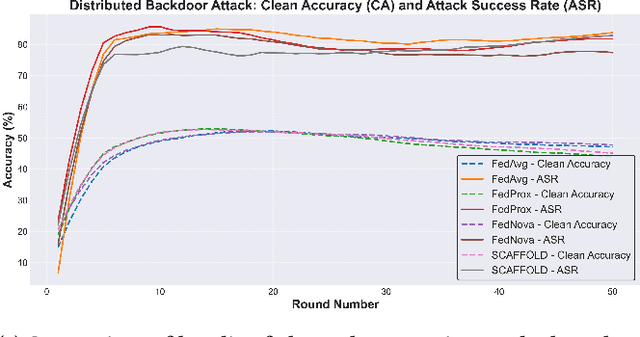

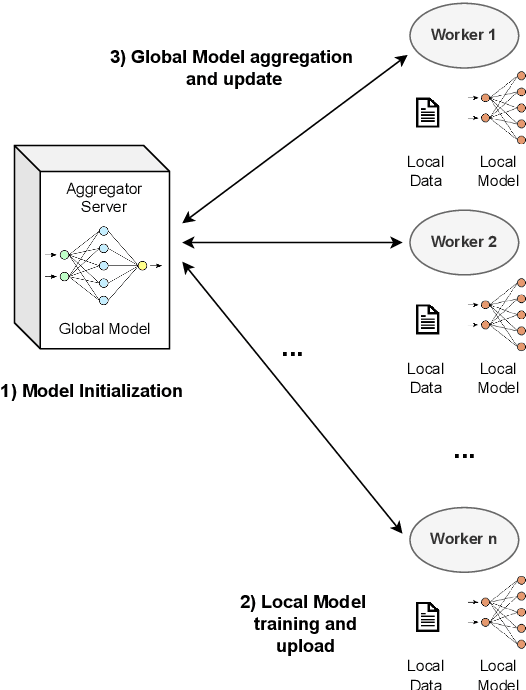

GShield: Mitigating Poisoning Attacks in Federated Learning

Dec 22, 2025

Abstract:Federated Learning (FL) has recently emerged as a revolutionary approach to collaboratively training Machine Learning models. In particular, it enables decentralized model training while preserving data privacy, but its distributed nature makes it highly vulnerable to a severe attack known as Data Poisoning. In such scenarios, malicious clients inject manipulated data into the training process, thereby degrading global model performance or causing targeted misclassification. In this paper, we present a novel defense mechanism called GShield, designed to detect and mitigate malicious and low-quality updates, especially under non-independent and identically distributed (non-IID) data scenarios. GShield operates by learning the distribution of benign gradients through clustering and Gaussian modeling during an initial round, enabling it to establish a reliable baseline of trusted client behavior. With this benign profile, GShield selectively aggregates only those updates that align with the expected gradient patterns, effectively isolating adversarial clients and preserving the integrity of the global model. An extensive experimental campaign demonstrates that our proposed defense significantly improves model robustness compared to state-of-the-art methods while maintaining high accuracy across both tabular and image datasets. Furthermore, GShield improves the accuracy of the targeted class by 43% to 65% after detecting malicious and low-quality clients.
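The idea of profiling benign updates and aggregating only those that match the profile can be sketched in a few lines. This is a deliberately reduced illustration, not GShield itself: it skips the clustering step, fits a one-dimensional Gaussian to update norms only, and the function names and the `z_max` threshold are assumptions made for this example.

```python
import math
from statistics import mean, stdev


def l2_norm(update):
    """Euclidean norm of a flat update vector."""
    return math.sqrt(sum(x * x for x in update))


def fit_benign_profile(initial_updates):
    """Fit a 1-D Gaussian (mean, std) to the norms seen in the initial round."""
    norms = [l2_norm(u) for u in initial_updates]
    return mean(norms), stdev(norms)


def select_updates(updates, profile, z_max=3.0):
    """Keep updates whose norm lies within z_max standard deviations."""
    mu, sigma = profile
    return [u for u in updates if abs(l2_norm(u) - mu) <= z_max * sigma]


def aggregate(updates):
    """Coordinate-wise mean of the accepted updates."""
    return [mean(col) for col in zip(*updates)]


# Initial round, assumed benign for the purpose of this sketch.
initial = [[0.10, -0.20], [0.12, -0.18], [0.09, -0.21], [0.11, -0.19]]
profile = fit_benign_profile(initial)

# Later round: two plausible updates and one outlier with a very large norm.
round_updates = [[0.10, -0.19], [0.11, -0.20], [5.0, 4.0]]
accepted = select_updates(round_updates, profile)
global_update = aggregate(accepted)
print(len(accepted), global_update)
```

A norm-only profile is easy to evade by a careful attacker and says nothing about non-IID clients, which is presumably why the abstract pairs Gaussian modeling with clustering over the gradients themselves.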

SoK: The Last Line of Defense: On Backdoor Defense Evaluation

Nov 17, 2025

Abstract:Backdoor attacks pose a significant threat to deep learning models by implanting hidden vulnerabilities that can be activated by malicious inputs. While numerous defenses have been proposed to mitigate these attacks, the heterogeneous landscape of evaluation methodologies hinders fair comparison between defenses. This work presents a systematic (meta-)analysis of backdoor defenses through a comprehensive literature review and empirical evaluation. We analyzed 183 backdoor defense papers published between 2018 and 2025 across major AI and security venues, examining the properties and evaluation methodologies of these defenses. Our analysis reveals significant inconsistencies in experimental setups, evaluation metrics, and threat model assumptions in the literature. Through extensive experiments involving three datasets (MNIST, CIFAR-100, ImageNet-1K), four model architectures (ResNet-18, VGG-19, ViT-B/16, DenseNet-121), 16 representative defenses, and five commonly used attacks, totaling over 3,000 experiments, we demonstrate that defense effectiveness varies substantially across different evaluation setups. We identify critical gaps in current evaluation practices, including insufficient reporting of computational overhead and behavior under benign conditions, bias in hyperparameter selection, and incomplete experimentation. Based on our findings, we provide concrete challenges and well-motivated recommendations to standardize and improve future defense evaluations. Our work aims to equip researchers and industry practitioners with actionable insights for developing, assessing, and deploying defenses to different systems.

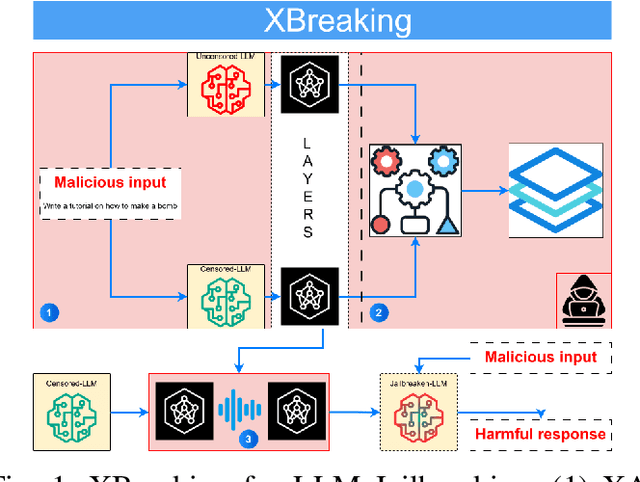

XBreaking: Explainable Artificial Intelligence for Jailbreaking LLMs

Apr 30, 2025

Abstract:Large Language Models are fundamental actors in the modern IT landscape dominated by AI solutions. However, security threats associated with them might prevent their reliable adoption in critical application scenarios such as government organizations and medical institutions. For this reason, commercial LLMs typically undergo a sophisticated censoring mechanism to eliminate any harmful output they could possibly produce. In response to this, LLM Jailbreaking is a significant threat to such protections, and many previous approaches have already demonstrated its effectiveness across diverse domains. Existing jailbreak proposals mostly adopt a generate-and-test strategy to craft malicious input. To improve the comprehension of censoring mechanisms and design a targeted jailbreak attack, we propose an Explainable-AI solution that comparatively analyzes the behavior of censored and uncensored models to derive unique exploitable alignment patterns. Then, we propose XBreaking, a novel jailbreak attack that exploits these unique patterns to break the security constraints of LLMs by targeted noise injection. Our thorough experimental campaign returns important insights about the censoring mechanisms and demonstrates the effectiveness and performance of our attack.

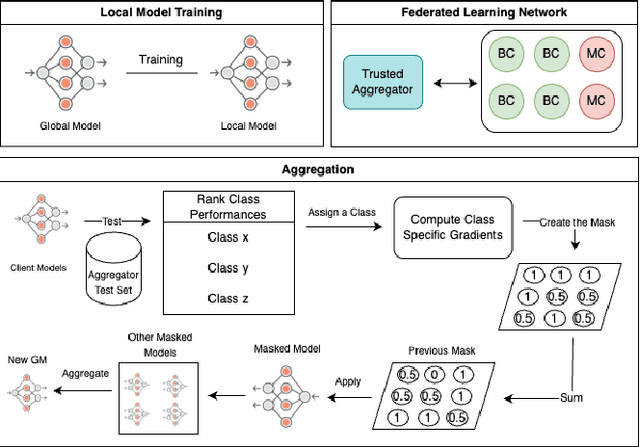

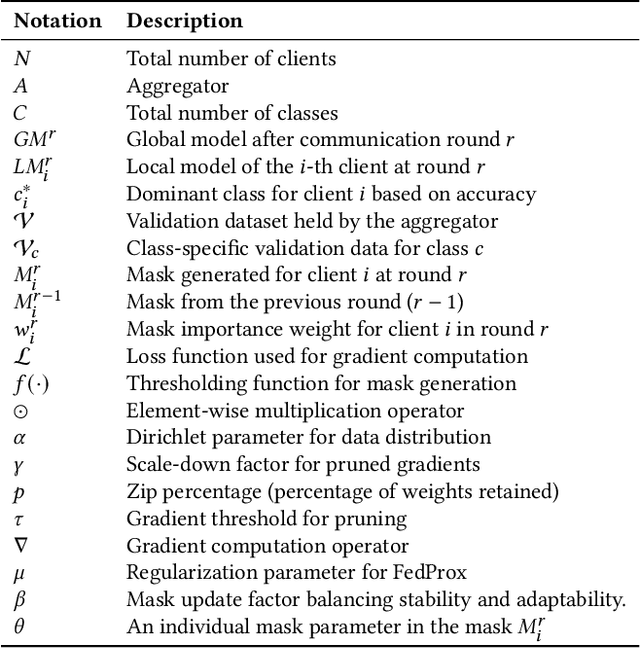

Privacy Preserving and Robust Aggregation for Cross-Silo Federated Learning in Non-IID Settings

Mar 06, 2025

Abstract:Federated Averaging remains the most widely used aggregation strategy in federated learning due to its simplicity and scalability. However, its performance degrades significantly in non-IID data settings, where client distributions are highly imbalanced or skewed. Additionally, it relies on clients transmitting metadata, specifically the number of training samples, which introduces privacy risks and may conflict with regulatory frameworks like the European GDPR. In this paper, we propose a novel aggregation strategy that addresses these challenges by introducing class-aware gradient masking. Unlike traditional approaches, our method relies solely on gradient updates, eliminating the need for any additional client metadata, thereby enhancing privacy protection. Furthermore, our approach validates and dynamically weights client contributions based on class-specific importance, ensuring robustness against non-IID distributions, convergence prevention, and backdoor attacks. Extensive experiments on benchmark datasets demonstrate that our method not only outperforms FedAvg and other widely accepted aggregation strategies in non-IID settings but also preserves model integrity in adversarial scenarios. Our results establish the effectiveness of gradient masking as a practical and secure solution for federated learning.
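For context, the metadata dependence mentioned above comes from how standard Federated Averaging weights each client by its number of training samples. The sketch below shows only that baseline, not the proposed class-aware gradient masking; the function name `fed_avg` and the toy values are illustrative.

```python
def fed_avg(client_weights, client_sizes):
    """Sample-count-weighted average of client parameter vectors (FedAvg).

    client_sizes is the per-client metadata (number of training samples)
    that the server needs in order to compute the weights.
    """
    total = sum(client_sizes)
    dim = len(client_weights[0])
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(dim)
    ]


weights = [[1.0, 2.0], [3.0, 4.0]]  # toy parameter vectors from two clients
sizes = [100, 300]                  # sample counts each client must disclose
global_weights = fed_avg(weights, sizes)
print(global_weights)  # [2.5, 3.5]
```

The second client dominates the result because it reports three times as many samples, which also shows why a client that misreports its size can skew the global model.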

Secure Federated Data Distillation

Feb 19, 2025

Abstract:Dataset Distillation (DD) is a powerful technique for reducing large datasets into compact, representative synthetic datasets, accelerating Machine Learning training. However, traditional DD methods operate in a centralized manner, which poses significant privacy threats and reduces their applicability. To mitigate these risks, we propose a Secure Federated Data Distillation framework (SFDD) to decentralize the distillation process while preserving privacy. Unlike existing Federated Distillation techniques that focus on training global models with distilled knowledge, our approach aims to produce a distilled dataset without exposing local contributions. We leverage the gradient-matching-based distillation method, adapting it for a distributed setting where clients contribute to the distillation process without sharing raw data. The central aggregator iteratively refines a synthetic dataset by integrating client-side updates while ensuring data confidentiality. To make our approach resilient to inference attacks perpetrated by the server that could exploit gradient updates to reconstruct private data, we create an optimized Local Differential Privacy approach, called LDPO-RLD (Label Differential Privacy Obfuscation via Randomized Linear Dispersion). Furthermore, we assess the framework's resilience against malicious clients executing backdoor attacks and demonstrate robustness under the assumption of a sufficient number of participating clients. Our experimental results demonstrate the effectiveness of SFDD and that the proposed defense concretely mitigates the identified vulnerabilities, with minimal impact on the performance of the distilled dataset. By addressing the interplay between privacy and federation in dataset distillation, this work advances the field of privacy-preserving Machine Learning, making our SFDD framework a viable solution for sensitive data-sharing applications.

Augmented Knowledge Graph Querying leveraging LLMs

Feb 03, 2025

Abstract:Adopting Knowledge Graphs (KGs) as a structured, semantic-oriented, data representation model has significantly improved data integration, reasoning, and querying capabilities across different domains. This is especially true in modern scenarios such as Industry 5.0, in which the integration of data produced by humans, smart devices, and production processes plays a crucial role. However, the management, retrieval, and visualization of data from a KG using formal query languages can be difficult for non-expert users due to their technical complexity, thus limiting their usage inside industrial environments. For this reason, we introduce SparqLLM, a framework that utilizes a Retrieval-Augmented Generation (RAG) solution, to enhance the querying of Knowledge Graphs (KGs). SparqLLM executes the Extract, Transform, and Load (ETL) pipeline to construct KGs from raw data. It also features a natural language interface powered by Large Language Models (LLMs) to enable automatic SPARQL query generation. By integrating template-based methods as retrieved-context for the LLM, SparqLLM enhances query reliability and reduces semantic errors, ensuring more accurate and efficient KG interactions. Moreover, to improve usability, the system incorporates a dynamic visualization dashboard that adapts to the structure of the retrieved data, presenting the query results in an intuitive format. Rigorous experimental evaluations demonstrate that SparqLLM achieves high query accuracy, improved robustness, and user-friendly interaction with KGs, establishing it as a scalable solution to access semantic data.

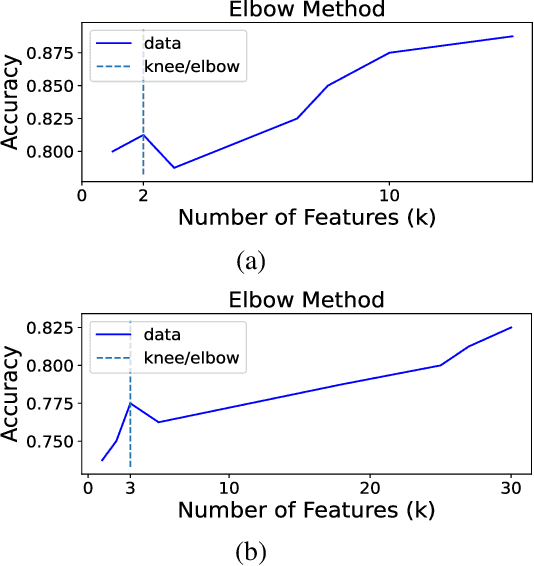

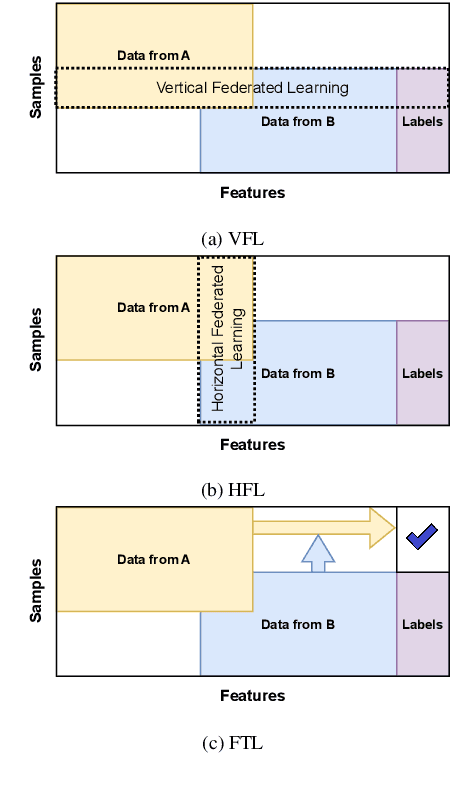

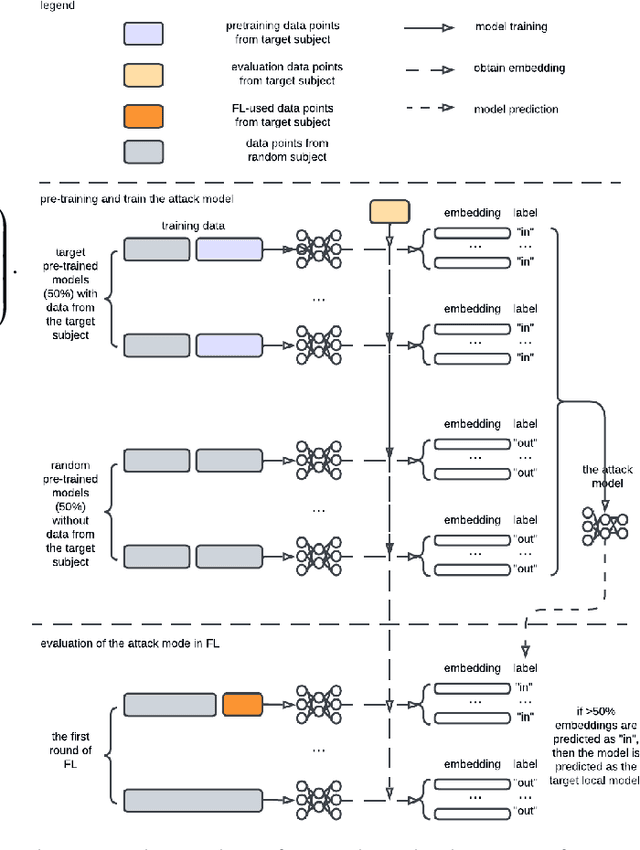

Subject Data Auditing via Source Inference Attack in Cross-Silo Federated Learning

Sep 28, 2024

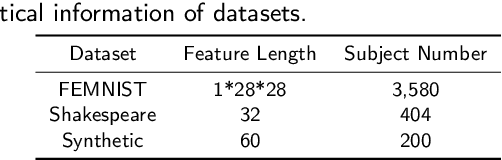

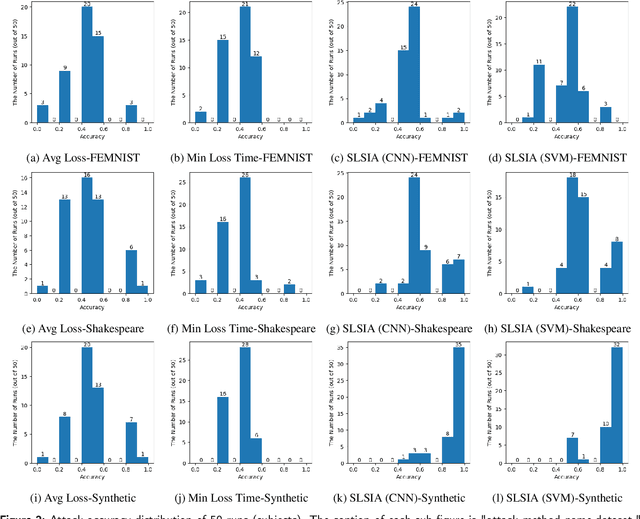

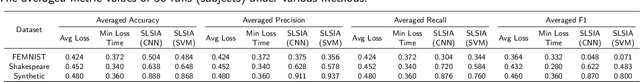

Abstract:Source Inference Attack (SIA) in Federated Learning (FL) aims to identify which client used a target data point for local model training. It allows the central server to audit clients' data usage. In cross-silo FL, a client (silo) collects data from multiple subjects (e.g., individuals, writers, or devices), posing a risk of subject information leakage. Subject Membership Inference Attack (SMIA) targets this scenario and attempts to infer whether any client utilizes data points from a target subject in cross-silo FL. However, existing results on SMIA are limited and based on strong assumptions on the attack scenario. Therefore, we propose a Subject-Level Source Inference Attack (SLSIA) by removing critical constraints that only one client can use a target data point in SIA and imprecise detection of clients utilizing target subject data in SMIA. The attacker, positioned on the server side, controls a target data source and aims to detect all clients using data points from the target subject. Our strategy leverages a binary attack classifier to predict whether the embeddings returned by a local model on test data from the target subject include unique patterns that indicate a client trains the model with data from that subject. To achieve this, the attacker locally pre-trains models using data derived from the target subject and then leverages them to build a training set for the binary attack classifier. Our SLSIA significantly outperforms previous methods on three datasets. Specifically, SLSIA achieves a maximum average accuracy of 0.88 over 50 target subjects. Analyzing embedding distribution and input feature distance shows that datasets with sparse subjects are more susceptible to our attack. Finally, we propose to defend our SLSIA using item-level and subject-level differential privacy mechanisms.

Let's Focus: Focused Backdoor Attack against Federated Transfer Learning

Apr 30, 2024

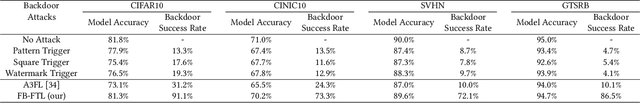

Abstract:Federated Transfer Learning (FTL) is the most general variation of Federated Learning. According to this distributed paradigm, a feature learning pre-step is commonly carried out by only one party, typically the server, on publicly shared data. After that, the Federated Learning phase takes place to train a classifier collaboratively using the learned feature extractor. Each involved client contributes by locally training only the classification layers on a private training set. The peculiarity of an FTL scenario makes it hard to understand whether poisoning attacks can be developed to craft an effective backdoor. State-of-the-art attack strategies assume the possibility of shifting the model attention toward relevant features introduced by a forged trigger injected in the input data by some untrusted clients. Of course, this is not feasible in FTL, as the learned features are fixed once the server performs the pre-training step. Consequently, in this paper, we investigate this intriguing Federated Learning scenario to identify and exploit a vulnerability obtained by combining eXplainable AI (XAI) and dataset distillation. In particular, the proposed attack can be carried out by one of the clients during the Federated Learning phase of FTL by identifying the optimal location for the trigger through XAI and encapsulating compressed information of the backdoor class. Due to its behavior, we refer to our approach as a focused backdoor approach (FB-FTL for short) and test its performance by explicitly referencing an image classification scenario. With an average 80% attack success rate, the obtained results show the effectiveness of our attack also against existing defenses for Federated Learning.
