Antoine Gautier

Saarland University

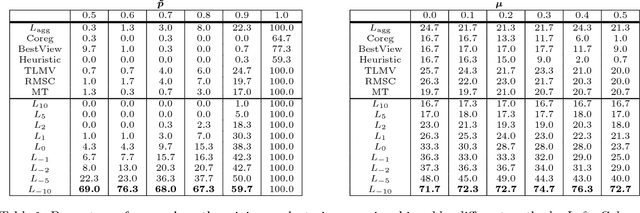

The Power Mean Laplacian for Multilayer Graph Clustering

Mar 01, 2018

Abstract:Multilayer graphs encode different kind of interactions between the same set of entities. When one wants to cluster such a multilayer graph, the natural question arises how one should merge the information different layers. We introduce in this paper a one-parameter family of matrix power means for merging the Laplacians from different layers and analyze it in expectation in the stochastic block model. We show that this family allows to recover ground truth clusters under different settings and verify this in real world data. While computing the matrix power mean can be very expensive for large graphs, we introduce a numerical scheme to efficiently compute its eigenvectors for the case of large sparse graphs.

Globally Optimal Training of Generalized Polynomial Neural Networks with Nonlinear Spectral Methods

Oct 28, 2016

Abstract:The optimization problem behind neural networks is highly non-convex. Training with stochastic gradient descent and variants requires careful parameter tuning and provides no guarantee to achieve the global optimum. In contrast we show under quite weak assumptions on the data that a particular class of feedforward neural networks can be trained globally optimal with a linear convergence rate with our nonlinear spectral method. Up to our knowledge this is the first practically feasible method which achieves such a guarantee. While the method can in principle be applied to deep networks, we restrict ourselves for simplicity in this paper to one and two hidden layer networks. Our experiments confirm that these models are rich enough to achieve good performance on a series of real-world datasets.

An Efficient Multilinear Optimization Framework for Hypergraph Matching

May 24, 2016

Abstract:Hypergraph matching has recently become a popular approach for solving correspondence problems in computer vision as it allows to integrate higher-order geometric information. Hypergraph matching can be formulated as a third-order optimization problem subject to the assignment constraints which turns out to be NP-hard. In recent work, we have proposed an algorithm for hypergraph matching which first lifts the third-order problem to a fourth-order problem and then solves the fourth-order problem via optimization of the corresponding multilinear form. This leads to a tensor block coordinate ascent scheme which has the guarantee of providing monotonic ascent in the original matching score function and leads to state-of-the-art performance both in terms of achieved matching score and accuracy. In this paper we show that the lifting step to a fourth-order problem can be avoided yielding a third-order scheme with the same guarantees and performance but being two times faster. Moreover, we introduce a homotopy type method which further improves the performance.

A Flexible Tensor Block Coordinate Ascent Scheme for Hypergraph Matching

Apr 30, 2015

Abstract:The estimation of correspondences between two images resp. point sets is a core problem in computer vision. One way to formulate the problem is graph matching leading to the quadratic assignment problem which is NP-hard. Several so called second order methods have been proposed to solve this problem. In recent years hypergraph matching leading to a third order problem became popular as it allows for better integration of geometric information. For most of these third order algorithms no theoretical guarantees are known. In this paper we propose a general framework for tensor block coordinate ascent methods for hypergraph matching. We propose two algorithms which both come along with the guarantee of monotonic ascent in the matching score on the set of discrete assignment matrices. In the experiments we show that our new algorithms outperform previous work both in terms of achieving better matching scores and matching accuracy. This holds in particular for very challenging settings where one has a high number of outliers and other forms of noise.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge