Annika Meyer

YOLinO++: Single-Shot Estimation of Generic Polylines for Mapless Automated Driving

Feb 01, 2024

Abstract:In automated driving, highly accurate maps are commonly used to support and complement perception. These maps are costly to create and quickly become outdated as the traffic world is permanently changing. In order to support or replace the map of an automated system with detections from sensor data, a perception module must be able to detect the map features. We propose a neural network that follows the one shot philosophy of YOLO but is designed for detection of 1D structures in images, such as lane boundaries. We extend previous ideas by a midpoint based line representation and anchor definitions. This representation can be used to describe lane borders, markings, but also implicit features such as centerlines of lanes. The broad applicability of the approach is shown with the detection performance on lane centerlines, lane borders as well as the markings both on highways and in urban areas. Versatile lane boundaries are detected and can be inherently classified as dashed or solid lines, curb, road boundaries, or implicit delimitation.
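
The abstract does not spell out the exact parameterization of the midpoint-based line representation. As a minimal sketch, assuming a segment is stored as its midpoint, a unit direction and a length (the function names `to_midpoint` and `to_endpoints` are hypothetical and not taken from the paper), the conversion to and from an endpoint representation could look like this:

```python
import math


def to_midpoint(start, end):
    """Convert an endpoint pair into (midpoint, unit direction, length).

    Assumed parameterization for illustration only.
    """
    (x0, y0), (x1, y1) = start, end
    dx, dy = x1 - x0, y1 - y0
    length = math.hypot(dx, dy)
    if length == 0.0:
        raise ValueError("degenerate segment: start and end coincide")
    midpoint = ((x0 + x1) / 2.0, (y0 + y1) / 2.0)
    direction = (dx / length, dy / length)
    return midpoint, direction, length


def to_endpoints(midpoint, direction, length):
    """Recover the two endpoints from the midpoint representation."""
    (mx, my), (ux, uy) = midpoint, direction
    half = length / 2.0
    start = (mx - ux * half, my - uy * half)
    end = (mx + ux * half, my + uy * half)
    return start, end


midpoint, direction, length = to_midpoint((0.0, 0.0), (4.0, 3.0))
start, end = to_endpoints(midpoint, direction, length)
```

One plausible motivation for such a representation is that the midpoint ties a segment to a single location, which makes assigning it to a grid cell or anchor unambiguous; the paper itself should be consulted for the actual design.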

YOLinO: Generic Single Shot Polyline Detection in Real Time

Mar 26, 2021

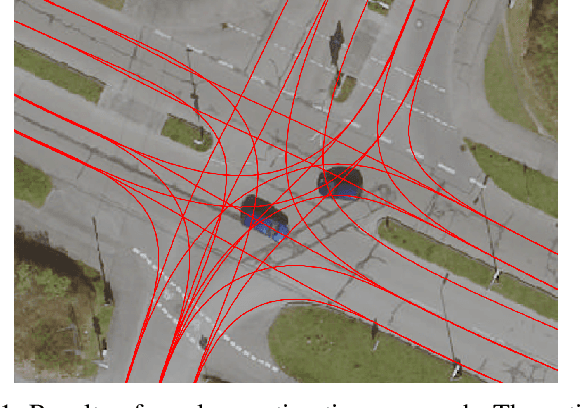

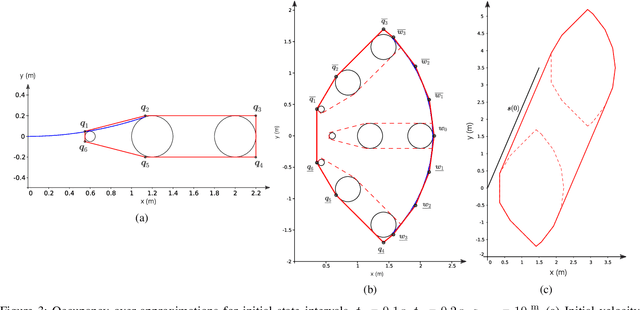

Abstract:The detection of polylines in images is usually either bound to branchless polylines or formulated in a recurrent way, prohibiting their use in real-time systems. We propose an approach that transfers the idea of single shot object detection. Reformulating the problem of polyline detection as a bottom-up composition of small line segments allows detecting bounded, dashed and continuous polylines with a single head. This has several major advantages over previous methods. At 187 fps, the method is more than suited for real-time applications and places virtually no restriction on the shapes of the detected polylines. By predicting multiple line segments for each spatial cell, even branching or crossing polylines can be detected. We evaluate our approach on three different applications for road marking, lane border and center line detection. Hereby, we demonstrate the ability to generalize to different domains as well as both implicit and explicit polyline detection tasks.
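
To illustrate the idea of predicting several line segments per spatial cell, the following sketch decodes hypothetical network output into image coordinates. The data layout (cell-relative endpoints in [0, 1] plus a confidence), the function name `decode_cells` and the threshold are assumptions made for this example, not the format used in the paper:

```python
def decode_cells(predictions, cell_size, min_conf=0.5):
    """Turn per-cell segment predictions into image-space segments.

    predictions: dict mapping (row, col) to a list of tuples
                 (x0, y0, x1, y1, conf) with cell-relative coordinates.
    cell_size:   edge length of one grid cell in pixels.
    """
    segments = []
    for (row, col), cell_predictions in predictions.items():
        origin_x, origin_y = col * cell_size, row * cell_size
        for x0, y0, x1, y1, conf in cell_predictions:
            if conf < min_conf:
                continue  # drop low-confidence predictors
            start = (origin_x + x0 * cell_size, origin_y + y0 * cell_size)
            end = (origin_x + x1 * cell_size, origin_y + y1 * cell_size)
            segments.append((start, end))
    return segments


# One cell holding two crossing segments and one low-confidence prediction.
predictions = {
    (0, 1): [
        (0.0, 0.5, 1.0, 0.5, 0.9),  # horizontal segment
        (0.5, 0.0, 0.5, 1.0, 0.8),  # vertical segment crossing it
        (0.0, 0.0, 1.0, 1.0, 0.1),  # discarded by the threshold
    ],
}
segments = decode_cells(predictions, cell_size=32)
```

Because each cell can contribute more than one segment, crossings and branches within a single cell remain representable; linking the decoded segments into polylines would be a separate step.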

Fast Lane-Level Intersection Estimation using Markov Chain Monte Carlo Sampling and B-Spline Refinement

Jul 14, 2020

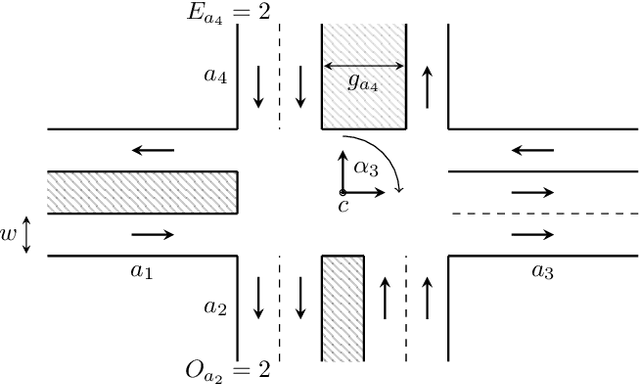

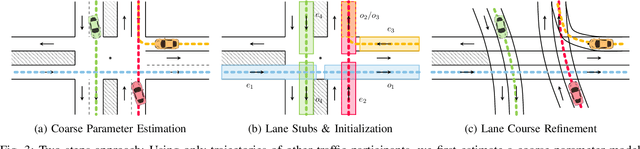

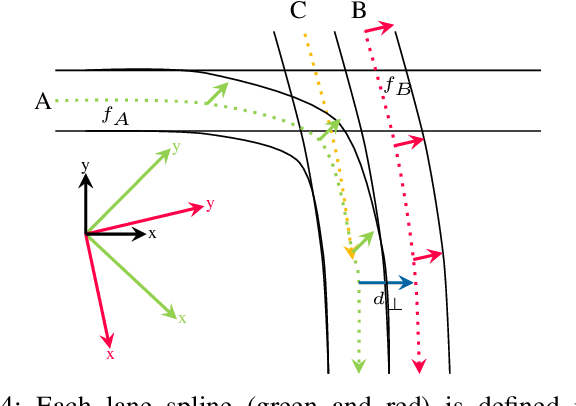

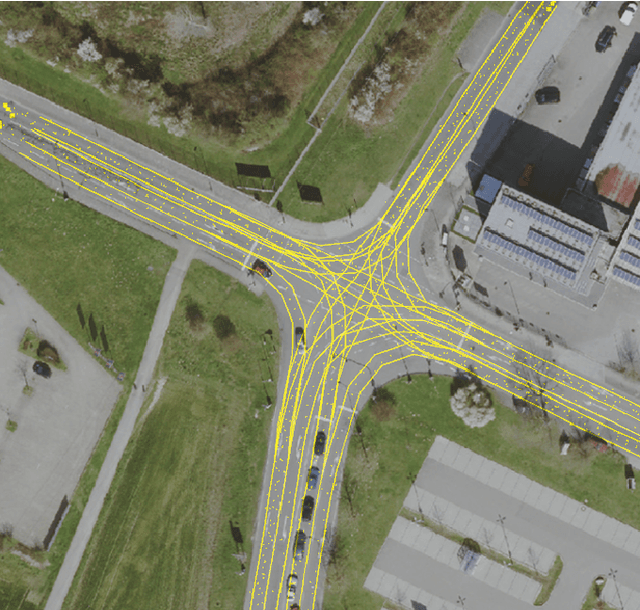

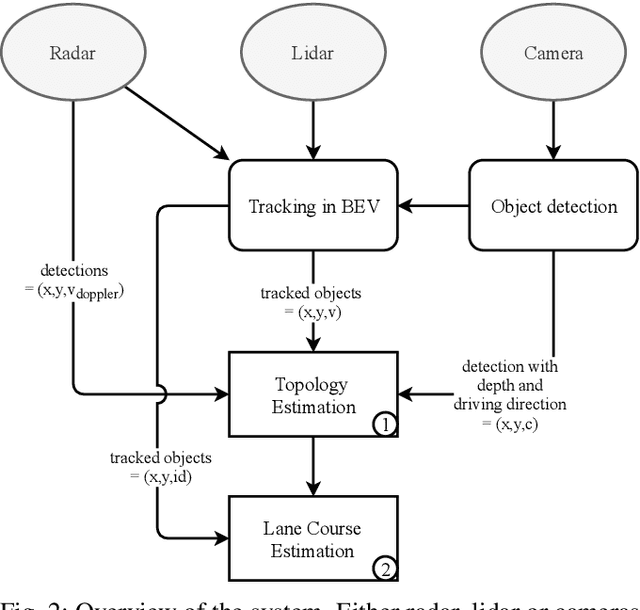

Abstract:Estimating the current scene and understanding the potential maneuvers are essential capabilities of automated vehicles. Most approaches rely heavily on the correctness of maps, but neglect the possibility of outdated information. We present an approach that is able to estimate lanes without relying on any map prior. The estimation is based solely on the trajectories of other traffic participants and is thereby able to incorporate complex environments. In particular, we are able to estimate the scene in the presence of heavy traffic and occlusions. The algorithm first estimates a coarse lane-level intersection model by Markov chain Monte Carlo sampling and refines it later by aligning the lane course with the measurements using a non-linear least squares formulation. We model the lanes as 1D cubic B-splines and achieve errors of less than 10 cm in real time.

Anytime Lane-Level Intersection Estimation Based on Trajectories

Jun 06, 2019

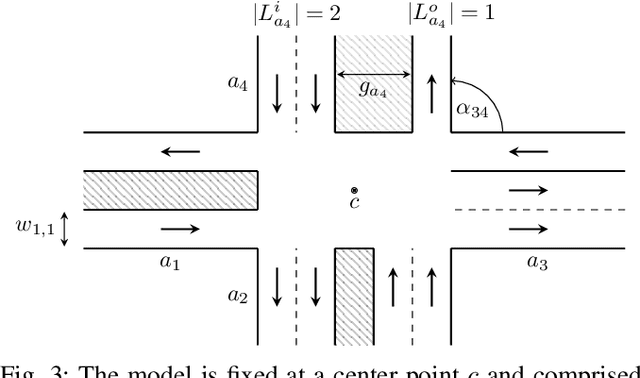

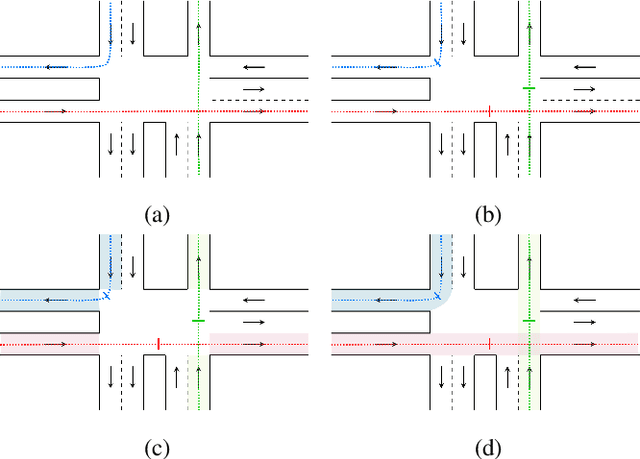

Abstract:Estimating and understanding the current scene is an indispensable capability of automated vehicles. Usually, maps are used as a prior for interpreting sensor measurements in order to drive safely. Only a few approaches take into account that maps might be outdated and thereby lead to wrong assumptions about the environment. This work estimates a lane-level intersection topology without any map prior, based on the trajectories of other traffic participants. We are able to deliver both a coarse lane-level topology and the lane course inside and outside of the intersection using Markov chain Monte Carlo sampling. The model is limited neither to a fixed number of lanes or arms nor to a particular intersection topology. We present our results on an evaluation set of about 1000 intersections and achieve 99.9% accuracy on the topology estimation, which takes only 73 ms when utilizing tracked object detections. Estimating the precise lane course on the intersection yields results that deviate on average only 20 cm from the ground truth.

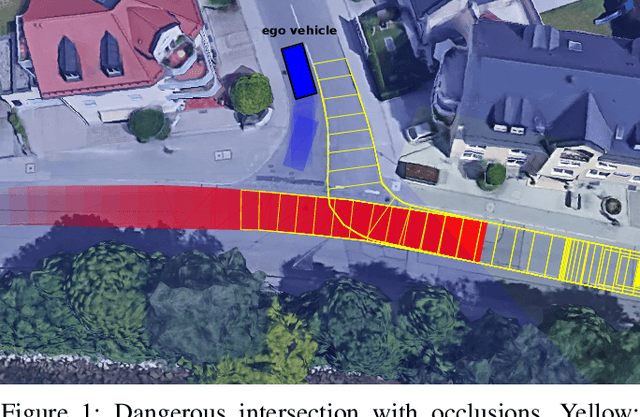

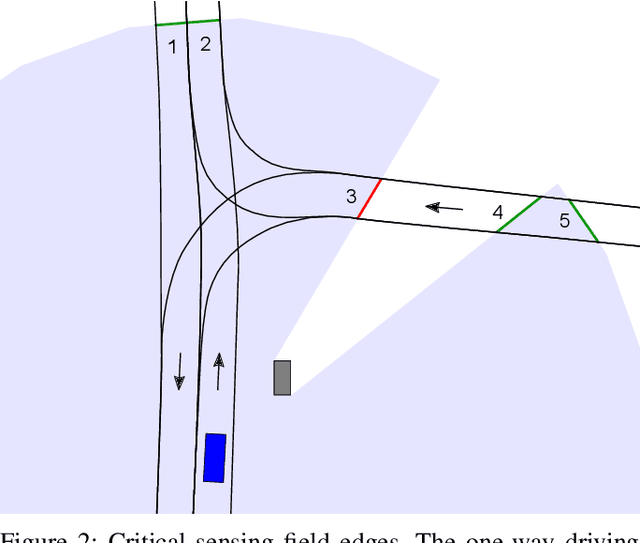

Tackling Occlusions & Limited Sensor Range with Set-based Safety Verification

Oct 01, 2018

Abstract:Provable safety is one of the most critical challenges in automated driving. The behavior of numerous traffic participants in a scene cannot be predicted reliably due to complex interdependencies and the indiscriminate behavior of humans. Additionally, we face high uncertainties and only incomplete environment knowledge. Recent approaches minimize risk with probabilistic and machine learning methods - even under occlusions. These generate comfortable behavior with good traffic flow, but cannot guarantee safety of their maneuvers. Therefore, we contribute a safety verification method for trajectories under occlusions. The field-of-view of the ego vehicle and a map are used to identify critical sensing field edges, each representing a potentially hidden obstacle. The state of occluded obstacles is unknown, but can be over-approximated by intervals over all possible states. Then set-based methods are extended to provide occupancy predictions for obstacles with state intervals. The proposed method can verify the safety of given trajectories (e.g. if they ensure collision-free fail-safe maneuver options) w.r.t. arbitrary safe-state formulations. The potential for provably safe trajectory planning is shown in three evaluative scenarios.
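
The over-approximation of an occluded obstacle by state intervals can be illustrated with a deliberately simplified one-dimensional sketch. It assumes motion along a single lane coordinate, a constant but unknown velocity within given bounds, and prediction times t >= 0; the names `Interval`, `predict_occupancy` and `is_trajectory_safe` are hypothetical and the paper's set-based method is more general:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Interval:
    lo: float
    hi: float

    def overlaps(self, other):
        """True if the two closed intervals share at least one point."""
        return self.lo <= other.hi and other.lo <= self.hi


def predict_occupancy(position, velocity, t):
    """Over-approximate the positions reachable after t >= 0 seconds.

    Every combination of start position and constant velocity inside
    the given intervals ends up inside the returned interval.
    """
    return Interval(position.lo + velocity.lo * t,
                    position.hi + velocity.hi * t)


def is_trajectory_safe(ego_trajectory, position, velocity):
    """Check a list of (t, ego occupancy interval) pairs for overlap."""
    return all(
        not ego.overlaps(predict_occupancy(position, velocity, t))
        for t, ego in ego_trajectory
    )


# Hidden obstacle somewhere in [30, 50] m, possibly approaching at up to 15 m/s.
hidden_position = Interval(30.0, 50.0)
hidden_velocity = Interval(-15.0, 0.0)

fast_plan = [(0.0, Interval(0.0, 5.0)), (1.0, Interval(10.0, 15.0))]
slow_plan = [(0.0, Interval(0.0, 5.0)), (1.0, Interval(5.0, 10.0))]

fast_is_safe = is_trajectory_safe(fast_plan, hidden_position, hidden_velocity)
slow_is_safe = is_trajectory_safe(slow_plan, hidden_position, hidden_velocity)
```

In this toy setting the faster plan touches the predicted occupancy after one second and is rejected, while the slower plan stays clear of every state the hidden obstacle could reach.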
