Annalisa Milella

In-field high throughput grapevine phenotyping with a consumer-grade depth camera

Apr 14, 2021

Abstract: Plant phenotyping, that is, the quantitative assessment of plant traits including growth, morphology, physiology, and yield, is a critical step towards efficient and effective crop management. Currently, plant phenotyping is a labour-intensive and time-consuming process, in which human operators make measurements in the field based on visual estimates or using hand-held devices. In this work, methods for automated grapevine phenotyping are developed, aimed at canopy volume estimation and at bunch detection and counting. It is demonstrated that both measurements can be effectively performed in the field using a consumer-grade depth camera mounted onboard an agricultural vehicle.

Terrain assessment for precision agriculture using vehicle dynamic modelling

Apr 13, 2021

Abstract: Advances in precision agriculture greatly rely on innovative control and sensing technologies that allow service units to increase their level of driving automation while ensuring high safety standards. This paper deals with automatic terrain estimation and classification performed by an agricultural vehicle during normal operations. Vehicle mobility and safety, and the successful implementation of important agricultural tasks including seeding, ploughing, fertilising and controlled traffic, depend on, or can be improved by, a correct identification of the terrain that is traversed. The novelty of this research lies in the fact that terrain estimation is performed using not only traditional appearance-based features, that is, colour and geometric properties, but also contact-based features, that is, measurements of the physics-based dynamic effects that govern the vehicle-terrain interaction and that greatly affect the vehicle's mobility. Experimental results obtained from an all-terrain vehicle operating on different surfaces are presented to validate the system in the field. It was shown that a terrain classifier trained with contact features was able to achieve a correct prediction rate of 85.1%, which is comparable to or better than that obtained with approaches using traditional feature sets. To further improve the classification performance, all feature sets were merged in an augmented feature space, reaching, for these tests, 89.1% of correct predictions.
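As a rough illustration of what merging feature sets into an augmented feature space can look like, the following sketch standardises each feature set and concatenates them column-wise before classification. It is not the paper's method: the abstract does not name the classifier or the individual features, so the nearest-centroid classifier, the function names (`zscore`, `augment`, `fit_centroids`, `predict`), and the synthetic data in the demo are all assumptions made for illustration.

```python
import numpy as np


def zscore(X):
    """Standardise each column to zero mean and unit variance."""
    X = np.asarray(X, dtype=float)
    std = X.std(axis=0)
    std[std == 0] = 1.0  # leave constant columns unscaled
    return (X - X.mean(axis=0)) / std


def augment(*feature_sets):
    """Concatenate standardised feature sets (e.g. colour, geometric,
    contact) column-wise into one augmented feature matrix."""
    return np.hstack([zscore(F) for F in feature_sets])


def fit_centroids(X, y):
    """Hypothetical stand-in classifier: one mean vector per terrain class."""
    labels = np.unique(y)
    centroids = np.vstack([X[y == c].mean(axis=0) for c in labels])
    return labels, centroids


def predict(X, labels, centroids):
    """Assign each sample to the class with the nearest centroid."""
    dist = np.linalg.norm(X[:, None, :] - centroids[None, :, :], axis=2)
    return labels[dist.argmin(axis=1)]


if __name__ == "__main__":
    # Synthetic placeholder data, NOT measurements from the paper.
    rng = np.random.default_rng(0)
    y = np.repeat([0, 1], 50)
    appearance = rng.normal(size=(100, 3)) + 3.0 * y[:, None]
    contact = rng.normal(size=(100, 2)) + 3.0 * y[:, None]
    X = augment(appearance, contact)
    labels, centroids = fit_centroids(X, y)
    print("training accuracy:", (predict(X, labels, centroids) == y).mean())
```

Standardising before concatenation keeps one feature set from dominating the distance computation simply because of its units.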

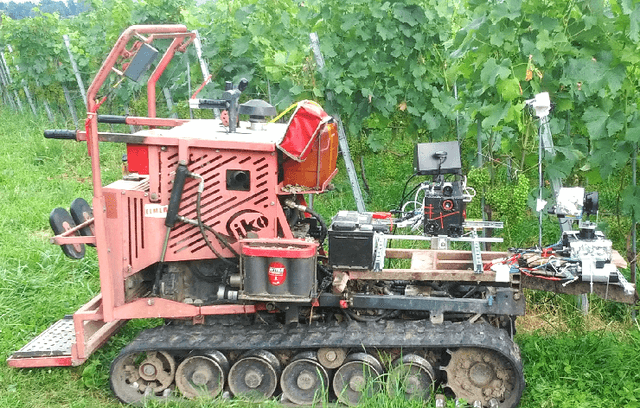

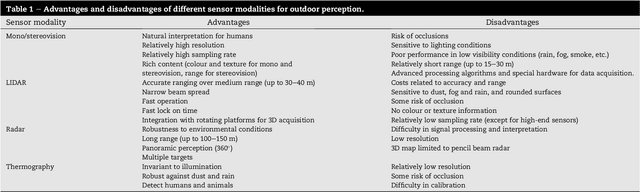

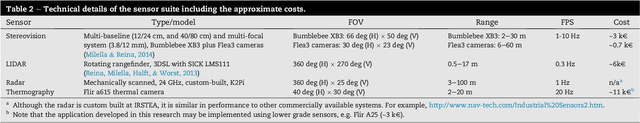

Ambient awareness for agricultural robotic vehicles

Apr 12, 2021

Abstract: In the last few years, robotic technology has been increasingly employed in agriculture to develop intelligent vehicles that can improve productivity and competitiveness. Accurate and robust environmental perception is a critical requirement to address unsolved issues including safe interaction with field workers and animals, obstacle detection in controlled traffic applications, crop row guidance, surveying for variable rate applications, and, in general, situation awareness towards increased process automation. Given the variety of conditions that may be encountered in the field, no single sensor exists that can guarantee reliable results in every scenario. The objective of the Ambient Awareness for Autonomous Agricultural Vehicles (QUAD-AV) project is the development of a multi-sensory perception system to increase the ambient awareness of an agricultural vehicle operating in crop fields. Different onboard sensor technologies, namely stereovision, LIDAR, radar, and thermography, are considered. Novel methods for their combination are proposed to automatically detect obstacles and discern traversable from non-traversable areas. Experimental results obtained in agricultural contexts are presented, showing the effectiveness of the proposed methods.

A multi-sensor robotic platform for ground mapping and estimation beyond the visible spectrum

Apr 12, 2021

Abstract: Accurate soil mapping is critical for a highly automated agricultural vehicle to successfully accomplish important tasks including seeding, ploughing, fertilising and controlled traffic with limited human supervision, while ensuring high safety standards. In this research, a multi-sensor ground mapping and characterisation approach is proposed, whereby data coming from heterogeneous but complementary sensors, mounted on-board an unmanned rover, are combined to generate a multi-layer map of the environment and specifically of the supporting ground. The sensor suite comprises both exteroceptive and proprioceptive devices. Exteroceptive sensors include a stereo camera, a visible and near-infrared camera and a thermal imager. Proprioceptive data consist of the vertical acceleration of the vehicle sprung mass as acquired by an inertial measurement unit. The paper details the steps for the integration of the different sensor data into a unique multi-layer map and discusses a set of exteroceptive and proprioceptive features for soil characterisation and change detection. Experimental results obtained with an all-terrain vehicle operating on different ground surfaces are presented. It is shown that the proposed technologies could potentially be used to develop all-terrain self-driving systems in agriculture. In addition, multi-modal soil maps could be useful to feed farm management systems that would present to the user various soil layers incorporating colour, geometric, spectral and mechanical properties.

Mind the ground: A Power Spectral Density-based estimator for all-terrain rovers

Oct 14, 2019

Abstract: There is a growing interest in new sensing technologies and processing algorithms to increase the level of driving automation towards self-driving vehicles. The challenge for autonomy is especially difficult for the negotiation of uncharted scenarios, including natural terrain. This paper proposes a method for terrain unevenness estimation that is based on the power spectral density (PSD) of the surface profile as measured by exteroceptive sensing, that is, by using a common onboard range sensor such as a stereoscopic camera. Using these components, the proposed estimator can evaluate terrain on-line during normal operations. PSD-based analysis provides insight not only into the magnitude of irregularities, but also into how these irregularities are distributed at various wavelengths. A feature vector can be defined to classify roughness; it proves to be a powerful statistical tool for characterising a given terrain fingerprint, and it shows limited sensitivity to vehicle tilt rotations. First, the theoretical foundations behind the PSD-based estimator are presented. Then, the system is validated in the field using an all-terrain rover that operates on various natural surfaces. The results show its potential for automatic ground harshness estimation and, in general, for the development of driving assistance systems.
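To make the idea of a PSD-based roughness descriptor more concrete, the sketch below computes a one-sided periodogram of a height profile sampled at uniform spacing and integrates it over spatial-frequency bands to obtain a small feature vector. This is only a minimal illustration under stated assumptions, not the estimator described in the paper: the Hann window, the band edges, and the function names `profile_psd` and `band_powers` are hypothetical choices, and the abstract does not specify how its feature vector is built.

```python
import numpy as np


def profile_psd(z, dx):
    """One-sided periodogram of a terrain height profile.

    z  : heights in metres, sampled at uniform spacing
    dx : sample spacing in metres
    Returns spatial frequencies (cycles/m) and PSD values (m^3).
    """
    z = np.asarray(z, dtype=float)
    z = z - z.mean()                      # remove the mean height
    n = z.size
    window = np.hanning(n)                # assumed window choice
    spectrum = np.fft.rfft(z * window)
    freqs = np.fft.rfftfreq(n, d=dx)
    # Scaled so that integrating over frequency approximates the
    # window-weighted mean square of the profile.
    psd = np.abs(spectrum) ** 2 * dx / np.sum(window ** 2)
    # Fold negative frequencies in; DC (and Nyquist for even n) stay single.
    if n % 2 == 0:
        psd[1:-1] *= 2.0
    else:
        psd[1:] *= 2.0
    return freqs, psd


def band_powers(freqs, psd, edges):
    """Integrate the PSD over consecutive bands [edges[i], edges[i+1])."""
    df = freqs[1] - freqs[0]
    return np.array([
        psd[(freqs >= lo) & (freqs < hi)].sum() * df
        for lo, hi in zip(edges[:-1], edges[1:])
    ])


if __name__ == "__main__":
    # Synthetic profile: a 2 cm undulation with a 2 m wavelength.
    dx = 0.05
    x = np.arange(400) * dx
    z = 0.02 * np.sin(2 * np.pi * 0.5 * x)
    freqs, psd = profile_psd(z, dx)
    print("dominant spatial frequency (cycles/m):", freqs[np.argmax(psd)])
    print("band powers:", band_powers(freqs, psd, [0.1, 1.0, 5.0]))
```

Band powers separate long-wavelength undulations from short-wavelength roughness, which reflects the abstract's point that the PSD describes how irregularities are distributed across wavelengths and not only their magnitude.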

* 26 pages
