Anna Zou

Learning Empirical Bregman Divergence for Uncertain Distance Representation

Apr 18, 2023

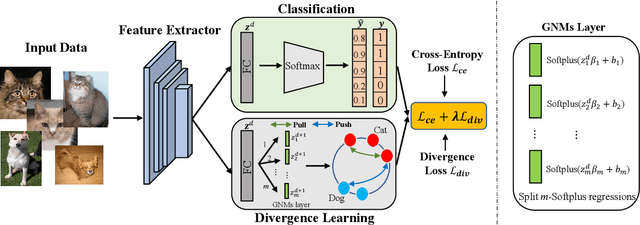

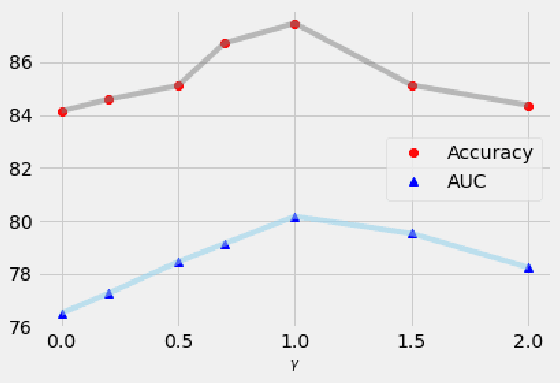

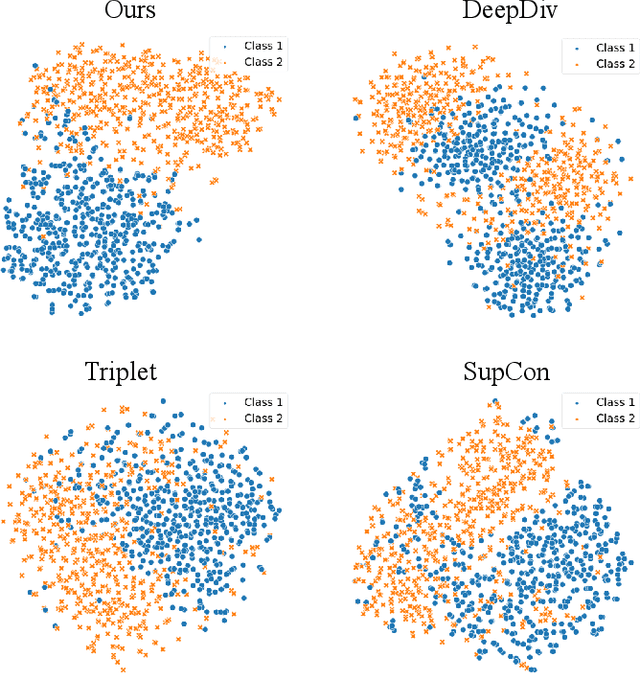

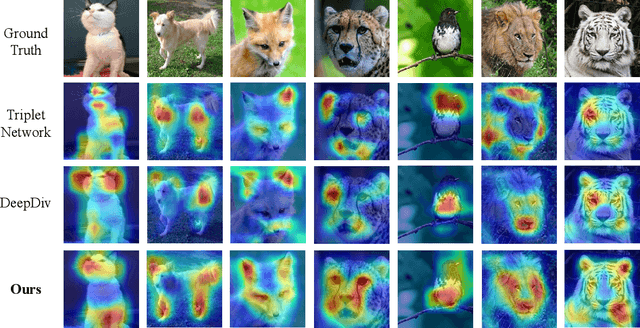

Abstract: Deep metric learning techniques have been used for visual representation in various supervised and unsupervised learning tasks by learning embeddings of samples with deep networks. However, classic approaches, which employ a fixed distance metric as the similarity function between two embeddings, may perform suboptimally when capturing complex data distributions. The Bregman divergence generalizes a variety of distance metrics and arises throughout many areas of deep metric learning. In this paper, we first show how deep metric learning losses can arise from the Bregman divergence. We then introduce a novel method for learning an empirical Bregman divergence directly from data by parameterizing the convex function underlying the Bregman divergence in a deep learning setting. We further show experimentally that our approach performs effectively on five popular public datasets compared with other state-of-the-art (SOTA) deep metric learning methods, particularly on pattern recognition problems.
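
For reference, the Bregman divergence generated by a strictly convex, differentiable function φ is D_φ(x, y) = φ(x) − φ(y) − ⟨∇φ(y), x − y⟩, and choosing φ(x) = ‖x‖² gives the squared Euclidean distance ‖x − y‖² used by many classic metric-learning losses. The following is a minimal pure-Python sketch under stated assumptions, not the authors' implementation: `ConvexPotential` (one hidden layer of softplus units with nonnegative output weights, in the spirit of input-convex neural networks) and `triplet_loss` are hypothetical names, the parameters are fixed by hand rather than trained, and toy 2-D vectors stand in for network embeddings.

```python
import math
from typing import Callable, List, Sequence

Vector = Sequence[float]


def dot(u: Vector, v: Vector) -> float:
    """Inner product <u, v>."""
    return sum(a * b for a, b in zip(u, v))


def bregman_divergence(
    phi: Callable[[Vector], float],
    grad_phi: Callable[[Vector], List[float]],
    x: Vector,
    y: Vector,
) -> float:
    """D_phi(x, y) = phi(x) - phi(y) - <grad phi(y), x - y>."""
    diff = [a - b for a, b in zip(x, y)]
    return phi(x) - phi(y) - dot(grad_phi(y), diff)


# phi(x) = ||x||^2 recovers the squared Euclidean distance ||x - y||^2.
def sq_norm(x: Vector) -> float:
    return dot(x, x)


def sq_norm_grad(x: Vector) -> List[float]:
    return [2.0 * a for a in x]


def softplus(z: float) -> float:
    """Numerically stable log(1 + exp(z))."""
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))


def sigmoid(z: float) -> float:
    """Numerically stable logistic function (the derivative of softplus)."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


class ConvexPotential:
    """Hypothetical learnable convex function:

        phi(x) = sum_k a_k * softplus(w_k . x + b_k) + 0.5 * eps * ||x||^2

    Each a_k = softplus(raw_k) >= 0, so phi is a nonnegative sum of convex
    terms and is strictly convex for eps > 0.
    """

    def __init__(self, weights: List[List[float]], biases: List[float],
                 raw_scales: List[float], eps: float = 1e-3) -> None:
        self.weights = weights
        self.biases = biases
        self.scales = [softplus(r) for r in raw_scales]  # a_k >= 0
        self.eps = eps

    def __call__(self, x: Vector) -> float:
        hidden = sum(a * softplus(dot(w, x) + b)
                     for w, b, a in zip(self.weights, self.biases, self.scales))
        return hidden + 0.5 * self.eps * dot(x, x)

    def grad(self, x: Vector) -> List[float]:
        # d/dx [a * softplus(w.x + b)] = a * sigmoid(w.x + b) * w
        g = [self.eps * xi for xi in x]
        for w, b, a in zip(self.weights, self.biases, self.scales):
            s = a * sigmoid(dot(w, x) + b)
            for i, wi in enumerate(w):
                g[i] += s * wi
        return g


def triplet_loss(phi: ConvexPotential, anchor: Vector, positive: Vector,
                 negative: Vector, margin: float = 0.1) -> float:
    """Hinge triplet loss using the divergence in place of a fixed metric.

    Divergences are measured from each sample to the anchor; the argument
    order is a design choice because D_phi is generally asymmetric.
    """
    d_pos = bregman_divergence(phi, phi.grad, positive, anchor)
    d_neg = bregman_divergence(phi, phi.grad, negative, anchor)
    return max(0.0, d_pos - d_neg + margin)


if __name__ == "__main__":
    x, y = [1.0, 2.0], [3.0, -1.0]
    print(bregman_divergence(sq_norm, sq_norm_grad, x, y))  # 13.0

    phi = ConvexPotential(weights=[[1.0, -0.5], [0.3, 0.8]],
                          biases=[0.0, 0.1], raw_scales=[0.2, -0.4])
    # Unlike a metric, a general Bregman divergence need not be symmetric.
    print(bregman_divergence(phi, phi.grad, x, y),
          bregman_divergence(phi, phi.grad, y, x))
    print(triplet_loss(phi, anchor=x, positive=[1.2, 1.8], negative=y))
```

In an actual training setup, `weights`, `biases`, and `raw_scales` would be updated (for example, by gradient descent in an autodiff framework) so that same-class embeddings have small divergence. Routing the output weights through softplus keeps every a_k nonnegative after any update, so φ stays convex and D_φ stays nonnegative throughout training.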

Interpretability of Neural Network With Physiological Mechanisms

Mar 24, 2022

Abstract: Deep learning continues to serve as a powerful state-of-the-art technique that has achieved extraordinary accuracy in various regression and classification tasks across domains including images, video, signals, and natural language. The original goal of the neural network model was to improve the understanding of the complex human brain through a mathematical approach. However, recent deep learning techniques have increasingly lost the interpretability of their functional processes, being treated mostly as black-box approximators. To address this issue, such AI models need to be biologically and physiologically realistic in order to incorporate a better understanding of human-machine evolutionary intelligence. In this study, we compare neural networks and biological circuits to identify their similarities and differences from various perspectives. We further discuss insights into how neural networks learn from data by investigating human biological behaviors and understandable justifications.
