Ankan Ghosh Dastider

Topological, or Non-topological? A Deep Learning Based Prediction

Oct 29, 2023

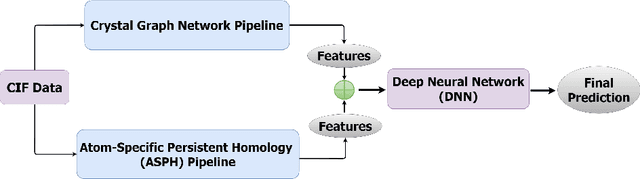

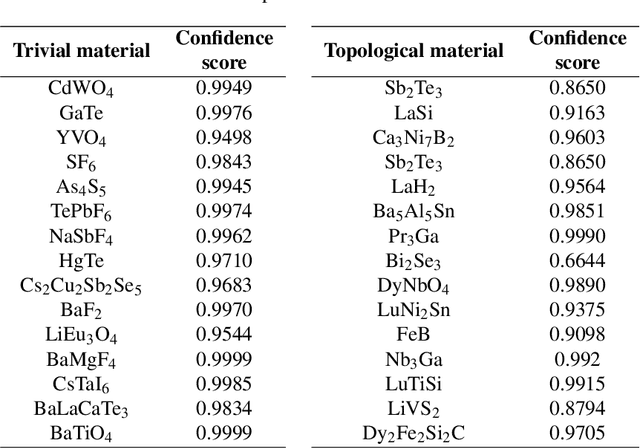

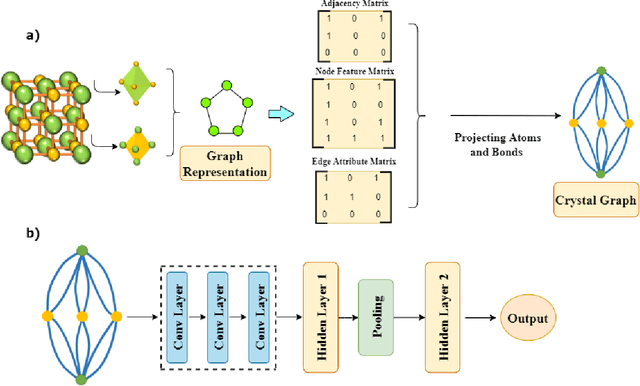

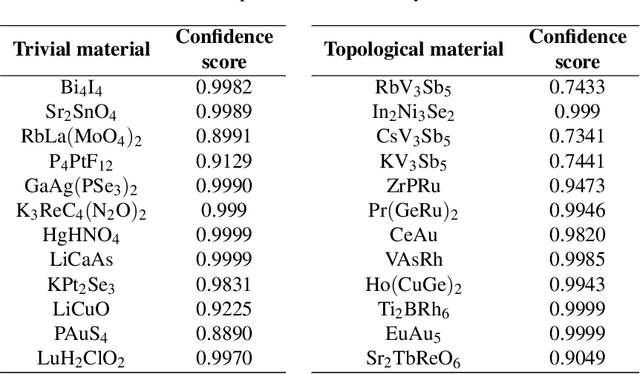

Abstract: Prediction and discovery of new materials with desired properties are at the forefront of quantum science and technology research. A major bottleneck in this field is the computational cost and time required to find new materials through ab initio calculations. In this work, an effective and robust deep learning model is proposed that combines persistent homology with a graph neural network. It achieves an accuracy of 91.4% and an F1 score of 88.5% in classifying topological vs. non-topological materials, outperforming other state-of-the-art classifier models. The graph neural network encodes the underlying relations between atoms based on their crystalline structures, and thus proves to be an effective way to represent and process non-Euclidean data such as molecules with a relatively shallow network. The persistent homology pipeline integrates atom-specific topological information into the deep learning model, increasing its robustness and improving its performance. The presented work is expected to be an efficacious tool for predicting the topological class of materials and thereby to enable high-throughput search for novel materials in this field.
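To give a feel for what persistent homology extracts from a set of atomic positions, the following is a minimal sketch of its simplest case: 0-dimensional persistence (connected components) of a Vietoris-Rips filtration, computed with a union-find structure. It is an illustration only and not the pipeline used in the paper; the function name `h0_persistence` and the toy coordinates are hypothetical, and the filtration scale is assumed here to be the pairwise distance between points.

```python
from itertools import combinations
from math import dist


def h0_persistence(points):
    """Return the death scales of connected components in a Vietoris-Rips filtration.

    Every component is born at scale 0. A component dies at the length of the
    edge that merges it into another one. One component never dies and is
    therefore not listed.
    """
    parent = list(range(len(points)))

    def find(i):
        # Union-find lookup with path halving.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    # All pairwise edges, processed from shortest to longest.
    edges = sorted(
        (dist(points[i], points[j]), i, j)
        for i, j in combinations(range(len(points)), 2)
    )

    deaths = []
    for length, i, j in edges:
        root_i, root_j = find(i), find(j)
        if root_i != root_j:
            parent[root_i] = root_j  # two components merge; one of them dies
            deaths.append(length)
    return deaths


# Toy "atoms" on a line: two close together, one far away.
toy_points = [(0.0, 0.0), (1.0, 0.0), (5.0, 0.0)]
print(h0_persistence(toy_points))
```

The resulting list of death scales is one kind of topological summary that could be turned into a fixed-length feature vector and supplied to a neural network alongside graph-based features.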

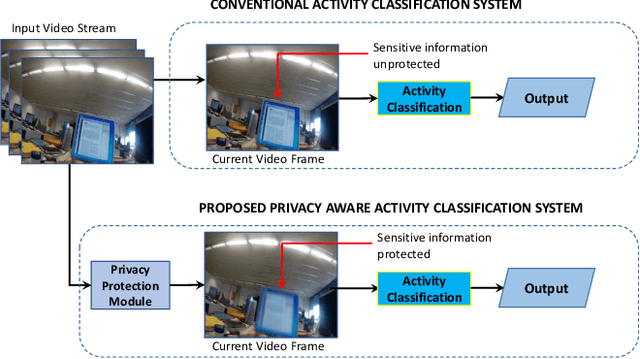

Privacy-Aware Activity Classification from First Person Office Videos

Jun 11, 2020

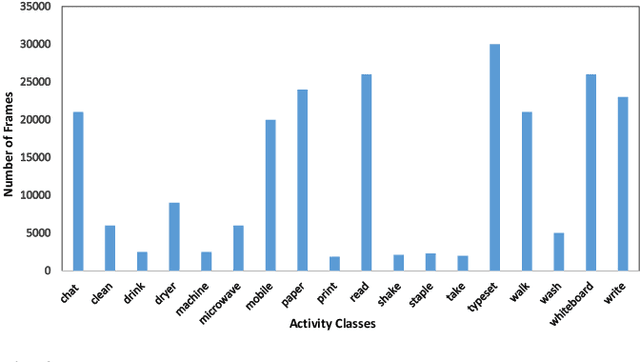

Abstract: With the advent of wearable body cameras, human activity classification from First-Person Videos (FPV) has become a topic of increasing importance for various applications, including life-logging, law enforcement, sports, the workplace, and healthcare. One of the challenging aspects of FPV is its exposure to potentially sensitive objects within the user's field of view. In this work, we developed a privacy-aware activity classification system focusing on office videos. We used a Mask-RCNN with an Inception-ResNet hybrid as a feature extractor to detect and then blur out sensitive objects (e.g., digital screens, human faces, paper) in the videos. For activity classification, we incorporated an ensemble of Recurrent Neural Networks (RNNs) with ResNet, ResNext, and DenseNet based feature extractors. The proposed system was trained and evaluated on the 18-class FPV office video dataset made available through the IEEE Video and Image Processing (VIP) Cup 2019 competition. On the original unprotected FPVs, the proposed activity classifier ensemble reached an accuracy of 85.078%, with precision, recall, and F1 scores of 0.88, 0.85, and 0.86, respectively. On privacy-protected videos, performance degraded, with accuracy, precision, recall, and F1 scores of 73.68%, 0.79, 0.75, and 0.74, respectively. The presented system won the 3rd prize in the IEEE VIP Cup 2019 competition.
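The abstract does not say how the ensemble members are combined. One common option is soft voting, in which the per-class probabilities of each model are averaged and the highest-scoring class is chosen. The sketch below assumes that scheme purely for illustration; the function name `soft_vote` and the probability values are hypothetical, and three classes are used instead of 18 for brevity.

```python
def soft_vote(prob_lists):
    """Average per-class probabilities over models and return (best_class, averages).

    prob_lists: one list of class probabilities per model, all of equal length.
    """
    n_models = len(prob_lists)
    n_classes = len(prob_lists[0])
    averages = [
        sum(probs[c] for probs in prob_lists) / n_models
        for c in range(n_classes)
    ]
    best_class = max(range(n_classes), key=averages.__getitem__)
    return best_class, averages


# Hypothetical outputs of three classifiers for a single video clip.
model_outputs = [
    [0.6, 0.3, 0.1],  # e.g. a ResNet-based model
    [0.2, 0.5, 0.3],  # e.g. a ResNext-based model
    [0.1, 0.6, 0.3],  # e.g. a DenseNet-based model
]
predicted_class, averaged = soft_vote(model_outputs)
print(predicted_class, averaged)
```

Here the first model alone would pick class 0, but the averaged scores favor class 1, which shows how an ensemble can override a single member's prediction.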
