Aniket Shirke

Tracking Grow-Finish Pigs Across Large Pens Using Multiple Cameras

Nov 22, 2021

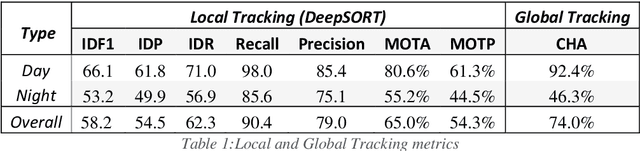

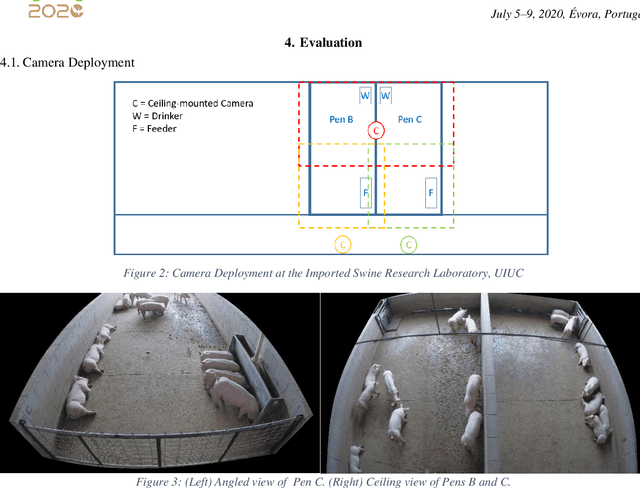

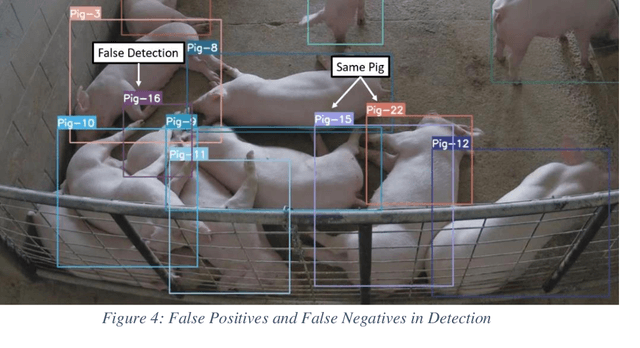

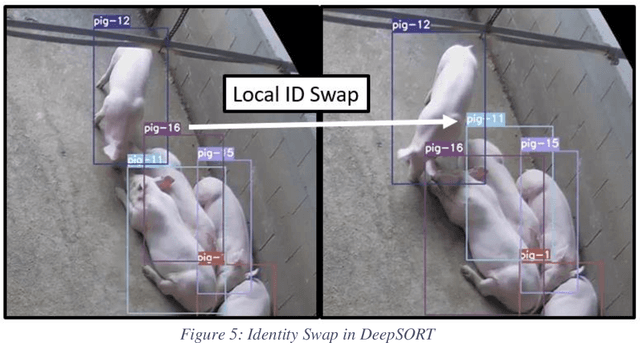

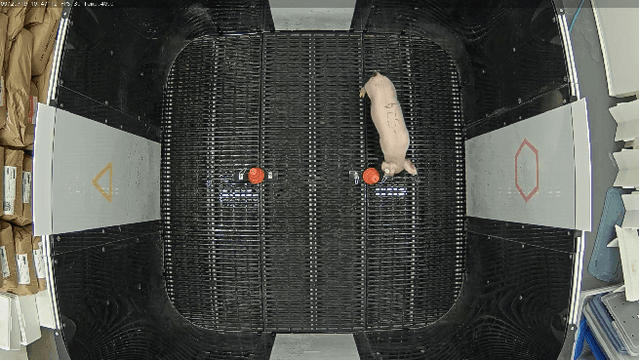

Abstract: Increasing demand for meat products combined with farm labor shortages has resulted in a need to develop new real-time solutions to monitor animals effectively. Significant progress has been made in continuously locating individual pigs using tracking-by-detection methods. However, these methods fail for oblong pens because a single fixed camera does not cover the entire floor at adequate resolution. We address this problem by using multiple cameras, placed such that the visual fields of adjacent cameras overlap and together span the entire floor. Avoiding breaks in tracking requires inter-camera handover when a pig crosses from one camera's view into that of an adjacent camera. We identify the adjacent camera and the shared pig location on the floor at the handover time using inter-view homography. Our experiments involve two grow-finish pens, housing 16-17 pigs each, and three RGB cameras. Our algorithm first detects pigs using a deep learning-based object detection model (YOLO) and creates their local tracking IDs using a multi-object tracking algorithm (DeepSORT). We then use inter-camera shared locations to match multiple views and generate a global ID for each pig that holds throughout tracking. To evaluate our approach, we provide five two-minute-long video sequences with fully annotated global identities. We track pigs in a single camera view with a Multi-Object Tracking Accuracy and Precision of 65.0% and 54.3%, respectively, and achieve a Camera Handover Accuracy of 74.0%. We open-source our code and annotated dataset at https://github.com/AIFARMS/multi-camera-pig-tracking
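The handover step described in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation: it assumes a known 3x3 homography `H_ab` mapping floor points from camera A's image into camera B's image, and it uses a simple greedy nearest-neighbour match with a hypothetical pixel threshold `max_dist`. The function names and the dictionary layout are illustrative only.

```python
import numpy as np

def project_point(H, point):
    """Map a 2D pixel coordinate through a 3x3 homography H."""
    x, y = point
    v = H @ np.array([x, y, 1.0])  # homogeneous coordinates
    return v[:2] / v[2]            # back to inhomogeneous (x, y)

def match_handover(H_ab, locs_a, locs_b, max_dist=50.0):
    """Greedily match local track IDs in camera A to those in camera B.

    locs_a, locs_b: dict mapping local_id -> (x, y) floor location in pixels.
    max_dist: hypothetical threshold (pixels in camera B) for accepting a match.
    Returns a dict {id_in_a: id_in_b}.
    """
    matches, used = {}, set()
    for id_a, pt_a in locs_a.items():
        proj = project_point(H_ab, pt_a)
        best_id, best_dist = None, max_dist
        for id_b, pt_b in locs_b.items():
            if id_b in used:
                continue
            dist = float(np.linalg.norm(proj - np.asarray(pt_b, dtype=float)))
            if dist < best_dist:
                best_id, best_dist = id_b, dist
        if best_id is not None:
            matches[id_a] = best_id
            used.add(best_id)
    return matches

# Toy example: the two views differ by a pure 100-pixel horizontal shift.
H_ab = np.array([[1.0, 0.0, 100.0],
                 [0.0, 1.0, 0.0],
                 [0.0, 0.0, 1.0]])
locs_a = {1: (10.0, 20.0), 2: (200.0, 300.0)}
locs_b = {7: (111.0, 21.0), 8: (500.0, 500.0)}
matches = match_handover(H_ab, locs_a, locs_b)
```

In this toy case only track 1 in camera A finds a partner (track 7 in camera B); track 2 projects too far from any unmatched track and is left without a match. A matched pair would then share one global ID.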

Vision-based Behavioral Recognition of Novelty Preference in Pigs

Jun 23, 2021

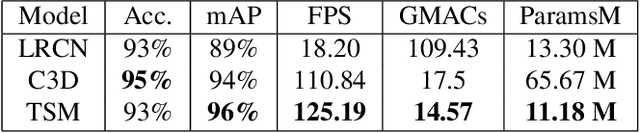

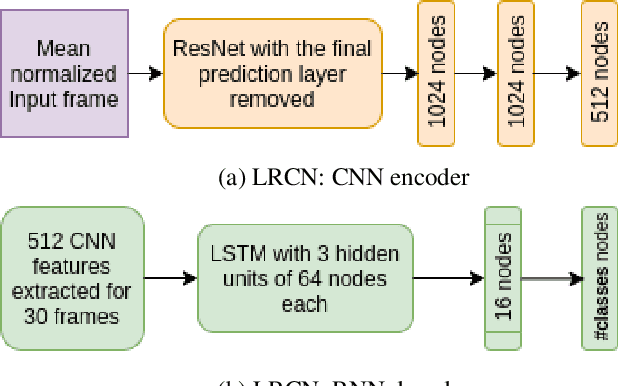

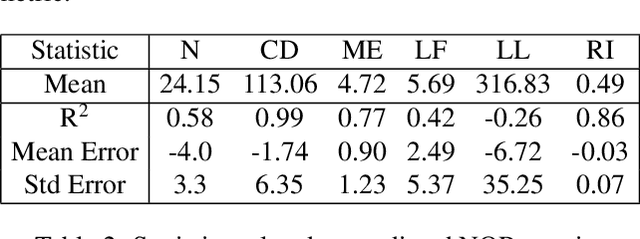

Abstract: Behavioral scoring of research data is crucial for extracting domain-specific metrics but is bottlenecked by the need to analyze enormous volumes of information using human labor. Deep learning is widely viewed as a key advancement to relieve this bottleneck. We identify one such domain, where deep learning can be leveraged to reduce the burden of manual scoring. Novelty preference paradigms have been widely used to study recognition memory in pigs, but analysis of these videos requires human intervention. We introduce a subset of such videos in the form of the 'Pig Novelty Preference Behavior' (PNPB) dataset that is fully annotated with pig actions and keypoints. To demonstrate the application of state-of-the-art action recognition models on this dataset, we compare LRCN, C3D, and TSM on the basis of various analytical metrics and discuss common pitfalls of the models. Our methods achieve an accuracy of 93% and a mean Average Precision of 96% in estimating piglet behavior. We open-source our code and annotated dataset at https://github.com/AIFARMS/NOR-behavior-recognition
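For readers unfamiliar with the two reported metrics, the following sketch shows one common way to compute accuracy and mean Average Precision for a multi-class classifier. The abstract does not say which variant of Average Precision was used; this version is the non-interpolated form (mean of the precision at each rank where a true positive occurs), and the class names and scores are made up for illustration.

```python
def accuracy(predicted, actual):
    """Fraction of clips whose predicted label equals the annotated label."""
    correct = sum(p == a for p, a in zip(predicted, actual))
    return correct / len(actual)

def average_precision(scores, labels):
    """Non-interpolated AP for one class.

    scores: model confidence for the class, one per clip.
    labels: 1 if the clip truly belongs to the class, else 0.
    """
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    hits, precisions = 0, []
    for rank, i in enumerate(order, start=1):
        if labels[i]:
            hits += 1
            precisions.append(hits / rank)  # precision at this recall point
    return sum(precisions) / len(precisions) if precisions else 0.0

def mean_average_precision(per_class):
    """Mean of per-class AP; per_class maps class name -> (scores, labels)."""
    aps = [average_precision(s, l) for s, l in per_class.values()]
    return sum(aps) / len(aps)

# Made-up scores for two hypothetical behavior classes over four clips.
per_class = {
    "explore": ([0.9, 0.8, 0.7, 0.6], [1, 0, 1, 0]),
    "rest":    ([0.2, 0.9, 0.1, 0.8], [0, 1, 0, 1]),
}
acc = accuracy(["explore", "rest", "rest", "rest"],
               ["explore", "rest", "explore", "rest"])
m_ap = mean_average_precision(per_class)
```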
