Aniket Pramanick

CaMMT: Benchmarking Culturally Aware Multimodal Machine Translation

May 30, 2025

Abstract: Cultural content poses challenges for machine translation systems due to the differences in conceptualizations between cultures, where language alone may fail to convey sufficient context to capture region-specific meanings. In this work, we investigate whether images can act as cultural context in multimodal translation. We introduce CaMMT, a human-curated benchmark of over 5,800 triples of images along with parallel captions in English and regional languages. Using this dataset, we evaluate five Vision Language Models (VLMs) in text-only and text+image settings. Through automatic and human evaluations, we find that visual context generally improves translation quality, especially in handling Culturally-Specific Items (CSIs), disambiguation, and correct gender usage. By releasing CaMMT, we aim to support broader efforts in building and evaluating multimodal translation systems that are better aligned with cultural nuance and regional variation.

The Nature of NLP: Analyzing Contributions in NLP Papers

Sep 29, 2024

Abstract: Natural Language Processing (NLP) is a dynamic, interdisciplinary field that integrates intellectual traditions from computer science, linguistics, social science, and more. Despite its established presence, the definition of what constitutes NLP research remains debated. In this work, we quantitatively investigate what constitutes NLP by examining research papers. For this purpose, we propose a taxonomy and introduce NLPContributions, a dataset of nearly 2k research paper abstracts, expertly annotated to identify scientific contributions and classify their types according to this taxonomy. We also propose a novel task to automatically identify these elements, for which we train a strong baseline on our dataset. We present experimental results from this task and apply our model to ~29k NLP research papers to analyze their contributions, aiding in the understanding of the nature of NLP research. Our findings reveal a rising involvement of machine learning in NLP since the early nineties, alongside a declining focus on adding knowledge about language or people; since 2020, however, there has been a resurgence of focus on language and people. We hope this work will spark discussions on our community norms and inspire efforts to consciously shape the future.

Transforming Scholarly Landscapes: Influence of Large Language Models on Academic Fields beyond Computer Science

Sep 29, 2024

Abstract: Large Language Models (LLMs) have ushered in a transformative era in Natural Language Processing (NLP), reshaping research and extending NLP's influence to other fields of study. However, there is little to no work examining the degree to which LLMs influence other research fields. This work empirically and systematically examines the influence and use of LLMs in fields beyond NLP. We curate 106 LLMs and analyze ~148k papers citing LLMs to quantify their influence and reveal trends in their usage patterns. Our analysis reveals not only the increasing prevalence of LLMs in non-CS fields but also the disparities in their usage, with some fields utilizing them more frequently than others since 2018, notably Linguistics and Engineering together accounting for ~45% of LLM citations. Our findings further indicate that most of these fields predominantly employ task-agnostic LLMs, proficient in zero- or few-shot learning without requiring further fine-tuning, to address their domain-specific problems. This study sheds light on the cross-disciplinary impact of NLP through LLMs, providing a better understanding of the opportunities and challenges.

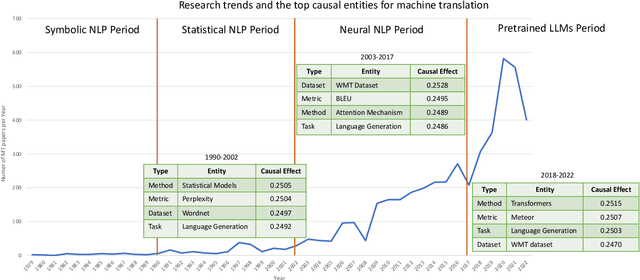

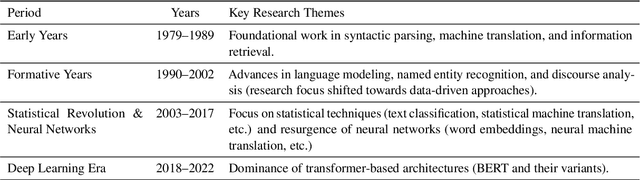

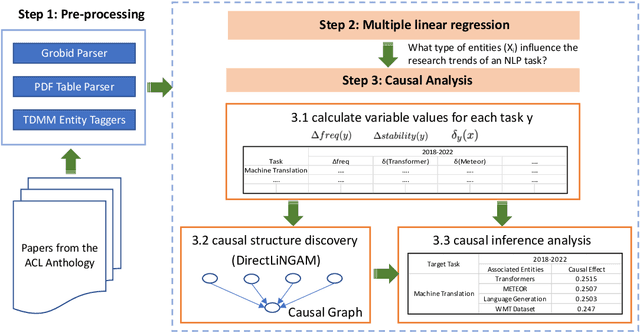

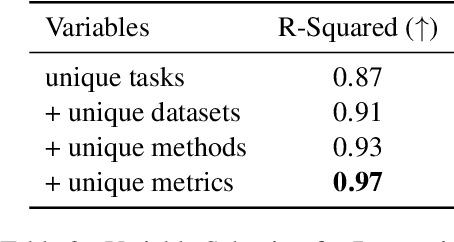

A Diachronic Analysis of the NLP Research Paradigm Shift: When, How, and Why?

May 22, 2023

Abstract: Understanding the fundamental concepts and trends in a scientific field is crucial for keeping abreast of its ongoing development. In this study, we propose a systematic framework for analyzing the evolution of research topics in a scientific field using causal discovery and inference techniques. By conducting extensive experiments on the ACL Anthology corpus, we demonstrate that our framework effectively uncovers evolutionary trends and the underlying causes for a wide range of natural language processing (NLP) research topics.
