Anery Patel

FALCON 2.0: An Entity and Relation Linking Tool over Wikidata

Feb 12, 2020

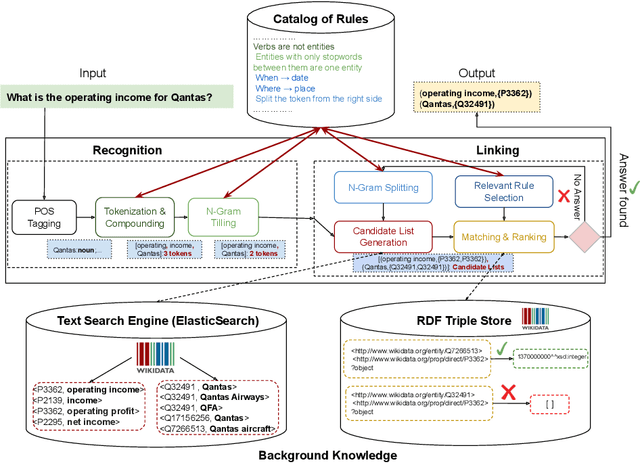

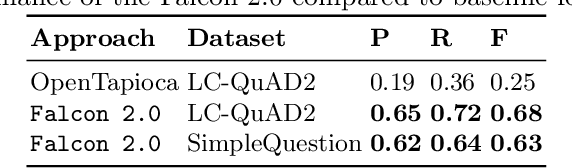

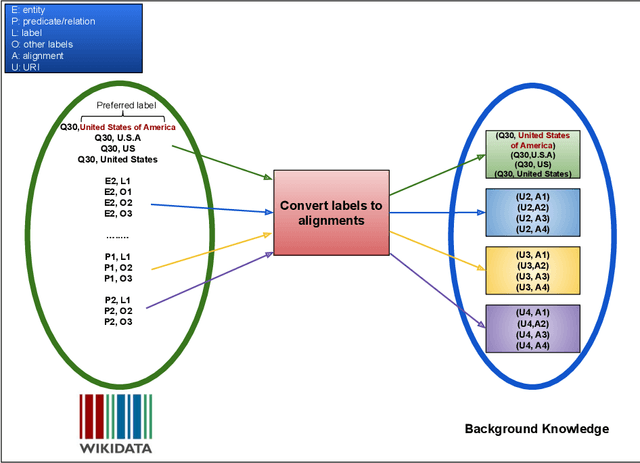

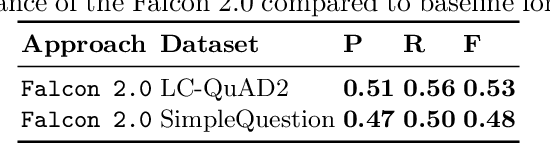

Abstract: Natural Language Processing (NLP) tools and frameworks have contributed significantly to solving the problems of extracting entities and relations and linking them to related knowledge graphs. Although effective, most existing tools are available for only one knowledge graph. In this paper, we present Falcon 2.0, a rule-based tool capable of accurately mapping entities and relations in short texts to resources in both DBpedia and Wikidata, following the same approach in both cases. The input of Falcon 2.0 is a short natural language text in English. Falcon 2.0 relies on fundamental principles of English morphology (e.g., N-Gram tiling and N-Gram splitting) and background knowledge of label alignments obtained from the studied knowledge graph; the output is the set of entity and relation resources in either the DBpedia or Wikidata knowledge graph. We have empirically studied the impact of using only Wikidata on Falcon 2.0 and observed that it is knowledge graph agnostic, i.e., its performance and behavior are not affected by the knowledge graph used as background knowledge. Falcon 2.0 is public and can be reused by the community. Additionally, Falcon 2.0 and its background knowledge bases are available as resources at https://labs.tib.eu/falcon/falcon2/.
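To give a rough feel for the N-Gram idea mentioned in the abstract, here is a minimal sketch that matches the widest token span against a label table first and falls back to shorter spans when nothing matches. This is not Falcon 2.0's implementation: the function names `ngrams` and `link_longest_first`, the `TOY_LABELS` table, and its placeholder identifiers are all assumptions made for illustration.

```python
def ngrams(tokens, n):
    """Return all contiguous n-grams of the token list as tuples."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def link_longest_first(text, labels):
    """Greedily match the longest token spans against a label table.

    `labels` maps a lowercased surface form to a (hypothetical) identifier.
    """
    tokens = text.lower().split()
    matches = []
    i = 0
    while i < len(tokens):
        # Try the widest span starting at position i, then shorter ones.
        for n in range(len(tokens) - i, 0, -1):
            span = " ".join(tokens[i:i + n])
            if span in labels:
                matches.append((span, labels[span]))
                i += n  # skip past the matched span
                break
        else:
            i += 1  # no label starts at this token; move on
    return matches


# Toy label table; the identifiers are placeholders, not real Wikidata IDs.
TOY_LABELS = {
    "barack obama": "ENTITY_1",
    "spouse": "RELATION_1",
}

result = link_longest_first("Who is the spouse of Barack Obama", TOY_LABELS)
print(result)
```

A real linker would draw its labels from the knowledge graph and would need to handle morphology and ambiguity, which this toy lookup ignores.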

Precipitation Nowcasting: Leveraging bidirectional LSTM and 1D CNN

Oct 24, 2018

Abstract: Short-term rainfall forecasting, also known as precipitation nowcasting, has become a potentially fundamental technology with significant real-world applications ranging from flight safety and rainstorm alerts to farm irrigation timing. Since weather forecasting involves identifying the underlying structure in a huge amount of data, deep-learning-based precipitation nowcasting has outperformed traditional linear extrapolation methods. Our research applies recent advances in deep learning to nowcasting, a multi-variable time series forecasting problem. Specifically, we leverage a bidirectional LSTM (Long Short-Term Memory) neural network architecture, which captures the temporal features and long-term dependencies in historical data. To extend our study, we compare the bidirectional LSTM network with a 1D CNN model to demonstrate the capabilities of sequence models over feed-forward neural architectures in forecasting-related problems.
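Framing nowcasting as a multi-variable time series forecasting problem usually means turning a history of readings into (input window, target) pairs before any model sees them. The sketch below shows one common way to do that in plain Python. The function name `make_windows`, its parameters, and the toy readings are assumptions for illustration, not the paper's preprocessing.

```python
def make_windows(series, history, horizon=1):
    """Split a multivariate series into (input window, target) pairs.

    `series` is a list of time steps, each a list of feature values.
    The target is the feature vector `horizon` steps after the window ends.
    """
    pairs = []
    last_start = len(series) - history - horizon
    for start in range(last_start + 1):
        window = series[start:start + history]
        target = series[start + history + horizon - 1]
        pairs.append((window, target))
    return pairs


# Toy readings: each step holds two made-up features.
readings = [[0, 0], [1, 10], [2, 20], [3, 30], [4, 40]]
pairs = make_windows(readings, history=3)
for window, target in pairs:
    print(window, "->", target)
```

Either a bidirectional LSTM or a 1D CNN could consume windows shaped like these, which is what makes a side-by-side comparison of the two straightforward to set up.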
