Andy Xie

Sensitivity Analysis on Transferred Neural Architectures of BERT and GPT-2 for Financial Sentiment Analysis

Jul 07, 2022

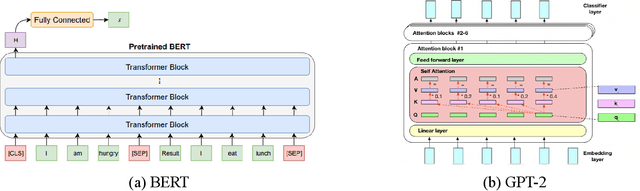

Abstract: The explosion of novel NLP word embedding and deep learning techniques has spurred significant efforts to find potential applications. One such direction is the financial sector. Although much work has been done on state-of-the-art models like GPT and BERT, relatively few works examine how well these methods perform when fine-tuned after pre-training, or how sensitive their parameters are. We investigate the performance and sensitivity of transferred neural architectures from pre-trained GPT-2 and BERT models. We test fine-tuning performance as a function of frozen transformer layers, batch size, and learning rate. We find that the parameters of BERT are hypersensitive to stochasticity in fine-tuning and that GPT-2 is more stable under the same conditions. It is also clear that the earlier layers of GPT-2 and BERT contain essential word pattern information that should be maintained.

NoFADE: Analyzing Diminishing Returns on CO2 Investment

Nov 28, 2021

Abstract: Climate change continues to be a pressing issue that currently affects society at large. It is important that we as a society, including the Computer Vision (CV) community, take steps to limit our impact on the environment. In this paper, we (a) analyze the effect of diminishing returns on CV methods, and (b) propose "NoFADE", a novel entropy-based metric to quantify model-dataset-complexity relationships. We show that some CV tasks are reaching saturation, while others are almost fully saturated. In this light, NoFADE allows the CV community to compare models and datasets on a similar basis, establishing an agnostic platform.
