Andrew R. Lupini

Automated and Autonomous Experiment in Electron and Scanning Probe Microscopy

Mar 22, 2021

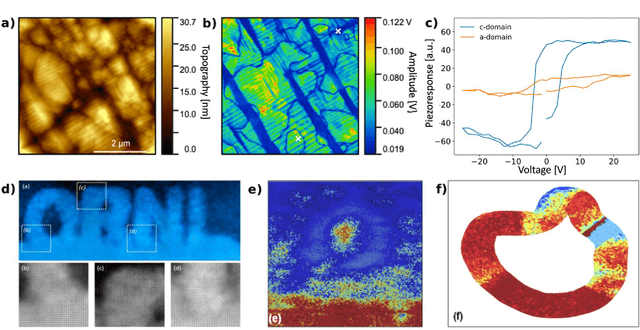

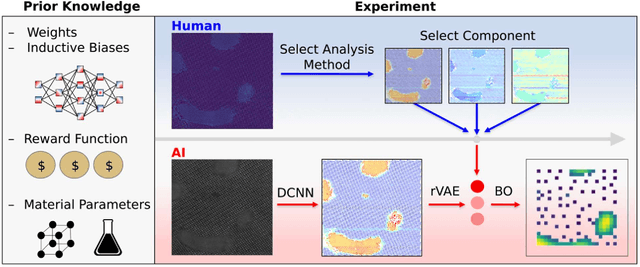

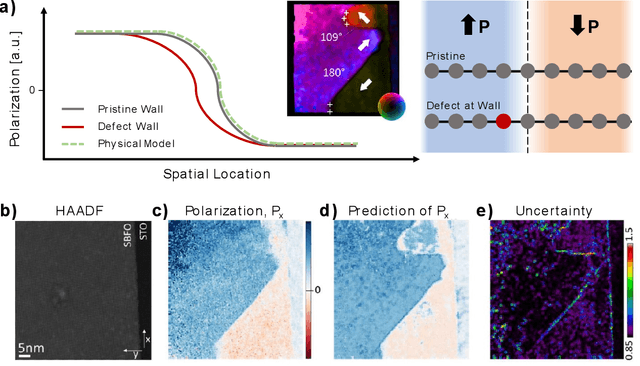

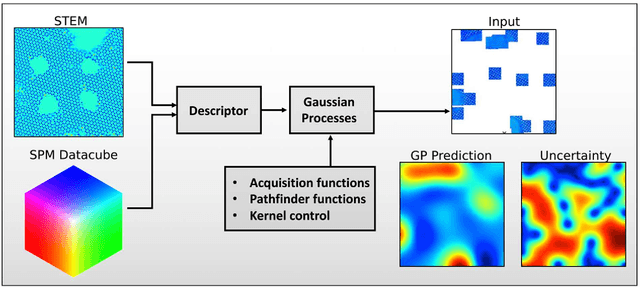

Abstract: Machine learning and artificial intelligence (ML/AI) are rapidly becoming an indispensable part of physics research, with domain applications ranging from theory and materials prediction to high-throughput data analysis. In parallel, the recent successes in applying ML/AI methods for autonomous systems from robotics through self-driving cars to organic and inorganic synthesis are generating enthusiasm for the potential of these techniques to enable automated and autonomous experiment (AE) in imaging. Here, we aim to analyze the major pathways towards AE in imaging methods with sequential image formation mechanisms, focusing on scanning probe microscopy (SPM) and (scanning) transmission electron microscopy ((S)TEM). We argue that automated experiments should necessarily be discussed in a broader context of the general domain knowledge that both informs the experiment and is increased as the result of the experiment. As such, this analysis should explore the human and ML/AI roles prior to and during the experiment, and consider the latencies, biases, and knowledge priors of the decision-making process. Similarly, such discussion should include the limitations of the existing imaging systems, including intrinsic latencies, non-idealities and drifts comprising both correctable and stochastic components. We further pose that the role of the AE in microscopy is not the exclusion of human operators (as is the case for autonomous driving), but rather automation of routine operations such as microscope tuning, etc., prior to the experiment, and conversion of low-latency decision-making processes on the time scale spanning from image acquisition to human-level high-order experiment planning.

Manifold Learning of Four-dimensional Scanning Transmission Electron Microscopy

Oct 18, 2018

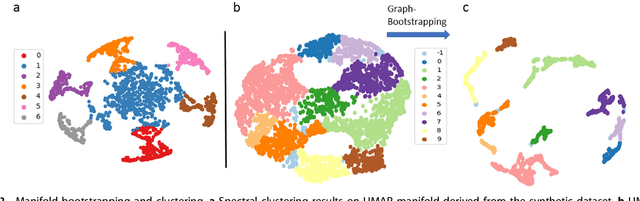

Abstract: Four-dimensional scanning transmission electron microscopy (4D-STEM) of local atomic diffraction patterns is emerging as a powerful technique for probing intricate details of atomic structure and atomic electric fields. However, efficient processing and interpretation of large volumes of data remain challenging, especially for two-dimensional or light materials because the diffraction signal recorded on the pixelated arrays is weak. Here we employ data-driven manifold learning approaches for straightforward visualization and exploration analysis of the 4D-STEM datasets, distilling real-space neighboring effects on atomically resolved deflection patterns from single-layer graphene, with single dopant atoms, as recorded on a pixelated detector. These extracted patterns relate to both individual atom sites and sublattice structures, effectively discriminating single dopant anomalies via multi-mode views. We believe manifold learning analysis will accelerate physics discoveries coupled between data-rich imaging mechanisms and materials such as ferroelectric, topological spin and van der Waals heterostructures.
