Andres Pulido

Point Cloud Structural Similarity-based Underwater Sonar Loop Detection

Sep 21, 2024

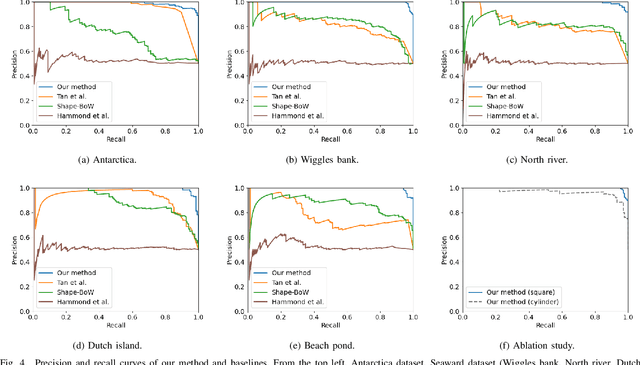

Abstract: Autonomous navigation in underwater environments requires a map built in advance by a Simultaneous Localization and Mapping (SLAM) algorithm that uses sensors such as sonar. Loop closure is used to reduce the pose error that accumulates during SLAM. For sonar-based loop detection, some previous studies project the 3D point cloud into 2D and then extract and match keypoints. However, the 2D projection loses data because of the limited image resolution, and keypoints are difficult to extract in monotonous underwater environments such as rivers or lakes. Methods that use neural networks or Bag of Words (BoW) have the further disadvantage of requiring preprocessing, such as training a model or building a vocabulary in advance. To address these issues, we use the point cloud obtained from sonar data directly, without any projection, to prevent performance degradation due to data loss. By computing a point-wise structural feature map of the point cloud with mathematical formulas and comparing the similarity between point clouds, we eliminate the need for keypoint extraction, so the algorithm can operate in new environments without additional training or setup. We validate the proposed algorithm on the Antarctica dataset, collected in deep water, and the Seaward dataset, collected from rivers and lakes. Experimental results show that the proposed method achieves the best loop detection performance on both datasets. Our code is available at https://github.com/donghwijung/point_cloud_structural_similarity_based_underwater_sonar_loop_detection.
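The idea of comparing point clouds through point-wise structural features, without projection or keypoints, can be illustrated with a minimal sketch. This is not the paper's implementation: the feature used here (the variance of each point's k-nearest-neighbor distances), the function names `knn_dispersion` and `structural_similarity`, and the parameter `k` are all assumptions chosen for illustration.

```python
import math


def knn_dispersion(points, k=4):
    """Per-point feature: variance of the distances to the k nearest neighbors.

    Hypothetical stand-in for a structural feature; the paper's features may differ.
    """
    features = []
    for i, p in enumerate(points):
        # Brute-force neighbor search, fine for small illustrative clouds.
        dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)[:k]
        mean = sum(dists) / len(dists)
        features.append(sum((d - mean) ** 2 for d in dists) / len(dists))
    return features


def structural_similarity(cloud_a, cloud_b, k=4, eps=1e-12):
    """Score in [0, 1]; 1.0 means the local structure matches everywhere."""
    feat_a = knn_dispersion(cloud_a, k)
    feat_b = knn_dispersion(cloud_b, k)
    total_error = 0.0
    for p, f_p in zip(cloud_a, feat_a):
        # Associate each point in A with its nearest point in B.
        j = min(range(len(cloud_b)), key=lambda idx: math.dist(p, cloud_b[idx]))
        f_q = feat_b[j]
        # Relative feature difference, bounded by 1 because features are >= 0.
        total_error += abs(f_p - f_q) / (max(f_p, f_q) + eps)
    return 1.0 - total_error / len(cloud_a)


# Two small synthetic clouds (made-up data, not from either dataset).
cloud_a = [(float(x), float(y), 0.1 * x * y) for x in range(4) for y in range(4)]
cloud_b = [(float(x), float(y), 0.5 * x) for x in range(4) for y in range(4)]

score_same = structural_similarity(cloud_a, cloud_a)
score_diff = structural_similarity(cloud_a, cloud_b)
```

In a loop detection setting, a score like this would be compared against a threshold to decide whether two scans come from the same place; the threshold would have to be tuned per sensor and environment.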

Time and Cost-Efficient Bathymetric Mapping System using Sparse Point Cloud Generation and Automatic Object Detection

Oct 19, 2022

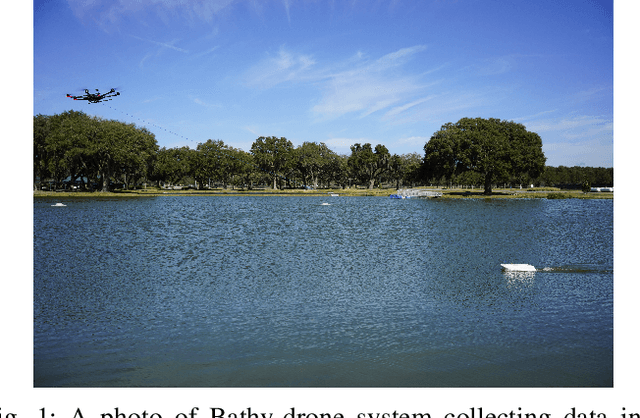

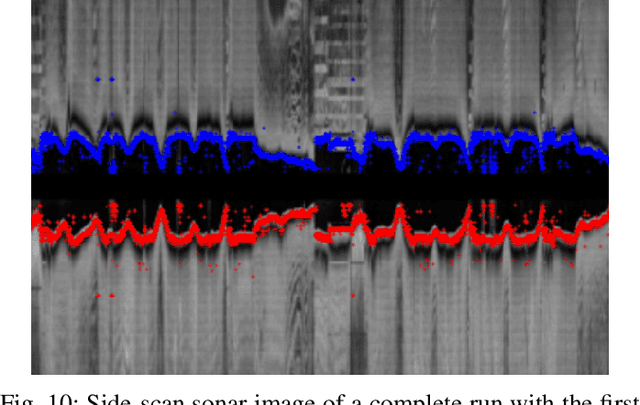

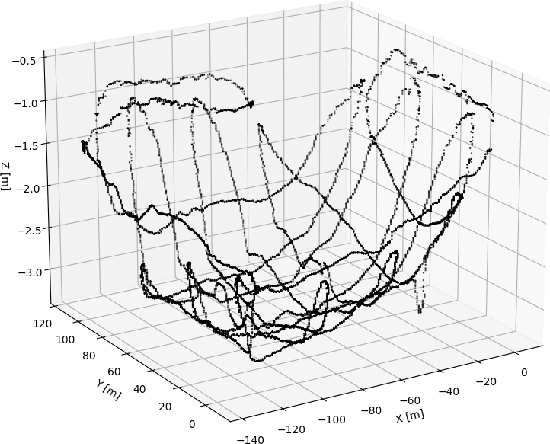

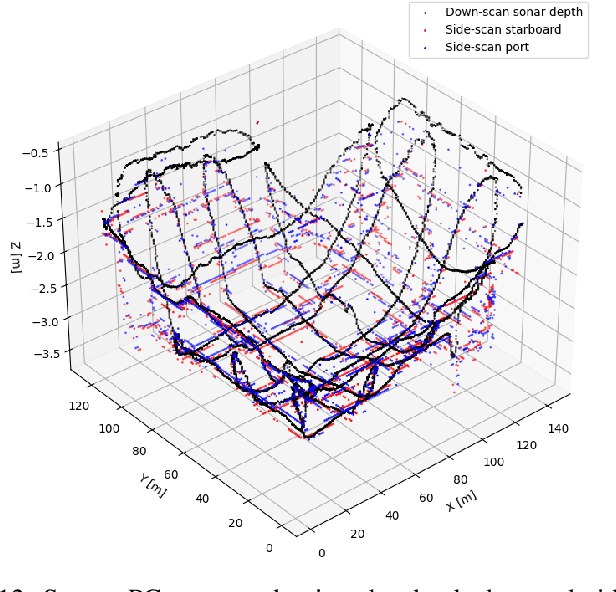

Abstract: Generating 3D point cloud (PC) data from noisy sonar measurements has potential applications in bathymetric mapping, inspection of artificial objects, mapping of aquatic plants and fauna, and underwater navigation and localization of vehicles such as submarines. Side-scan sonar sensors are available at low cost, especially in fish-finders, where the transducers are usually mounted to the bottom of a boat and can reach shallower water than those attached to an Uncrewed Underwater Vehicle (UUV). However, extracting 3D information from side-scan sonar imagery is difficult because of its low signal-to-noise ratio and the missing angle and depth information in the imagery. Since most algorithms that generate a 3D point cloud from side-scan sonar imagery use Shape from Shading (SFS) techniques, extracting 3D information is especially difficult when the seafloor is smooth, changes slowly in depth, or has no identifiable objects that cast acoustic shadows. This paper introduces a computationally efficient algorithm that generates a sparse 3D point cloud from side-scan sonar images by leveraging the geometry of the first sonar return, combined with known positions provided by GPS and down-scan sonar depth measurements at each data point. Additionally, this paper implements a second algorithm that uses a Convolutional Neural Network (CNN) with transfer learning to perform object detection on side-scan sonar images, both collected in the field and generated in simulation. The algorithm was tested on both real and synthetic images and showed reasonably accurate anomaly detection and classification.
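As a rough illustration of how a sonar return, a GPS position, and a down-scan depth can be combined into a 3D point, the sketch below applies the standard flat-bottom slant-range correction. It is not the paper's algorithm: the function name `return_to_point`, the local east/north coordinate frame, the heading convention (degrees clockwise from north), and the flat-seafloor assumption are all assumptions made for this example.

```python
import math


def return_to_point(east, north, heading_deg, depth, slant_range, side):
    """Convert one side-scan return into an (east, north, z) point.

    east, north : boat position in a local metric frame (e.g. projected GPS)
    heading_deg : boat heading, degrees clockwise from north (assumed convention)
    depth       : water depth under the boat from the down-scan sonar, meters
    slant_range : straight-line distance to the return, meters
    side        : "port" or "starboard"
    """
    if side not in ("port", "starboard"):
        raise ValueError("side must be 'port' or 'starboard'")
    if slant_range < depth:
        raise ValueError("slant range cannot be shorter than the water depth")

    # Flat-bottom assumption: slant range, depth, and ground range
    # form a right triangle.
    ground_range = math.sqrt(slant_range ** 2 - depth ** 2)

    # The beam points perpendicular to the track: +90 deg for starboard,
    # -90 deg for port.
    sign = 1.0 if side == "starboard" else -1.0
    bearing = math.radians(heading_deg) + sign * math.pi / 2

    x = east + ground_range * math.sin(bearing)
    y = north + ground_range * math.cos(bearing)
    return (x, y, -depth)


# Boat at the origin heading north, 3 m of water, return at 5 m slant range.
point = return_to_point(0.0, 0.0, 0.0, 3.0, 5.0, "starboard")
```

With these inputs the ground range is 4 m (a 3-4-5 triangle), so the point lies about 4 m east of the boat at a depth of 3 m. Repeating this for each ping along the track would yield a sparse point cloud under the stated assumptions.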
