Andrei Giurgiu

Parameter-Efficient Transfer Learning for NLP

Feb 02, 2019

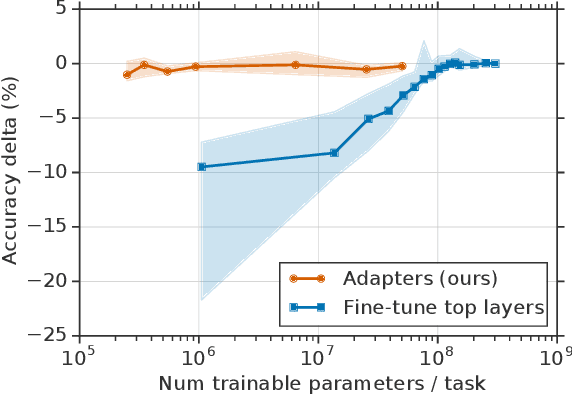

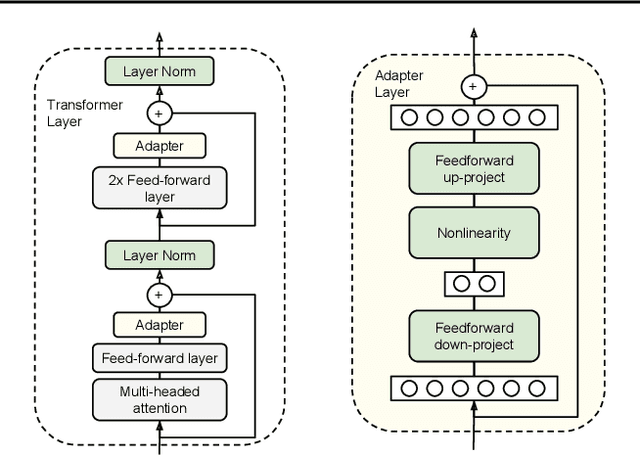

Abstract: Fine-tuning large pre-trained models is an effective transfer mechanism in NLP. However, in the presence of many downstream tasks, fine-tuning is parameter inefficient: an entire new model is required for every task. As an alternative, we propose transfer with adapter modules. Adapter modules yield a compact and extensible model; they add only a few trainable parameters per task, and new tasks can be added without revisiting previous ones. The parameters of the original network remain fixed, yielding a high degree of parameter sharing. To demonstrate adapters' effectiveness, we transfer the recently proposed BERT Transformer model to 26 diverse text classification tasks, including the GLUE benchmark. Adapters attain near state-of-the-art performance, whilst adding only a few parameters per task. On GLUE, we attain within 0.4% of the performance of full fine-tuning, adding only 3.6% parameters per task. By contrast, fine-tuning trains 100% of the parameters per task.

Statistical Estimation: From Denoising to Sparse Regression and Hidden Cliques

Sep 19, 2014

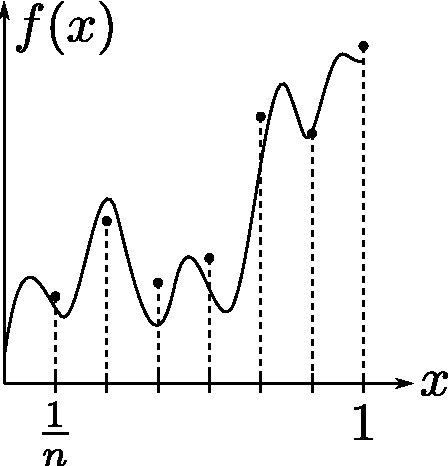

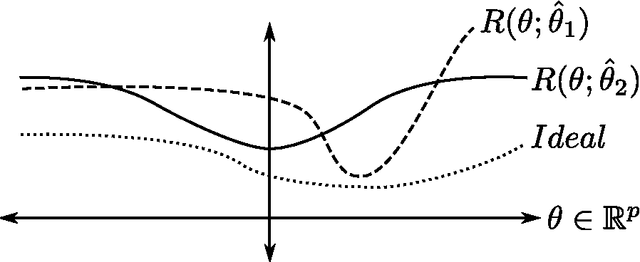

Abstract: These notes review six lectures given by Prof. Andrea Montanari on the topic of statistical estimation for linear models. The first two lectures cover the principles of signal recovery from linear measurements in terms of minimax risk. Subsequent lectures demonstrate the application of these principles to several practical problems in science and engineering. Specifically, these topics include denoising of error-laden signals, recovery of compressively sensed signals, reconstruction of low-rank matrices, and also the discovery of hidden cliques within large networks.
