Andre Kokkeler

Vital Signs Estimation Using a 26 GHz Multi-Beam Communication Testbed

Nov 19, 2023

Abstract: This paper presents a novel pipeline for vital sign monitoring using a 26 GHz multi-beam communication testbed. In the context of Joint Communication and Sensing (JCAS), the radio resources available to advanced communication systems at millimeter-wave bands are comparable to those of radars, which makes these systems promising for sensing the surrounding environment. Being able to both communicate and sense the vital signs of humans present in the environment will enable new vertical telecommunication services such as remote health monitoring. The proposed processing pipeline leverages spatially orthogonal beams to estimate the vital signs (breath rate and heart rate) of single and multiple persons in static scenarios from the raw Channel State Information (CSI) samples. We consider both monostatic and bistatic sensing scenarios. For the monostatic scenario, we employ phase time-frequency calibration and the Discrete Wavelet Transform to improve performance over conventional Fast Fourier Transform based methods. For the bistatic scenario, we use the K-means clustering algorithm to extract multi-person vital signs, exploiting the distinct frequency-domain signal features of single-person and multi-person scenarios. The results show that the estimated breath rate and heart rate achieve an error below 2 beats per minute (bpm) relative to the reference captured by an on-body sensor, both for the single-person monostatic sensing scenario with a body-transceiver distance of up to 2 m and for the two-person bistatic sensing scenario with a BS-UE distance of up to 4 m. Although the presented work does not optimize the OFDM waveform parameters for sensing, it demonstrates a promising JCAS proof-of-concept for contact-free vital sign monitoring using mmWave multi-beam communication systems.
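To make the "conventional Fast Fourier Transform based" baseline mentioned in the abstract concrete, the following is a minimal sketch, not the authors' pipeline. It assumes a one-dimensional, already unwrapped CSI phase signal sampled at a fixed rate, and it picks the strongest spectral peak inside a breathing band and a heartbeat band. The function name `estimate_rate_bpm`, the band limits, the sampling rate, and the synthetic signal are all illustrative assumptions; the paper's phase calibration, Discrete Wavelet Transform, and K-means steps are not reproduced here.

```python
import numpy as np

def estimate_rate_bpm(phase, fs, band_hz):
    """Return the dominant rate (cycles per minute) of `phase` inside `band_hz`.

    phase   : 1-D array of unwrapped CSI phase samples (assumed input)
    fs      : sampling rate in Hz (assumed known)
    band_hz : (low, high) search band in Hz (assumed, not from the paper)
    """
    x = np.asarray(phase, dtype=float)
    x = x - x.mean()                          # remove the static (DC) component
    window = np.hanning(x.size)               # taper to reduce spectral leakage
    spectrum = np.abs(np.fft.rfft(x * window))
    freqs = np.fft.rfftfreq(x.size, d=1.0 / fs)
    low, high = band_hz
    mask = (freqs >= low) & (freqs <= high)   # restrict the peak search to the band
    peak_hz = freqs[mask][np.argmax(spectrum[mask])]
    return 60.0 * peak_hz

# Synthetic phase signal: breathing at 0.25 Hz plus a weaker heartbeat at 1.2 Hz.
fs = 50.0                                     # hypothetical CSI sampling rate
t = np.arange(0, 60, 1 / fs)                  # 60 s observation window
phase = 1.0 * np.sin(2 * np.pi * 0.25 * t) + 0.1 * np.sin(2 * np.pi * 1.2 * t)

breath_bpm = estimate_rate_bpm(phase, fs, (0.1, 0.5))   # assumed breathing band
heart_bpm = estimate_rate_bpm(phase, fs, (0.8, 2.0))    # assumed heartbeat band
print(breath_bpm, heart_bpm)
```

With a 60 s window the frequency resolution is 1/60 Hz, that is 1 cycle per minute, which is one reason a plain FFT peak search struggles to reach fine accuracy on short recordings.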

Intelligent Blockage Recognition using Cellular mmWave Beamforming Data: Feasibility Study

Oct 30, 2022

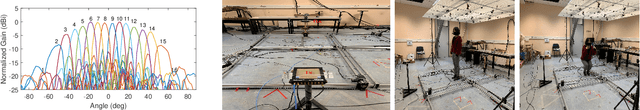

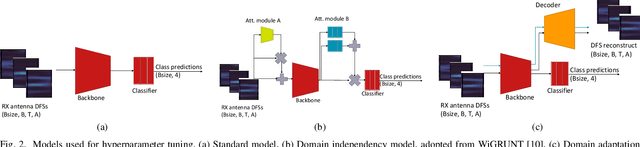

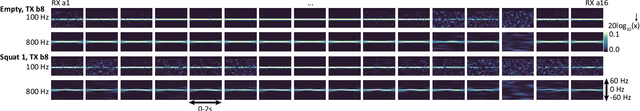

Abstract: Joint Communication and Sensing (JCAS) is envisioned for 6G cellular networks, where sensing the operating environment, especially in the presence of humans, is as important as high-speed wireless connectivity. Sensing, and subsequently recognizing blockage types, is an initial step towards signal blockage avoidance. In this context, we investigate the feasibility of using human motion recognition as a surrogate task for blockage type recognition through a set of hypothesis validation experiments using both qualitative and quantitative analysis (visual inspection and hyperparameter tuning of deep learning (DL) models, respectively). A surrogate task is useful for DL model testing and/or pre-training, so that only a small amount of data needs to be collected from the eventual JCAS environment. Therefore, we collect and use a small dataset from a 26 GHz cellular multi-user communication device with hybrid beamforming. The data is converted into a Doppler Frequency Spectrum (DFS) and used for hypothesis validation. Our research shows that (i) the presence of domain shift between the data used for learning and for inference requires DL models that can handle it successfully, (ii) diluting the DFS input data to increase dataset volume should be avoided, (iii) a small volume of input data is not enough for reasonable inference performance, (iv) higher sensing resolution, which causes lower sensitivity, should be handled by performing more activities/gestures per frame and lowering the sampling rate, and (v) reporting a higher sampling rate to the STFT during pre-processing may increase performance, but should always be tested on a per-learning-task basis.
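As an illustration of the pre-processing step the abstract refers to, the sketch below computes a Doppler spectrogram from a complex CSI time series with a short-time Fourier transform (STFT). It is a hedged example under stated assumptions, not the authors' code: the function name `doppler_spectrogram`, the window length, the hop size, the sampling rate, and the synthetic single-scatterer signal are all hypothetical choices made for the example.

```python
import numpy as np

def doppler_spectrogram(csi, fs, win_len=128, hop=32):
    """Return (freqs, power) where power has shape (n_frames, win_len).

    csi     : 1-D complex CSI time series for one beam/subcarrier (assumed input)
    fs      : sampling rate in Hz handed to the STFT (assumed known)
    win_len : samples per STFT window (illustrative value)
    hop     : samples between consecutive windows (illustrative value)
    """
    x = np.asarray(csi, dtype=complex)
    window = np.hanning(win_len)
    n_frames = 1 + (x.size - win_len) // hop
    # Slice the series into overlapping, tapered frames.
    frames = np.stack(
        [x[i * hop : i * hop + win_len] * window for i in range(n_frames)]
    )
    # FFT per frame; fftshift puts zero Doppler in the middle of the axis.
    spec = np.fft.fftshift(np.fft.fft(frames, axis=1), axes=1)
    freqs = np.fft.fftshift(np.fft.fftfreq(win_len, d=1.0 / fs))
    return freqs, np.abs(spec) ** 2

# Synthetic CSI: a single moving scatterer producing a +100 Hz Doppler shift.
fs = 1000.0                                   # hypothetical CSI sampling rate
t = np.arange(0, 1.0, 1 / fs)
csi = np.exp(2j * np.pi * 100.0 * t)

freqs, dfs = doppler_spectrogram(csi, fs)
peak_hz = freqs[np.argmax(dfs.mean(axis=0))]  # strongest Doppler bin on average
print(dfs.shape, peak_hz)
```

The Doppler bin spacing is `fs / win_len`, so the sampling rate value passed to the transform directly sets the frequency axis of the resulting spectrum, which is consistent with finding (v) that this setting should be checked per learning task.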
