André Valdestilhas

Where is Linked Data in Question Answering over Linked Data?

May 07, 2020

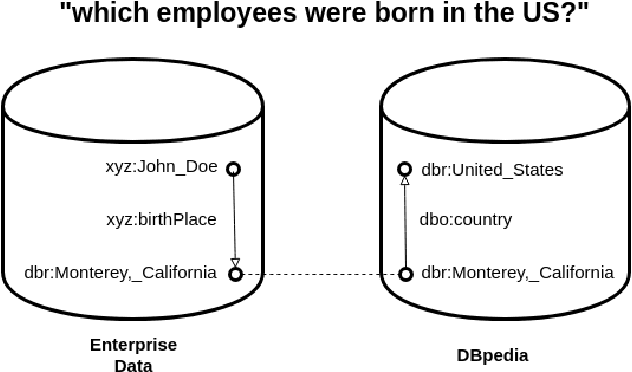

Abstract: We argue that "Question Answering with Knowledge Base" and "Question Answering over Linked Data" are currently two instances of the same problem, even though the latter explicitly claims to deal with Linked Data. We point out the lack of methods for evaluating question answering on datasets that exploit external links to the rest of the cloud or share a common schema. To this end, we propose the creation of new evaluation settings that leverage the advantages of the Semantic Web to achieve AI-complete question answering.

Neural Machine Translation for Query Construction and Composition

Jul 09, 2018

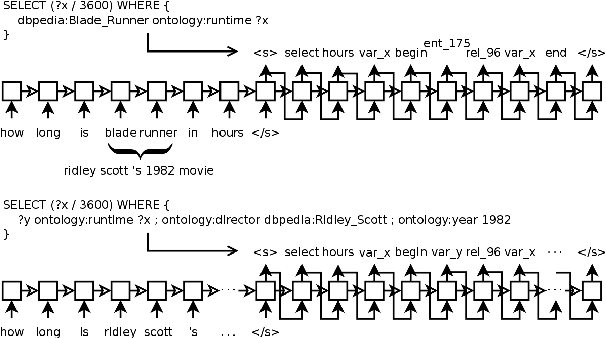

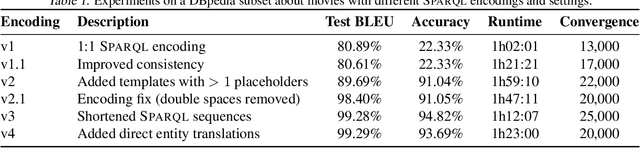

Abstract: Research on question answering with knowledge bases has recently seen an increasing use of deep architectures. In this extended abstract, we study the application of the neural machine translation paradigm to question parsing. We employ a sequence-to-sequence model to learn graph patterns in the SPARQL graph query language and their compositions. Instead of inducing the programs through question-answer pairs, we envision a semi-supervised approach, where alignments between questions and queries are built through templates. We argue that the coverage of language utterances can be expanded using recent notable work in natural language generation.
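The template-based alignment mentioned in the abstract can be pictured with a minimal sketch. Everything here is an assumption made for illustration: the `<A>` placeholder syntax, the two templates, the entity list, and the `instantiate` helper are hypothetical and are not taken from the paper.

```python
# Hypothetical templates: a question pattern paired with a SPARQL pattern.
# The <A> placeholder marks where an entity is substituted (an assumption).
TEMPLATES = [
    ("where is <A> located?", "SELECT ?x WHERE { <A> dbo:location ?x }"),
    ("who designed <A>?", "SELECT ?x WHERE { <A> dbo:architect ?x }"),
]

# Hypothetical entities: a natural-language label and a prefixed resource name.
ENTITIES = [
    ("the Eiffel Tower", "dbr:Eiffel_Tower"),
    ("the Brandenburg Gate", "dbr:Brandenburg_Gate"),
]


def instantiate(templates, entities):
    """Build aligned (question, query) pairs by filling each template with each entity."""
    pairs = []
    for question_tpl, query_tpl in templates:
        for label, uri in entities:
            question = question_tpl.replace("<A>", label)
            query = query_tpl.replace("<A>", uri)
            pairs.append((question, query))
    return pairs


pairs = instantiate(TEMPLATES, ENTITIES)
for question, query in pairs:
    print(question, "=>", query)
```

Each template yields one training pair per entity, so a small number of hand-written templates can produce a much larger aligned corpus for a sequence-to-sequence model.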

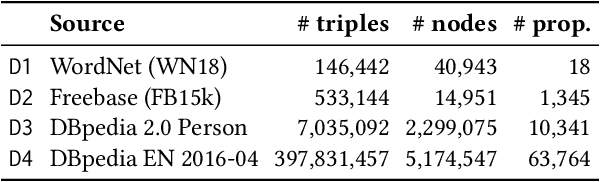

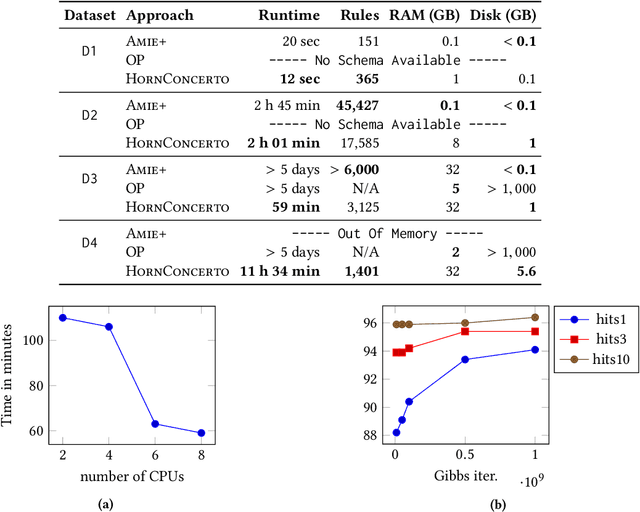

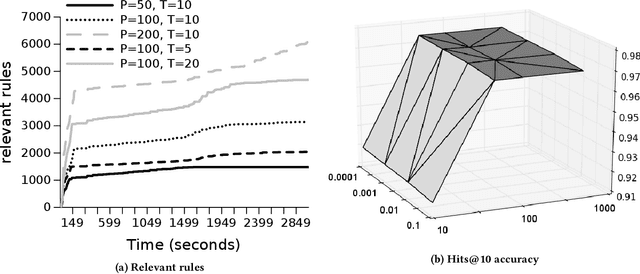

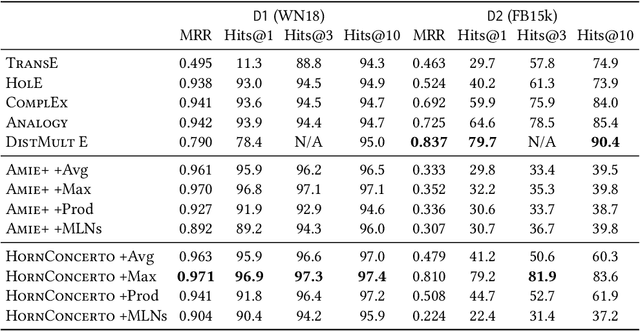

Beyond Markov Logic: Efficient Mining of Prediction Rules in Large Graphs

Feb 13, 2018

Abstract: Graph representations of large knowledge bases may comprise billions of edges. Although usually built upon human-generated ontologies, several knowledge bases do not feature declared ontological rules and are far from complete. Current rule mining approaches rely on schemata or store the graph in memory, which can be infeasible for large graphs. In this paper, we introduce HornConcerto, an algorithm to discover Horn clauses in large graphs without the need for a schema. Using a standard fact-based confidence score, we can mine closed Horn rules having an arbitrary body size. We show that our method can outperform existing approaches in terms of runtime and memory consumption and mine high-quality rules for the link prediction task, achieving state-of-the-art results on a widely used benchmark. Moreover, we find that rules alone can perform inference significantly faster than embedding-based methods and achieve accuracies on link prediction comparable to resource-demanding approaches such as Markov Logic Networks.
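As a rough illustration of a fact-based confidence score, the sketch below evaluates one closed Horn rule of the form head(x, y) ← q(x, z) ∧ r(z, y) on a toy graph. It uses one common definition of confidence (body instantiations that also satisfy the head, divided by all body instantiations); whether this matches the exact score used by HornConcerto is an assumption. The triples, predicate names, and helper functions are invented for the example, and the graph is held in memory only for brevity.

```python
def body_pairs(triples, q, r):
    """Return all (x, y) such that q(x, z) and r(z, y) hold for some z."""
    r_objects = {}
    for s, p, o in triples:
        if p == r:
            r_objects.setdefault(s, set()).add(o)
    pairs = set()
    for s, p, o in triples:
        if p == q:
            for y in r_objects.get(o, ()):
                pairs.add((s, y))
    return pairs


def confidence(triples, head, q, r):
    """Share of body instantiations (x, y) for which head(x, y) is also a fact."""
    body = body_pairs(triples, q, r)
    if not body:
        return 0.0
    head_pairs = {(s, o) for s, p, o in triples if p == head}
    return len(body & head_pairs) / len(body)


# Toy graph (hypothetical facts).
TRIPLES = [
    ("alice", "bornIn", "paris"),
    ("bob", "bornIn", "berlin"),
    ("carol", "bornIn", "rome"),
    ("paris", "capitalOf", "france"),
    ("berlin", "capitalOf", "germany"),
    ("rome", "capitalOf", "italy"),
    ("alice", "citizenOf", "france"),
    ("bob", "citizenOf", "germany"),
]

# Rule: citizenOf(x, y) <- bornIn(x, z) AND capitalOf(z, y)
score = confidence(TRIPLES, "citizenOf", "bornIn", "capitalOf")
print(round(score, 3))
```

The body matches three (x, y) pairs and the head confirms two of them, so the rule scores two thirds. The unconfirmed pair is the kind of candidate fact a link prediction task would propose.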

SPARQL as a Foreign Language

Aug 25, 2017

Abstract: In recent years, the Linked Data Cloud has reached a size of more than 100 billion facts pertaining to a multitude of domains. However, accessing this information has been significantly challenging for lay users. Approaches to problems such as Question Answering on Linked Data and Link Discovery have notably played a role in increasing information access. These approaches are often based on handcrafted and/or statistical models derived from data observation. Recently, seq2seq, a family of deep learning architectures based on neural networks, has been shown to achieve state-of-the-art results at translating sequences into sequences. In this direction, we propose Neural SPARQL Machines, end-to-end deep architectures that translate any natural language expression into sentences encoding SPARQL queries. Our preliminary results, restricted to selected DBpedia classes, show that Neural SPARQL Machines are a promising approach for Question Answering on Linked Data, as they can deal with known problems such as vocabulary mismatch and perform graph pattern composition.
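To make "sentences encoding SPARQL queries" more concrete, here is a minimal sketch of a reversible token-level encoding that turns a query into a flat sequence of word-like tokens, which is the kind of target a seq2seq model can emit. The token names (`brack_open`, `var_`, and so on), the prefix list, and both helper functions are assumptions chosen for illustration; the encoding actually used by Neural SPARQL Machines may differ.

```python
# Hypothetical mapping from SPARQL punctuation to word-like tokens.
SYMBOLS = {"{": "brack_open", "}": "brack_close", ".": "sep_dot"}
INVERSE = {token: symbol for symbol, token in SYMBOLS.items()}
PREFIXES = ("dbo", "dbr")  # namespace prefixes assumed for this example


def encode(query):
    """Turn a whitespace-tokenised SPARQL query into a plain token sentence."""
    out = []
    for tok in query.split():
        if tok in SYMBOLS:
            out.append(SYMBOLS[tok])
        elif tok.startswith("?"):
            out.append("var_" + tok[1:])
        else:
            for prefix in PREFIXES:
                if tok.startswith(prefix + ":"):
                    tok = prefix + "_" + tok[len(prefix) + 1:]
                    break
            out.append(tok)
    return " ".join(out)


def decode(sentence):
    """Invert encode(), recovering the original SPARQL query string."""
    out = []
    for tok in sentence.split():
        if tok in INVERSE:
            out.append(INVERSE[tok])
        elif tok.startswith("var_"):
            out.append("?" + tok[4:])
        else:
            for prefix in PREFIXES:
                if tok.startswith(prefix + "_"):
                    tok = prefix + ":" + tok[len(prefix) + 1:]
                    break
            out.append(tok)
    return " ".join(out)


query = "SELECT ?x WHERE { dbr:Eiffel_Tower dbo:location ?x }"
encoded = encode(query)
print(encoded)
print(decode(encoded) == query)
```

Keeping the encoding reversible means the model's output sentence can be decoded back into an executable query without any further parsing step.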
