Anders Hedman

Non-invasive measuring method of skin temperature based on skin sensitivity index and deep learning

Dec 16, 2018

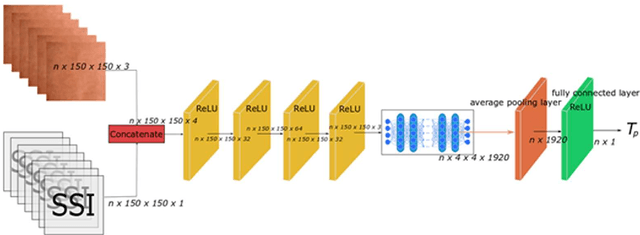

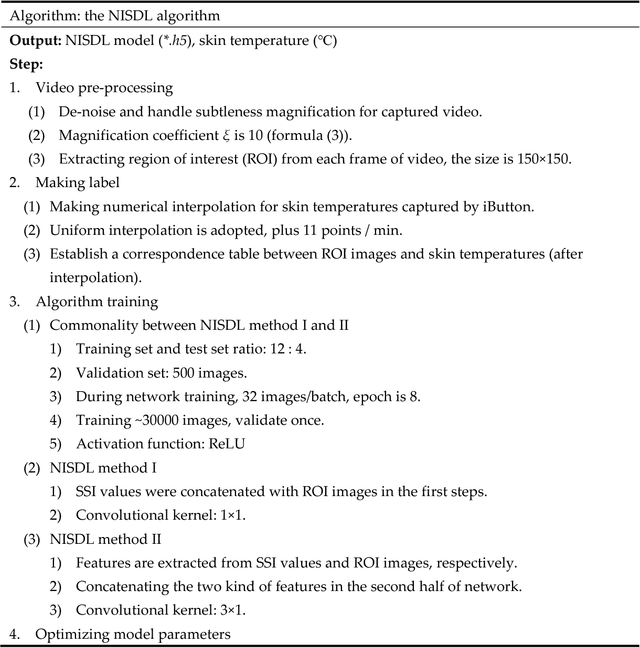

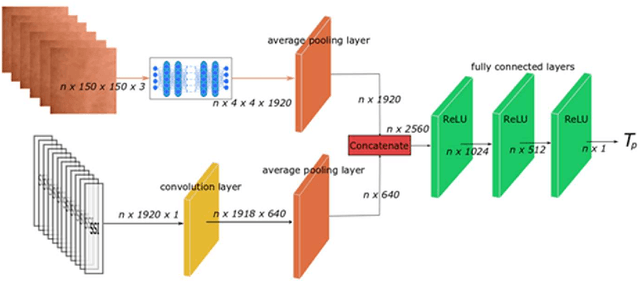

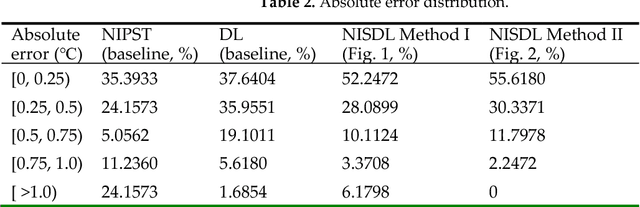

Abstract: In human-centered intelligent buildings, real-time measurements of human thermal comfort play a critical role and supply feedback control signals for building heating, ventilation, and air conditioning (HVAC) systems. Because of intra- and inter-individual differences and skin subtleness variations, no satisfactory solution for thermal comfort measurement exists to date. In this paper, a non-invasive measuring method based on a skin sensitivity index and deep learning (NISDL) is proposed to measure skin temperature in real time. A new evaluation index, the skin sensitivity index (SSI), is defined to overcome individual differences and skin subtleness variations. To illustrate the effectiveness of the proposed SSI, two multi-layer deep learning frameworks (NISDL methods I and II) were designed, with DenseNet201 used to extract features from skin images. The non-invasive partly-personalized saturation temperature (NIPST) algorithm and a deep learning algorithm without SSI (DL) were used for comparison. Finally, a total of 1.44 million images were used for algorithm validation. The results show that 55.6180% (NISDL method I) and 52.2472% (NISDL method II) of the error values fall within [0, 0.25), compared with 35.3933% for NIPST.
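
The error-interval figures above can be read as the share of per-sample errors whose magnitude falls in a given interval. The following is a minimal Python sketch of that bookkeeping. It assumes the errors are absolute skin-temperature errors in °C and that the intervals are half-open bins of width 0.25; the function names and the sample data are hypothetical, and the abstract does not specify how the paper actually bins its errors.

```python
from typing import Sequence


def interval_share(errors: Sequence[float], low: float, high: float) -> float:
    """Percentage of errors e with low <= |e| < high (half-open interval)."""
    if not errors:
        raise ValueError("errors must be non-empty")
    hits = sum(1 for e in errors if low <= abs(e) < high)
    return 100.0 * hits / len(errors)


def error_distribution(errors: Sequence[float], width: float = 0.25,
                       bins: int = 4) -> list[float]:
    """Percentages of errors in [0, w), [w, 2w), ..., [(bins-1)w, bins*w).

    Assumption: errors are absolute temperature errors in degrees Celsius;
    errors at or beyond bins*width are not counted in any bin.
    """
    return [interval_share(errors, i * width, (i + 1) * width)
            for i in range(bins)]


if __name__ == "__main__":
    # Hypothetical errors (degrees Celsius), not data from the paper.
    errs = [0.1, -0.2, 0.3, 0.6, -0.05, 0.9, 0.24, 1.2]
    print(error_distribution(errs))  # [50.0, 12.5, 12.5, 12.5]
```

Comparing methods then amounts to comparing the first entry, the share in [0, 0.25), across their error lists.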

Non-invasive thermal comfort perception based on subtleness magnification and deep learning for energy efficiency

Nov 12, 2018

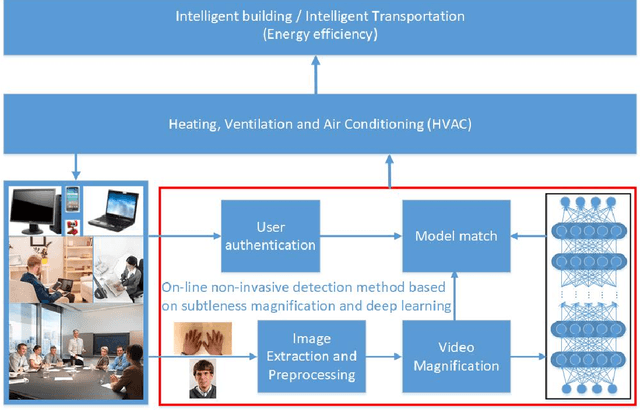

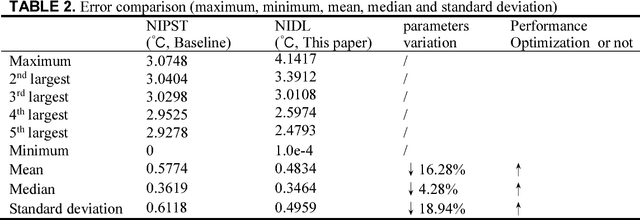

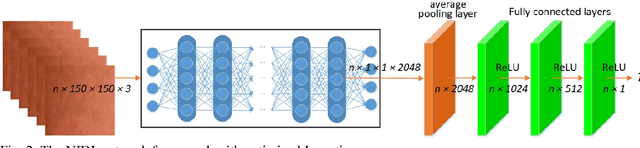

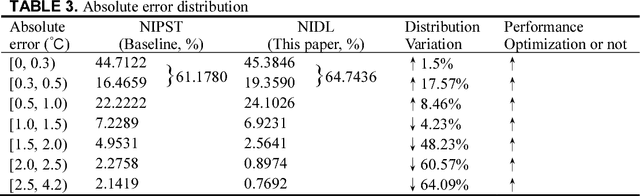

Abstract: Human thermal comfort measurement plays a critical role in providing feedback signals for building energy efficiency. A non-invasive measuring method based on subtleness magnification and deep learning (NIDL) was designed to achieve a comfortable, energy-efficient built environment. The method relies on skin feature data, e.g., subtle motion and texture variation, and uses a 315-layer deep neural network to construct the relationship between skin features and skin temperature. A physiological experiment was conducted to collect feature data (1.44 million samples) and validate the algorithm. The non-invasive measurement algorithm based on a partly-personalized saturation temperature model (NIPST) was used for performance comparison. The results show that the mean and median errors of NIDL are 0.4834 °C and 0.3464 °C, corresponding to accuracy improvements of 16.28% and 4.28%, respectively.
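
The abstract does not state how the accuracy improvements are computed. One common reading, assumed here, is the relative reduction of each error statistic (mean and median absolute error) compared with the NIPST baseline. The sketch below uses hypothetical function names and illustrative temperatures, not data from the paper.

```python
import statistics
from typing import Sequence


def abs_errors(pred: Sequence[float], truth: Sequence[float]) -> list[float]:
    """Per-sample absolute errors |pred - truth| (e.g. in degrees Celsius)."""
    if len(pred) != len(truth):
        raise ValueError("pred and truth must have the same length")
    return [abs(p - t) for p, t in zip(pred, truth)]


def relative_improvement(baseline: float, new: float) -> float:
    """Percentage reduction of an error statistic relative to a baseline.

    Assumption: 'accuracy improvement' = (baseline - new) / baseline * 100.
    """
    if baseline <= 0:
        raise ValueError("baseline error must be positive")
    return 100.0 * (baseline - new) / baseline


def summarize(pred_new: Sequence[float], pred_base: Sequence[float],
              truth: Sequence[float]) -> dict[str, float]:
    """Mean/median error of a new method and its improvement over a baseline."""
    e_new = abs_errors(pred_new, truth)
    e_base = abs_errors(pred_base, truth)
    mean_new, med_new = statistics.mean(e_new), statistics.median(e_new)
    mean_base, med_base = statistics.mean(e_base), statistics.median(e_base)
    return {
        "mean_error": mean_new,
        "median_error": med_new,
        "mean_improvement_pct": relative_improvement(mean_base, mean_new),
        "median_improvement_pct": relative_improvement(med_base, med_new),
    }


if __name__ == "__main__":
    # Illustrative skin temperatures (degrees Celsius), not data from the paper.
    truth = [33.0, 34.0, 35.0, 36.0]
    pred_new = [33.5, 34.0, 35.5, 36.0]
    pred_base = [34.0, 34.0, 36.0, 36.5]
    print(summarize(pred_new, pred_base, truth))
```

Reporting both statistics is useful because the median is less sensitive to a few large errors, which helps explain why the mean and median improvements can differ substantially.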

Expressway visibility estimation based on image entropy and piecewise stationary time series analysis

Apr 08, 2018

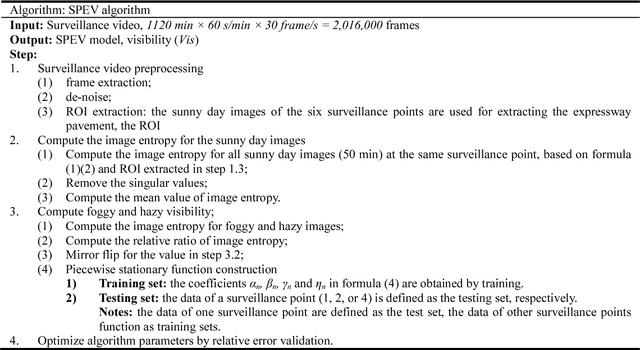

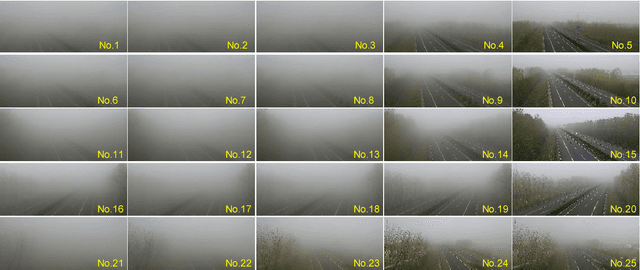

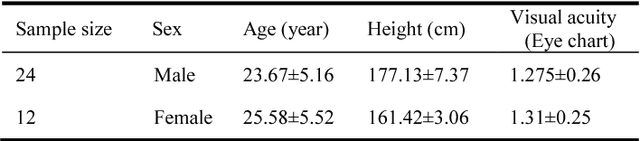

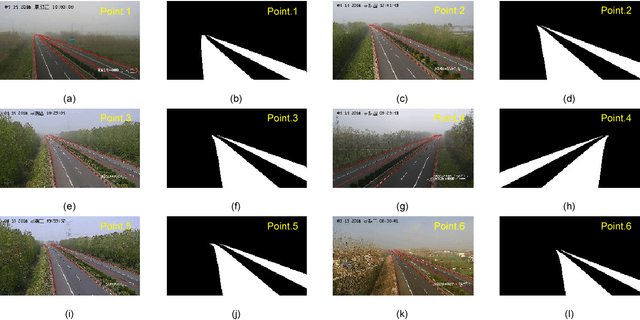

Abstract: Vision-based methods for visibility estimation can play a critical role in reducing traffic accidents caused by fog and haze. To overcome the disadvantages of current visibility estimation methods, we present a novel data-driven approach based on Gaussian image entropy and piecewise stationary time series analysis (SPEV). This is the first time that Gaussian image entropy has been used to estimate atmospheric visibility. To lessen the impact of landscape and sunlight illuminance on visibility estimation, we used region of interest (ROI) analysis and took relative ratios of image entropy into account, improving estimation accuracy. We assume that fog and haze blur images and can be treated as a piecewise stationary signal. We used piecewise stationary time series analysis to construct the piecewise causal relationship between image entropy and visibility. To obtain a real-world visibility measure during fog and haze, a subjective assessment was established through a study in which 36 subjects performed visibility observations. Finally, a total of two million videos were used to train the SPEV model and validate its effectiveness. The videos were collected from the constantly foggy and hazy Tongqi expressway in Jiangsu, China. The contrast model of visibility estimation was used for performance comparison, and the validation results of the SPEV model were encouraging: 99.14% of the relative errors were less than 10%.
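
The ROI and relative-entropy idea can be sketched as follows. This is a stand-in, not the SPEV method. The abstract does not define Gaussian image entropy, so plain Shannon entropy of the gray-level histogram is used here. The helper names, the `(top, left, height, width)` ROI layout, and the use of a clear-weather reference frame are all assumptions.

```python
import math
from collections import Counter
from typing import Sequence

# Grayscale image as rows of integer pixel values (0..255).
Image = Sequence[Sequence[int]]


def crop(img: Image, top: int, left: int, height: int, width: int) -> list[list[int]]:
    """Extract a rectangular region of interest (ROI) from the image."""
    return [list(row[left:left + width]) for row in img[top:top + height]]


def shannon_entropy(img: Image) -> float:
    """Shannon entropy (bits) of the gray-level histogram.

    Stand-in for the paper's Gaussian image entropy, which the abstract
    does not define.
    """
    pixels = [p for row in img for p in row]
    if not pixels:
        raise ValueError("empty image")
    n = len(pixels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(pixels).values())


def relative_entropy_ratio(frame: Image, reference: Image,
                           roi: tuple[int, int, int, int]) -> float:
    """ROI entropy of a frame divided by ROI entropy of a reference frame.

    Assumption: the reference is a clear-weather frame from the same camera,
    so the ratio factors out the fixed landscape content of the ROI.
    """
    ref_h = shannon_entropy(crop(reference, *roi))
    if ref_h == 0:
        raise ValueError("reference ROI has zero entropy")
    return shannon_entropy(crop(frame, *roi)) / ref_h


if __name__ == "__main__":
    # Toy 4x4 frames: the "clear" one has 4 gray levels, the "foggy" one 2.
    reference = [[0, 64, 128, 192] for _ in range(4)]
    foggy = [[100, 100, 120, 120] for _ in range(4)]
    print(relative_entropy_ratio(foggy, reference, (0, 0, 4, 4)))  # 0.5
```

A ratio below 1 means the ROI has lost gray-level detail relative to the reference, which is consistent with the assumption that fog and haze blur images. Mapping that ratio to a visibility distance (the piecewise time series step) is not attempted here.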
