Anastasios Dimou

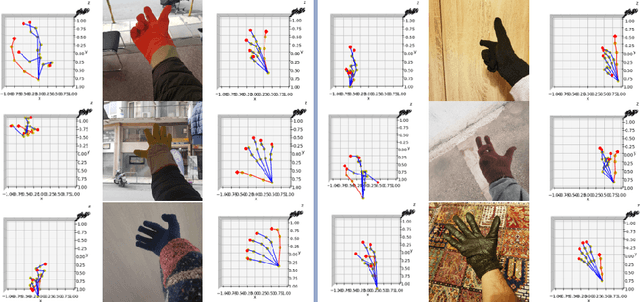

MC-hands-1M: A glove-wearing hand dataset for pose estimation

Oct 19, 2022

Abstract: The need for large amounts of carefully annotated data with complex labels for training computer vision modules continues to grow. Furthermore, although the research community offers state-of-the-art solutions to many problems, there are special cases, such as pose estimation and tracking of a glove-wearing hand, where general approaches tend to be inaccurate or fail completely. In this work, we present a synthetic dataset for 3D pose estimation of glove-wearing hands to demonstrate the value of data synthesis in computer vision. The dataset is used to fine-tune a public hand joint detection model, achieving strong performance on both synthetic and real images of glove-wearing hands.

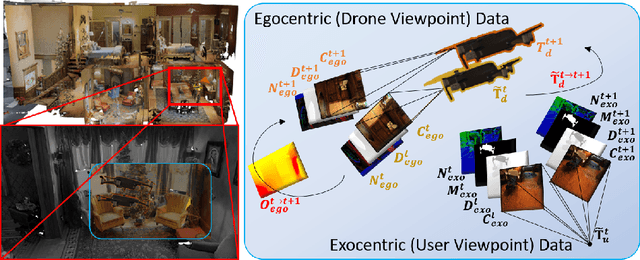

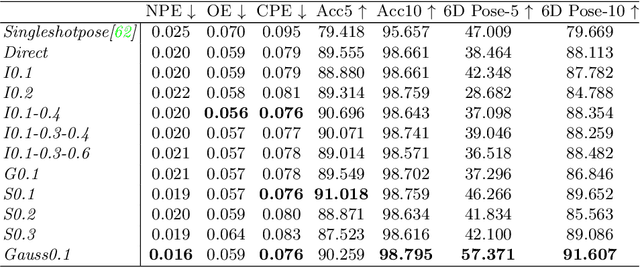

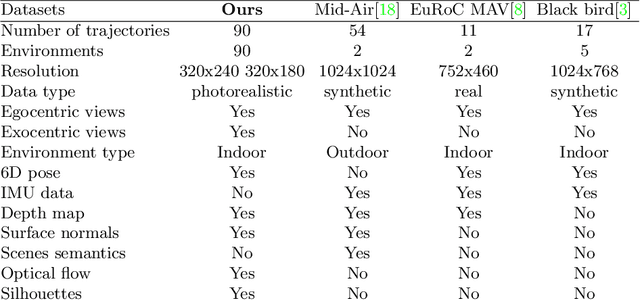

DronePose: Photorealistic UAV-Assistant Dataset Synthesis for 3D Pose Estimation via a Smooth Silhouette Loss

Aug 21, 2020

Abstract: In this work, we consider UAVs as cooperative agents supporting human users in their operations. In this context, 3D localisation of the UAV assistant is an important task that can facilitate the exchange of spatial information between the user and the UAV. To address this in a data-driven manner, we design a data synthesis pipeline to create a realistic multimodal dataset that includes both the exocentric user view and the egocentric UAV view. We then exploit the joint availability of photorealistic and synthesized inputs to train a single-shot monocular pose estimation model. During training, we leverage differentiable rendering to supplement a state-of-the-art direct regression objective with a novel smooth silhouette loss. Our results demonstrate the qualitative and quantitative performance gains of this loss over traditional silhouette objectives. Our data and code are available at https://vcl3d.github.io/DronePose