Amit Reza

Grid-based exoplanet atmospheric mass loss predictions through neural network

Feb 03, 2025

Abstract: The fast and accurate estimation of planetary mass-loss rates is critical for planet population and evolution modelling. We use machine learning (ML) for fast interpolation across an existing large grid of hydrodynamic upper atmosphere models, providing mass-loss rates for any planet inside the grid boundaries with superior accuracy compared to previously published interpolation schemes. We use an already available grid of about 11000 hydrodynamic upper atmosphere models for training and generate an additional grid of about 250 models for testing. We develop the ML interpolation scheme (dubbed "atmospheric Mass Loss INquiry frameworK"; MLink) using a Dense Neural Network and compare its results with those obtained using classical approaches (e.g. linear interpolation and radial basis function-based regression). Finally, we study the impact of the different interpolation schemes on the evolution of a small sample of carefully selected synthetic planets. MLink provides high-quality interpolation across the entire parameter space, significantly reducing both the number of points with large interpolation errors and the maximum interpolation error compared to previously available schemes. In most cases, evolutionary tracks computed with MLink and with classical schemes lead to comparable planetary parameters at Gyr timescales. However, particularly for planets close to the top edge of the radius gap, the difference between the planetary radii predicted at a given age by tracks computed with MLink and with classical interpolation schemes can exceed the typical observational uncertainties. Machine learning can be successfully used to estimate atmospheric mass-loss rates from model grids, paving the way to explore future larger and more complex grids of models that account for more physical processes.

Graph Neural Networks for Identifying Steady-State Behavior in Complex Networks

Feb 02, 2025

Abstract: In complex systems, information propagation can be classified as diffused (delocalized), weakly localized, or strongly localized. Can a machine learning model learn the behavior of a linear dynamical system on networks? In this work, we develop a graph neural network framework for identifying the steady-state behavior of the linear dynamical system. We show that our model learns the different states with high accuracy. To make the model explainable, we provide an analytical derivation of the forward and backward propagation in our framework. Finally, we validate our model on real-world graphs.

Machine learning-based classification for Single Photon Space Debris Light Curves

Nov 27, 2024

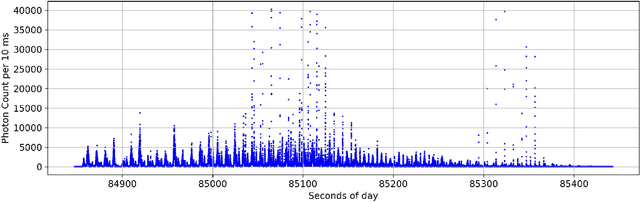

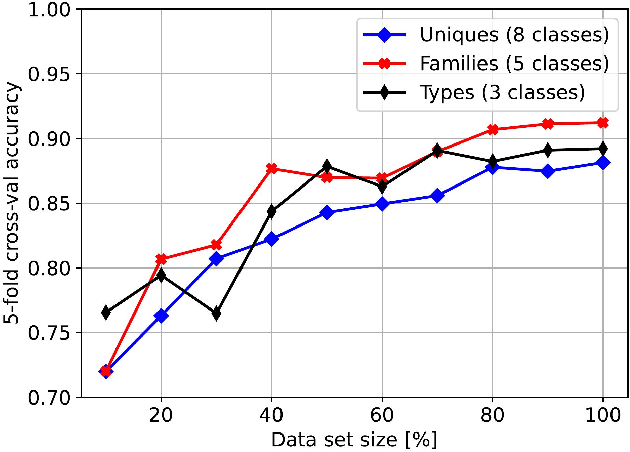

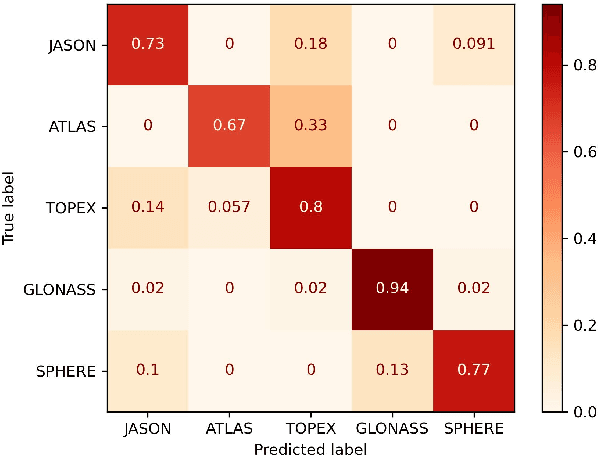

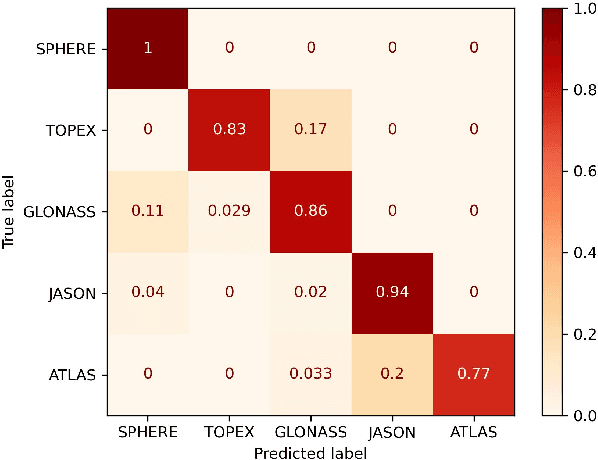

Abstract: The growing amount of man-made debris in Earth's orbit poses a threat to active satellite missions due to the risk of collision. Characterizing unknown debris is, therefore, of high interest. Light Curves (LCs) are temporal variations of object brightness and have been shown to contain information such as shape, attitude, and rotational state. Since 2015, the Satellite Laser Ranging (SLR) group of Space Research Institute (IWF) Graz has been building a space debris LC catalogue. The LCs are captured on a Single Photon basis, which sets them apart from CCD-based measurements. In recent years, Machine Learning (ML) models have emerged as a viable technique for analyzing LCs. This work aims to classify Single Photon Space Debris LCs using an ML framework. We explored LC classification using k-Nearest Neighbour (k-NN), Random Forest (RDF), XGBoost (XGB), and Convolutional Neural Network (CNN) classifiers in order to assess the difference in performance between traditional and deep models. Instead of performing classification directly on the LC data, we first extracted features from the data using an automated pipeline. We apply our models to three tasks: classifying individual objects, classifying objects grouped into families according to origin (e.g., GLONASS satellites), and classifying objects grouped into general types (e.g., rocket bodies). We successfully classified Space Debris LCs captured on a Single Photon basis, obtaining accuracies as high as 90.7%. Further, our experiments show that the classifiers provide better classification accuracy with automatically extracted features than with other methods.
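As a rough illustration of the simplest classifier named in this abstract, the sketch below runs k-NN on pre-extracted feature vectors. It is not the authors' pipeline: the two features (a rotation period and a brightness amplitude), the toy values, the class labels, and the function name `knn_predict` are all assumptions made for the example.

```python
import math
from collections import Counter


def knn_predict(train_features, train_labels, query, k=3):
    """Return the majority label among the k training points nearest to query."""
    # Pair each training point's Euclidean distance to the query with its label.
    distances = sorted(
        (math.dist(features, query), label)
        for features, label in zip(train_features, train_labels)
    )
    # Majority vote over the k closest neighbours.
    votes = Counter(label for _, label in distances[:k])
    return votes.most_common(1)[0][0]


# Hypothetical feature vectors: (rotation period, brightness amplitude).
train_features = [
    (1.0, 0.20), (1.2, 0.30), (0.9, 0.25),  # assumed "rocket_body" examples
    (5.0, 1.10), (5.5, 0.90), (4.8, 1.00),  # assumed "satellite" examples
]
train_labels = ["rocket_body"] * 3 + ["satellite"] * 3

label_a = knn_predict(train_features, train_labels, (1.1, 0.22))
label_b = knn_predict(train_features, train_labels, (5.1, 1.00))
print(label_a, label_b)
```

With real features on different scales, one would normally standardize each feature before computing distances, since Euclidean distance is otherwise dominated by the feature with the largest range.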

A Novel Momentum-Based Deep Learning Techniques for Medical Image Classification and Segmentation

Aug 11, 2024

Abstract: Accurately segmenting different organs from medical images is a critical prerequisite for computer-assisted diagnosis and intervention planning. This study proposes a deep learning-based approach for segmenting various organs from CT and MRI scans and classifying diseases. Our study introduces a novel technique integrating momentum within residual blocks for enhanced training dynamics in medical image analysis. We applied our method to two distinct tasks: segmenting liver, lung, and colon data and classifying abdominal pelvic CT and MRI scans. The proposed approach has shown promising results, outperforming state-of-the-art methods on publicly available benchmarking datasets. For instance, on the lung segmentation dataset, our approach yielded significant improvements over the TransNetR model, including a 5.72% increase in dice score, a 5.04% improvement in mean Intersection over Union (mIoU), an 8.02% improvement in recall, and a 4.42% improvement in precision. Hence, incorporating momentum led to state-of-the-art performance in both segmentation and classification tasks, representing a significant advancement in the field of medical imaging.
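For readers unfamiliar with the metrics quoted in this abstract, the sketch below computes the Dice score, Intersection over Union, precision, and recall for a single binary mask using their standard definitions. The flattened toy masks and the function names are assumptions for illustration; the paper's mIoU would additionally average the IoU over classes or images, which this sketch does not do.

```python
def confusion_counts(pred, truth):
    """Count true positives, false positives, and false negatives for binary masks."""
    tp = sum(1 for p, t in zip(pred, truth) if p == 1 and t == 1)
    fp = sum(1 for p, t in zip(pred, truth) if p == 1 and t == 0)
    fn = sum(1 for p, t in zip(pred, truth) if p == 0 and t == 1)
    return tp, fp, fn


def segmentation_metrics(pred, truth):
    """Return Dice, IoU, precision, and recall for one foreground class."""
    tp, fp, fn = confusion_counts(pred, truth)
    # Each ratio is defined as 1.0 when its denominator is zero (nothing to find or predict).
    dice = 2 * tp / (2 * tp + fp + fn) if (2 * tp + fp + fn) else 1.0
    iou = tp / (tp + fp + fn) if (tp + fp + fn) else 1.0
    precision = tp / (tp + fp) if (tp + fp) else 1.0
    recall = tp / (tp + fn) if (tp + fn) else 1.0
    return {"dice": dice, "iou": iou, "precision": precision, "recall": recall}


# Hypothetical flattened masks: 1 = organ pixel, 0 = background.
pred = [1, 1, 0, 0, 1, 0]
truth = [1, 0, 0, 1, 1, 0]

metrics = segmentation_metrics(pred, truth)
print(metrics)
```

For a single mask the two overlap metrics are related by Dice = 2·IoU / (1 + IoU), so they rank predictions identically, although their averages over a dataset can differ.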
