Amirmohammad Radmehr

Real-Time Diagnostic Integrity Meets Efficiency: A Novel Platform-Agnostic Architecture for Physiological Signal Compression

Jan 04, 2024

Abstract: Head-based signals such as EEG, EMG, EOG, and ECG collected by wearable systems will play a pivotal role in the clinical diagnosis, monitoring, and treatment of major brain disorders. However, transmitting large volumes of physiological signals in real time over extended periods consumes substantial power and time, limiting the viability of battery-dependent physiological monitoring wearables. This paper presents a novel deep-learning framework employing a variational autoencoder (VAE) for physiological signal compression to reduce wearables' computational complexity and energy consumption. Our approach achieves a compression ratio of 1:293 for spectrogram data, surpassing state-of-the-art compression techniques such as JPEG2000, H.264, Discrete Cosine Transform (DCT), and Huffman encoding, which are not well suited to physiological signals. We validate the efficacy of the compression algorithm using physiological signals collected from real patients in a hospital and deploy the solution on commonly used embedded AI chips (i.e., ARM Cortex V8 and Jetson Nano). The proposed framework achieves 91% seizure detection accuracy using XGBoost, confirming the approach's reliability, practicality, and scalability.
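To make the compression-ratio arithmetic concrete, the following is a minimal sketch and not the authors' implementation. It assumes the ratio is counted as original values per latent value at equal bit width, and it uses hypothetical sizes (a 128 x 128 spectrogram and a 64-value latent vector) that do not come from the paper. The helper names `compression_ratio` and `sample_latent` are illustrative; the second shows the standard VAE reparameterization step.

```python
import math
import random


def compression_ratio(original_values: int, latent_values: int) -> float:
    """Number of original values represented by each latent value."""
    if original_values <= 0 or latent_values <= 0:
        raise ValueError("sizes must be positive")
    return original_values / latent_values


def sample_latent(mean, log_var, rng):
    """Reparameterization trick: z = mean + sigma * eps, with eps ~ N(0, 1)."""
    return [
        m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
        for m, lv in zip(mean, log_var)
    ]


# Hypothetical sizes for illustration only (not taken from the paper).
spectrogram_values = 128 * 128
latent_values = 64

ratio = compression_ratio(spectrogram_values, latent_values)
print(f"compression ratio 1:{ratio:.0f}")

# Draw one latent sample from a made-up encoder output.
z = sample_latent([0.0, 1.0], [0.0, -2.0], random.Random(0))
print(len(z))
```

Under these assumptions only the latent vector would need to be transmitted by the wearable, which is where the power saving described in the abstract would come from.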

Experimental Study on the Imitation of the Human Head-and-Eye Pose Using the 3-DOF Agile Eye Parallel Robot with ROS and Mediapipe Framework

Nov 04, 2021

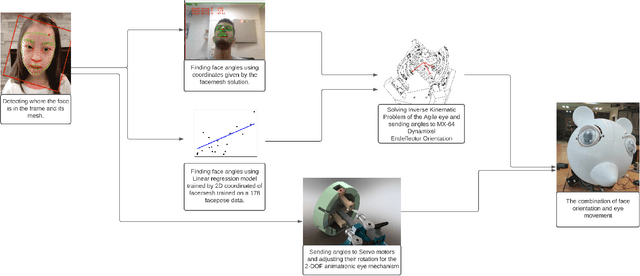

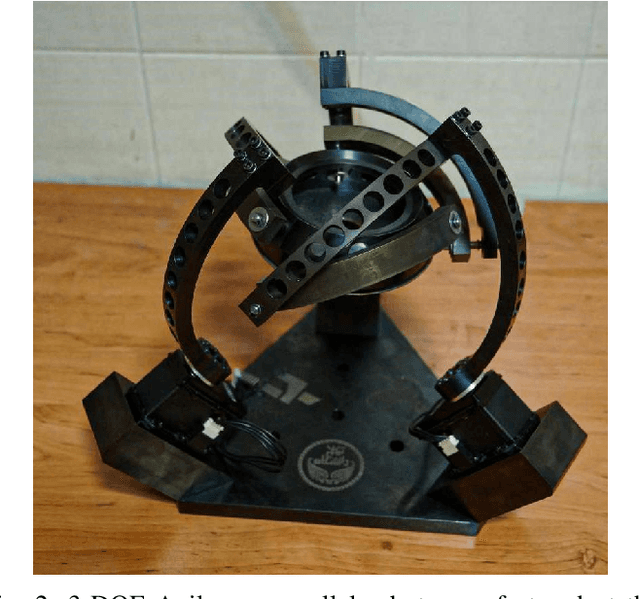

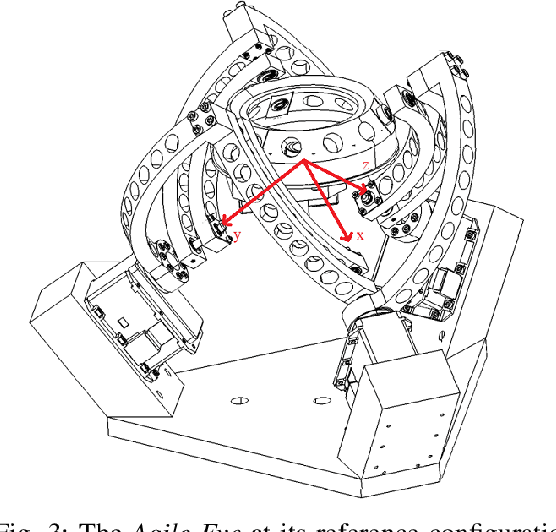

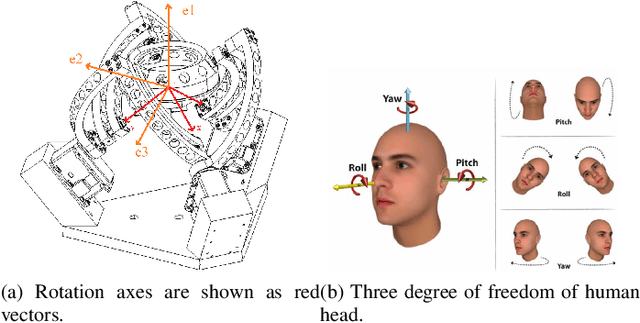

Abstract: In this paper, a method to mimic a human face and eyes is proposed, which can be regarded as a combination of computer vision techniques and neural network concepts. From a mechanical standpoint, a 3-DOF spherical parallel robot is used to imitate human head movement. For eye movement, a 2-DOF mechanism is attached to the end-effector of the 3-DOF spherical parallel mechanism. To obtain robust and reliable imitation results, meaningful information must be extracted from the face mesh to obtain the pose of the face, i.e., the roll, yaw, and pitch angles. To this end, two methods are proposed, each with its own pros and cons. The first method relies on the Mediapipe library, a machine learning solution for high-fidelity body pose tracking introduced by Google. In the second method, a linear regression model is trained on a gathered dataset of face pictures in different poses. In addition, a 3-DOF Agile Eye parallel robot is used to show that this robot can serve as a system similar to a human head, performing a 3-DOF rotational motion pattern. Furthermore, a 3D-printed face and a 2-DOF eye mechanism are fabricated to present the whole system in a more stylish way. Experimental tests, carried out on a ROS platform, demonstrate the effectiveness of the proposed methods for tracking human head and eye movement.
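The two pose-estimation ideas in the abstract can be illustrated with a minimal sketch under stated assumptions; it is not the authors' model and does not call Mediapipe. The function `roll_from_eyes` assumes two eye landmarks given as (x, y) image coordinates, and `fit_line` is a single-feature ordinary least squares fit standing in for the linear regression model. The feature (a normalized horizontal nose offset) and the training pairs are synthetic and hypothetical.

```python
import math


def roll_from_eyes(left_eye, right_eye):
    """Roll angle in degrees of the line through two eye landmarks.

    Coordinates are (x, y) in image space, where y grows downward,
    so the sign convention follows the image axes.
    """
    dx = right_eye[0] - left_eye[0]
    dy = right_eye[1] - left_eye[1]
    return math.degrees(math.atan2(dy, dx))


def fit_line(xs, ys):
    """Ordinary least squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Synthetic pairs: normalized nose offset -> yaw in degrees (made up).
offsets = [-0.4, -0.2, 0.0, 0.2, 0.4]
yaws = [-40.0, -20.0, 0.0, 20.0, 40.0]

slope, intercept = fit_line(offsets, yaws)
predicted_yaw = slope * 0.1 + intercept

print(round(roll_from_eyes((100.0, 120.0), (160.0, 120.0)), 3))
print(round(predicted_yaw, 3))
```

A real pipeline would regress all three angles from many landmark features; the single-feature fit is kept here only to show the shape of the computation.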
