Amar Shrestha

In-Hardware Learning of Multilayer Spiking Neural Networks on a Neuromorphic Processor

May 08, 2021

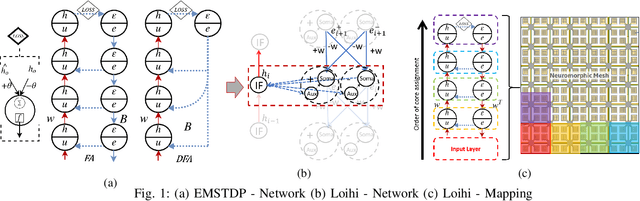

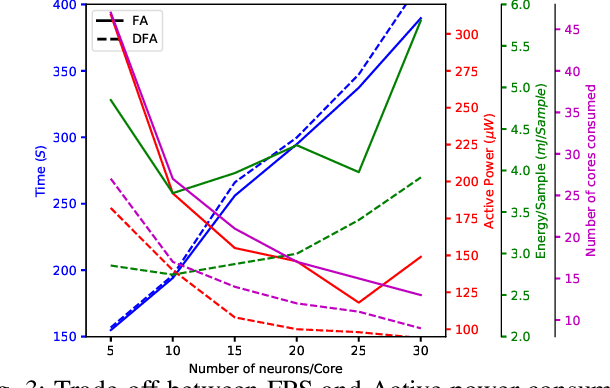

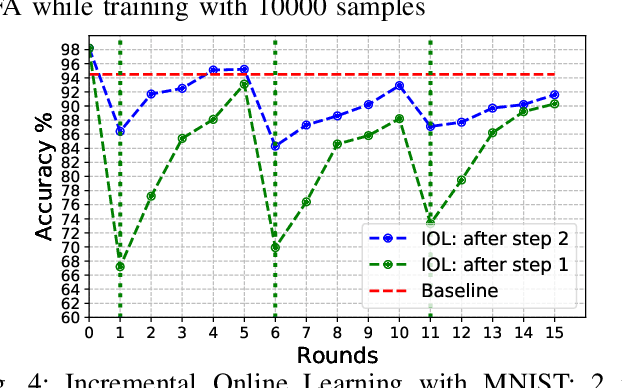

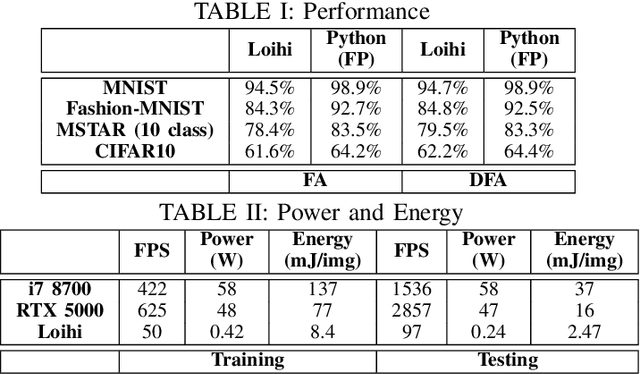

Abstract: Although widely used in machine learning, backpropagation cannot be applied directly to spiking neural network (SNN) training and is not feasible on a neuromorphic processor that emulates biological neurons and synapses. This work presents a spike-based backpropagation algorithm with biologically plausible local update rules and adapts it to fit the constraints of neuromorphic hardware. The algorithm is implemented on the Intel Loihi chip, enabling low-power, in-hardware, supervised online learning of multilayer SNNs for mobile applications. We test this implementation on the MNIST, Fashion-MNIST, CIFAR-10 and MSTAR datasets with promising performance and energy efficiency, and demonstrate the possibility of incremental online learning with the implementation.

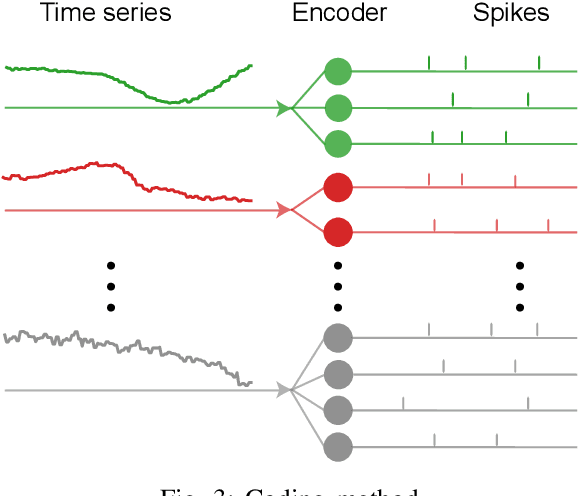

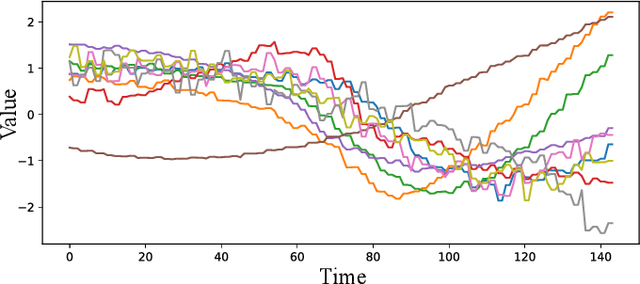

Multivariate Time Series Classification Using Spiking Neural Networks

Jul 07, 2020

Abstract: There is an increasing demand to process streams of temporal data in energy-limited scenarios such as embedded devices, driven by the advancement and expansion of the Internet of Things (IoT) and Cyber-Physical Systems (CPS). Spiking neural networks (SNNs) have drawn attention because they enable low power consumption by encoding and processing information as sparse spike events, which can be exploited for event-driven computation. Recent works also show SNNs' capability to process spatial-temporal information. Such advantages can be exploited by power-limited devices to process real-time sensor data. However, most existing SNN training algorithms focus on vision tasks and do not address temporal credit assignment. Furthermore, the widely adopted rate encoding ignores temporal information and is therefore not suitable for representing time series. In this work, we present an encoding scheme to convert time series into sparse spatial-temporal spike patterns. A training algorithm to classify spatial-temporal patterns is also proposed. The proposed approach is evaluated on multiple time series datasets in the UCR repository and achieves performance comparable to deep neural networks.
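To make the idea of turning a time series into sparse, timed spike events concrete, here is a minimal sketch of send-on-delta encoding, a common textbook scheme. It is not the encoding proposed in the paper, which the abstract does not specify; the function name `delta_encode` and the `threshold` parameter are illustrative assumptions.

```python
def delta_encode(series, threshold=0.5):
    """Emit (time_step, polarity) events whenever the signal has moved
    at least `threshold` away from the last reference value.

    Illustrative send-on-delta encoder; NOT the scheme from the paper.
    """
    events = []
    ref = series[0]  # reference value, updated each time an event fires
    for t, x in enumerate(series[1:], start=1):
        if x - ref >= threshold:
            events.append((t, +1))  # signal rose enough: positive spike
            ref = x
        elif ref - x >= threshold:
            events.append((t, -1))  # signal fell enough: negative spike
            ref = x
    return events


if __name__ == "__main__":
    signal = [0.0, 0.2, 0.8, 0.9, 0.1]
    print(delta_encode(signal))
```

Unlike a rate code, which would only count spikes, the event list keeps the time step at which each change occurred, and a flat signal produces no events at all.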

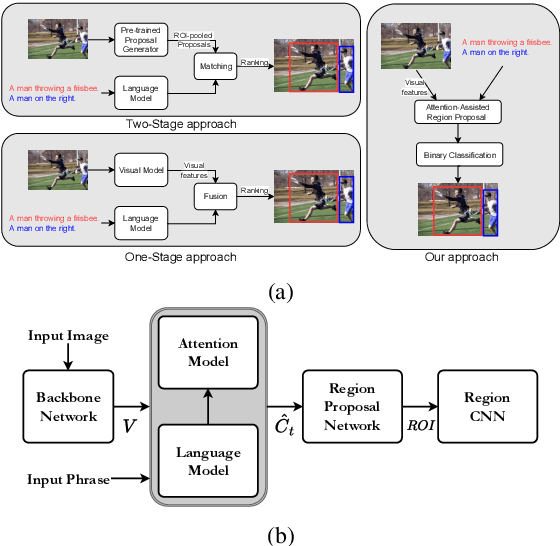

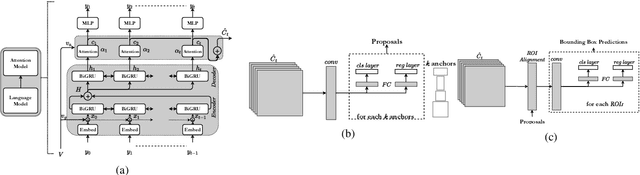

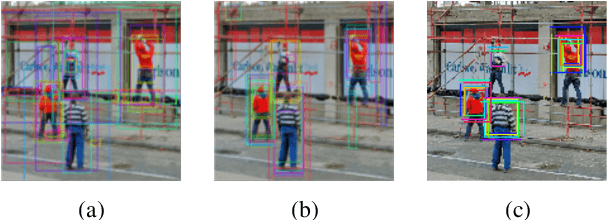

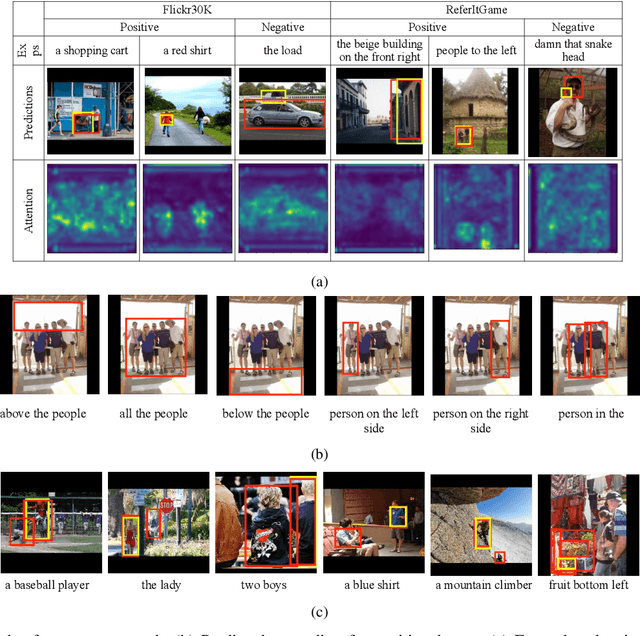

MAGNet: Multi-Region Attention-Assisted Grounding of Natural Language Queries at Phrase Level

Jun 06, 2020

Abstract: Grounding free-form textual queries necessitates an understanding of these textual phrases and their relation to the visual cues to reliably reason about the described locations. Spatial attention networks are known to learn this relationship and focus their gaze on salient objects in the image. Thus, we propose to utilize spatial attention networks for image-level visual-textual fusion, preserving local (word) and global (phrase) information to refine region proposals with an in-network Region Proposal Network (RPN) and detect single or multiple regions for a phrase query. We focus only on the phrase query - ground truth pair (referring expression) for a model independent of the constraints of the datasets, i.e., additional attributes, context, etc. For one such referring expression dataset, ReferIt game, our Multi-region Attention-assisted Grounding network (MAGNet) achieves over 12% improvement over the state-of-the-art. Without the context from image captions and attribute information in Flickr30k Entities, we still achieve competitive results compared to the state-of-the-art.

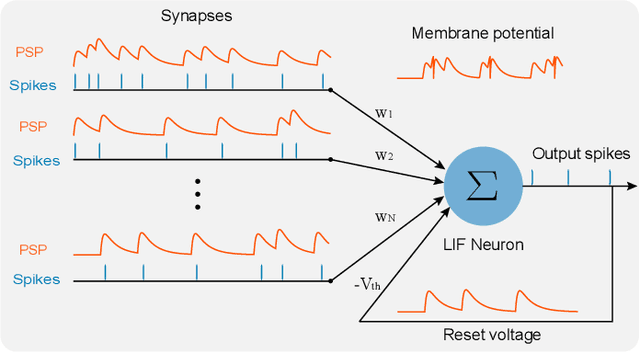

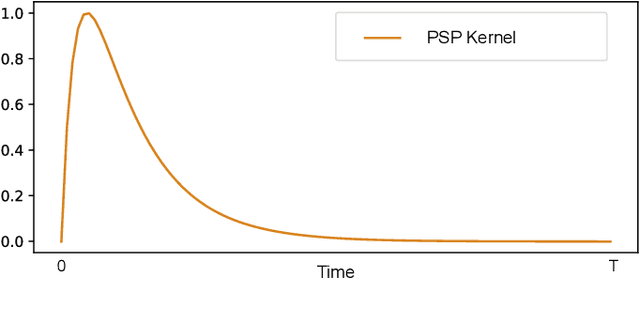

Exploiting Neuron and Synapse Filter Dynamics in Spatial Temporal Learning of Deep Spiking Neural Network

Feb 19, 2020

Abstract: The recently discovered spatial-temporal information processing capability of bio-inspired spiking neural networks (SNNs) has enabled some interesting models and applications. However, designing large-scale, high-performance models remains a challenge due to the lack of robust training algorithms. A bio-plausible SNN model with spatial-temporal properties is a complex dynamic system. Each synapse and neuron behaves as a filter capable of preserving temporal information. Because such neuron dynamics and filter effects are ignored in existing training algorithms, the SNN degrades into a memoryless system and loses the ability to process temporal signals. Furthermore, spike timing plays an important role in information representation, but conventional rate-based spike coding models only consider spike trains statistically and discard the information carried by their temporal structure. To address the above issues and exploit the temporal dynamics of SNNs, we formulate the SNN as a network of infinite impulse response (IIR) filters with neuron nonlinearity. We propose a training algorithm that is capable of learning spatial-temporal patterns by searching for the optimal synapse filter kernels and weights. The proposed model and training algorithm are applied to construct associative memories and classifiers for synthetic and public datasets including MNIST, NMNIST, DVS 128, etc., and their accuracy outperforms state-of-the-art approaches.
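The view of a synapse and neuron as filters with memory can be illustrated with a small simulation. The sketch below chains two first-order IIR filters (a synaptic current and a leaky membrane) followed by a threshold nonlinearity. It is a generic leaky integrate-and-fire model under assumed parameters, not the paper's formulation or training algorithm; `simulate_neuron`, `syn_decay`, `mem_decay`, and `threshold` are hypothetical names and values.

```python
def simulate_neuron(spikes_in, weight=1.0, syn_decay=0.8,
                    mem_decay=0.9, threshold=1.0):
    """Pass a binary spike train through a first-order IIR synapse filter
    and a leaky membrane, then apply a firing threshold.

    Generic illustration with assumed parameters, not the paper's model.
    """
    syn = 0.0  # synaptic current: state of the first IIR filter
    mem = 0.0  # membrane potential: state of the second IIR filter
    spikes_out = []
    for s in spikes_in:
        syn = syn_decay * syn + weight * s  # y[t] = a * y[t-1] + w * x[t]
        mem = mem_decay * mem + syn         # leaky integration of the current
        if mem >= threshold:
            spikes_out.append(1)
            mem = 0.0                       # hard reset after firing
        else:
            spikes_out.append(0)
    return spikes_out


if __name__ == "__main__":
    # A single input spike at t=0 with a sub-threshold weight.
    print(simulate_neuron([1, 0, 0, 0], weight=0.5))
```

With a weight of 0.5 the single input spike does not trigger an immediate output; the filter states carry it forward until the membrane crosses the threshold two steps later. Removing the filter states would leave a memoryless unit that could never respond after the input step.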

High-Level Plan for Behavioral Robot Navigation with Natural Language Directions and R-NET

Jan 08, 2020

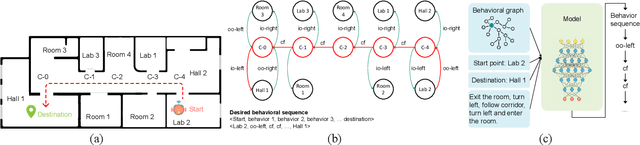

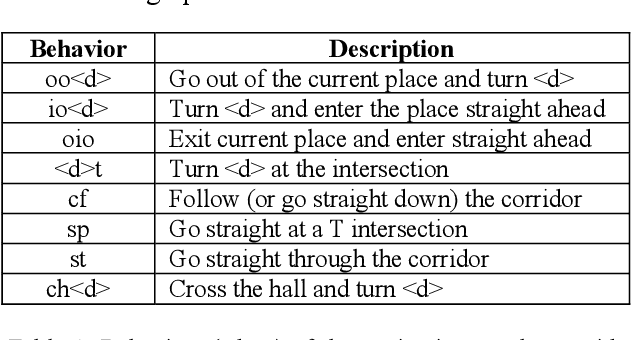

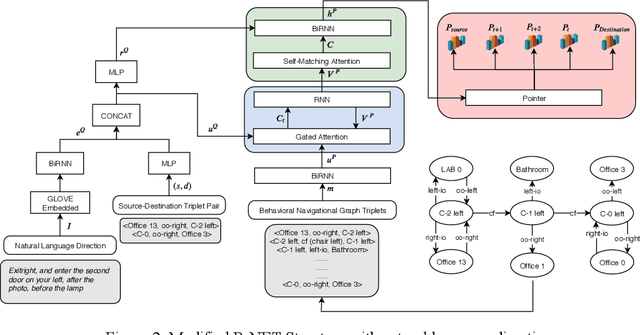

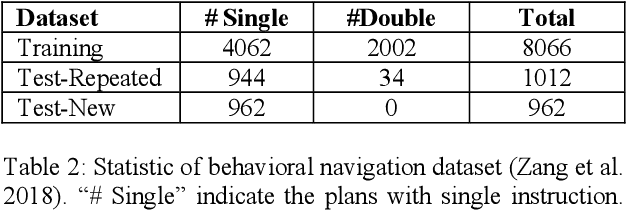

Abstract: When the navigational environment is known, it can be represented as a graph where landmarks are nodes, the robot behaviors that move from node to node are edges, and the route is a set of behavioral instructions. Finding the route from source to destination can be viewed as a combinatorial optimization problem in which the path is a sequential subset of a set of discrete items. The pointer network is an attention-based recurrent network that is suitable for such a task. In this paper, we utilize a modified R-NET with gated attention and self-matching attention to translate natural language instructions into a high-level plan for behavioral robot navigation. The model develops an understanding of the behavioral navigational graph, which enables the pointer network to produce a sequence of behaviors representing the path. Tests on the navigation graph dataset show that our model outperforms the state-of-the-art approach for both known and unknown environments.
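The graph representation described above (landmarks as nodes, behaviors as edges, a route as a sequence of behaviors) can be sketched with plain data structures. The example below uses breadth-first search to recover a behavior sequence; this stands in for, and is much simpler than, the R-NET and pointer-network model in the paper. The landmark and behavior names in `NAV_GRAPH` and the function `plan_route` are invented for illustration.

```python
from collections import deque

# Hypothetical behavioral navigation graph:
# landmark -> list of (behavior, next_landmark) edges.
NAV_GRAPH = {
    "office": [("exit_door", "corridor")],
    "corridor": [("follow_corridor", "junction"), ("enter_door", "office")],
    "junction": [("turn_left", "kitchen"), ("turn_right", "lab")],
}


def plan_route(graph, source, goal):
    """Return the shortest list of behaviors leading from source to goal,
    or None if the goal is unreachable. Plain BFS, not the paper's model."""
    queue = deque([(source, [])])
    visited = {source}
    while queue:
        node, behaviors = queue.popleft()
        if node == goal:
            return behaviors
        for behavior, nxt in graph.get(node, []):
            if nxt not in visited:
                visited.add(nxt)
                queue.append((nxt, behaviors + [behavior]))
    return None


if __name__ == "__main__":
    print(plan_route(NAV_GRAPH, "office", "lab"))
```

The returned list is the kind of high-level plan the abstract refers to: an ordered sequence of edge labels rather than a list of coordinates.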

Scalable NoC-based Neuromorphic Hardware Learning and Inference

Sep 18, 2018

Abstract: Bio-inspired neuromorphic hardware is a research direction that aims to approach the brain's computational power and energy efficiency. Spiking neural networks (SNNs) encode information as sparsely distributed spike trains and employ the spike-timing-dependent plasticity (STDP) mechanism for learning. Existing hardware implementations of SNNs are limited in scale or do not have in-hardware learning capability. In this work, we propose a low-cost, scalable Network-on-Chip (NoC) based SNN hardware architecture with fully distributed in-hardware STDP learning capability. All hardware neurons work in parallel and communicate through the NoC. This enables the chip-level interconnection, scalability and reconfigurability necessary for deploying different applications. The hardware is applied to learn MNIST digits as an evaluation of its learning capability. We explore the design space to study the trade-offs between speed, area and energy. We also discuss how to use this procedure to find the optimal architecture configuration.
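For readers unfamiliar with STDP, the following is a minimal sketch of the textbook pair-based rule: a synapse is strengthened when the presynaptic spike precedes the postsynaptic spike and weakened in the opposite order, with an effect that decays exponentially with the time difference. The abstract does not state which STDP variant the hardware implements, so the function `stdp_delta_w` and the constants `a_plus`, `a_minus`, and `tau` are assumptions chosen for illustration.

```python
import math


def stdp_delta_w(t_pre, t_post, a_plus=0.01, a_minus=0.012, tau=20.0):
    """Weight change for one pre/post spike pair under pair-based STDP.

    Textbook rule with assumed constants; times are in arbitrary units.
    """
    dt = t_post - t_pre
    if dt > 0:
        # Pre fired before post: potentiation, weaker for larger gaps.
        return a_plus * math.exp(-dt / tau)
    if dt < 0:
        # Post fired before pre: depression, weaker for larger gaps.
        return -a_minus * math.exp(dt / tau)
    return 0.0  # simultaneous spikes: no change in this sketch


if __name__ == "__main__":
    print(stdp_delta_w(t_pre=10.0, t_post=15.0))  # positive change
    print(stdp_delta_w(t_pre=15.0, t_post=10.0))  # negative change
```

Because the update depends only on the spike times of the two neurons that a synapse connects, a rule of this kind can be computed locally, which is what makes a fully distributed in-hardware implementation plausible.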
