Alvaro Veiga

Hierarchical Classification of Financial Transactions Through Context-Fusion of Transformer-based Embeddings and Taxonomy-aware Attention Layer

Dec 12, 2023

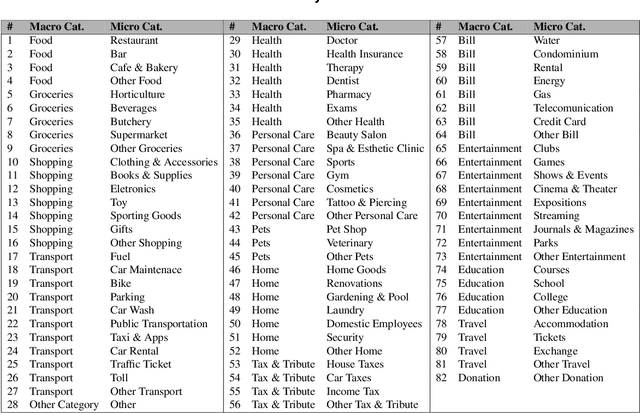

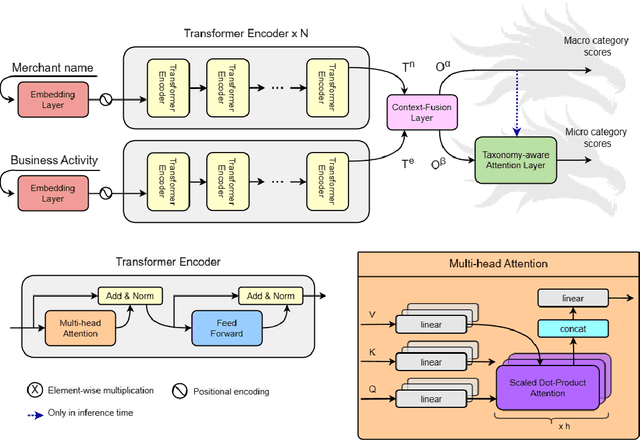

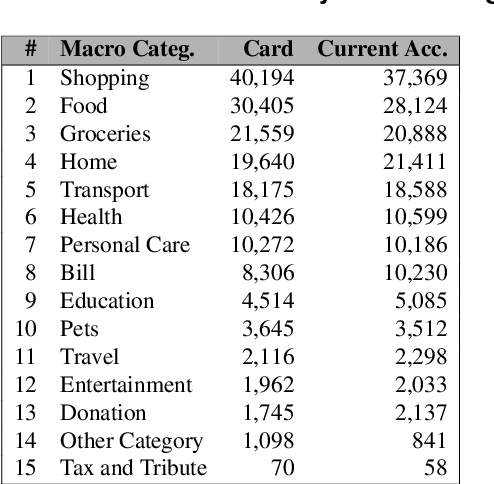

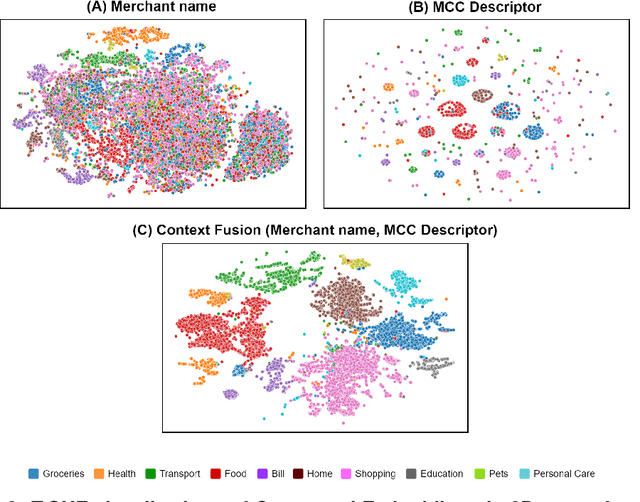

Abstract: This work proposes the Two-headed DragoNet, a Transformer-based model for hierarchical multi-label classification of financial transactions. Our model is based on a stack of Transformer encoder layers that generate contextual embeddings from two short textual descriptors (merchant name and business activity), followed by a Context Fusion layer and two output heads that classify transactions according to a hierarchical two-level taxonomy (macro and micro categories). Finally, our proposed Taxonomy-aware Attention Layer corrects predictions that break the categorical hierarchy rules defined in the given taxonomy. Our proposal outperforms classical machine learning methods in macro-category classification experiments, achieving an F1-score of 93% on a card dataset and 95% on a current account dataset.
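To make the idea of a hierarchy-consistent prediction concrete, the following is a minimal sketch of one simple way to reconcile two output heads with a two-level taxonomy. It is not the paper's Taxonomy-aware Attention Layer, whose internals the abstract does not describe. It swaps in a plain joint-probability search over taxonomy-valid (macro, micro) pairs. The taxonomy, category names, scores, and function names are all hypothetical.

```python
import math

# Hypothetical two-level taxonomy: macro category -> allowed micro categories.
TAXONOMY = {
    "food": ["groceries", "restaurants"],
    "transport": ["fuel", "public_transit"],
}


def softmax(scores):
    """Turn a dict of raw scores into a dict of probabilities."""
    top = max(scores.values())
    exps = {name: math.exp(value - top) for name, value in scores.items()}
    total = sum(exps.values())
    return {name: value / total for name, value in exps.items()}


def consistent_prediction(macro_scores, micro_scores, taxonomy=TAXONOMY):
    """Return the taxonomy-valid (macro, micro) pair with the highest
    product of head probabilities. This is an illustrative stand-in,
    not the attention-based correction proposed in the paper."""
    p_macro = softmax(macro_scores)
    p_micro = softmax(micro_scores)
    valid_pairs = (
        (macro, micro)
        for macro, micros in taxonomy.items()
        for micro in micros
    )
    return max(valid_pairs, key=lambda pair: p_macro[pair[0]] * p_micro[pair[1]])


# Hypothetical head outputs: taken separately, the heads pick "food" and
# "fuel", a pair that the taxonomy does not allow.
macro_scores = {"food": 2.0, "transport": 1.5}
micro_scores = {
    "groceries": 0.1,
    "restaurants": 0.2,
    "fuel": 3.0,
    "public_transit": 0.0,
}
prediction = consistent_prediction(macro_scores, micro_scores)
print(prediction)  # ('transport', 'fuel')
```

In this toy case the micro head is far more confident than the macro head, so the search resolves the conflict by changing the macro category rather than the micro one.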

BooST: Boosting Smooth Trees for Partial Effect Estimation in Nonlinear Regressions

Aug 20, 2018

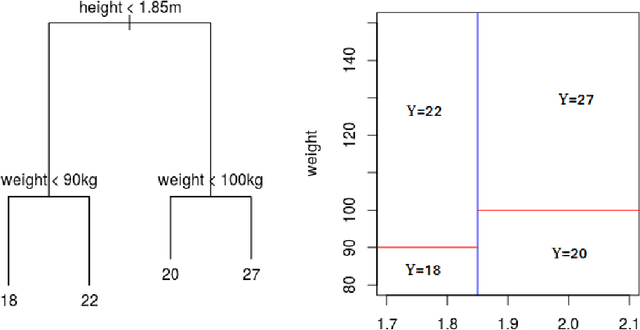

Abstract: In this paper we introduce a new machine learning (ML) model for nonlinear regression called Boosting Smooth Transition Regression Trees (BooST). The main advantage of the BooST model is that it estimates the derivatives (partial effects) of very general nonlinear models, making the mapping between the covariates and the dependent variable easier to interpret than with other tree-based models, such as Random Forests. We provide some asymptotic theory that shows consistency of the partial derivative estimates, and we present some examples on both simulated and real data.
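The reason a smooth tree yields partial effects can be illustrated with a single split. The sketch below assumes a logistic transition function, which the abstract does not specify, and it covers one split on one covariate rather than a full boosted ensemble. All function and parameter names (`gamma` for the transition steepness, `c` for the split location, `b_left` and `b_right` for the leaf values) are hypothetical.

```python
import math


def logistic(x, gamma, c):
    """Smooth stand-in for the hard indicator x > c (assumed logistic form)."""
    return 1.0 / (1.0 + math.exp(-gamma * (x - c)))


def smooth_split(x, b_left, b_right, gamma, c):
    """Prediction of one smooth split: a weighted blend of two leaf values."""
    weight = logistic(x, gamma, c)
    return b_left * (1.0 - weight) + b_right * weight


def smooth_split_derivative(x, b_left, b_right, gamma, c):
    """Analytical partial effect d(prediction)/dx of the split above.
    A hard split has no useful derivative; the smooth one does."""
    weight = logistic(x, gamma, c)
    return (b_right - b_left) * gamma * weight * (1.0 - weight)


# Hypothetical parameters, evaluated at the split location x = c.
params = dict(b_left=1.0, b_right=3.0, gamma=2.0, c=0.0)
value = smooth_split(0.0, **params)
slope = smooth_split_derivative(0.0, **params)

# Cross-check the analytical derivative against a central finite difference.
h = 1e-5
numeric_slope = (smooth_split(h, **params) - smooth_split(-h, **params)) / (2 * h)

print(value)  # 2.0
print(slope)  # 1.0
```

Because each split is differentiable in the covariate, a sum of such trees is differentiable too, which is what makes derivative estimates available in the first place.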
