Alexander Raß

The Convergence Indicator: Improved and completely characterized parameter bounds for actual convergence of Particle Swarm Optimization

Jun 06, 2020

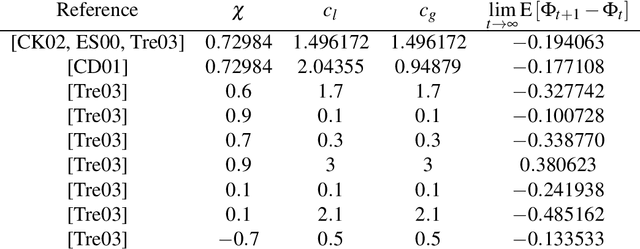

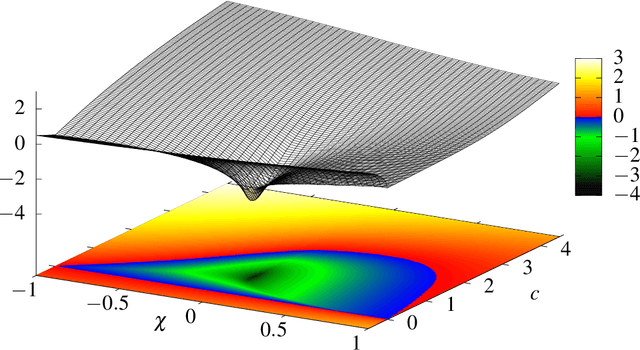

Abstract: Particle Swarm Optimization (PSO) is a meta-heuristic for continuous black-box optimization problems. In this paper, we focus on the convergence of the particle swarm, i.e., the exploitation phase of the algorithm. We introduce a new convergence indicator that can be used to determine whether the particles eventually converge to a single point or diverge. Using this indicator, we derive the actual bounds that completely characterize the parameter regions leading to a converging swarm. Compared to the bounds induced by converging variance, which are usually used in the literature, our bounds extend the parameter regions in which convergence is guaranteed. To evaluate our criterion, we describe a numerical approximation using cubic spline interpolation. Finally, we provide experiments showing that our concept, formulas and the resulting convergence bounds represent the actual behavior of PSO.
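To make the contrast between variance-based bounds and actual convergence concrete, here is a minimal sketch under stated assumptions; it is not the paper's convergence indicator. It models one particle in one dimension during stagnation, assuming both attractors are fixed at the same point (p = g = 0), with the standard inertia-weight update. `poli_variance_bound_holds` encodes the variance-based (order-2) condition as it is commonly stated in the literature, and `growth_rate` (a hypothetical helper) empirically estimates the exponential growth rate of the particle's distance to the attractor: a negative rate means the particle converges, a positive one means it diverges.

```python
import math
import random


def poli_variance_bound_holds(w: float, c1: float, c2: float) -> bool:
    """Variance-based (order-2) stability condition commonly cited for PSO:
    |w| < 1 and 0 < c1 + c2 < 24 * (1 - w**2) / (7 - 5 * w)."""
    c = c1 + c2
    return abs(w) < 1 and 0 < c < 24 * (1 - w * w) / (7 - 5 * w)


def growth_rate(w: float, c1: float, c2: float,
                steps: int = 20_000, seed: int = 0) -> float:
    """Empirical exponential growth rate of one particle's distance to its
    attractor in one dimension, ASSUMING stagnation with p = g = 0.
    Negative: the particle converges; positive: it diverges."""
    rng = random.Random(seed)
    x_prev, x = 0.6, 0.8  # (x_{t-1}, x_t), a unit-length start state
    total = 0.0
    for _ in range(steps):
        # v_{t+1} = w*v_t + c1*r1*(p - x_t) + c2*r2*(g - x_t) and
        # x_{t+1} = x_t + v_{t+1}, with v_t = x_t - x_{t-1} and p = g = 0, give
        # x_{t+1} = (1 + w - c1*r1 - c2*r2) * x_t - w * x_{t-1}.
        a = 1 + w - c1 * rng.random() - c2 * rng.random()
        x_prev, x = x, a * x - w * x_prev
        norm = math.hypot(x, x_prev)
        total += math.log(norm)
        # Rescale the state so it neither overflows nor underflows.
        x, x_prev = x / norm, x_prev / norm
    return total / steps
```

For example, `growth_rate(0.5, 1.0, 1.0)` is negative (these parameters also satisfy the variance bound), while for w = 1.2 the update matrix has determinant w > 1, which forces a positive rate. The abstract's point is that the true convergence region is larger than the variance-based one, so a parameter choice can fail `poli_variance_bound_holds` and still converge.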

Efficient Computation of Probabilistic Dominance in Robust Multi-Objective Optimization

Oct 18, 2019

Abstract: Real-world problems typically require the simultaneous optimization of several, often conflicting, objectives. Many of these multi-objective optimization problems are characterized by wide ranges of uncertainty in their decision variables or objective functions, which further increases the complexity of optimization. To cope with such uncertainties, robust optimization has been widely studied, with the aim of distinguishing between candidate solutions whose uncertain objectives are specified by confidence intervals, probability distributions or sampled data. However, most existing techniques either fail to consider the actual distributions or assume that the uncertainties follow uniform or Gaussian distributions. This paper introduces an empirical approach that enables an efficient comparison of candidate solutions with uncertain objectives that can follow arbitrary distributions. Given two candidate solutions under comparison, the proposed operator calculates the probability that one solution dominates the other in terms of each uncertain objective. It can replace the standard comparison operator of existing optimization techniques, such as evolutionary algorithms, to enable the discovery of robust solutions to problems with multiple uncertain objectives. This paper also proposes incorporating various uncertainties into well-known multi-objective problems to provide a benchmark for evaluating uncertainty-aware optimization techniques. The proposed comparison operator and benchmark suite are integrated into an existing optimization tool that features a selection of multi-objective optimization problems and algorithms. Experiments show that, in comparison with existing techniques, the proposed approach achieves higher optimization quality at lower overhead.
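A per-objective probability of one solution beating another can be estimated directly from sampled objective values. The sketch below is one plausible way to do this efficiently and is not necessarily the paper's operator. It assumes minimization, independent samples for the two solutions, and ties counted as one half. Sorting one sample set and using binary search brings the cost from O(n·m) pairwise comparisons down to O((n + m) log m). The names `prob_less` and `per_objective_dominance` are hypothetical.

```python
from bisect import bisect_left, bisect_right


def prob_less(a_samples, b_samples):
    """Estimate P(A < B) for independent A and B from their samples,
    counting ties as 1/2 (minimisation: A 'wins' when it is smaller)."""
    b_sorted = sorted(b_samples)
    n_b = len(b_sorted)
    wins = 0.0
    for a in a_samples:
        lo = bisect_left(b_sorted, a)   # number of b values < a
        hi = bisect_right(b_sorted, a)  # number of b values <= a
        # b values greater than a are full wins; ties are half wins.
        wins += (n_b - hi) + 0.5 * (hi - lo)
    return wins / (len(a_samples) * n_b)


def per_objective_dominance(samples_x, samples_y):
    """samples_x[k] and samples_y[k] hold the sampled values of objective k
    for solutions x and y; returns P(x beats y) for each objective."""
    return [prob_less(sx, sy) for sx, sy in zip(samples_x, samples_y)]
```

By construction `prob_less(a, b) + prob_less(b, a)` equals 1, so the estimate can stand in for a strict "better than" comparison, for example by treating a value above some threshold as a win.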

Explanation of Stagnation at Points that are not Local Optima in Particle Swarm Optimization by Potential Analysis

Apr 30, 2015

Abstract: Particle Swarm Optimization (PSO) is a nature-inspired meta-heuristic for solving continuous optimization problems. In the literature, the potential of the swarm's particles has been used to show that a slightly modified PSO guarantees convergence to local optima. Here, we prove that under specific circumstances the unmodified PSO, even with swarm parameters known (from the literature) to be good, almost surely does not converge to a local optimum. This undesirable phenomenon is called stagnation. For this purpose, the particles' potential in each dimension is analyzed mathematically. Additionally, some reasonable assumptions on the behavior of the particles' potential are made. Depending on the objective function and, interestingly, the number of particles, the potential in some dimensions may decrease much faster than in other dimensions. Therefore, these faster-decreasing dimensions lose relevance, i.e., the contribution of their entries to the decisions about attractor updates becomes insignificant and, with positive probability, they never regain relevance. If Brownian motion is assumed to approximate the time-dependent drop of potential, practically relevant, i.e., large, values for this probability are obtained. Finally, experiments on chosen multidimensional polynomials of degree two show that the required circumstances occur quite frequently. Furthermore, experiments show that the described stagnation phenomenon occurs even when the very simple sphere function is processed. Consequently, unmodified PSO does not converge to any local optimum of the chosen functions for the tested parameter settings.
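The abstract does not state the exact Brownian-motion model, so the following is only a hypothetical illustration of this kind of "never regains relevance" calculation. Assume that the gap X_t between a dimension's log-potential and that of the other dimensions behaves like Brownian motion with drift μ > 0 and volatility σ, starting at a > 0, and that the dimension regains relevance only if X_t returns to 0. A standard result then gives P(never hit 0) = 1 − exp(−2μa/σ²). The helper names and the modelling choice are assumptions, not the paper's.

```python
import math
import random


def never_hit_probability(a: float, mu: float, sigma: float) -> float:
    """P(X_t > 0 for all t >= 0) for X_t = a + mu*t + sigma*W_t, a > 0.
    Equals 1 - exp(-2*mu*a/sigma**2) for mu > 0 and 0 for mu <= 0.
    (Hypothetical model of a dimension never regaining relevance.)"""
    if mu <= 0:
        return 0.0
    return 1.0 - math.exp(-2.0 * mu * a / sigma ** 2)


def simulate_never_hit(a, mu, sigma, horizon=20.0, dt=0.01, paths=1000, seed=0):
    """Monte Carlo cross-check with an Euler scheme on [0, horizon].
    Discrete monitoring and the finite horizon both miss some crossings,
    so this estimate is biased slightly upwards."""
    rng = random.Random(seed)
    steps = int(horizon / dt)
    sd = sigma * math.sqrt(dt)
    survived = 0
    for _ in range(paths):
        x = a
        for _ in range(steps):
            x += mu * dt + rng.gauss(0.0, sd)
            if x <= 0.0:
                break  # the gap closed: the dimension regained relevance
        else:
            survived += 1  # loop finished without hitting 0
    return survived / paths
```

With a = 1, μ = 0.5 and σ = 1 the closed form gives 1 − e⁻¹, so under these assumed values the dimension would stay irrelevant forever with probability above one half.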