Alexander Kott

Cybertrust: From Explainable to Actionable and Interpretable AI (AI2)

Jan 26, 2022

Abstract: To benefit from AI advances, users and operators of AI systems must have reason to trust them. Trust arises from multiple interactions, where predictable and desirable behavior is reinforced over time. Providing the system's users with some understanding of AI operations can support predictability, but forcing AI to explain itself risks constraining AI capabilities to only those reconcilable with human cognition. We argue that AI systems should be designed with features that build trust by bringing decision-analytic perspectives and formal tools into AI. Instead of trying to achieve explainable AI, we should develop interpretable and actionable AI. Actionable and Interpretable AI (AI2) will incorporate explicit quantifications and visualizations of user confidence in AI recommendations. In doing so, it will allow examining and testing of AI system predictions to establish a basis for trust in the systems' decision making and ensure broad benefits from deploying and advancing their computational capabilities.

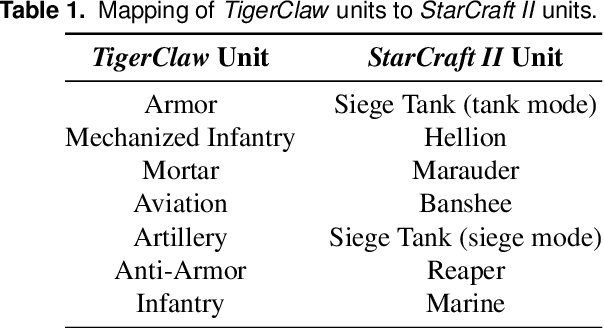

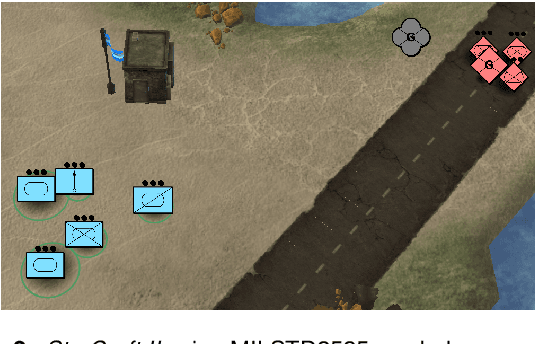

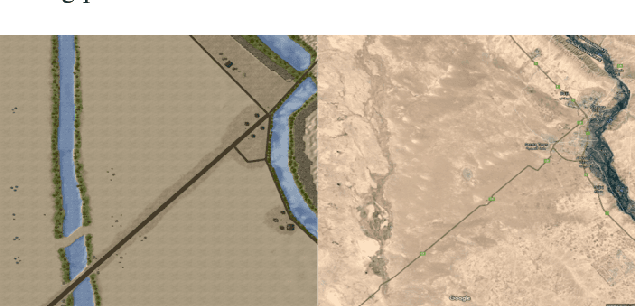

On games and simulators as a platform for development of artificial intelligence for command and control

Oct 21, 2021

Abstract: Games and simulators can be a valuable platform to execute complex multi-agent, multiplayer, imperfect information scenarios with significant parallels to military applications: multiple participants manage resources and make decisions that command assets to secure specific areas of a map or neutralize opposing forces. These characteristics have attracted the artificial intelligence (AI) community by supporting development of algorithms with complex benchmarks and the capability to rapidly iterate over new ideas. The success of artificial intelligence algorithms in real-time strategy games such as StarCraft II has also attracted the attention of the military research community aiming to explore similar techniques in military counterpart scenarios. Aiming to bridge the connection between games and military applications, this work discusses past and current efforts on how games and simulators, together with the artificial intelligence algorithms, have been adapted to simulate certain aspects of military missions and how they might impact the future battlefield. This paper also investigates how advances in virtual reality and visual augmentation systems open new possibilities in human interfaces with gaming platforms and their military parallels.

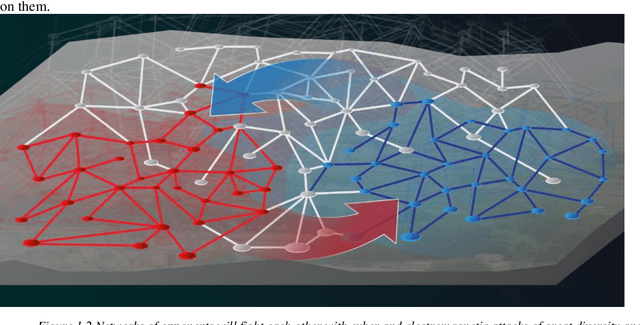

Intelligent Autonomous Things on the Battlefield

Feb 26, 2019

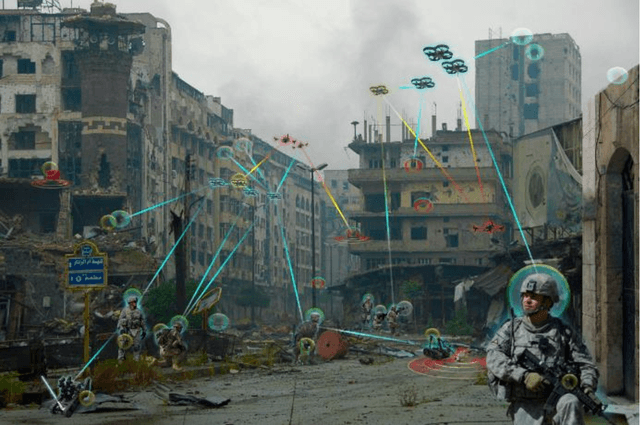

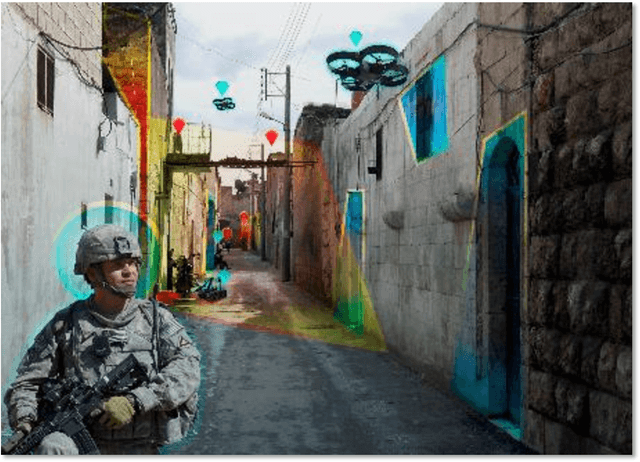

Abstract: Numerous, artificially intelligent, networked things will populate the battlefield of the future, operating in close collaboration with human warfighters, and fighting as teams in highly adversarial environments. This chapter explores the characteristics, capabilities and intelligence required of such a network of intelligent things and humans - Internet of Battle Things (IOBT). The IOBT will experience unique challenges that are not yet well addressed by the current generation of AI and machine learning.

* This is a much expanded version of an earlier conference paper available at arXiv:1803.11256

Challenges and Characteristics of Intelligent Autonomy for Internet of Battle Things in Highly Adversarial Environments

Apr 13, 2018

Abstract: Numerous, artificially intelligent, networked things will populate the battlefield of the future, operating in close collaboration with human warfighters, and fighting as teams in highly adversarial environments. This paper explores the characteristics, capabilities and intelligence required of such a network of intelligent things and humans - Internet of Battle Things (IOBT). It will experience unique challenges that are not yet well addressed by the current generation of AI and machine learning.

Predicting Enemy's Actions Improves Commander Decision-Making

Jul 22, 2016

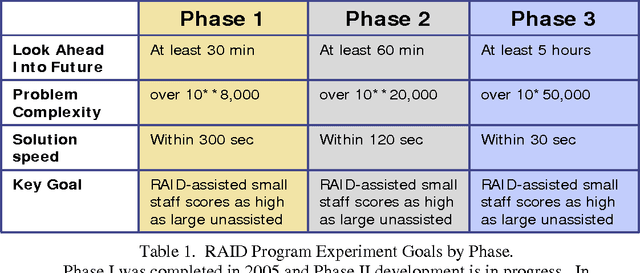

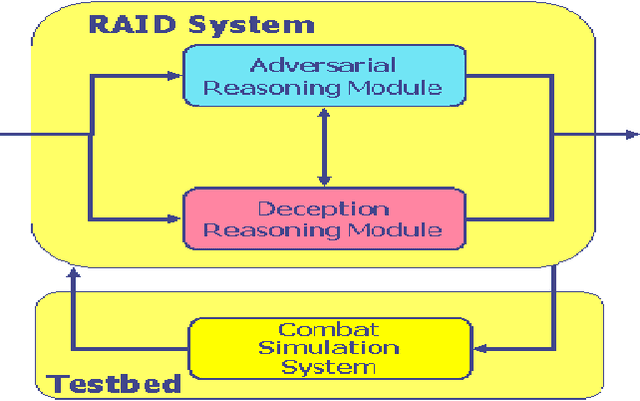

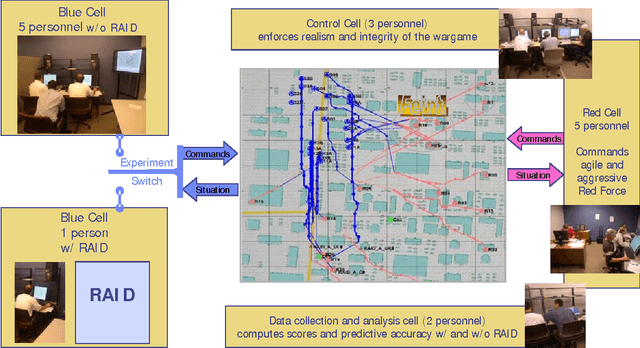

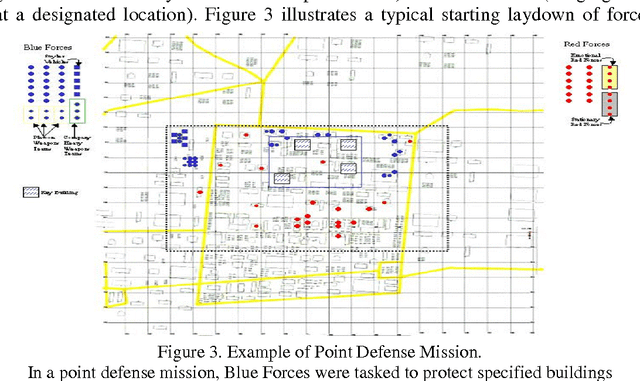

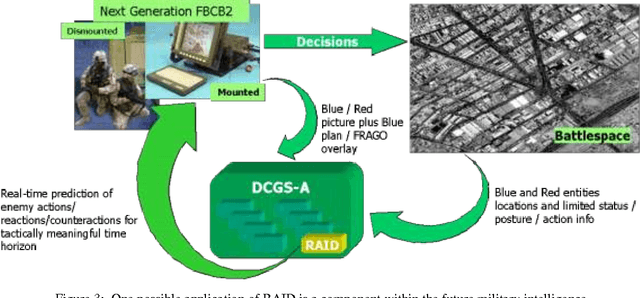

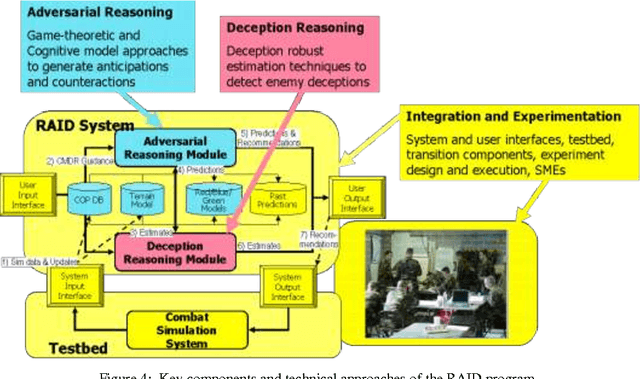

Abstract: The Defense Advanced Research Projects Agency (DARPA) Real-time Adversarial Intelligence and Decision-making (RAID) program is investigating the feasibility of "reading the mind of the enemy" - to estimate and anticipate, in real-time, the enemy's likely goals, deceptions, actions, movements and positions. This program focuses specifically on urban battles at echelons of battalion and below. The RAID program leverages approximate game-theoretic and deception-sensitive algorithms to provide real-time enemy estimates to a tactical commander. A key hypothesis of the program is that these predictions and recommendations will make the commander more effective, i.e., he should be able to achieve his operational goals more safely, faster, and more efficiently. Realistic experimentation and evaluation drive the development process using human-in-the-loop wargames to compare humans and the RAID system. Two experiments were conducted in 2005 as part of Phase I to determine if the RAID software could make predictions and recommendations as effectively and accurately as a 4-person experienced staff. This report discusses the intriguing and encouraging results of these first two experiments conducted by the RAID program. It also provides details about the experiment environment and methodology that were used to demonstrate and prove the research goals.

Validation of Information Fusion

Jul 22, 2016

Abstract: We motivate and offer a formal definition of validation as it applies to information fusion systems. Common definitions of validation compare the actual state of the world with that derived by the fusion process. This definition conflates properties of the fusion system with properties of systems that intervene between the world and the fusion system. We propose an alternative definition where validation of an information fusion system references a standard fusion device, such as recognized human experts. We illustrate the approach by describing the validation process implemented in RAID, a program conducted by DARPA and focused on information fusion in adversarial, deceptive environments.

A Survey of Research on Control of Teams of Small Robots in Military Operations

Jun 03, 2016

Abstract: While a number of excellent review articles on military robots have appeared in existing literature, this paper focuses on a distinct sub-space of related problems: small military robots organized into moderately sized squads, operating in a ground combat environment. Specifically, we consider the following:

- Command of practical small robots, comparable to current generation, small unmanned ground vehicles (e.g., PackBots) with limited computing and sensor payload, as opposed to larger vehicle-sized robots or micro-scale robots;
- Utilization of moderately sized practical forces of 3-10 robots applicable to currently envisioned military ground operations;
- Complex three-dimensional physical environments, such as urban areas or mountainous terrains and the inherent difficulties they impose, including limited and variable fields of observation, difficult navigation, and intermittent communication;
- Adversarial environments where the active, intelligent enemy is the key consideration in determining the behavior of the robotic force; and
- Purposeful, partly autonomous, coordinated behaviors that are necessary for such a robotic force to survive and complete missions; these are far more complex than, for example, formation control or field coverage behavior.

Decision Aids for Adversarial Planning in Military Operations: Algorithms, Tools, and Turing-test-like Experimental Validation

Jan 22, 2016

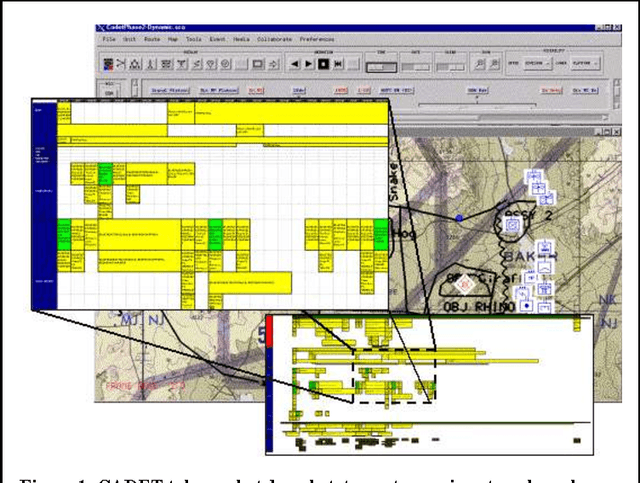

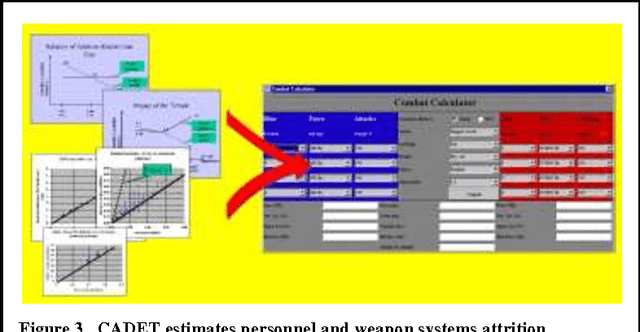

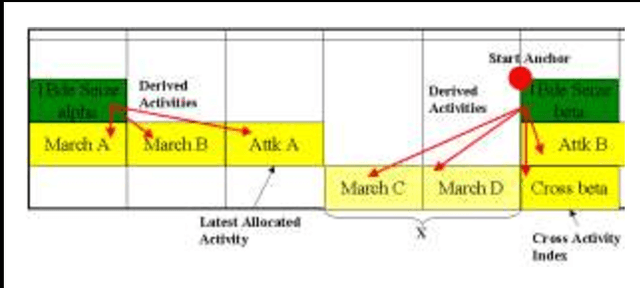

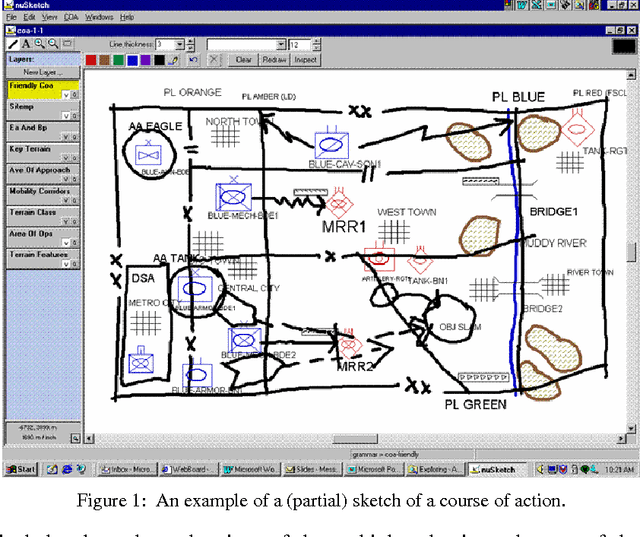

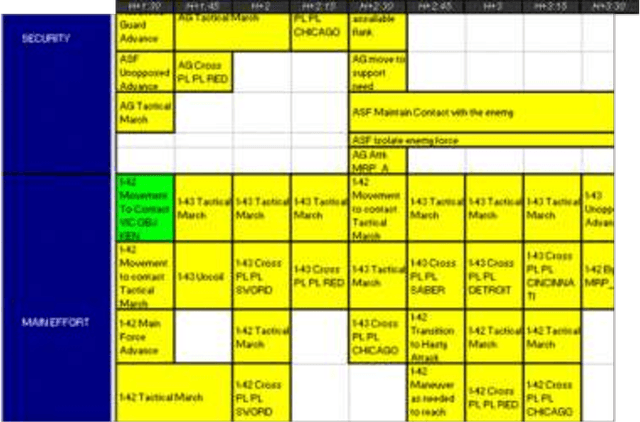

Abstract: Use of intelligent decision aids can help alleviate the challenges of planning complex operations. We describe integrated algorithms and a tool capable of translating a high-level concept for a tactical military operation into a fully detailed, actionable plan, producing automatically (or with human guidance) plans with a realistic degree of detail and of human-like quality. Tight interleaving of several algorithms -- planning, adversary estimates, scheduling, routing, attrition and consumption estimates -- constitutes the computational approach of this tool. Although originally developed for Army large-unit operations, the technology is generic and also applies to a number of other domains, particularly in critical situations requiring detailed planning within a constrained period of time. In this paper, we focus particularly on the engineering tradeoffs in the design of the tool. In an experimental evaluation, reminiscent of the Turing test, the tool's performance compared favorably with human planners.

Coalition-based Planning of Military Operations: Adversarial Reasoning Algorithms in an Integrated Decision Aid

Jan 22, 2016

Abstract: Use of knowledge-based planning tools can help alleviate the challenges of planning a complex operation by a coalition of diverse parties in an adversarial environment. We explore these challenges and potential contributions of knowledge-based tools using as an example the CADET system, a knowledge-based tool capable of producing automatically (or with human guidance) battle plans with a realistic degree of detail and complexity. In ongoing experiments, it compared favorably with human planners. Interleaved planning, scheduling, routing, attrition and consumption processes constitute the computational approach of this tool. From the coalition operations perspective, such tools offer an important aid in rapid synchronization of the actions of heterogeneous assets belonging to multiple organizations, potentially with distinct doctrine and rules of engagement. In this paper, we discuss the functionality of the tool, provide a brief overview of the technical approach and experimental results, and outline the potential value of such tools.

Toward a Research Agenda in Adversarial Reasoning: Computational Approaches to Anticipating the Opponent's Intent and Actions

Dec 25, 2015

Abstract: This paper defines adversarial reasoning as computational approaches to inferring and anticipating an enemy's perceptions, intents and actions. It argues that adversarial reasoning transcends the boundaries of game theory and must also leverage such disciplines as cognitive modeling, control theory, AI planning and others. To illustrate the challenges of applying adversarial reasoning to real-world problems, the paper explores the lessons learned from CADET, a battle planning system that focuses on brigade-level ground operations and involves adversarial reasoning. From this example of current capabilities, the paper proceeds to describe RAID, a DARPA program that aims to build capabilities in adversarial reasoning, and how such capabilities would address practical requirements in Defense and other application areas.
