Alexander Geng

Benefiting from Quantum? A Comparative Study of Q-Seg, Quantum-Inspired Techniques, and U-Net for Crack Segmentation

Oct 14, 2024

Abstract: Exploring the potential of quantum hardware for enhancing classical and real-world applications is an ongoing challenge. This study evaluates the performance of quantum and quantum-inspired methods compared to classical models for crack segmentation. Using annotated gray-scale image patches of concrete samples, we benchmark a classical mean Gaussian mixture technique, a quantum-inspired fermion-based method, Q-Seg (a quantum annealing-based method), and a U-Net deep learning architecture. Our results indicate that quantum-inspired and quantum methods offer a promising alternative for image segmentation, particularly for complex crack patterns, and could be applied in the near future.

Hybrid quantum transfer learning for crack image classification on NISQ hardware

Jul 31, 2023

Abstract: In theory, quantum computers can process data using far fewer qubits than the number of conventional bits required. However, recent experiments have indicated that retrieving an image from its quantum-encoded version is currently feasible only for very small image sizes. Despite this constraint, variational quantum machine learning algorithms can still be employed in the current noisy intermediate-scale quantum (NISQ) era. An example is a hybrid quantum machine learning approach for edge detection. In our study, we present an application of quantum transfer learning for detecting cracks in gray value images. We compare the performance and training time of PennyLane's standard qubits with IBM's qasm\_simulator and real backends, offering insights into their execution efficiency.

A hybrid quantum image edge detector for the NISQ era

Mar 22, 2022

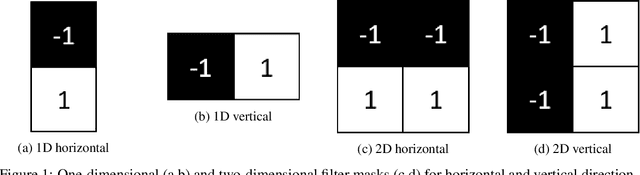

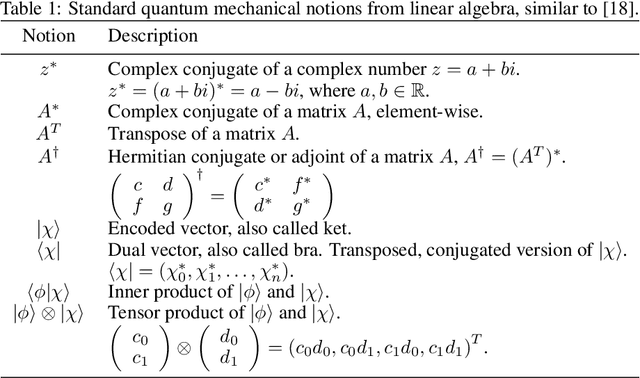

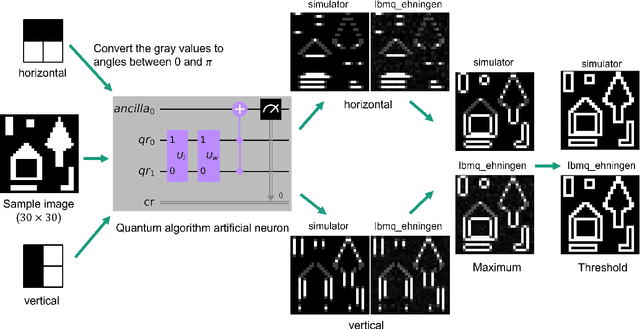

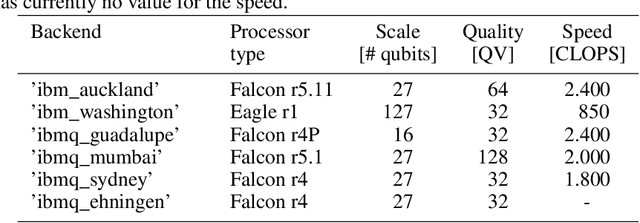

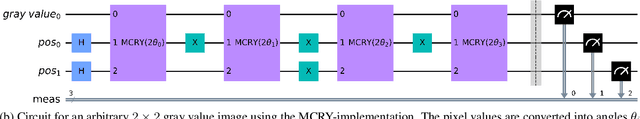

Abstract: Edges are image locations where the gray value intensity changes suddenly. They are among the most important features for understanding and segmenting an image. Edge detection is a standard task in digital image processing, solved for example using filtering techniques. However, the amount of data to be processed grows rapidly and pushes even supercomputers to their limits. Quantum computing promises exponentially lower memory usage in terms of the number of qubits compared to the number of classical bits. In this paper, we propose a hybrid method for quantum edge detection based on the idea of a quantum artificial neuron. Our method can be practically implemented on quantum computers, especially on those of the current noisy intermediate-scale quantum era. We compare six variants of the method to reduce the number of circuits and thus the time required for the quantum edge detection. Taking advantage of the scalability of our method, we can practically detect edges in images considerably larger than previously reached.

Improved FRQI on superconducting processors and its restrictions in the NISQ era

Oct 29, 2021

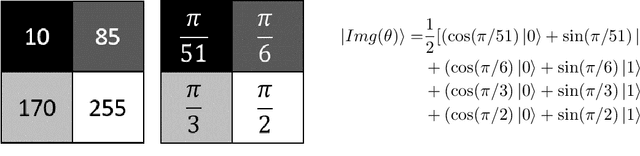

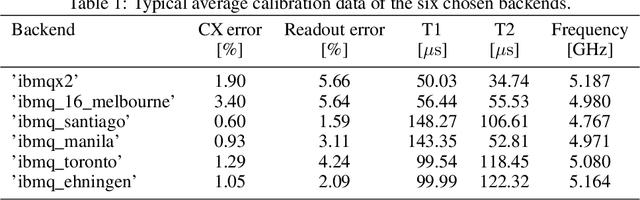

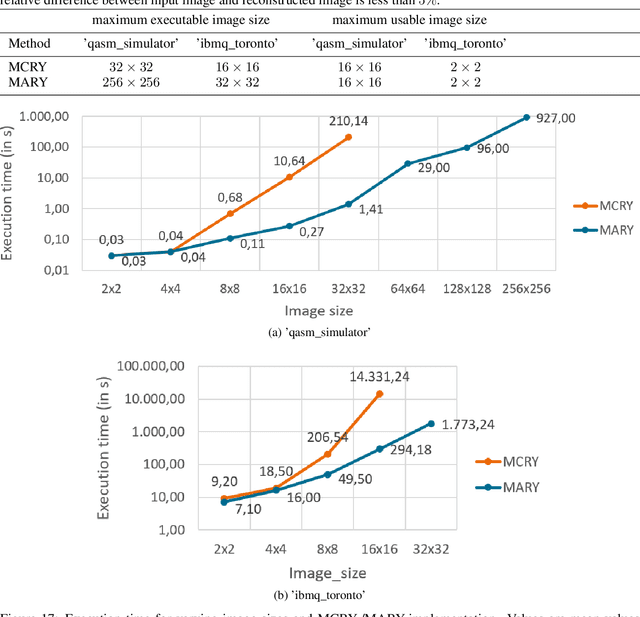

Abstract: In image processing, the amount of data to be processed grows rapidly, in particular when imaging methods yield images of more than two dimensions or time series of images. Thus, efficient processing is a challenge, as data sizes may push even supercomputers to their limits. Quantum image processing promises to encode images with logarithmically fewer qubits than classical pixels in the image. In theory, this is huge progress, but so far not many experiments have been conducted in practice, in particular on real backends. Often, the precise conversion of classical data to quantum states, the exact implementation, and the interpretation of the measurements in the classical context are challenging. We investigate these practical questions in this paper. In particular, we study the feasibility of the Flexible Representation of Quantum Images (FRQI). Furthermore, we check experimentally what the limit is in the current noisy intermediate-scale quantum era, i.e., up to which image size an image can be encoded, both on simulators and on real backends. Finally, we propose a method for simplifying the circuits needed for the FRQI. With our alteration, the number of gates needed, especially of the error-prone controlled-NOT gates, can be reduced. As a consequence, the size of manageable images increases.
