Alexander Ecker

Hierarchical clustering with maximum density paths and mixture models

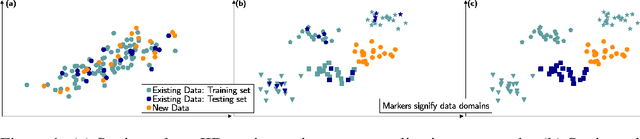

Mar 19, 2025

Abstract: Hierarchical clustering is an effective and interpretable technique for analyzing structure in data, offering a nuanced understanding by revealing insights at multiple scales and resolutions. It is particularly helpful in settings where the exact number of clusters is unknown, and provides a robust framework for exploring complex datasets. Additionally, hierarchical clustering can uncover inner structures within clusters, capturing subtle relationships and nested patterns that may be obscured by traditional flat clustering methods. However, existing hierarchical clustering methods struggle with high-dimensional data, especially when there are no clear density gaps between modes. Our method addresses this limitation by leveraging a two-stage approach, first employing a Gaussian or Student's t mixture model to overcluster the data, and then hierarchically merging clusters based on the induced density landscape. This approach yields state-of-the-art clustering performance while also providing a meaningful hierarchy, making it a valuable tool for exploratory data analysis. Code is available at https://github.com/ecker-lab/tneb clustering.
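The two-stage idea in the abstract above can be illustrated with a toy sketch. This is not the authors' implementation: it assumes a 1D Gaussian mixture whose parameters are already fitted (here simply written by hand), and it assumes that two groups of components are merged according to the lowest density encountered on the path between their means. In 1D that path is just the line segment between the means. All function names are hypothetical, and the merge criterion used in the paper may differ.

```python
import math


def mixture_density(x, weights, means, stds):
    """Density of a 1D Gaussian mixture evaluated at x."""
    return sum(
        w * math.exp(-0.5 * ((x - m) / s) ** 2) / (s * math.sqrt(2 * math.pi))
        for w, m, s in zip(weights, means, stds)
    )


def min_density_on_segment(a, b, weights, means, stds, steps=200):
    """Lowest mixture density on an evenly sampled segment from a to b."""
    return min(
        mixture_density(a + (b - a) * t / steps, weights, means, stds)
        for t in range(steps + 1)
    )


def merge_hierarchy(weights, means, stds):
    """Greedily merge component groups, best-connected pair first.

    Returns a list of (group_a, group_b, score) tuples in merge order.
    """
    groups = [frozenset([i]) for i in range(len(means))]
    merges = []
    while len(groups) > 1:
        best = None
        for i in range(len(groups)):
            for j in range(i + 1, len(groups)):
                # Assumed score: the best bottleneck density over all
                # pairs of components drawn from the two groups.
                score = max(
                    min_density_on_segment(means[p], means[q], weights, means, stds)
                    for p in groups[i]
                    for q in groups[j]
                )
                if best is None or score > best[0]:
                    best = (score, i, j)
        score, i, j = best
        merges.append((sorted(groups[i]), sorted(groups[j]), score))
        merged = groups[i] | groups[j]
        groups = [g for k, g in enumerate(groups) if k not in (i, j)] + [merged]
    return merges


# Hand-specified "overclustered" mixture: two nearby pairs of components.
weights = [0.25, 0.25, 0.25, 0.25]
means = [0.0, 1.0, 5.0, 6.5]
stds = [0.5, 0.5, 0.5, 0.5]

merges = merge_hierarchy(weights, means, stds)
for group_a, group_b, score in merges:
    print(group_a, group_b, round(score, 4))
```

In this toy setting, components that share a high-density connection are merged early, and the final merge joins the two well-separated groups across the low-density gap. In higher dimensions a straight segment is only a lower bound on the density achievable along a curved path, which is where a maximum density path search becomes relevant.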

FLASHμ: Fast Localizing And Sizing of Holographic Microparticles

Mar 14, 2025

Abstract: Reconstructing the 3D location and size of microparticles from diffraction images - holograms - is a computationally expensive inverse problem that has traditionally been solved using physics-based reconstruction methods. More recently, researchers have used machine learning methods to speed up the process. However, for small particles in large sample volumes the performance of these methods falls short of standard physics-based reconstruction methods. Here we designed a two-stage neural network architecture, FLASH$\mu$, to detect small particles (6-100 $\mu$m) from holograms with large sample depths of up to 20 cm. Trained only on synthetic data with added physical noise, our method reliably detects particles of at least 9 $\mu$m diameter in real holograms, comparable to the standard reconstruction-based approaches, while operating on smaller crops at a quarter of the original resolution and providing roughly a 600-fold speedup. In addition to introducing a novel approach to a non-local object detection or signal demixing problem, our work could enable low-cost, real-time holographic imaging setups.

TreeLearn: A Comprehensive Deep Learning Method for Segmenting Individual Trees from Forest Point Clouds

Sep 15, 2023

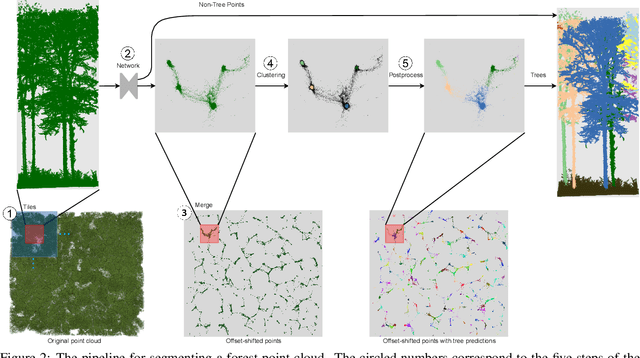

Abstract: Laser-scanned point clouds of forests make it possible to extract valuable information for forest management. To consider single trees, a forest point cloud needs to be segmented into individual tree point clouds. Existing segmentation methods are usually based on hand-crafted algorithms, such as identifying trunks and growing trees from them, and face difficulties in dense forests with overlapping tree crowns. In this study, we propose TreeLearn, a deep learning-based approach for semantic and instance segmentation of forest point clouds. Unlike previous methods, TreeLearn is trained on already segmented point clouds in a data-driven manner, making it less reliant on predefined features and algorithms. Additionally, we introduce a new benchmark forest dataset containing 156 full trees and 79 partial trees that have been cleanly segmented by hand. This enables the evaluation of instance segmentation performance going beyond just evaluating the detection of individual trees. We trained TreeLearn on forest point clouds of 6665 trees, labeled using the Lidar360 software. An evaluation on the benchmark dataset shows that TreeLearn performs as well as or better than the algorithm used to generate its training data. Furthermore, the method's performance can be vastly improved by fine-tuning on the cleanly labeled benchmark dataset. The TreeLearn code is available from https://github.com/ecker-lab/TreeLearn. The data as well as trained models can be found at https://doi.org/10.25625/VPMPID.

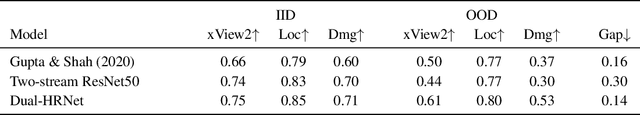

Assessing out-of-domain generalization for robust building damage detection

Nov 20, 2020

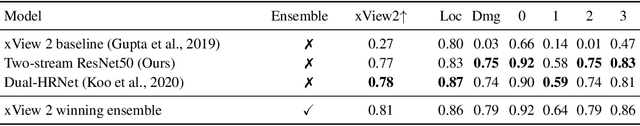

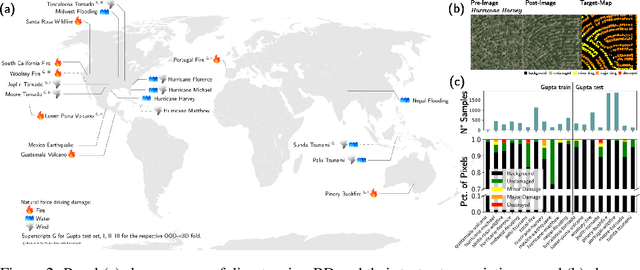

Abstract: An important step for limiting the negative impact of natural disasters is rapid damage assessment after a disaster has occurred. For instance, building damage detection can be automated by applying computer vision techniques to satellite imagery. Such models operate in a multi-domain setting: every disaster is inherently different (new geolocation, unique circumstances), and models must be robust to a shift in distribution between disaster imagery available for training and the images of the new event. Accordingly, estimating real-world performance requires an out-of-domain (OOD) test set. However, building damage detection models have so far been evaluated mostly in the simpler yet unrealistic in-distribution (IID) test setting. Here we argue that future work should focus on the OOD regime instead. We assess OOD performance of two competitive damage detection models and find that existing state-of-the-art models show a substantial generalization gap: their performance drops when evaluated OOD on new disasters not used during training. Moreover, IID performance is not predictive of OOD performance, rendering current benchmarks uninformative about real-world performance. Code and model weights are available at https://github.com/ecker-lab/robust-bdd.
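The difference between IID and OOD evaluation described above comes down to how the data are split. A minimal sketch of one way to build OOD splits is shown below, assuming each sample carries a `disaster` label and that a whole disaster is held out at a time. The field names, disaster names, and the helper `leave_one_disaster_out` are hypothetical, and the exact protocol used in the paper may differ.

```python
from collections import defaultdict


def leave_one_disaster_out(samples):
    """Yield (held_out, train, test) splits.

    Every test set contains exactly one disaster, and that disaster
    never appears in the corresponding training set.
    """
    by_disaster = defaultdict(list)
    for sample in samples:
        by_disaster[sample["disaster"]].append(sample)

    for held_out in sorted(by_disaster):
        train = [
            s
            for name, items in by_disaster.items()
            if name != held_out
            for s in items
        ]
        test = list(by_disaster[held_out])
        yield held_out, train, test


# Hypothetical toy dataset: image ids tagged with the event they come from.
samples = [
    {"image": "a1", "disaster": "flood-A"},
    {"image": "a2", "disaster": "flood-A"},
    {"image": "b1", "disaster": "quake-B"},
    {"image": "c1", "disaster": "fire-C"},
    {"image": "c2", "disaster": "fire-C"},
]

splits = list(leave_one_disaster_out(samples))
for held_out, train, test in splits:
    print(held_out, len(train), len(test))
```

An IID split would instead shuffle all samples and divide them at random, so images from the same disaster would land in both training and test sets.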
