Alex Coninx

E2R: a Hierarchical-Learning inspired Novelty-Search method to generate diverse repertoires of grasping trajectories

Oct 14, 2022

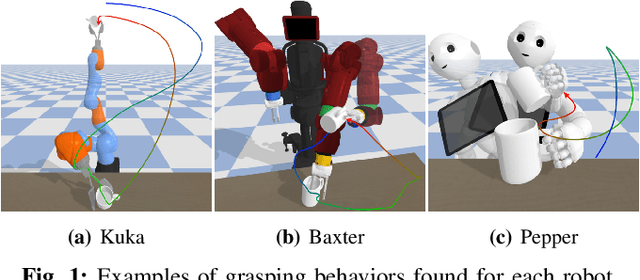

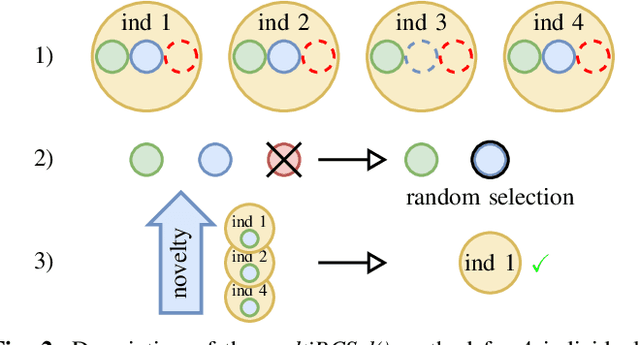

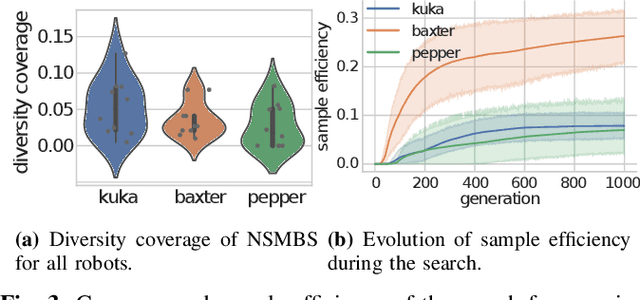

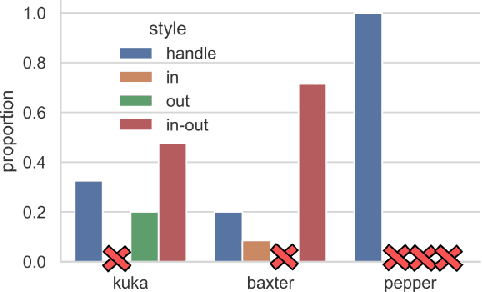

Abstract: Robotic grasping refers to the task of making a robotic system pick up an object by applying forces and torques on its surface. Despite recent advances in data-driven approaches, grasping remains an unsolved problem. Most work on this task relies on priors and heavy constraints to avoid the exploration problem. Novelty Search (NS) refers to evolutionary algorithms that replace selection of the best-performing individuals with selection of the most novel ones. Such methods have already shown promising results on hard exploration problems. In this work, we introduce a new NS-based method that can generate large datasets of grasping trajectories in a platform-agnostic manner. Inspired by the hierarchical learning paradigm, our method decouples approach and prehension to make the behavioral space smoother. Experiments conducted on 3 different robot-gripper setups and on several standard objects show that our method outperforms the state of the art for generating diverse repertoires of grasping trajectories, achieving a higher successful run ratio as well as better diversity for both approach and prehension. Some of the generated solutions have been successfully deployed on a real robot, showing the exploitability of the obtained repertoires.
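The selection principle behind Novelty Search can be illustrated with a minimal sketch. This is a generic textbook-style formulation, not the E2R method itself: it assumes behaviors are fixed-length numeric tuples, that novelty is the mean Euclidean distance to the k nearest neighbors in the current population plus an archive, and the function names `novelty_scores` and `select_most_novel` are hypothetical.

```python
import math


def novelty_scores(behaviors, archive, k=3):
    """Score each behavior by its mean distance to its k nearest neighbors.

    Assumption: neighbors are drawn from the current population and an
    archive of previously seen behaviors, using Euclidean distance.
    """
    pool = list(behaviors) + list(archive)
    scores = []
    for i, b in enumerate(behaviors):
        # Distances to every other point in the pool (skip the point itself).
        dists = sorted(
            math.dist(b, other) for j, other in enumerate(pool) if j != i
        )
        nearest = dists[:k]
        scores.append(sum(nearest) / len(nearest) if nearest else 0.0)
    return scores


def select_most_novel(population, behaviors, archive, n_keep, k=3):
    """Keep the n_keep individuals with the highest novelty score,
    instead of the n_keep individuals with the highest fitness."""
    scores = novelty_scores(behaviors, archive, k)
    ranked = sorted(range(len(population)), key=lambda i: scores[i], reverse=True)
    return [population[i] for i in ranked[:n_keep]]
```

For example, with behaviors `[(0.0, 0.0), (0.1, 0.0), (5.0, 5.0)]` and an empty archive, the third individual lies far from the other two and is the one retained when `n_keep=1`.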

Automatic Acquisition of a Repertoire of Diverse Grasping Trajectories through Behavior Shaping and Novelty Search

May 17, 2022

Abstract: Grasping a particular object may require a dedicated grasping movement that may also be specific to the robot end-effector. No generic, autonomous method exists to generate these movements without making hypotheses about the robot or the object. Learning methods could help to autonomously discover relevant grasping movements, but they face an important issue: grasping movements are so rare that a learning method based on exploration has little chance of ever observing an interesting movement, which creates a bootstrap issue. We introduce an approach that generates diverse grasping movements in order to solve this problem. The movements are generated in simulation, for particular object positions. We test it on several simulated robots: Baxter, Pepper and a Kuka Iiwa arm. Although we show that the generated movements actually work on a real Baxter robot, the aim is to use this method to create a large dataset to bootstrap deep learning methods.
