Alejandro Martín

Pushing the boundary on Natural Language Inference

Apr 25, 2025

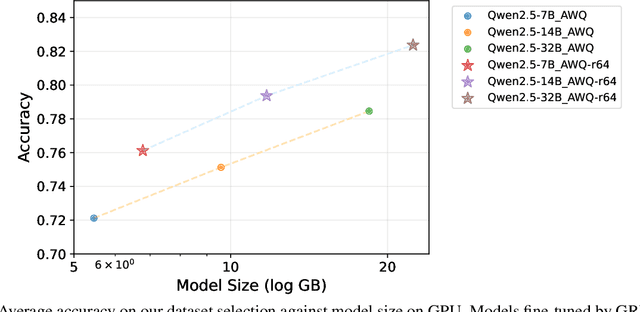

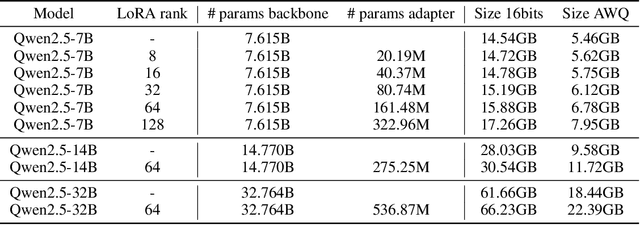

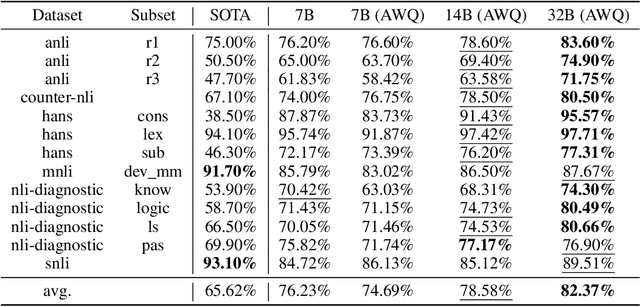

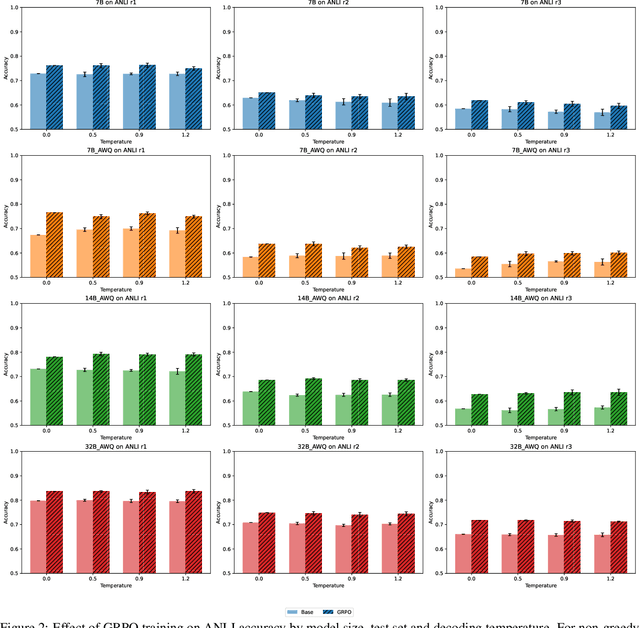

Abstract: Natural Language Inference (NLI) is a central task in natural language understanding, with applications in fact-checking, question answering, and information retrieval. Despite its importance, current NLI systems rely heavily on supervised learning with datasets that often contain annotation artifacts and biases, which limits generalization and real-world applicability. In this work, we apply a reinforcement learning-based approach using Group Relative Policy Optimization (GRPO) for Chain-of-Thought (CoT) learning in NLI, eliminating the need for labeled rationales and enabling this type of training on more challenging datasets such as ANLI. We fine-tune 7B, 14B, and 32B language models using parameter-efficient techniques (LoRA and QLoRA), demonstrating strong performance across standard and adversarial NLI benchmarks. Our 32B AWQ-quantized model surpasses state-of-the-art results on 7 out of 11 adversarial sets (or on all of them, considering our replication) within a 22 GB memory footprint, showing that robust reasoning can be retained under aggressive quantization. This work provides a scalable and practical framework for building robust NLI systems without sacrificing inference quality.
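
In GRPO, the advantage of each sampled completion is computed relative to the other completions sampled for the same prompt, rather than from a learned value function. The following is a minimal sketch of that advantage step only. It assumes a hypothetical binary reward (`label_reward`: 1.0 when the last NLI label named in the chain of thought matches the gold label, else 0.0); the reward design, label parsing, and example completions are illustrative assumptions, not the authors' exact setup.

```python
import statistics
from typing import List

LABELS = ("entailment", "neutral", "contradiction")


def label_reward(completion: str, gold: str) -> float:
    """Hypothetical reward: 1.0 if the last NLI label named in the completion equals the gold label."""
    text = completion.lower()
    # The label mentioned furthest to the right is treated as the final answer.
    positions = {label: text.rfind(label) for label in LABELS}
    predicted = max(positions, key=positions.get)
    if positions[predicted] == -1:
        return 0.0  # no label found: treat as wrong
    return 1.0 if predicted == gold else 0.0


def group_relative_advantages(rewards: List[float], eps: float = 1e-6) -> List[float]:
    """GRPO-style advantages: standardize each reward against its own group of samples."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    return [(r - mean) / (std + eps) for r in rewards]


# Four completions sampled for one premise/hypothesis pair whose gold label is "entailment".
samples = [
    "... so the answer is entailment",
    "... therefore: contradiction",
    "Entailment",
    "I am not sure",
]
rewards = [label_reward(s, "entailment") for s in samples]
print(rewards)                             # [1.0, 0.0, 1.0, 0.0]
print(group_relative_advantages(rewards))  # roughly [1.0, -1.0, 1.0, -1.0]
```

Completions that beat their group's average receive positive advantages and are reinforced; when every sample in a group earns the same reward, all advantages are zero and that group contributes no learning signal.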

On the locality bias and results in the Long Range Arena

Jan 24, 2025

Abstract: The Long Range Arena (LRA) benchmark was designed to evaluate the performance of Transformer improvements and alternatives in long-range dependency modeling tasks. The Transformer and its main variants performed poorly on this benchmark, and a new series of architectures such as State Space Models (SSMs) gained some traction, greatly outperforming Transformers in the LRA. Recent work has shown that, with a denoising pre-training phase, Transformers can achieve results in the LRA competitive with these new architectures. In this work, we discuss and explain the superiority of architectures such as MEGA and SSMs in the Long Range Arena, as well as the recent improvement in the results of Transformers, pointing to the positional and local nature of the tasks. We show that while the LRA is a benchmark for long-range dependency modeling, in reality most of the performance comes from short-range dependencies. Using training techniques to mitigate data inefficiency, Transformers are able to reach state-of-the-art performance with proper positional encoding. In addition, with the same techniques, we were able to remove all restrictions from SSM convolutional kernels and learn fully parameterized convolutions without decreasing performance, suggesting that the design choices behind SSMs simply added inductive biases and learning efficiency for these particular tasks. Our insights indicate that LRA results should be interpreted with caution and call for a redesign of the benchmark.

Not all tokens are created equal: Perplexity Attention Weighted Networks for AI generated text detection

Jan 07, 2025

Abstract: The rapid advancement of large language models (LLMs) has significantly enhanced their ability to generate coherent and contextually relevant text, raising concerns about the misuse of AI-generated content and making its detection critical. However, the task remains challenging, particularly in unseen domains or with unfamiliar LLMs. Leveraging LLM next-token distribution outputs offers a theoretically appealing approach to detection, as these outputs encapsulate insights from the models' extensive pre-training on diverse corpora. Despite this promise, zero-shot methods that attempt to operationalize these outputs have met with limited success. We hypothesize that one of the problems is that they use the mean to aggregate next-token distribution metrics across tokens, when some tokens are naturally easier or harder to predict and should be weighted differently. Based on this idea, we propose the Perplexity Attention Weighted Network (PAWN), which uses the last hidden states of the LLM and token positions to weight the sum of a series of features based on next-token distribution metrics across the sequence length. Although not zero-shot, our method allows us to cache the last hidden states and next-token distribution metrics on disk, greatly reducing training resource requirements. PAWN shows in-distribution performance competitive with, and even better than, the strongest baselines (fine-tuned LMs) with a fraction of their trainable parameters. Our model also generalizes better to unseen domains and source models, with smaller variability in the decision boundary across distribution shifts. It is also more robust to adversarial attacks, and if the backbone has multilingual capabilities, it generalizes reasonably well to languages not seen during supervised training, with LLaMA3-1B reaching a mean macro-averaged F1 score of 81.46% in cross-validation with nine languages.
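
The core contrast, averaging per-token next-token-distribution metrics versus weighting them, can be illustrated with a toy example. The sketch below assumes two per-token features (surprisal of the observed token and entropy of the distribution) and a hypothetical linear scorer `w` over each token's hidden state, standing in for the learned network PAWN applies to the LLM's last hidden states and positions. The feature choice, scorer, and numbers are illustrative only, not the paper's architecture.

```python
import math
from typing import List, Sequence


def token_features(probs: Sequence[float], observed: int) -> List[float]:
    """Metrics from one next-token distribution: surprisal of the observed token and entropy."""
    surprisal = -math.log(probs[observed])
    entropy = -sum(p * math.log(p) for p in probs if p > 0)
    return [surprisal, entropy]


def softmax(scores: Sequence[float]) -> List[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def mean_pool(features: List[List[float]]) -> List[float]:
    """Zero-shot-style aggregation: every token counts equally."""
    n = len(features)
    return [sum(f[k] for f in features) / n for k in range(len(features[0]))]


def attention_pool(
    features: List[List[float]], hidden: List[List[float]], w: Sequence[float]
) -> List[float]:
    """Weighted aggregation: per-token weights from a hypothetical linear score of each hidden state."""
    scores = [sum(wi * hi for wi, hi in zip(w, h)) for h in hidden]
    alphas = softmax(scores)
    return [sum(a * f[k] for a, f in zip(alphas, features)) for k in range(len(features[0]))]


# Two tokens: an easy one (confident distribution) and a hard one (uniform over four options).
feats = [token_features([0.97, 0.01, 0.01, 0.01], 0), token_features([0.25] * 4, 2)]
hidden = [[0.2, -0.1], [0.9, 0.4]]  # toy hidden states
w = [1.5, 0.5]                      # toy stand-in for a learned scoring vector
print(mean_pool(feats))
print(attention_pool(feats, hidden, w))
```

With equal scores the weighted sum reduces to the mean, so mean pooling is a special case; learning the scores lets the detector down-weight tokens that are inherently hard to predict.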

Isolating authorship from content with semantic embeddings and contrastive learning

Nov 27, 2024

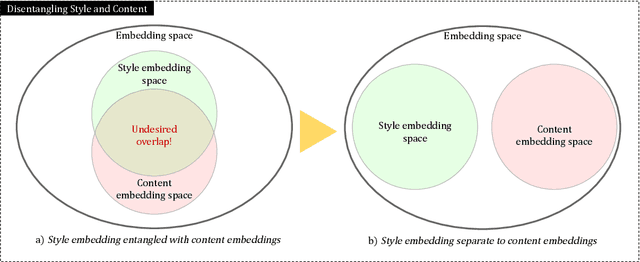

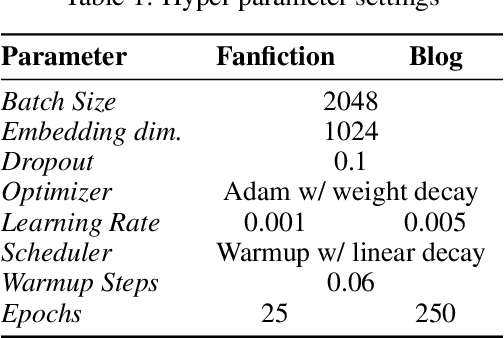

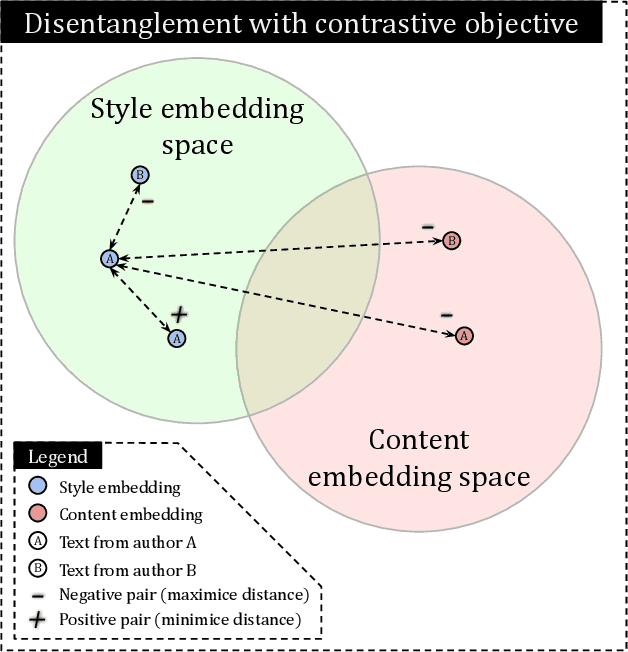

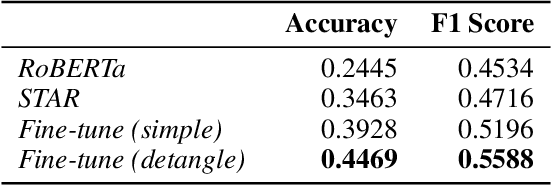

Abstract: Authorship entangles style and content. Authors frequently write about the same topics in the same style, so when different authors write about exactly the same topic, the easiest way to distinguish them is by understanding the nuances of their style. Modern neural models for authorship can pick up these features using contrastive learning; however, some content leakage is always present. Our aim is to reduce this inevitable influence of content on authorship representations and the correlation between the two. We present a technique that uses contrastive learning (InfoNCE) with additional hard negatives synthetically created using a semantic similarity model. This disentanglement technique aims to distance the content embedding space from the style embedding space, leading to embeddings more informed by style. We demonstrate its performance with ablations on two different datasets and compare the results on out-of-domain challenges. Improvements are clearly shown on challenging evaluations on prolific authors, with up to a 10% increase in accuracy when the settings are particularly hard. Trials on these challenges also demonstrate that the method preserves zero-shot capabilities when applied as fine-tuning.
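
InfoNCE frames attribution as picking the positive (another text by the same author) out of a set of candidates. The sketch below computes the loss for a single anchor with cosine similarity and a temperature, appending hard negatives to the ordinary negatives. It assumes the hard negatives are embeddings of texts by other authors that a semantic similarity model rated as close in content, which is our reading of the abstract rather than the paper's exact construction; the temperature and toy vectors are arbitrary.

```python
import math
from typing import Iterable, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def info_nce(
    anchor: Sequence[float],
    positive: Sequence[float],
    negatives: Iterable[Sequence[float]],
    hard_negatives: Iterable[Sequence[float]] = (),
    temperature: float = 0.07,
) -> float:
    """-log( exp(s_pos / t) / sum over the positive and all negatives of exp(s / t) )."""
    logits = [cosine(anchor, positive) / temperature]
    # Hard negatives simply join the candidate set, competing with the positive.
    logits += [cosine(anchor, n) / temperature for n in list(negatives) + list(hard_negatives)]
    m = max(logits)  # log-sum-exp with a max shift for numerical stability
    log_denominator = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_denominator - logits[0]


anchor = [1.0, 0.0]    # text by author A
positive = [0.9, 0.1]  # another text by author A
easy = [[0.0, 1.0]]    # unrelated text by another author
hard = [[0.8, 0.2]]    # same topic, different author (hypothetical hard negative)
print(info_nce(anchor, positive, easy))        # close to zero
print(info_nce(anchor, positive, easy, hard))  # noticeably larger
```

Because the hard negative shares the anchor's content, the only way to lower the loss is to separate them on something other than topic, which is the pressure toward style-informed embeddings the abstract describes.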

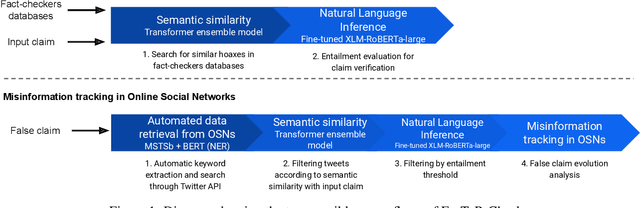

DisTrack: a new Tool for Semi-automatic Misinformation Tracking in Online Social Networks

Aug 01, 2024

Abstract: Introduction: This article introduces DisTrack, a methodology and a tool developed for tracking and analyzing misinformation within Online Social Networks (OSNs). DisTrack is designed to combat the spread of misinformation through a combination of Natural Language Processing (NLP), Social Network Analysis (SNA), and graph visualization. The primary goal is to detect misinformation, track its propagation, identify its sources, and assess the influence of various actors within the network. Methods: DisTrack's architecture incorporates a variety of methodologies, including keyword search, semantic similarity assessments, and graph generation techniques. These methods collectively facilitate the monitoring of misinformation, the categorization of content based on its alignment with known false claims, and the visualization of dissemination cascades through detailed graphs. The tool is tailored to capture and analyze the dynamic nature of misinformation spread in digital environments. Results: The effectiveness of DisTrack is demonstrated through three case studies focused on different themes: discredit/hate speech, anti-vaccine misinformation, and false narratives about the Russia-Ukraine conflict. These studies show DisTrack's ability to distinguish posts that propagate falsehoods from those that counteract them, and to trace the evolution of misinformation from its inception. Conclusions: The research confirms that DisTrack is a valuable tool in the field of misinformation analysis. It effectively distinguishes between different types of misinformation and traces their development over time. By providing a comprehensive approach to understanding and combating misinformation in digital spaces, DisTrack proves to be an essential asset for researchers and practitioners working to mitigate the impact of false information in online social environments.
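
Tracing a claim back to its inception amounts to walking each reshare or reply chain up to its root. The sketch below groups posts into cascades from (post, parent) pairs. It assumes the relevant posts have already been found (for example by keyword search or semantic similarity) and that each post records the post it shares or replies to; the data layout and the `build_cascades` helper are hypothetical and say nothing about how DisTrack itself stores or collects its graphs.

```python
from collections import defaultdict
from typing import Dict, List, Optional, Tuple


def build_cascades(shares: List[Tuple[str, Optional[str]]]) -> Dict[str, List[str]]:
    """Group posts into dissemination cascades keyed by the post that started them.

    `shares` holds (post_id, parent_id) pairs; parent_id is None for an original post.
    """
    parent = dict(shares)

    def origin(post: str) -> str:
        # Walk up the reshare chain until reaching a post with no known parent.
        seen = set()  # guards against malformed, cyclic data
        while parent.get(post) is not None and post not in seen:
            seen.add(post)
            post = parent[post]
        return post

    cascades: Dict[str, List[str]] = defaultdict(list)
    for post, _ in shares:
        cascades[origin(post)].append(post)
    return dict(cascades)


shares = [("p1", None), ("p2", "p1"), ("p3", "p2"), ("q1", None), ("q2", "q1")]
print(build_cascades(shares))  # {'p1': ['p1', 'p2', 'p3'], 'q1': ['q1', 'q2']}
```

Each key identifies the earliest known post of a cascade, and the grouped lists are a natural input for the kind of cascade graphs the abstract mentions.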

Camouflage is all you need: Evaluating and Enhancing Language Model Robustness Against Camouflage Adversarial Attacks

Feb 15, 2024

Abstract: Adversarial attacks represent a substantial challenge in Natural Language Processing (NLP). This study undertakes a systematic exploration of this challenge in two distinct phases: vulnerability evaluation and resilience enhancement of Transformer-based models under adversarial attacks. In the evaluation phase, we assess the susceptibility of three Transformer configurations (encoder-decoder, encoder-only, and decoder-only) to adversarial attacks of escalating complexity across datasets containing offensive language and misinformation. Encoder-only models show performance drops of 14% and 21% in offensive language detection and misinformation detection tasks, respectively. Decoder-only models register a 16% decrease in both tasks, while encoder-decoder models exhibit maximum performance drops of 14% and 26% in the respective tasks. The resilience-enhancement phase employs adversarial training, integrating pre-camouflaged and dynamically altered data. This approach effectively reduces the performance drop in encoder-only models to an average of 5% in offensive language detection and 2% in misinformation detection tasks. Decoder-only models, occasionally exceeding their original performance, limit the performance drop to 7% and 2% in the respective tasks. Although they do not surpass their original performance, encoder-decoder models reduce the drop to an average of 6% and 2%, respectively. Results suggest a trade-off between performance and robustness, with some models maintaining similar performance while gaining robustness. Our study and adversarial training techniques have been incorporated into an open-source tool for generating camouflaged datasets. However, the effectiveness of the methodology depends on the specific camouflage technique and data encountered, emphasizing the need for continued exploration.

Countering Malicious Content Moderation Evasion in Online Social Networks: Simulation and Detection of Word Camouflage

Dec 27, 2022

Abstract: Content moderation is the process of screening and monitoring user-generated content online. It plays a crucial role in stopping content resulting from unacceptable behaviors such as hate speech, harassment, violence against specific groups, terrorism, racism, xenophobia, homophobia, or misogyny, to name a few, on Online Social Platforms. These platforms use a plethora of tools to detect and manage malicious information; however, malicious actors also improve their skills, developing strategies to bypass these barriers and continuing to spread misleading information. Twisting and camouflaging keywords are among the most widely used techniques to evade platform content moderation systems. In response to this ongoing issue, this paper presents an innovative approach to addressing this linguistic trend in social networks through the simulation of different content evasion techniques and a multilingual Transformer model for content evasion detection. To this end, we share with the scientific community a multilingual public tool, named "pyleetspeak", to generate/simulate the phenomenon of content evasion in a customizable way through automatic word camouflage, together with a multilingual Named-Entity Recognition (NER) Transformer-based model tuned for its recognition and detection. The multilingual NER model is evaluated in different textual scenarios, detecting different types and mixtures of camouflage techniques, and achieves an overall weighted F1 score of 0.8795. This article contributes significantly to countering malicious information by developing multilingual tools to simulate and detect new methods of content evasion on social networks, making the fight against information disorders more effective.
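
Word camouflage of the kind described here can be approximated with look-alike character substitutions (leetspeak) and separator insertion, and the camouflaged words can then be tagged to produce NER training data. The sketch below is a self-contained illustration and not the pyleetspeak API: the substitution table, the `camouflage` and `make_ner_example` functions, their probabilities, and the `B-CAMO` tag are all hypothetical.

```python
import random
from typing import List, Set, Tuple

# Hypothetical look-alike substitutions (not pyleetspeak's actual tables).
LEET = {"a": "4", "e": "3", "i": "1", "o": "0", "s": "5", "t": "7"}


def camouflage(word: str, rng: random.Random, p_sub: float = 0.6, p_sep: float = 0.3) -> str:
    """Randomly swap letters for look-alike digits, optionally interleaving separators."""
    chars = [LEET[c] if c in LEET and rng.random() < p_sub else c for c in word.lower()]
    if rng.random() < p_sep:
        return ".".join(chars)  # e.g. "v.4.c.c.1.n.3"
    return "".join(chars)


def make_ner_example(sentence: str, targets: Set[str], rng: random.Random) -> List[Tuple[str, str]]:
    """Camouflage the target keywords and tag them, producing token-level NER training data."""
    example = []
    for token in sentence.split():
        if token.lower() in targets:
            example.append((camouflage(token, rng), "B-CAMO"))
        else:
            example.append((token, "O"))
    return example


rng = random.Random(42)  # fixed seed for reproducible output
print(make_ner_example("the vaccine is poison", {"vaccine", "poison"}, rng))
```

Framing detection as token tagging lets a model mark exactly which words are camouflaged, rather than only flagging a whole post.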

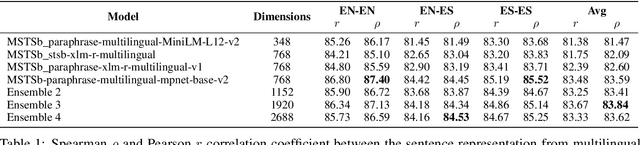

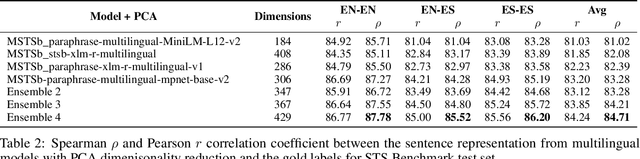

Exploring Dimensionality Reduction Techniques in Multilingual Transformers

Apr 18, 2022

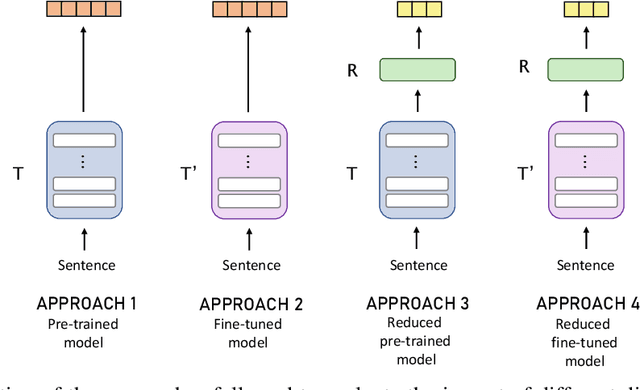

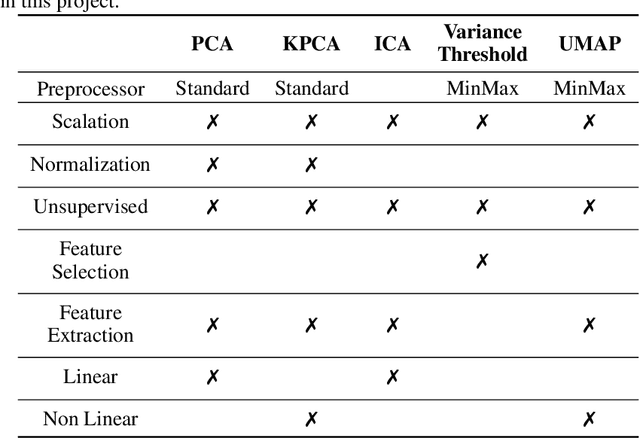

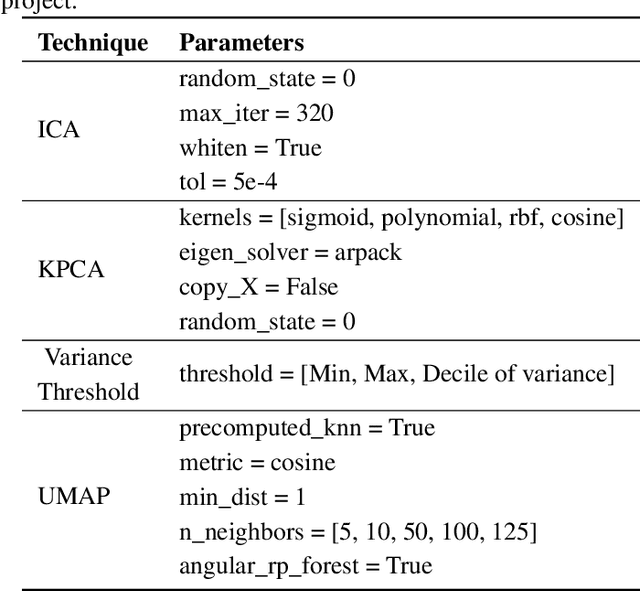

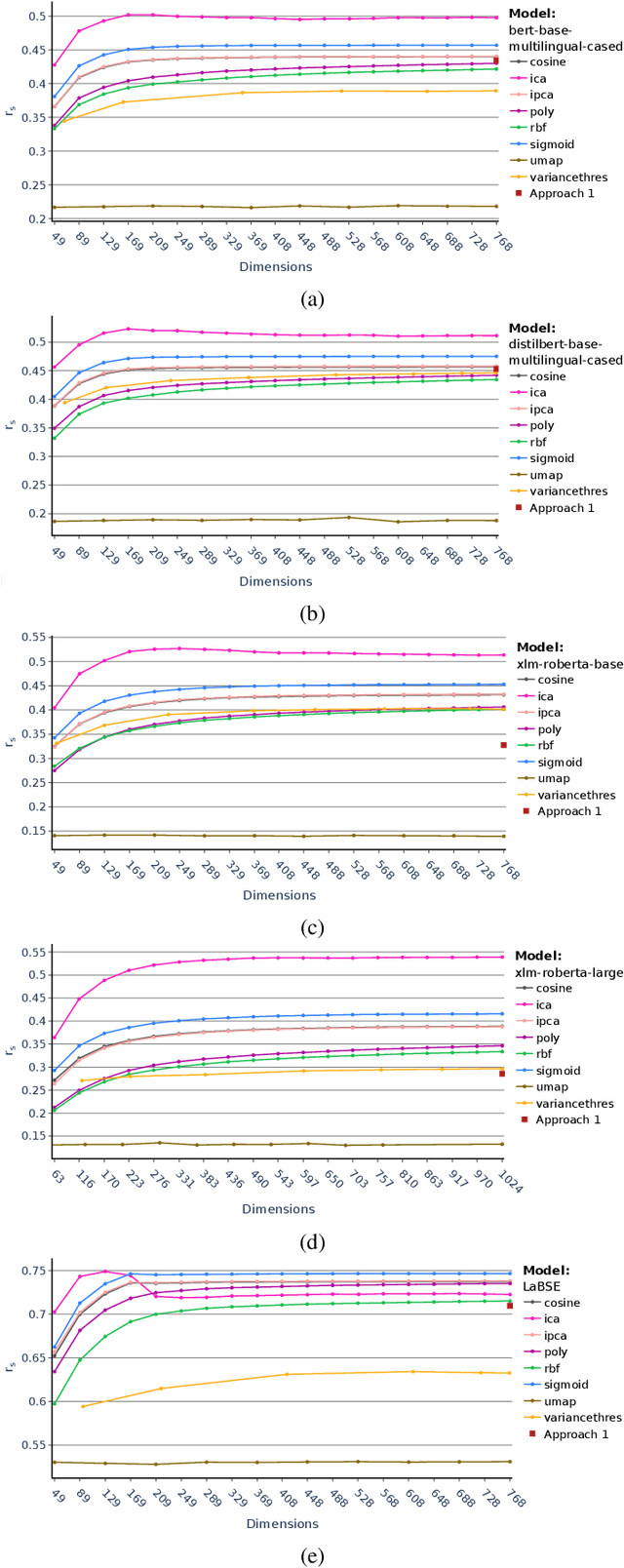

Abstract: Both in scientific literature and in industry, semantic and context-aware Natural Language Processing-based solutions have been gaining importance in recent years. The possibilities and performance shown by these models when dealing with complex Language Understanding tasks are unquestionable, from conversational agents to the fight against disinformation in social networks. In addition, considerable attention is also being paid to developing multilingual models to tackle the language bottleneck. The growing need to provide more complex models implementing all these features has been accompanied by an increase in their size, with little regard for the number of dimensions required. This paper aims to give a comprehensive account of the impact of a wide variety of dimensionality reduction techniques on the performance of different state-of-the-art multilingual Siamese Transformers, including unsupervised dimensionality reduction techniques such as linear and nonlinear feature extraction, feature selection, and manifold techniques. To evaluate the effects of these techniques, we considered the multilingual extended version of the Semantic Textual Similarity Benchmark (mSTSb) and two different baseline approaches, one using the pre-trained version of several models and another using their fine-tuned STS version. The results show that it is possible to achieve an average reduction in the number of dimensions of $91.58\% \pm 2.59\%$ and $54.65\% \pm 32.20\%$, respectively. This work has also considered the consequences of dimensionality reduction for visualization purposes. The results of this study will significantly contribute to the understanding of how different tuning approaches affect performance on semantic-aware tasks and how dimensionality reduction techniques deal with the high-dimensional embeddings computed for the STS task, as well as their potential for highly demanding NLP tasks.
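
Among the unsupervised techniques listed, feature selection is the simplest to illustrate: keep only the embedding dimensions with the highest variance across a corpus, then compare sentence pairs with cosine similarity in the reduced space, as an STS evaluation would. The sketch below uses variance-based selection as one representative technique; the toy embeddings, the `keep` value, and the 768-to-64 example are arbitrary, and the paper's other pipelines (linear and nonlinear feature extraction, manifold methods) are not reproduced here.

```python
import math
import statistics
from typing import List, Sequence


def top_variance_dims(embeddings: List[Sequence[float]], keep: int) -> List[int]:
    """Indices of the `keep` dimensions with the largest variance across the corpus."""
    dim = len(embeddings[0])
    variances = [statistics.pvariance([e[j] for e in embeddings]) for j in range(dim)]
    return sorted(range(dim), key=lambda j: variances[j], reverse=True)[:keep]


def project(vec: Sequence[float], dims: List[int]) -> List[float]:
    """Keep only the selected dimensions of one embedding."""
    return [vec[j] for j in dims]


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def dimension_reduction(original_dim: int, kept_dim: int) -> float:
    """Fraction of dimensions removed by the reduction."""
    return 1 - kept_dim / original_dim


corpus = [[0.9, 0.01, 0.3, 0.5], [0.1, 0.02, 0.3, -0.4], [0.5, 0.00, 0.3, 0.1]]
dims = top_variance_dims(corpus, keep=2)
a, b = corpus[0], corpus[2]
print(cosine(a, b), cosine(project(a, dims), project(b, dims)))  # full vs. reduced similarity
print(dimension_reduction(768, 64))  # about 0.917
```

The reported reduction percentages are of this form (one minus kept over original dimensions), averaged across models; whether a reduction is acceptable depends on how well the reduced-space similarities still track the gold STS scores.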

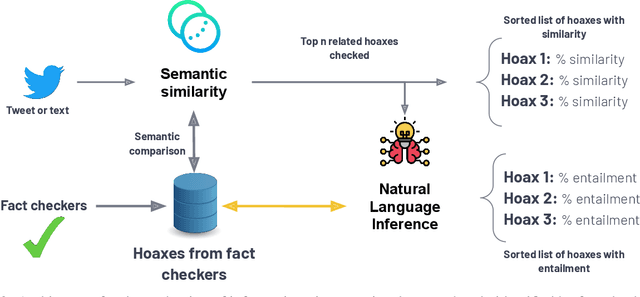

FacTeR-Check: Semi-automated fact-checking through Semantic Similarity and Natural Language Inference

Oct 27, 2021

Abstract: Our society produces and shares overwhelming amounts of information through Online Social Networks (OSNs). Within this environment, misinformation and disinformation have proliferated, becoming a public safety concern in every country. Allowing the public and professionals to efficiently find reliable evidence about the factual veracity of a claim is crucial to mitigating this harmful spread. To this end, we propose FacTeR-Check, a multilingual architecture for semi-automated fact-checking that can be used both by the general public and by fact-checking organisations. FacTeR-Check enables retrieving fact-checked information, verifying unchecked claims, and tracking dangerous information over social media. This architecture involves several modules developed to evaluate semantic similarity, to calculate natural language inference, and to retrieve information from Online Social Networks. Together, these modules form a semi-automated fact-checking tool capable of verifying new claims, extracting related evidence, and tracking the evolution of a hoax on an OSN. While individual modules are validated on related benchmarks (mainly MSTS and SICK), the complete architecture is validated using a new, publicly released dataset called NLI19-SP, containing COVID-19-related hoaxes and tweets from Spanish social media. Our results show state-of-the-art performance on the individual benchmarks, as well as a useful analysis of the evolution over time of 61 different hoaxes.
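
The retrieve-then-infer flow described above can be sketched as follows: rank previously fact-checked statements by cosine similarity to a new claim's embedding, keep the top matches above a threshold, then ask an NLI model whether each match entails or contradicts the claim. The embeddings, the 0.7 threshold, `verify_claim`, and the `toy_nli` stand-in are placeholders assumed for illustration; a real pipeline would use a multilingual sentence encoder and a trained NLI model in their place.

```python
import math
from typing import Callable, Dict, List, Sequence, Tuple


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def verify_claim(
    claim: str,
    claim_vec: Sequence[float],
    checked: Dict[str, Sequence[float]],  # fact-checked statement -> its embedding
    nli: Callable[[str, str], str],        # (premise, hypothesis) -> NLI label
    top_k: int = 3,
    threshold: float = 0.7,                # hypothetical similarity cut-off
) -> List[Tuple[str, float, str]]:
    """Retrieve the most similar fact-checked statements, then label each one with NLI."""
    ranked = sorted(((cosine(claim_vec, vec), text) for text, vec in checked.items()), reverse=True)
    results = []
    for score, text in ranked[:top_k]:
        if score < threshold:
            break  # the list is sorted, so every remaining candidate is less similar
        results.append((text, score, nli(text, claim)))
    return results


def toy_nli(premise: str, hypothesis: str) -> str:
    """Stand-in for a real NLI model, for illustration only."""
    return "contradiction" if " not " in f" {premise} " else "entailment"


checked = {
    "5G does not spread viruses": [1.0, 0.0],
    "Vaccines are tested in clinical trials": [0.0, 1.0],
}
print(verify_claim("5G spreads the virus", [0.9, 0.1], checked, toy_nli))
# one match above the threshold, labelled "contradiction"
```

Similarity alone only says that a fact-check is about the same thing as the claim; the NLI step is what decides whether that evidence supports or refutes it.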

SML: a new Semantic Embedding Alignment Transformer for efficient cross-lingual Natural Language Inference

Mar 18, 2021

Abstract: The ability of Transformers to perform a variety of tasks such as question answering, Natural Language Inference (NLI), or summarisation with precision has made them one of the best paradigms for addressing this kind of task at present. NLI is one of the best scenarios for testing these architectures, due to the knowledge required to understand complex sentences and establish a relation between a hypothesis and a premise. Nevertheless, these models struggle to generalise to other domains and to handle multilingual scenarios. The leading pathway in the literature to address these issues involves designing and training extremely large architectures, which leads to unpredictable behaviour and creates barriers that impede broad access and fine-tuning. In this paper, we propose a new architecture, the siamese multilingual transformer (SML), to efficiently align multilingual embeddings for Natural Language Inference. SML leverages siamese pre-trained multilingual transformers with frozen weights, in which the two input sentences attend to each other before being combined through a matrix alignment method. The experimental results in this paper show that SML drastically reduces the number of trainable parameters while still achieving state-of-the-art performance.
