Alejandro González

A 3D Facial Reconstruction Evaluation Methodology: Comparing Smartphone Scans with Deep Learning Based Methods Using Geometry and Morphometry Criteria

Feb 13, 2025

Abstract: Three-dimensional (3D) facial shape analysis has gained interest due to its potential clinical applications. However, the high cost of advanced 3D facial acquisition systems limits their widespread use, driving the development of low-cost acquisition and reconstruction methods. This study introduces a novel evaluation methodology that goes beyond traditional geometry-based benchmarks by integrating morphometric shape analysis techniques, providing a statistical framework for assessing facial morphology preservation. As a case study, we compare smartphone-based 3D scans with state-of-the-art deep learning reconstruction methods from 2D images, using high-end stereophotogrammetry models as ground truth. This methodology enables a quantitative assessment of global and local shape differences, offering a biologically meaningful validation approach for low-cost 3D facial acquisition and reconstruction techniques.

BioFace3D: A fully automatic pipeline for facial biomarkers extraction of 3D face reconstructions segmented from MRI

Oct 01, 2024

Abstract: Facial dysmorphologies have emerged as potentially critical indicators in the diagnosis and prognosis of genetic, psychotic and rare disorders. While in certain conditions these dysmorphologies are severe, in other cases they may be subtle and not perceivable to the human eye, requiring precise quantitative tools for their identification. Manual coding of facial dysmorphologies is a burdensome task and is subject to inter- and intra-observer variability. To address this gap, we present BioFace3D, a fully automatic tool for the calculation of facial biomarkers using facial models reconstructed from magnetic resonance images. The tool is divided into three automatic modules: the extraction of 3D facial models from magnetic resonance images, the registration of homologous 3D landmarks encoding facial morphology, and the calculation of facial biomarkers from anatomical landmark coordinates using geometric morphometrics techniques.
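As an illustration of the kind of landmark-based comparison that geometric morphometrics relies on, the sketch below aligns two sets of homologous 3D landmarks with an ordinary Procrustes superimposition (removing translation, scale and rotation) and reports the residual shape distance. It is a minimal NumPy sketch, not the BioFace3D implementation: the function name `procrustes_distance` and the synthetic landmark arrays are assumptions made for this example only.

```python
import numpy as np


def procrustes_distance(reference, target):
    """Superimpose `target` onto `reference` (both k x 3 landmark arrays).

    Returns the partial Procrustes distance and the aligned target.
    Hypothetical helper for illustration; not taken from BioFace3D.
    """
    # Remove translation: center each configuration on its centroid.
    ref = reference - reference.mean(axis=0)
    tgt = target - target.mean(axis=0)

    # Remove scale: normalize each configuration to unit centroid size.
    ref = ref / np.linalg.norm(ref)
    tgt = tgt / np.linalg.norm(tgt)

    # Remove rotation: best-fitting rotation from the SVD of the
    # cross-covariance matrix, with a sign fix that forbids reflections.
    u, _, vt = np.linalg.svd(tgt.T @ ref)
    d = np.sign(np.linalg.det(u @ vt))
    rotation = u @ np.diag([1.0, 1.0, d]) @ vt

    aligned = tgt @ rotation
    return float(np.linalg.norm(ref - aligned)), aligned


# Usage with synthetic landmarks (assumed data, 10 landmarks in 3D).
rng = np.random.default_rng(0)
landmarks = rng.normal(size=(10, 3))

# A rotated, scaled and shifted copy has the same shape.
angle = np.deg2rad(30.0)
rot_z = np.array([
    [np.cos(angle), -np.sin(angle), 0.0],
    [np.sin(angle), np.cos(angle), 0.0],
    [0.0, 0.0, 1.0],
])
same_shape = 2.5 * landmarks @ rot_z + np.array([4.0, -1.0, 7.0])
dist_same, _ = procrustes_distance(landmarks, same_shape)

# Perturbing the landmarks changes the shape, so the distance grows.
perturbed = landmarks + rng.normal(scale=0.2, size=landmarks.shape)
dist_perturbed, _ = procrustes_distance(landmarks, perturbed)
```

Because position, size and orientation are removed before the comparison, `dist_same` is numerically close to zero, while `dist_perturbed` reflects a genuine difference in shape.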

Guiding GANs: How to control non-conditional pre-trained GANs for conditional image generation

Jan 04, 2021

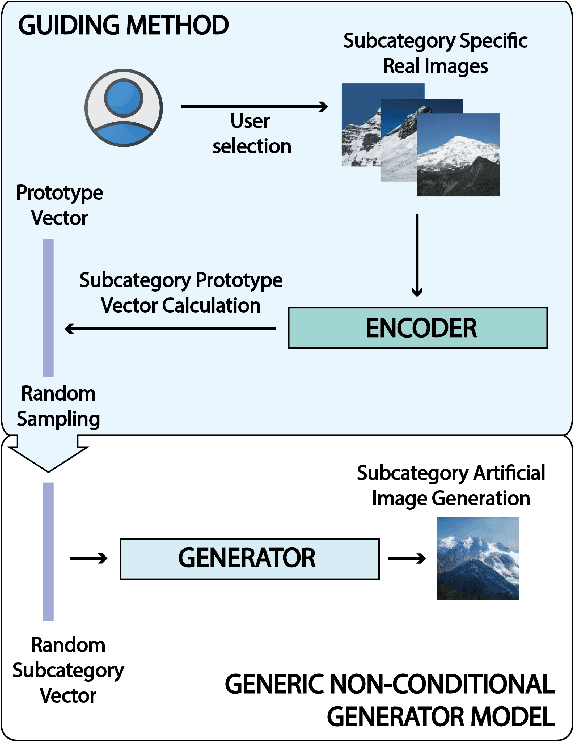

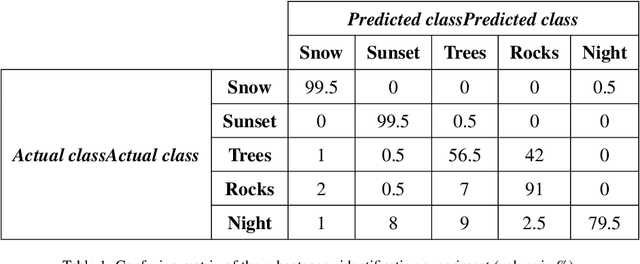

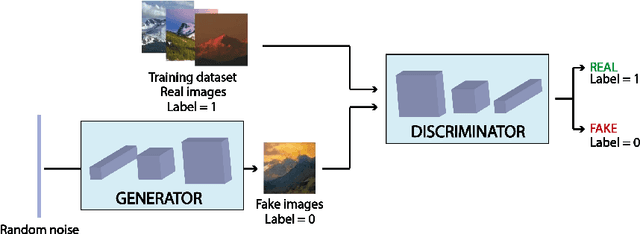

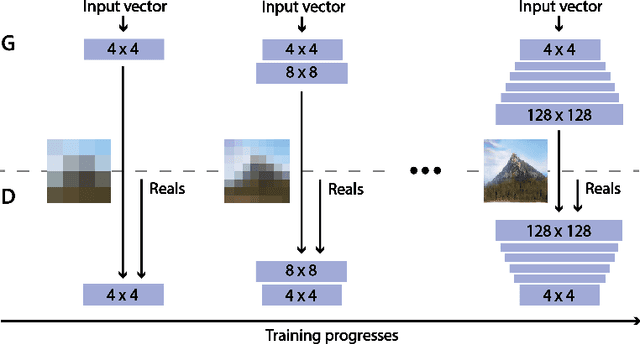

Abstract: Generative Adversarial Networks (GANs) are an arrangement of two neural networks, the generator and the discriminator, that are jointly trained to generate artificial data, such as images, from random inputs. The quality of these generated images has recently reached such levels that they can often lead both machines and humans into mistaking fake examples for real ones. However, the process performed by the generator of the GAN has some limitations when we want to condition the network to generate images from subcategories of a specific class. Some recent approaches tackle this *conditional generation* by introducing extra information prior to the training process, such as image semantic segmentation or textual descriptions. While successful, these techniques still require defining the desired subcategories beforehand and collecting large labeled image datasets representing them to train the GAN from scratch. In this paper we present a novel and alternative method for guiding generic non-conditional GANs to behave as conditional GANs. Instead of re-training the GAN, our approach adds into the mix an encoder network to generate the high-dimensional random input vectors that are fed to the generator network of a non-conditional GAN to make it generate images from a specific subcategory. In our experiments, when compared to training a conditional GAN from scratch, our guided GAN is able to generate artificial images of perceived quality comparable to that of non-conditional GANs after training the encoder on just a few hundred images, which substantially accelerates the process and enables adding new subcategories seamlessly.

Spatiotemporal Stacked Sequential Learning for Pedestrian Detection

Jul 14, 2014

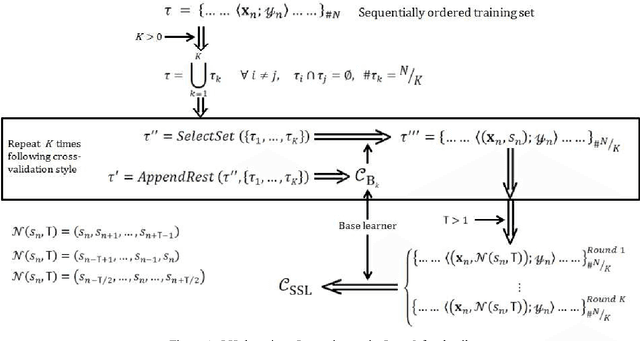

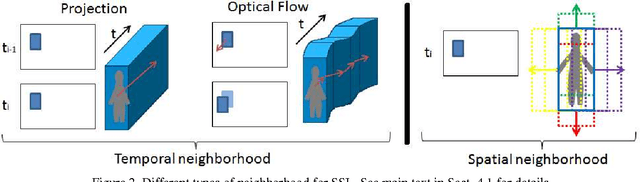

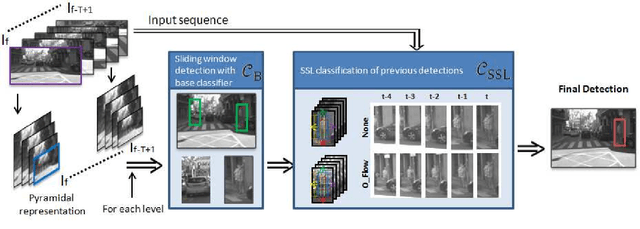

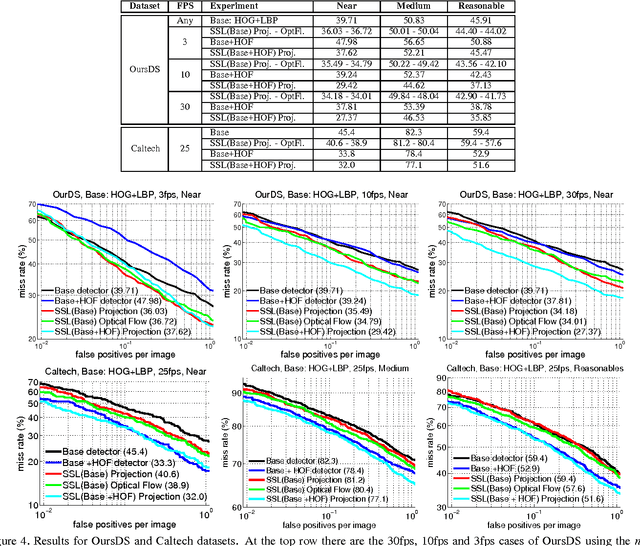

Abstract: Pedestrian classifiers decide which image windows contain a pedestrian. In practice, such classifiers provide a relatively high response at neighboring windows overlapping a pedestrian, while the responses around potential false positives are expected to be lower. Analogous reasoning applies to image sequences. If there is a pedestrian located within a frame, the same pedestrian is expected to appear close to the same location in neighboring frames. Therefore, such a location is likely to receive high classification scores over several frames, while false positives are expected to be more spurious. In this paper we propose to exploit such correlations for improving the accuracy of base pedestrian classifiers. In particular, we propose to use two-stage classifiers which rely not only on the image descriptors required by the base classifiers but also on the response of such base classifiers in a given spatiotemporal neighborhood. More specifically, we train pedestrian classifiers using a stacked sequential learning (SSL) paradigm. We use a new pedestrian dataset we have acquired from a car to evaluate our proposal at different frame rates. We also test on a well-known dataset: Caltech. The obtained results show that our SSL proposal boosts detection accuracy significantly with a minimal impact on the computational cost. Interestingly, SSL improves accuracy the most in the most dangerous situations, i.e. when a pedestrian is close to the camera.
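The two-stage idea described in this abstract can be sketched as a feature-augmentation step: the input to the second-stage classifier is the window's own descriptor concatenated with the base classifier's scores in a spatiotemporal neighborhood. The NumPy sketch below covers only that step, under stated assumptions: the base scores are stored as a dense `(frames, rows, cols)` array, the neighborhood is a fixed-size box, borders are zero-padded, and the names `neighborhood_scores` and `extended_features` are hypothetical. The paper's actual neighborhood definition and classifiers may differ.

```python
import numpy as np


def neighborhood_scores(scores, t, y, x, radius_t=1, radius_xy=1):
    """Collect base-classifier scores around window (t, y, x).

    `scores` is an assumed (frames, rows, cols) volume of base responses.
    Returns a flat vector of fixed length regardless of border position.
    """
    # Zero-pad so that windows near a border still yield a full box.
    # (Padding on every call is simple but wasteful; pad once in practice.)
    padded = np.pad(
        scores,
        ((radius_t, radius_t), (radius_xy, radius_xy), (radius_xy, radius_xy)),
        mode="constant",
    )
    # Index t in `scores` sits at t + radius_t in `padded`, so the box
    # [t - r, t + r] becomes the slice [t, t + 2r] after padding.
    block = padded[
        t : t + 2 * radius_t + 1,
        y : y + 2 * radius_xy + 1,
        x : x + 2 * radius_xy + 1,
    ]
    return block.ravel()


def extended_features(descriptor, scores, t, y, x, radius_t=1, radius_xy=1):
    """Second-stage input: image descriptor plus neighboring base scores."""
    context = neighborhood_scores(scores, t, y, x, radius_t, radius_xy)
    return np.concatenate([descriptor, context])


# Usage with toy data: 3 frames of a 3 x 3 grid of window scores and a
# 4-dimensional descriptor (both assumed, for illustration only).
toy_scores = np.arange(27, dtype=float).reshape(3, 3, 3)
toy_descriptor = np.ones(4)
features = extended_features(toy_descriptor, toy_scores, t=1, y=1, x=1)
```

A second-stage classifier would then be trained on such extended vectors. In stacked sequential learning, the base scores used for training are typically obtained through cross-validation so that the second stage does not see overfitted responses.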
