Manel Mateos

Guiding GANs: How to control non-conditional pre-trained GANs for conditional image generation

Jan 04, 2021

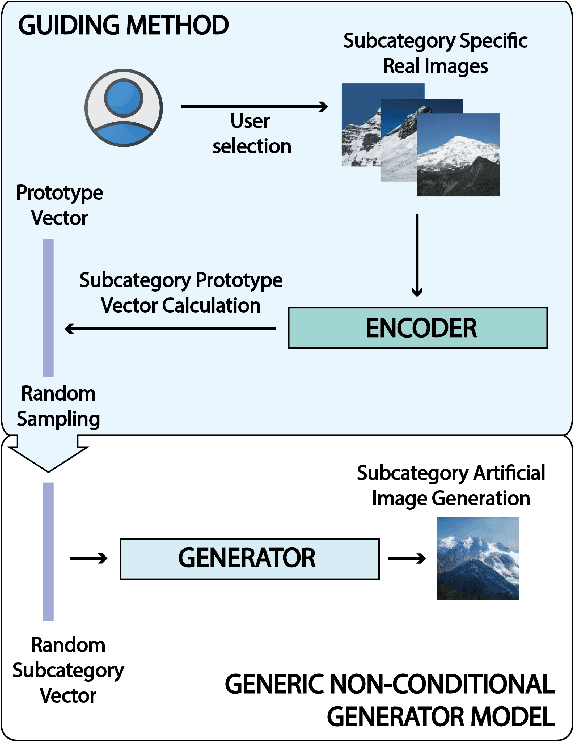

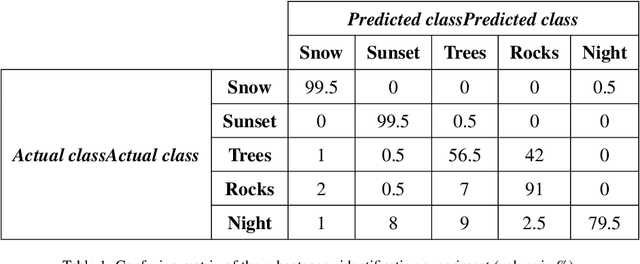

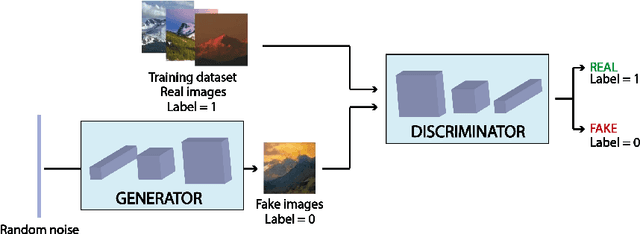

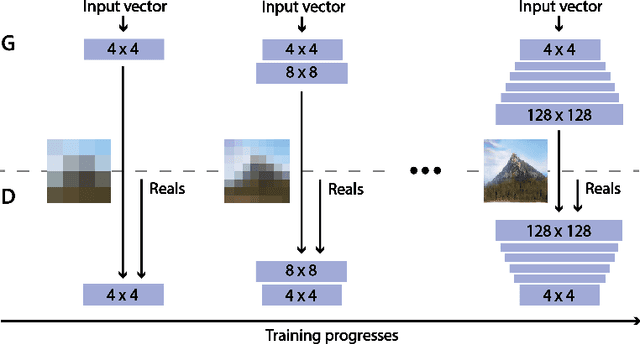

Abstract: Generative Adversarial Networks (GANs) are an arrangement of two neural networks, the generator and the discriminator, that are jointly trained to generate artificial data, such as images, from random inputs. The quality of these generated images has recently reached a level at which both machines and humans often mistake fake examples for real ones. However, the generator has limitations when we want to condition the network to generate images from subcategories of a specific class. Some recent approaches tackle this *conditional generation* by introducing extra information prior to the training process, such as image semantic segmentation or textual descriptions. While successful, these techniques still require defining the desired subcategories beforehand and collecting large labeled image datasets representing them to train the GAN from scratch. In this paper we present a novel alternative method for guiding generic non-conditional GANs to behave as conditional GANs. Instead of re-training the GAN, our approach adds an encoder network that generates the high-dimensional random input vectors fed to the generator network of a non-conditional GAN, making it generate images from a specific subcategory. In our experiments, when compared to training a conditional GAN from scratch, our guided GAN is able to generate artificial images of perceived quality comparable to that of non-conditional GANs after training the encoder on just a few hundred images, which substantially accelerates the process and enables adding new subcategories seamlessly.
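
The core idea, a frozen generator steered by a separately trained encoder, can be illustrated with a toy sketch. Everything here is an assumption made for illustration and is not taken from the paper: the linear "generator" `G_WEIGHTS`, the linear encoder, the reconstruction loss, and the names `train_encoder` and `reconstruction_error` are all hypothetical. The abstract does not state the encoder architecture or its training objective.

```python
import random

LATENT_DIM, IMAGE_DIM = 2, 4

# Toy stand-in for a pre-trained, non-conditional generator: a fixed linear
# map from latent vectors to 4-"pixel" images. It is never updated below.
G_WEIGHTS = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [1.0, -1.0]]


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, vector)) for row in matrix]


def generator(z):
    """Frozen generator: latent vector -> image."""
    return matvec(G_WEIGHTS, z)


def train_encoder(examples, epochs=200, lr=0.02, seed=0):
    """Fit a linear encoder so that generator(encoder(x)) reconstructs x.

    Assumption: a plain squared reconstruction loss, minimised with SGD.
    """
    rng = random.Random(seed)
    enc = [[rng.uniform(-0.1, 0.1) for _ in range(IMAGE_DIM)]
           for _ in range(LATENT_DIM)]
    for _ in range(epochs):
        for x in examples:
            z = matvec(enc, x)
            err = [g - t for g, t in zip(generator(z), x)]
            # Gradient of 0.5 * ||G z - x||^2 with respect to z is G^T err.
            grad_z = [sum(G_WEIGHTS[i][k] * err[i] for i in range(IMAGE_DIM))
                      for k in range(LATENT_DIM)]
            # Chain rule through z = enc @ x; only the encoder is updated.
            for k in range(LATENT_DIM):
                for j in range(IMAGE_DIM):
                    enc[k][j] -= lr * grad_z[k] * x[j]
    return enc


def reconstruction_error(enc, x):
    """Largest absolute pixel difference between x and generator(encoder(x))."""
    out = generator(matvec(enc, x))
    return max(abs(o - t) for o, t in zip(out, x))


# A handful of toy "subcategory" images (here simply generator outputs).
subcategory_examples = [generator(z)
                        for z in ([1.0, 0.0], [0.8, 0.6], [0.6, -0.8])]
encoder = train_encoder(subcategory_examples)

# The encoder supplies the latent input; the frozen generator renders it.
guided_latent = matvec(encoder, subcategory_examples[0])
guided_image = generator(guided_latent)
```

In this sketch only the encoder's weights change during training, which mirrors the abstract's point that the GAN itself is not re-trained. A real setup would replace the linear maps with a pre-trained GAN generator and a neural encoder.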
