Aida Rahmattalabi

Promises and Challenges of Causality for Ethical Machine Learning

Jan 26, 2022

Abstract: In recent years, there has been increasing interest in causal reasoning for designing fair decision-making systems due to its compatibility with legal frameworks, interpretability for human stakeholders, and robustness to spurious correlations inherent in observational data, among other factors. The recent attention to causal fairness, however, has been accompanied by great skepticism due to practical and epistemological challenges with applying current causal fairness approaches in the literature. Motivated by the long-standing empirical work on causality in econometrics, social sciences, and biomedical sciences, in this paper we lay out the conditions for appropriate application of causal fairness under the "potential outcomes framework." We highlight key aspects of causal inference that are often ignored in the causal fairness literature. In particular, we discuss the importance of specifying the nature and timing of interventions on social categories such as race or gender. More precisely, instead of postulating an intervention on immutable attributes, we propose a shift in focus to their perceptions and discuss the implications for fairness evaluation. We argue that such a conceptualization of the intervention is key to evaluating the validity of causal assumptions and conducting sound causal analysis, including avoiding post-treatment bias. Subsequently, we illustrate how causality can address the limitations of existing fairness metrics, including those that depend upon statistical correlations. Specifically, we introduce causal variants of common statistical notions of fairness, and we make a novel observation that under the causal framework there is no fundamental disagreement between different notions of fairness. Finally, we conduct extensive experiments where we demonstrate our approach for evaluating and mitigating unfairness, especially when post-treatment variables are present.

Learning Resource Allocation Policies from Observational Data with an Application to Homeless Services Delivery

Jan 25, 2022

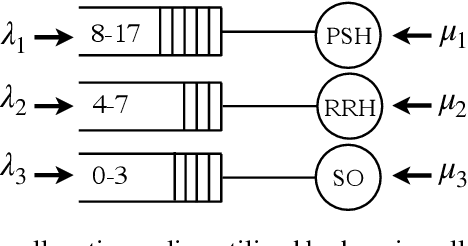

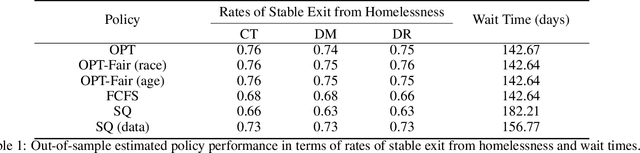

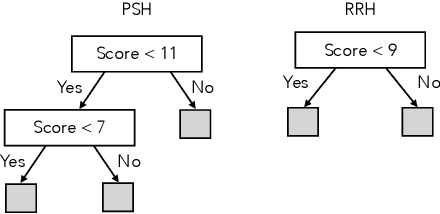

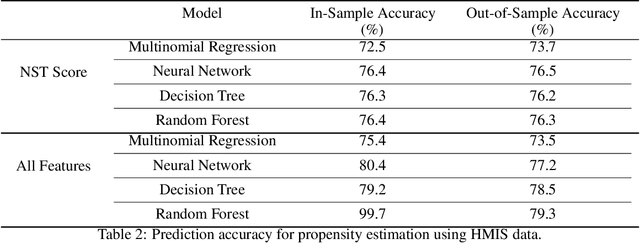

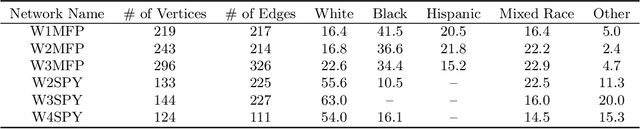

Abstract: We study the problem of learning, from observational data, fair and interpretable policies that effectively match heterogeneous individuals to scarce resources of different types. We model this problem as a multi-class multi-server queuing system where both individuals and resources arrive stochastically over time. Each individual, upon arrival, is assigned to a queue where they wait to be matched to a resource. The resources are assigned in a first-come, first-served (FCFS) fashion according to an eligibility structure that encodes the resource types that serve each queue. We propose a methodology based on techniques in modern causal inference to construct the individual queues as well as learn the matching outcomes, and we provide a mixed-integer optimization (MIO) formulation to optimize the eligibility structure. The MIO problem maximizes policy outcome subject to wait time and fairness constraints. The formulation is flexible and allows for additional linear domain constraints. We conduct extensive analyses using synthetic and real-world data. In particular, we evaluate our framework using data from the U.S. Homeless Management Information System (HMIS). We obtain wait times as low as those of an FCFS policy while improving the rate of exit from homelessness for underserved or vulnerable groups (7% higher for Black individuals and 15% higher for those below 17 years old) and overall.
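As a rough illustration of the matching rule described in this abstract, the following Python sketch assigns each arriving resource to the longest-waiting individual among the queues it is eligible to serve. It is not the authors' implementation: the function name `fcfs_match`, the queue and resource labels, and the choice to drop a resource when no eligible individual is waiting are all assumptions made for the example.

```python
from collections import deque


def fcfs_match(arrivals, eligibility):
    """Match resources to waiting individuals first-come, first-served.

    arrivals: list of (time, kind, label) sorted by time, where kind is
        "person" (label = queue name) or "resource" (label = resource type).
    eligibility: dict mapping resource type -> set of queue names it may serve.
    Returns a list of (resource_type, queue, arrival_time, match_time).
    """
    queues = {}   # queue name -> deque of arrival times of waiting individuals
    matches = []
    for time, kind, label in arrivals:
        if kind == "person":
            queues.setdefault(label, deque()).append(time)
            continue
        # Resource arrival: consider only eligible queues that are non-empty.
        candidates = [q for q in eligibility.get(label, ()) if queues.get(q)]
        if not candidates:
            continue  # assumption: an unmatched resource is simply dropped
        # Serve the earliest arrival; break ties by queue name for determinism.
        best = min(candidates, key=lambda q: (queues[q][0], q))
        matches.append((label, best, queues[best].popleft(), time))
    return matches


# Hypothetical queues and resource types, for illustration only.
eligibility = {
    "permanent_housing": {"high_need"},
    "rapid_rehousing": {"high_need", "low_need"},
}
arrivals = [
    (0, "person", "high_need"),
    (1, "person", "low_need"),
    (2, "resource", "rapid_rehousing"),
    (3, "resource", "permanent_housing"),
    (4, "person", "high_need"),
    (5, "resource", "permanent_housing"),
]
matches = fcfs_match(arrivals, eligibility)
wait_times = [match_time - arrived for _, _, arrived, match_time in matches]
```

In this toy run the eligibility structure determines who is served: the individual in the hypothetical `low_need` queue is never matched because the only resource type that could serve them went to an earlier arrival. Trade-offs of this kind are what an optimization over eligibility structures would have to weigh against wait time and fairness constraints.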

Fair Influence Maximization: A Welfare Optimization Approach

Jun 14, 2020

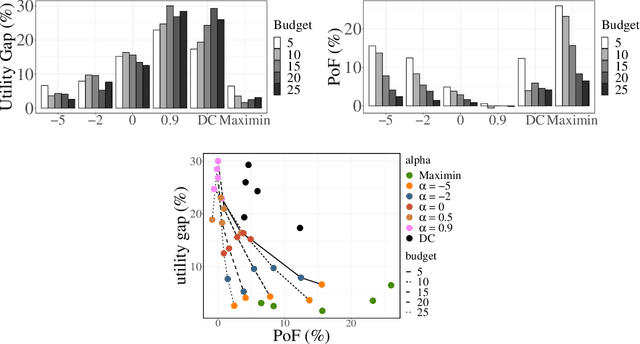

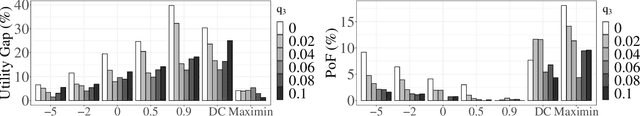

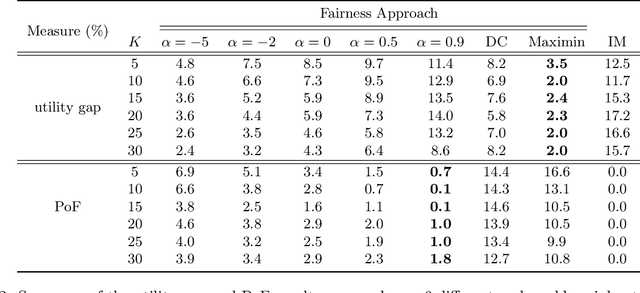

Abstract: Several social interventions (e.g., suicide and HIV prevention) leverage social network information to maximize outreach. Algorithmic influence maximization techniques have been proposed to aid with the choice of influencers (or peer leaders) in such interventions. Traditional algorithms for influence maximization have not been designed with social interventions in mind. As a result, they may disproportionately exclude minority communities from the benefits of the intervention. This has motivated research on fair influence maximization. Existing techniques require committing to a single domain-specific fairness measure. This makes it hard for a decision maker to meaningfully compare these notions and their resulting trade-offs across different applications. We address these shortcomings by extending the principles of cardinal welfare to the influence maximization setting, which is underlain by complex connections between members of different communities. We generalize the theory regarding these principles and show under what circumstances these principles can be satisfied by a welfare function. We then propose a family of welfare functions governed by a single inequity aversion parameter, which allows a decision maker to study task-dependent trade-offs between fairness and total influence and to effectively trade off quantities like influence gap by varying this parameter. We use these welfare functions as a fairness notion to rule out undesirable allocations. We show that the resulting optimization problem is monotone and submodular and can be solved with optimality guarantees. Finally, we carry out a detailed experimental analysis on synthetic and real social networks and show that high welfare can be achieved without sacrificing the total influence significantly. Interestingly, we can show that there exist welfare functions that empirically satisfy all of the principles.
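The abstract does not state the functional form of its welfare functions. As a minimal sketch of how a single inequity aversion parameter can shift the preferred allocation, the Python example below uses the standard isoelastic family from cardinal welfare theory, which is an assumption here and not necessarily the paper's exact definition. The community sizes and influence fractions are made up for illustration.

```python
import math


def isoelastic_welfare(utilities, sizes, alpha):
    """Size-weighted isoelastic welfare over communities.

    utilities: per-community utility, e.g. the fraction of members influenced
        (must be strictly positive).
    sizes: number of members in each community.
    alpha: inequity aversion parameter, alpha <= 1. alpha = 1 reduces to
        total influence; smaller alpha penalizes unequal utilities more.
    """
    if alpha > 1:
        raise ValueError("alpha must be at most 1")
    if any(u <= 0 for u in utilities):
        raise ValueError("utilities must be strictly positive")
    if alpha == 0:
        # Limiting case of the family: weighted sum of log-utilities.
        return sum(n * math.log(u) for u, n in zip(utilities, sizes))
    return sum(n * u ** alpha / alpha for u, n in zip(utilities, sizes))


# Two hypothetical communities: a majority of 80 and a minority of 20.
sizes = [80, 20]
unequal = [0.5, 0.1]   # more total influence, minority largely excluded
equal = [0.4, 0.4]     # slightly less total influence, evenly spread

# alpha = 1 (no inequity aversion) ranks allocations by total influence.
prefers_unequal_at_1 = (
    isoelastic_welfare(unequal, sizes, 1) > isoelastic_welfare(equal, sizes, 1)
)
# alpha = 0 (stronger inequity aversion) favors the even allocation.
prefers_equal_at_0 = (
    isoelastic_welfare(equal, sizes, 0) > isoelastic_welfare(unequal, sizes, 0)
)
```

Under these assumptions, lowering `alpha` flips the ranking from the allocation with higher total influence to the one that spreads influence evenly. This is the kind of fairness versus total-influence trade-off that the abstract says a decision maker can explore by varying the parameter.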
