Ahsan Pervaiz

SCOPE: Safe Exploration for Dynamic Computer Systems Optimization

Apr 22, 2022

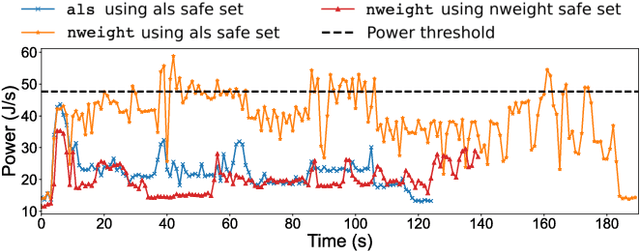

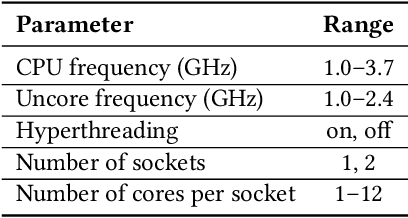

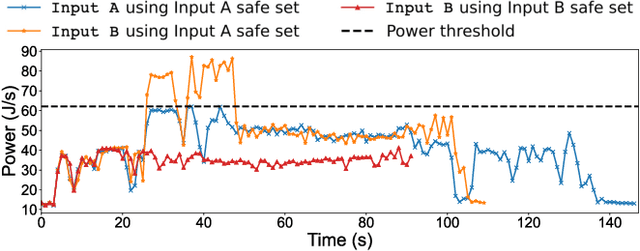

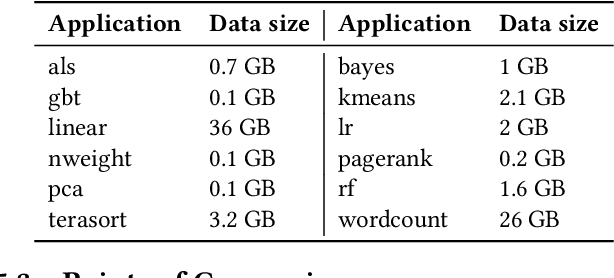

Abstract:Modern computer systems need to execute under strict safety constraints (e.g., a power limit), but doing so often conflicts with their ability to deliver high performance (i.e. minimal latency). Prior work uses machine learning to automatically tune hardware resources such that the system execution meets safety constraints optimally. Such solutions monitor past system executions to learn the system's behavior under different hardware resource allocations before dynamically tuning resources to optimize the application execution. However, system behavior can change significantly between different applications and even different inputs of the same applications. Hence, the models learned using data collected a priori are often suboptimal and violate safety constraints when used with new applications and inputs. To address this limitation, we introduce the concept of an execution space, which is the cross product of hardware resources, input features, and applications. To dynamically and safely allocate hardware resources from the execution space, we present SCOPE, a resource manager that leverages a novel safe exploration framework. We evaluate SCOPE's ability to deliver improved latency while minimizing power constraint violations by dynamically configuring hardware while running a variety of Apache Spark applications. Compared to prior approaches that minimize power constraint violations, SCOPE consumes comparable power while improving latency by up to 9.5X. Compared to prior approaches that minimize latency, SCOPE achieves similar latency but reduces power constraint violation rates by up to 45.88X, achieving almost zero safety constraint violations across all applications.

Cello: Efficient Computer Systems Optimization with Predictive Early Termination and Censored Regression

Apr 11, 2022

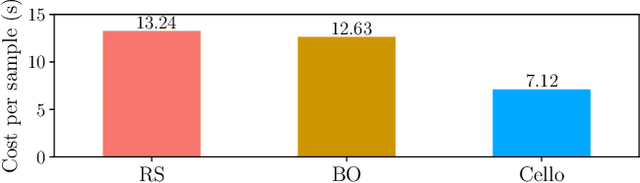

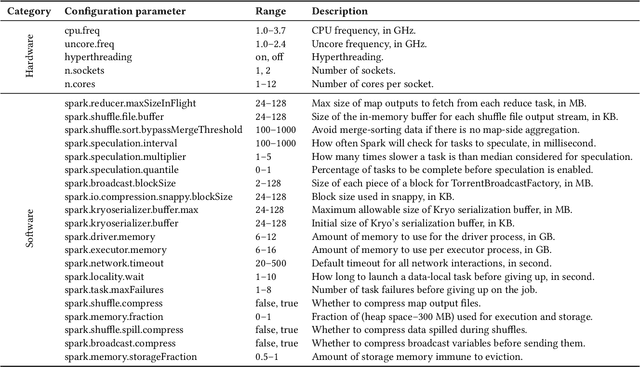

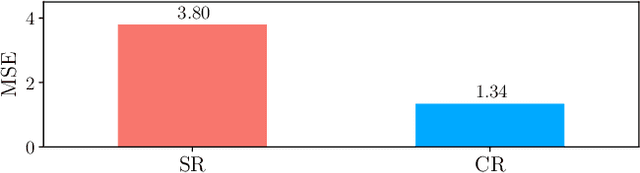

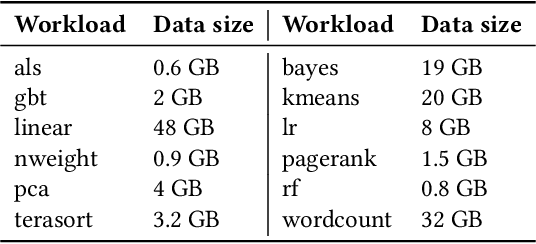

Abstract:Sample-efficient machine learning (SEML) has been widely applied to find optimal latency and power tradeoffs for configurable computer systems. Instead of randomly sampling from the configuration space, SEML reduces the search cost by dramatically reducing the number of configurations that must be sampled to optimize system goals (e.g., low latency or energy). Nevertheless, SEML only reduces one component of cost -- the total number of samples collected -- but does not decrease the cost of collecting each sample. Critically, not all samples are equal; some take much longer to collect because they correspond to slow system configurations. This paper present Cello, a computer systems optimization framework that reduces sample collection costs -- especially those that come from the slowest configurations. The key insight is to predict ahead of time whether samples will have poor system behavior (e.g., long latency or high energy) and terminate these samples early before their measured system behavior surpasses the termination threshold, which we call it predictive early termination. To predict the future system behavior accurately before it manifests as high runtime or energy, Cello uses censored regression to produces accurate predictions for running samples. We evaluate Cello by optimizing latency and energy for Apache Spark workloads. We give Cello a fixed amount of time to search a combined space of hardware and software configuration parameters. Our evaluation shows that compared to the state-of-the-art SEML approach in computer systems optimization, Cello improves latency by 1.19X for minimizing latency under a power constraint, and improves energy by 1.18X for minimizing energy under a latency constraint.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge