Advaith Venkatramanan Sethuraman

TURTLMap: Real-time Localization and Dense Mapping of Low-texture Underwater Environments with a Low-cost Unmanned Underwater Vehicle

Aug 02, 2024

Abstract: Significant work has been done on advancing localization and mapping in underwater environments. Still, state-of-the-art methods are challenged by low-texture environments, which are common in underwater settings. This makes it difficult to use existing methods in diverse, real-world scenes. In this paper, we present TURTLMap, a novel solution that focuses on textureless underwater environments through a real-time localization and mapping method. We show that this method is low-cost and capable of tracking the robot accurately while constructing a dense map of a low-texture environment in real time. We evaluate the proposed method using real-world data collected in an indoor water tank with a motion capture system and a ground-truth reference map. Qualitative and quantitative results validate that the proposed system achieves accurate and robust localization and precise dense mapping, even when subject to wave conditions. The project page for TURTLMap is https://umfieldrobotics.github.io/TURTLMap.

STARS: Zero-shot Sim-to-Real Transfer for Segmentation of Shipwrecks in Sonar Imagery

Oct 02, 2023

Abstract: In this paper, we address the problem of sim-to-real transfer for object segmentation when there is no access to real examples of an object of interest during training, i.e., zero-shot sim-to-real transfer for segmentation. We focus on the application of shipwreck segmentation in side scan sonar imagery. Our novel segmentation network, STARS, addresses this challenge by fusing a predicted deformation field and anomaly volume, allowing it to generalize better to real sonar images and achieve more effective zero-shot sim-to-real transfer for image segmentation. We evaluate the sim-to-real transfer capabilities of our method on a real, expert-labeled side scan sonar dataset of shipwrecks collected from field work surveys with an autonomous underwater vehicle (AUV). STARS is trained entirely in simulation and performs zero-shot shipwreck segmentation with no additional fine-tuning on real data. Our method provides a significant 20% increase in segmentation performance for the targeted shipwreck class compared to the best baseline.

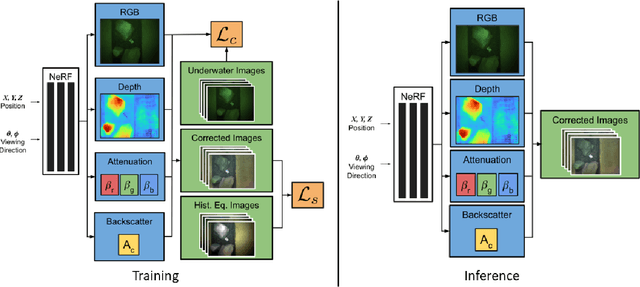

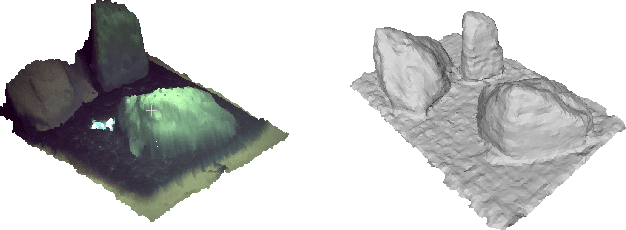

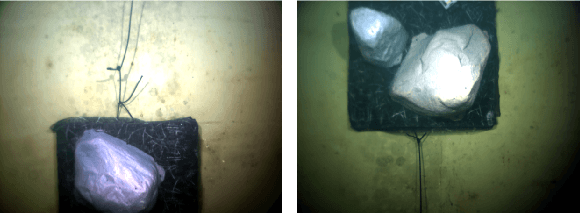

WaterNeRF: Neural Radiance Fields for Underwater Scenes

Sep 27, 2022

Abstract: Underwater imaging is a critical task performed by marine robots for a wide range of applications including aquaculture, marine infrastructure inspection, and environmental monitoring. However, water column effects, such as attenuation and backscattering, drastically change the color and quality of imagery captured underwater. Because these effects depend on range and on varying water conditions, restoring underwater imagery is a challenging problem. This impacts downstream perception tasks including depth estimation and 3D reconstruction. In this paper, we advance the state of the art in neural radiance fields (NeRFs) to enable physics-informed dense depth estimation and color correction. Our proposed method, WaterNeRF, estimates parameters of a physics-based model for underwater image formation, leading to a hybrid data-driven and model-based solution. After determining the scene structure and radiance field, we can produce novel views of degraded as well as corrected underwater images, along with dense depth of the scene. We evaluate the proposed method qualitatively and quantitatively on a real underwater dataset.
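To make the range dependency of attenuation and backscattering concrete, the following is a minimal sketch of a commonly used simplified underwater image formation model. It is an illustration only and not necessarily the exact model WaterNeRF estimates; the function names and the parameters `beta_d`, `beta_b`, and `b_inf` are assumptions introduced here, not taken from the abstract.

```python
import math

def degrade(j, z, beta_d, beta_b, b_inf):
    """Simulate one colour-channel intensity observed through water.

    j      : true (in-air) scene radiance for this channel, in [0, 1]
    z      : range from camera to scene point, in metres
    beta_d : attenuation coefficient of the direct signal (assumed name)
    beta_b : backscatter coefficient (assumed name)
    b_inf  : veiling light, i.e. backscatter at infinite range (assumed name)
    """
    direct = j * math.exp(-beta_d * z)                    # signal fades with range
    backscatter = b_inf * (1.0 - math.exp(-beta_b * z))   # haze grows with range
    return direct + backscatter

def restore(i, z, beta_d, beta_b, b_inf):
    """Invert the model above: remove backscatter, then undo attenuation."""
    backscatter = b_inf * (1.0 - math.exp(-beta_b * z))
    return (i - backscatter) * math.exp(beta_d * z)

if __name__ == "__main__":
    # Hypothetical parameter values chosen only for demonstration.
    observed = degrade(0.8, z=3.0, beta_d=0.4, beta_b=0.25, b_inf=0.3)
    recovered = restore(observed, z=3.0, beta_d=0.4, beta_b=0.25, b_inf=0.3)
    print(observed, recovered)
```

In this sketch, correction requires both the water parameters and the per-pixel range `z`, which is why color correction and dense depth estimation are naturally coupled.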
