Abigail Millings

Automatic recognition of child speech for robotic applications in noisy environments

Nov 08, 2016

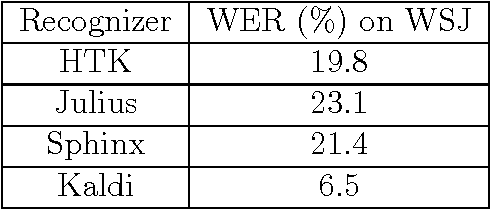

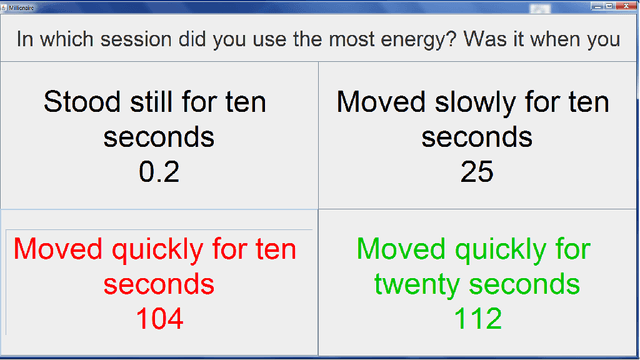

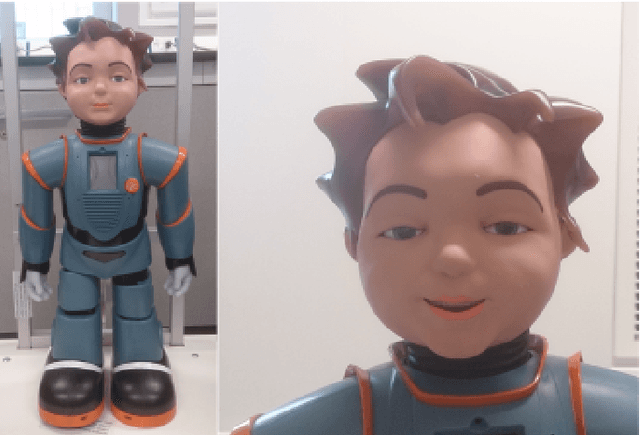

Abstract: Automatic speech recognition (ASR) allows a natural and intuitive interface for robotic educational applications for children. However, there are a number of challenges to overcome before such an interface can operate robustly in realistic settings, including the intrinsic difficulties of recognising child speech and the high levels of background noise often present in classrooms. As part of the EU EASEL project, we have provided several contributions to address these challenges, implementing our own ASR module for use in robotics applications. We used the latest deep neural network algorithms, which provide a leap in performance over the traditional GMM approach, and applied data augmentation methods to improve robustness to noise and speaker variation. We provide a close integration between the ASR module and the rest of the dialogue system, allowing the ASR to receive in real time the language models relevant to the current section of the dialogue, greatly improving accuracy. We integrated our ASR module into an interactive, multimodal system using a small humanoid robot to help children learn about exercise and energy. The system was installed at a public museum event as part of a research study in which 320 children (aged 3 to 14) interacted with the robot, with our ASR achieving 90% accuracy for fluent and near-fluent speech.

Impact of robot responsiveness and adult involvement on children's social behaviours in human-robot interaction

Jun 20, 2016

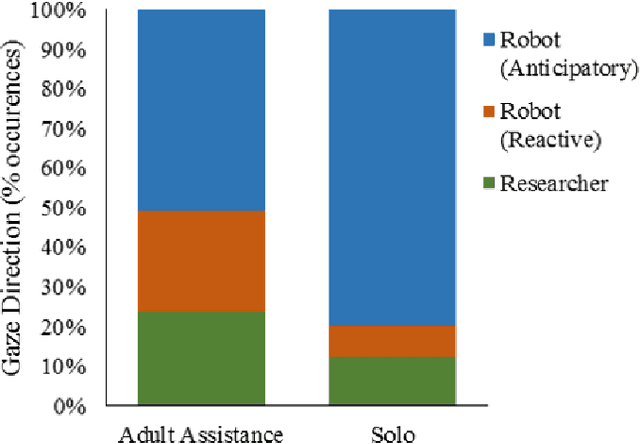

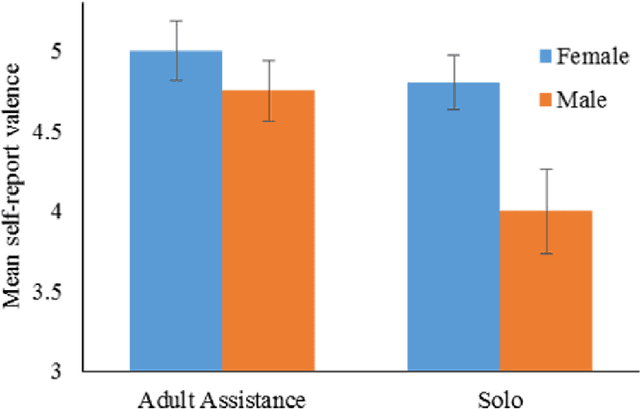

Abstract: A key challenge in developing engaging social robots is creating convincing, autonomous and responsive agents, which users perceive, and treat, as social beings. As part of the collaborative project Expressive Agents for Symbiotic Education and Learning (EASEL), this study examines the impact of autonomous response to children's speech, by the humanoid robot Zeno, on their interactions with it as a social entity. Results indicate that robot autonomy and adult assistance during HRI can substantially influence children's behaviour during the interaction and their affect afterwards. Children working with a fully autonomous, responsive robot demonstrated greater physical activity following robot instruction than those working with a less responsive robot, which required adult assistance to interact with. During dialogue with the robot, children working with the fully autonomous robot also looked towards the robot in anticipation of its vocalisations on more occasions. In contrast, a less responsive robot, requiring adult assistance to interact with, led to greater self-reported positive affect and more occasions of children looking to the robot in response to its vocalisations. We discuss the broader implications of these findings in terms of anthropomorphism of social robots and in relation to the overall project strategy to further the understanding of how interactions with social robots could lead to task-appropriate symbiotic relationships.
