Abari Bhattacharya

Aspect-oriented Consumer Health Answer Summarization

May 10, 2024

Abstract: Community Question-Answering (CQA) forums have revolutionized how people seek information, especially information related to their healthcare needs, placing their trust in the collective wisdom of the public. However, a single query can receive several answers, which makes it hard to grasp the key information related to the specific health concern. Typically, CQA forums feature a single top-voted answer as a representative summary for each query. However, a single answer overlooks the alternative solutions and other information frequently offered in other responses. Our research focuses on aspect-based summarization of health answers to address this limitation. Summarizing responses under different aspects such as suggestions, information, personal experiences, and questions can enhance the usability of the platforms. We formalize a multi-stage annotation guideline and contribute a unique dataset comprising aspect-based human-written health answer summaries. With this dataset, we build an automated multi-faceted answer summarization pipeline based on task-specific fine-tuning of several state-of-the-art models. The pipeline leverages question similarity to retrieve relevant answer sentences and then classifies them into the appropriate aspect type. Following this, we employ several recent abstractive summarization models to generate aspect-based summaries. Finally, we present a comprehensive human analysis and find that our summaries rank high in capturing relevant content and a wide range of solutions.

Reference Resolution and Context Change in Multimodal Situated Dialogue for Exploring Data Visualizations

Sep 06, 2022

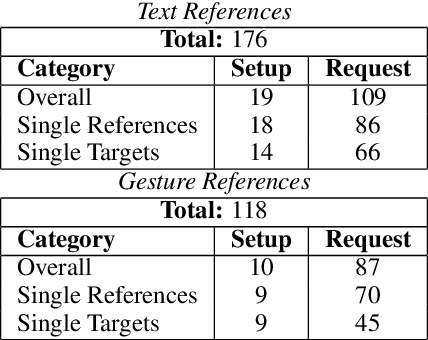

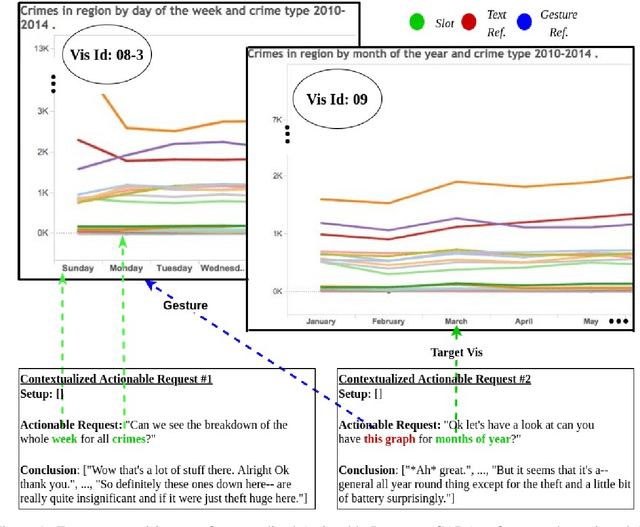

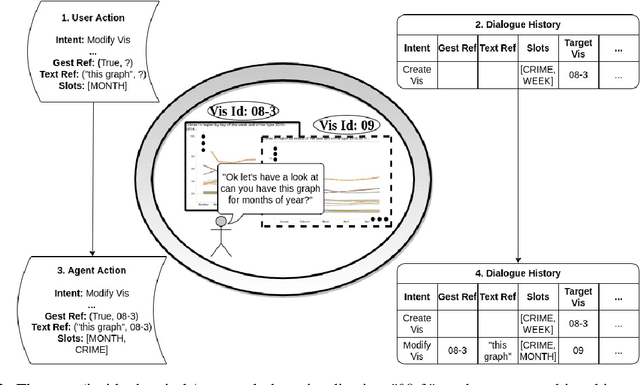

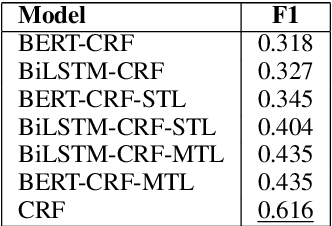

Abstract: Reference resolution, which aims to identify entities being referred to by a speaker, is more complex in real-world settings: new referents may be created by processes the agents engage in and/or be salient only because they belong to the shared physical setting. Our focus is on resolving references to visualizations on a large screen display in multimodal dialogue; crucially, reference resolution is directly involved in the process of creating new visualizations. We describe our annotations for user references, made via language and hand gesture, to visualizations appearing on a large screen, and also for new entity establishment, which results from executing the user request to create a new visualization. We also describe our reference resolution pipeline, which relies on an information-state architecture to maintain dialogue context. We report results on detecting and resolving references, the effectiveness of contextual information on the model, and under-specified requests for creating visualizations. We also experiment with conventional CRF and deep learning / transformer models (BiLSTM-CRF and BERT-CRF) for tagging references in user utterance text. Our results show that transfer learning significantly boosts the performance of the deep learning methods, although CRF still outperforms them, suggesting that conventional methods may generalize better for low-resource data.
