Aashaka Desai

Systemic Biases in Sign Language AI Research: A Deaf-Led Call to Reevaluate Research Agendas

Mar 05, 2024

Abstract: Growing research in sign language recognition, generation, and translation AI has been accompanied by calls for ethical development of such technologies. While these works are crucial to helping individual researchers do better, there is a notable lack of discussion of systemic biases or analysis of rhetoric that shape the research questions and methods in the field, especially as it remains dominated by hearing non-signing researchers. Therefore, we conduct a systematic review of 101 recent papers in sign language AI. Our analysis identifies significant biases in the current state of sign language AI research, including an overfocus on addressing perceived communication barriers, a lack of use of representative datasets, use of annotations lacking linguistic foundations, and development of methods that build on flawed models. We take the position that the field lacks meaningful input from Deaf stakeholders, and is instead driven by what decisions are the most convenient or perceived as important to hearing researchers. We end with a call to action: the field must make space for Deaf researchers to lead the conversation in sign language AI.

ASL Citizen: A Community-Sourced Dataset for Advancing Isolated Sign Language Recognition

Apr 12, 2023

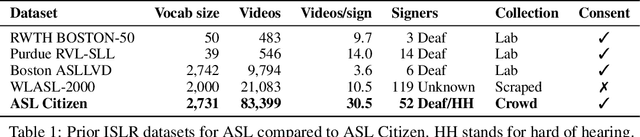

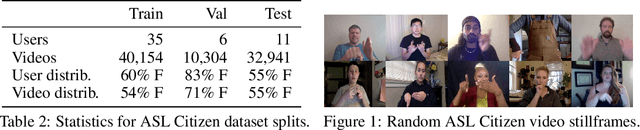

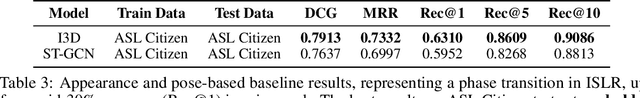

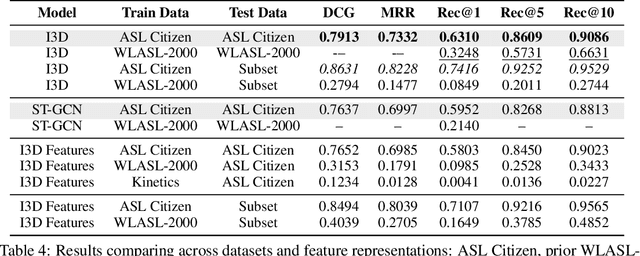

Abstract: Sign languages are used as a primary language by approximately 70 million D/deaf people world-wide. However, most communication technologies operate in spoken and written languages, creating inequities in access. To help tackle this problem, we release ASL Citizen, the largest Isolated Sign Language Recognition (ISLR) dataset to date, collected with consent and containing 83,912 videos for 2,731 distinct signs filmed by 52 signers in a variety of environments. We propose that this dataset be used for sign language dictionary retrieval for American Sign Language (ASL), where a user demonstrates a sign to their own webcam with the aim of retrieving matching signs from a dictionary. We show that training supervised machine learning classifiers with our dataset greatly advances the state-of-the-art on metrics relevant for dictionary retrieval, achieving, for instance, 62% accuracy and a recall-at-10 of 90%, evaluated entirely on videos of users who are not present in the training or validation sets. An accessible PDF of this article is available at https://aashakadesai.github.io/research/ASL_Dataset__arxiv_.pdf
