Aaron Long

Whiterabbit AI, Inc., 3930 Freedom Circle Suite 101, Santa Clara, CA 95054

A multi-site study of a breast density deep learning model for full-field digital mammography and digital breast tomosynthesis exams

Jan 23, 2020

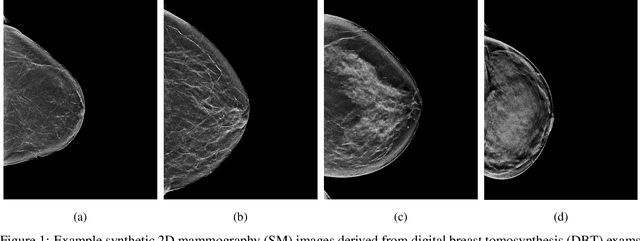

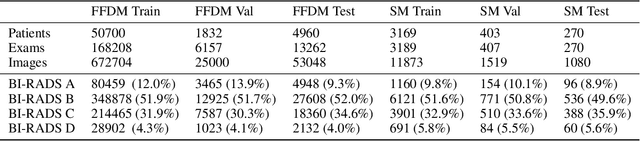

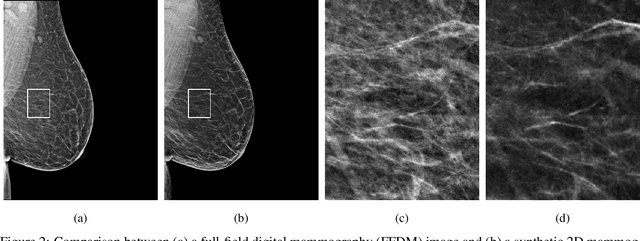

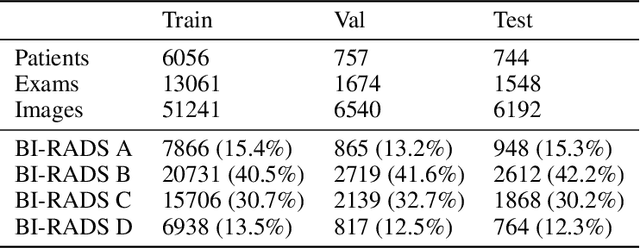

Abstract: $\textbf{Purpose:}$ To develop a Breast Imaging Reporting and Data System (BI-RADS) breast density deep learning (DL) model in a multi-site setting for synthetic 2D mammography (SM) images derived from 3D digital breast tomosynthesis (DBT) exams, using full-field digital mammography (FFDM) images and limited SM data. $\textbf{Materials and Methods:}$ In this retrospective study, a DL model was trained to predict BI-RADS breast density using FFDM images acquired from 2008 to 2017 (Site 1: 57492 patients, 187627 exams, 750752 images). The FFDM model was evaluated using SM datasets from two institutions (Site 1: 3842 patients, 3866 exams, 14472 images, acquired from 2016 to 2017; Site 2: 7557 patients, 16283 exams, 63973 images, acquired from 2015 to 2019). Adaptation methods were investigated to improve performance on the SM datasets, and the effect of dataset size on each adaptation method was considered. Statistical significance was assessed using confidence intervals (CI), estimated by bootstrapping. $\textbf{Results:}$ Without adaptation, the model demonstrated close agreement with the original reporting radiologists for all three datasets (Site 1 FFDM: linearly-weighted $\kappa_w$ = 0.75, 95\% CI: [0.74, 0.76]; Site 1 SM: $\kappa_w$ = 0.71, CI: [0.64, 0.78]; Site 2 SM: $\kappa_w$ = 0.72, CI: [0.70, 0.75]). With adaptation, performance improved for Site 2 (Site 1: $\kappa_w$ = 0.72, CI: [0.66, 0.79]; Site 2: $\kappa_w$ = 0.79, CI: [0.76, 0.81]) using only 500 SM images from each site. $\textbf{Conclusion:}$ A BI-RADS breast density DL model demonstrated strong performance on FFDM and SM images from two institutions without training on SM images, and improved with adaptation using few SM images.
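The agreement statistic reported above, a linearly-weighted $\kappa_w$ with a bootstrapped 95% CI, can be sketched as follows. This is a minimal illustration and not the authors' evaluation code: the function names, the integer encoding of the four BI-RADS density categories as 0 to 3, the percentile bootstrap, and resampling at the level of individual exams are all assumptions made for the example.

```python
import numpy as np


def linear_weighted_kappa(rater_a, rater_b, n_classes=4):
    """Cohen's kappa with linear disagreement weights |i - j| / (n_classes - 1)."""
    a = np.asarray(rater_a)
    b = np.asarray(rater_b)
    # Joint distribution of the two raters' labels.
    observed = np.zeros((n_classes, n_classes))
    np.add.at(observed, (a, b), 1)
    observed /= observed.sum()
    # Distribution expected if the raters were independent.
    expected = np.outer(observed.sum(axis=1), observed.sum(axis=0))
    idx = np.arange(n_classes)
    weights = np.abs(idx[:, None] - idx[None, :]) / (n_classes - 1)
    return 1.0 - (weights * observed).sum() / (weights * expected).sum()


def bootstrap_ci(rater_a, rater_b, n_boot=1000, alpha=0.05, seed=0):
    """Percentile bootstrap CI for kappa (assumption: resample single exams)."""
    rng = np.random.default_rng(seed)
    a = np.asarray(rater_a)
    b = np.asarray(rater_b)
    n = len(a)
    stats = []
    for _ in range(n_boot):
        sample = rng.integers(0, n, size=n)  # draw n exams with replacement
        stats.append(linear_weighted_kappa(a[sample], b[sample]))
    low, high = np.quantile(stats, [alpha / 2, 1 - alpha / 2])
    return float(low), float(high)
```

If a patient contributes several exams, resampling patients instead of exams would respect the correlation between them; the abstract does not say which unit was resampled.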

A Hypersensitive Breast Cancer Detector

Jan 23, 2020

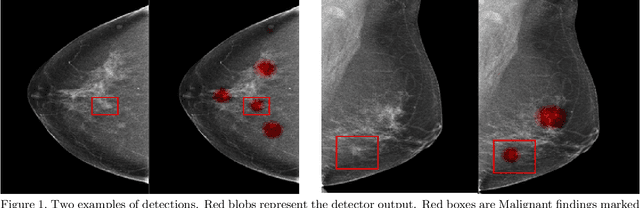

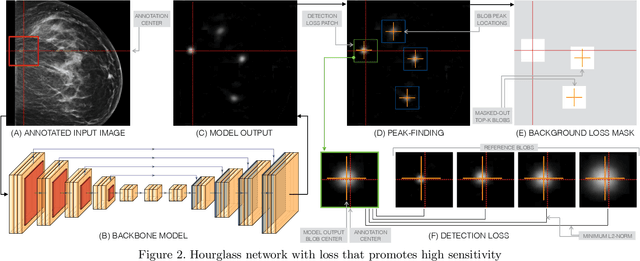

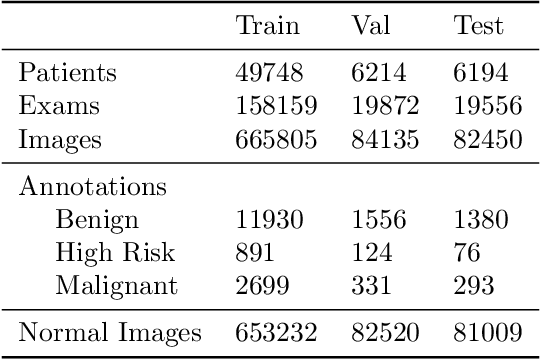

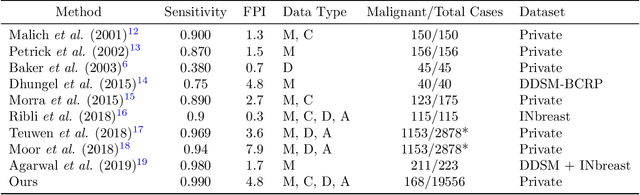

Abstract: Early detection of breast cancer through screening mammography yields a 20-35% increase in survival rate; however, there are not enough radiologists to serve the growing population of women seeking screening mammography. Although commercial computer-aided detection (CADe) software has been available to radiologists for decades, it has failed to improve the interpretation of full-field digital mammography (FFDM) images due to its low sensitivity over the spectrum of findings. In this work, we leverage a large set of FFDM images with loose bounding boxes of mammographically significant findings to train a deep learning detector with extreme sensitivity. Building upon work from the Hourglass architecture, we train a model that produces segmentation-like images with high spatial resolution, with the aim of producing 2D Gaussian blobs centered on ground-truth boxes. We replace the pixel-wise $L_2$ norm with a weak-supervision loss designed to achieve high sensitivity, asymmetrically penalizing false positives and false negatives while softening the noise of the loose bounding boxes by permitting a tolerance for misaligned predictions. The resulting system achieves a sensitivity for malignant findings of 0.99 with only 4.8 false positive markers per image. When utilized in a CADe system, this model could enable a novel workflow in which radiologists can focus their attention with trust on only the locations proposed by the model, expediting the interpretation process and bringing attention to potential findings that could otherwise have been missed. Due to its nearly perfect sensitivity, the proposed detector can also be used as a high-performance proposal generator in two-stage detection systems.
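To make the idea of Gaussian-blob targets and an asymmetric penalty concrete, here is a rough sketch. It is not the paper's weak-supervision loss, whose exact form the abstract does not give: the function names, the `sigma_scale` heuristic, the weight values, and the use of a weighted squared error are all assumptions, and the tolerance for misaligned predictions is not modeled.

```python
import numpy as np


def gaussian_target(shape, boxes, sigma_scale=0.25):
    """Render one 2D Gaussian blob per box, centered on the box center.

    boxes are (x0, y0, x1, y1) in pixels; sigma_scale is a hypothetical
    heuristic that ties the blob width to the box size.
    """
    height, width = shape
    ys, xs = np.mgrid[0:height, 0:width]
    target = np.zeros(shape, dtype=np.float64)
    for x0, y0, x1, y1 in boxes:
        cx, cy = (x0 + x1) / 2.0, (y0 + y1) / 2.0
        sx = max((x1 - x0) * sigma_scale, 1.0)
        sy = max((y1 - y0) * sigma_scale, 1.0)
        blob = np.exp(-0.5 * (((xs - cx) / sx) ** 2 + ((ys - cy) / sy) ** 2))
        target = np.maximum(target, blob)  # overlapping blobs keep the larger value
    return target


def asymmetric_l2(pred, target, fn_weight=10.0, fp_weight=1.0):
    """Squared error that penalizes under-prediction more than over-prediction."""
    diff = pred - target
    # diff < 0 means the prediction is below the target (a missed finding).
    weights = np.where(diff < 0, fn_weight, fp_weight)
    return float(np.mean(weights * diff ** 2))
```

Setting `fn_weight` above `fp_weight` pushes a model trained on such a loss toward higher sensitivity at the cost of more false positive markers, which is the trade-off the abstract describes.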

Adaptation of a deep learning malignancy model from full-field digital mammography to digital breast tomosynthesis

Jan 23, 2020

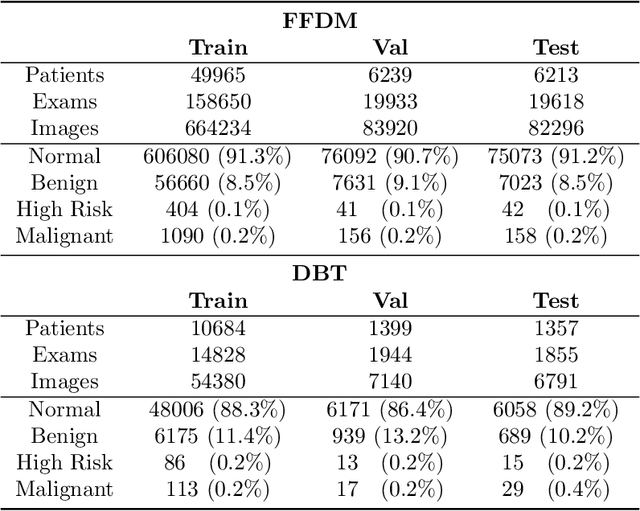

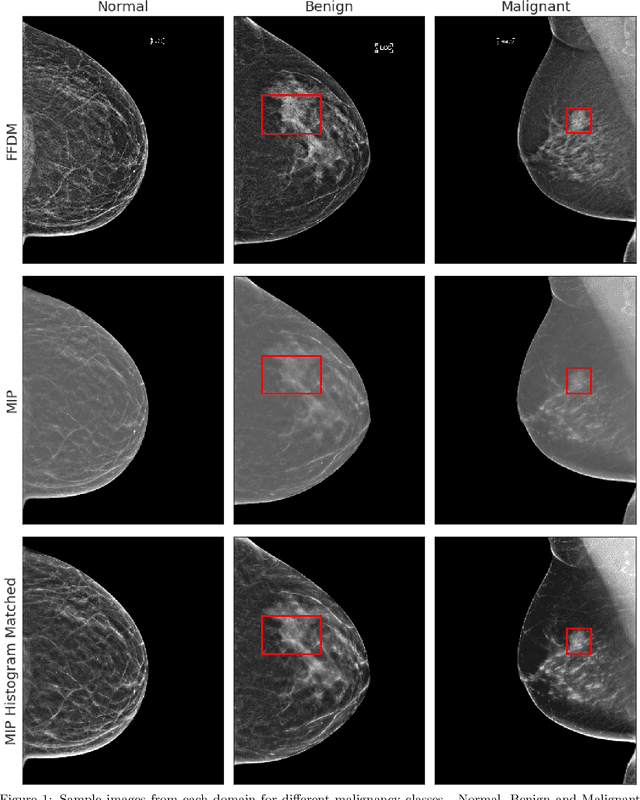

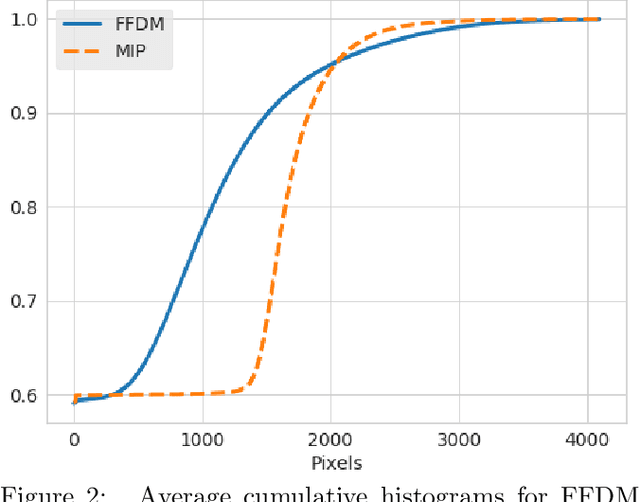

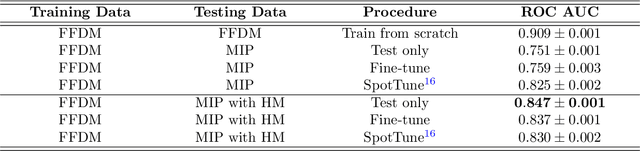

Abstract: Mammography-based screening has helped reduce the breast cancer mortality rate, but has also been associated with potential harms due to low specificity, leading to unnecessary exams or procedures, and low sensitivity. Digital breast tomosynthesis (DBT) improves on conventional mammography by increasing both sensitivity and specificity and is becoming common in clinical settings. However, deep learning (DL) models have been developed mainly on conventional 2D full-field digital mammography (FFDM) or scanned film images. Due to a lack of large annotated DBT datasets, it is difficult to train a model on DBT from scratch. In this work, we present methods to generalize a model trained on FFDM images to DBT images. In particular, we use average histogram matching (HM) and DL fine-tuning methods to generalize an FFDM model to the 2D maximum intensity projection (MIP) of DBT images. In the proposed approach, the differences between the FFDM and DBT domains are first reduced via HM, and then the base model, which was trained on abundant FFDM images, is fine-tuned. When evaluating on image patches extracted around identified findings, we achieve similar areas under the receiver operating characteristic curve (ROC AUC) of $\sim 0.9$ for FFDM and $\sim 0.85$ for MIP images, as compared to a ROC AUC of $\sim 0.75$ when the FFDM model is tested directly on MIP images.
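The histogram matching step can be illustrated with a standard CDF-based intensity mapping. This is a generic sketch and not the authors' pipeline: the function name is hypothetical, and treating the "average" FFDM histogram as a pooled sample of pixel values from several FFDM images is an assumption about what the abstract means.

```python
import numpy as np


def match_histogram(source, reference_pixels):
    """Map source intensities so their distribution follows the reference.

    source: a MIP image (any shape).
    reference_pixels: pixel values pooled from FFDM images (assumption:
    pooling stands in for the average FFDM histogram).
    """
    src = np.asarray(source)
    ref = np.asarray(reference_pixels).ravel()
    src_values, src_index, src_counts = np.unique(
        src.ravel(), return_inverse=True, return_counts=True
    )
    ref_values, ref_counts = np.unique(ref, return_counts=True)
    # Empirical cumulative distributions of both intensity sets.
    src_cdf = np.cumsum(src_counts) / src.size
    ref_cdf = np.cumsum(ref_counts) / ref.size
    # For each source intensity, find the reference intensity at the same quantile.
    mapped = np.interp(src_cdf, ref_cdf, ref_values)
    return mapped[src_index].reshape(src.shape)
```

The mapping is monotone, so the rank order of pixel intensities within the MIP image is preserved while its overall intensity distribution moves toward the FFDM domain. Fine-tuning the FFDM model on the matched images would follow as a separate step.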
