Aadi Kothari

Fast and Modular Autonomy Software for Autonomous Racing Vehicles

Aug 27, 2024

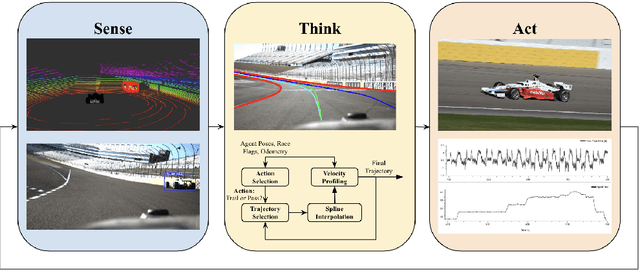

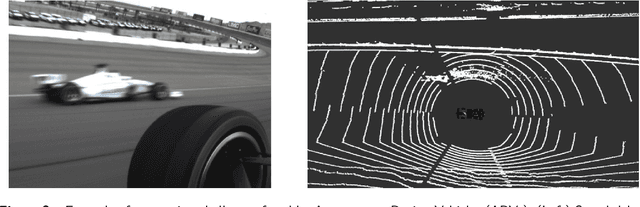

Abstract: Autonomous motorsports aim to replicate the human racecar driver with software and sensors. As in traditional motorsports, Autonomous Racing Vehicles (ARVs) are pushed to their handling limits in multi-agent scenarios at extremely high speeds ($\geq 150$ mph). This Operational Design Domain (ODD) presents unique challenges across the autonomy stack. The Indy Autonomous Challenge (IAC) is an international competition aiming to advance autonomous vehicle development through ARV competitions. While far from challenging what a human racecar driver can do, the IAC is pushing the state of the art by facilitating full-sized ARV competitions. This paper details the MIT-Pitt-RW Team's approach to autonomous racing in the IAC. In this work, we present our modular and fast approach to agent detection, motion planning, and controls to create an autonomy stack. We also provide analysis of the performance of the software stack in single and multi-agent scenarios for rapid deployment in a fast-paced competition environment. We also cover what did and did not work when deployed on a physical system, the Dallara AV-21 platform, and potential improvements to address these shortcomings. Finally, we convey lessons learned and discuss limitations and future directions for improvement.

* Published in Journal of Field Robotics

Enhancing Track Management Systems with Vehicle-To-Vehicle Enabled Sensor Fusion

Apr 26, 2024

Abstract: In the rapidly advancing landscape of connected and automated vehicles (CAV), the integration of Vehicle-to-Everything (V2X) communication in traditional fusion systems presents a promising avenue for enhancing vehicle perception. Addressing current limitations with vehicle sensing, this paper proposes a novel Vehicle-to-Vehicle (V2V) enabled track management system that leverages the synergy between V2V signals and detections from radar and camera sensors. The core innovation lies in the creation of independent priority track lists, consisting of fused detections validated through V2V communication. This approach enables more flexible and resilient thresholds for track management, particularly in scenarios with numerous occlusions where the tracked objects move outside the field of view of the perception sensors. The proposed system considers the implications of falsification of V2X signals, which is combated through an initial vehicle identification process using detections from perception sensors. Presented are the fusion algorithm, simulated environments, and validation mechanisms. Experimental results demonstrate the improved accuracy and robustness of the proposed system in common driving scenarios, highlighting its potential to advance the reliability and efficiency of autonomous vehicles.

Enhanced Human-Robot Collaboration using Constrained Probabilistic Human-Motion Prediction

Oct 05, 2023

Abstract: Human motion prediction is an essential step for efficient and safe human-robot collaboration. Current methods either rely purely on representing the human joints in some form of neural network-based architecture or use regression models offline to fit hyper-parameters in the hope of capturing a model encompassing human motion. While these methods provide good initial results, they miss out on leveraging well-studied human body kinematic models as well as body and scene constraints, which can help boost the efficacy of these prediction frameworks while also explicitly avoiding implausible human joint configurations. We propose a novel human motion prediction framework that incorporates human joint constraints and scene constraints in a Gaussian Process Regression (GPR) model to predict human motion over a set time horizon. This formulation is combined with an online context-aware constraints model to leverage task-dependent motions. It is tested on a human arm kinematic model and implemented on a human-robot collaborative setup with a UR5 robot arm to demonstrate the real-time capability of our approach. Simulations were also performed on datasets such as HA4M and ANDY. The simulation and experimental results demonstrate considerable improvements in a Gaussian Process framework when these constraints are explicitly considered.
