Zeroth-Order Alternating Randomized Gradient Projection Algorithms for General Nonconvex-Concave Minimax Problems

Paper and Code

Aug 05, 2021

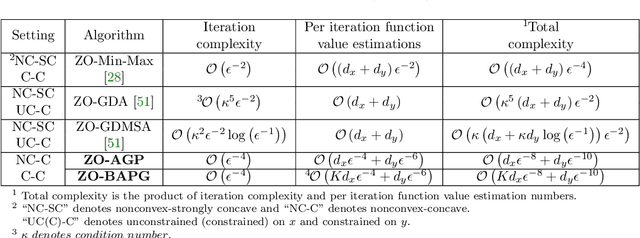

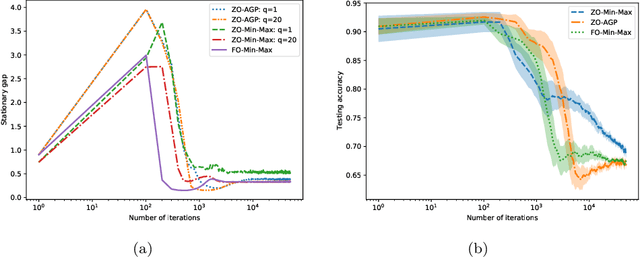

In this paper, we study zeroth-order algorithms for nonconvex-concave minimax problems, which have attracted widely attention in machine learning, signal processing and many other fields in recent years. We propose a zeroth-order alternating randomized gradient projection (ZO-AGP) algorithm for smooth nonconvex-concave minimax problems, and its iteration complexity to obtain an $\varepsilon$-stationary point is bounded by $\mathcal{O}(\varepsilon^{-4})$, and the number of function value estimation is bounded by $\mathcal{O}(d_{x}\varepsilon^{-4}+d_{y}\varepsilon^{-6})$ per iteration. Moreover, we propose a zeroth-order block alternating randomized proximal gradient algorithm (ZO-BAPG) for solving block-wise nonsmooth nonconvex-concave minimax optimization problems, and the iteration complexity to obtain an $\varepsilon$-stationary point is bounded by $\mathcal{O}(\varepsilon^{-4})$ and the number of function value estimation per iteration is bounded by $\mathcal{O}(K d_{x}\varepsilon^{-4}+d_{y}\varepsilon^{-6})$. To the best of our knowledge, this is the first time that zeroth-order algorithms with iteration complexity gurantee are developed for solving both general smooth and block-wise nonsmooth nonconvex-concave minimax problems. Numerical results on data poisoning attack problem validate the efficiency of the proposed algorithms.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge