Winning Ticket in Noisy Image Classification


Feb 23, 2021

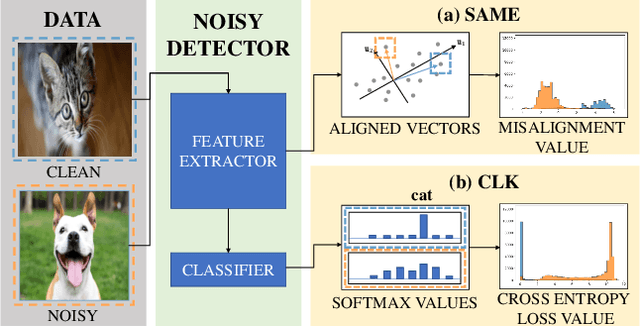

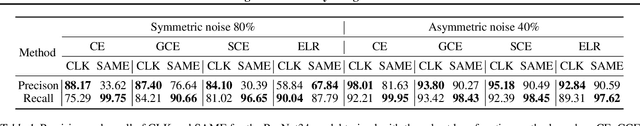

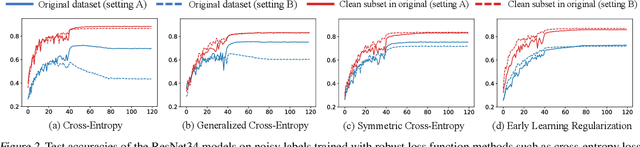

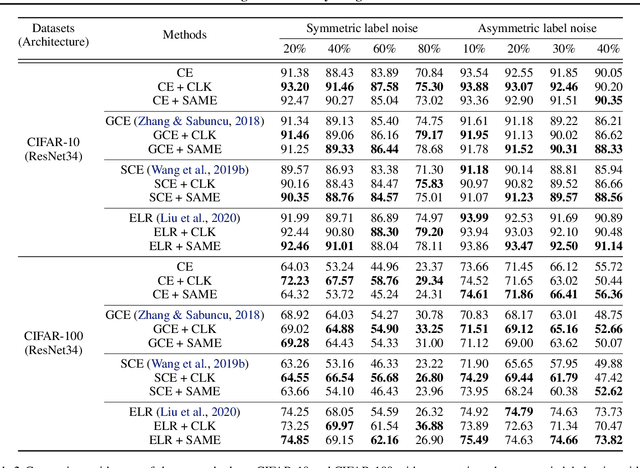

Modern deep neural networks (DNNs) become fragile when the training dataset contains noisy (incorrect) class labels. Many robust techniques based on loss adjustment, robust loss functions, and clean sample selection have emerged to mitigate this issue while using the whole dataset. Here, we empirically observe that a dataset containing only the clean instances of the original noisy dataset leads to better optima than the original dataset, even though it has fewer samples. Based on these results, we state the winning ticket hypothesis: regardless of the robust method used, any DNN reaches its best performance when trained on the dataset possessing only the clean samples from the original dataset (the winning ticket). We propose two simple yet effective strategies to identify winning tickets by looking at the loss landscape and the latent features of DNNs. We conduct numerical experiments on CIFAR-10 and CIFAR-100 that combine our two data-purification methods with existing robust methods. The results support that our framework consistently and substantially improves performance.
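To make the idea of purifying a noisy dataset concrete, the following is a minimal sketch of loss-based clean sample selection. It is not the paper's method: the abstract does not specify how the loss landscape or latent features are used, so this example assumes the common small-loss heuristic, in which samples whose given label receives a low cross-entropy loss are treated as likely clean. The function names `per_sample_cross_entropy` and `select_small_loss`, the `keep_ratio` parameter, and the toy data are all hypothetical.

```python
import math


def per_sample_cross_entropy(probs, labels):
    """Cross-entropy of each sample's given (possibly noisy) label
    under the model's predicted class probabilities."""
    eps = 1e-12  # guards against log(0)
    return [-math.log(max(p[y], eps)) for p, y in zip(probs, labels)]


def select_small_loss(losses, keep_ratio):
    """Return the sorted indices of the keep_ratio fraction of samples
    with the smallest loss (assumed heuristic for 'likely clean')."""
    if not 0.0 < keep_ratio <= 1.0:
        raise ValueError("keep_ratio must be in (0, 1]")
    n_keep = max(1, int(round(keep_ratio * len(losses))))
    order = sorted(range(len(losses)), key=lambda i: losses[i])
    return sorted(order[:n_keep])


# Toy data: predicted probabilities for a two-class problem.
probs = [
    [0.90, 0.10],
    [0.20, 0.80],
    [0.85, 0.15],
    [0.10, 0.90],
]
# The third label disagrees with a confident prediction, so it gets a high loss.
noisy_labels = [0, 1, 1, 1]

losses = per_sample_cross_entropy(probs, noisy_labels)
clean_idx = select_small_loss(losses, keep_ratio=0.75)
print(clean_idx)
```

In practice the probabilities would come from a network trained for a few epochs on the noisy data, and the retained subset would then be passed to any robust training method. The keep ratio is an assumption that would have to be tuned or estimated from the noise rate.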
