What Catches the Eye? Visualizing and Understanding Deep Saliency Models


Mar 22, 2018

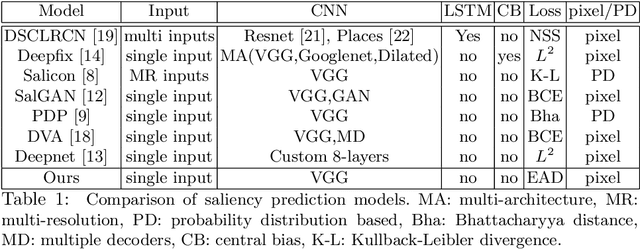

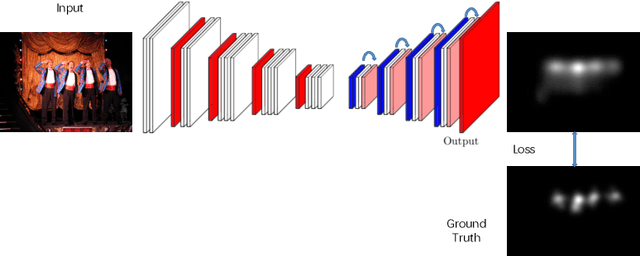

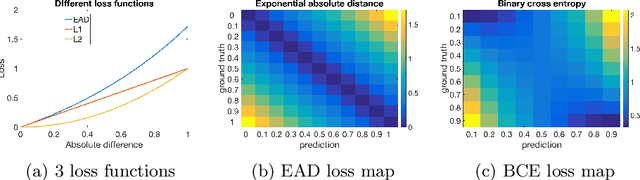

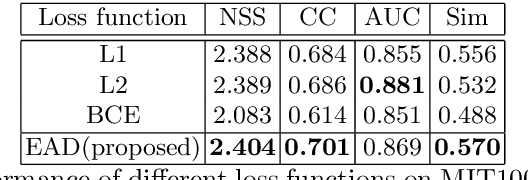

Deep convolutional neural networks have demonstrated high performance for fixation prediction in recent years. How they achieve this, however, is less explored, and they remain black-box models. Here, we attempt to shed light on the internal structure of deep saliency models and study what features they extract for fixation prediction. Specifically, we use a simple yet powerful architecture, consisting of only one CNN and a single-resolution input, combined with a new loss function for pixel-wise fixation prediction during free viewing of natural scenes. We show that our simple method is on par with or better than more complicated state-of-the-art saliency models. Furthermore, we propose a method, related to saliency model evaluation metrics, to visualize deep models for fixation prediction. Our method reveals the inner representations of deep models for fixation prediction and provides evidence that saliency, as experienced by humans, is likely to involve high-level semantic knowledge in addition to low-level perceptual cues. Our results can be useful for measuring the gap between current saliency models and the human inter-observer model, and for building new models to close this gap.
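The abstract refers to saliency model evaluation metrics without naming them. As a point of reference only, the sketch below computes Normalized Scanpath Saliency (NSS), one widely used fixation-prediction metric: the predicted map is z-scored and then averaged over the fixated pixels. This is not taken from the paper; the function name `nss`, the nested-list input format, and the use of the population standard deviation are assumptions made for illustration.

```python
import math


def nss(saliency, fixations):
    """Normalized Scanpath Saliency (illustrative sketch, not the paper's code).

    saliency:  2D list of floats, the predicted saliency map.
    fixations: 2D list of 0/1 values of the same shape (1 = fixated pixel).
    Returns the mean z-scored saliency value over fixated pixels.
    """
    values = [v for row in saliency for v in row]
    mean = sum(values) / len(values)
    # Population standard deviation (assumption; some implementations differ).
    std = math.sqrt(sum((v - mean) ** 2 for v in values) / len(values))
    if std == 0:
        raise ValueError("saliency map is constant; NSS is undefined")

    # Collect z-scored saliency values at fixated locations only.
    fixated = [
        (s - mean) / std
        for s_row, f_row in zip(saliency, fixations)
        for s, f in zip(s_row, f_row)
        if f
    ]
    if not fixated:
        raise ValueError("no fixations given")
    return sum(fixated) / len(fixated)


# Toy 2x2 example: the model puts all its saliency on the bottom-right pixel.
saliency_map = [[0.0, 0.0], [0.0, 1.0]]
hit = [[0, 0], [0, 1]]   # fixation lands on the predicted peak
miss = [[1, 0], [0, 0]]  # fixation lands on a low-saliency pixel

score_hit = nss(saliency_map, hit)
score_miss = nss(saliency_map, miss)
```

A positive score means fixations fall on above-average predicted saliency, and a negative score means they fall on below-average regions, so `score_hit` is positive and `score_miss` is negative in this toy case.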
